Analysis of Explainable Deep Learning Models in Medical Image Analysis

The paper "Explainable Deep Learning Models in Medical Image Analysis" by Singh, Sengupta, and Lakshminarayanan addresses a critical challenge in applying deep learning to medical imaging: the interpretability of model predictions. Deep learning is increasingly used in medical diagnostics because it performs strongly and, in some tasks, even outperforms human experts. However, these models are largely opaque, while clinicians need to understand and verify how a decision was reached; this lack of transparency hinders their broader clinical adoption.

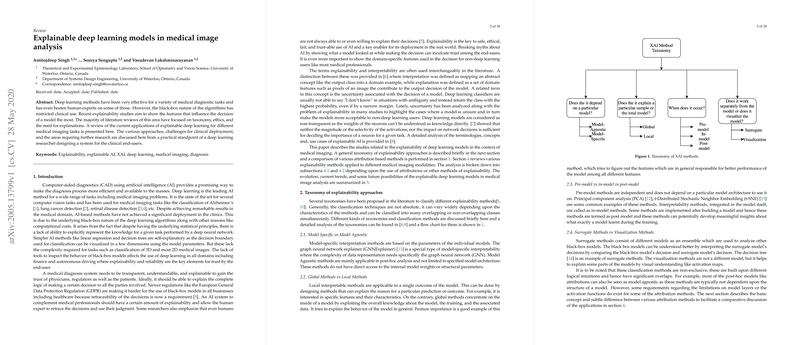

The authors provide a comprehensive review of current advances in explainable artificial intelligence (XAI) as applied to medical imaging. They systematically examine a range of methods used to identify which input features most strongly influence model outputs, and they discuss both the methodological approaches and the challenges of integrating these explainability techniques into clinical settings. The review is written from the perspective of a deep learning researcher designing systems for clinical use, with particular emphasis on practicality and user requirements.

Key insights from the paper include:

- Diversity of Explainability Techniques: The authors categorize current explainability methodologies, illustrating the range of strategies used to interpret model decisions. These include feature-attribution approaches, which assign each input pixel or region a relevance score for a given prediction, and other approaches that aim to produce human-understandable explanations of model behavior.

- Challenges and Limitations: The paper highlights the obstacles to deploying explainable models in clinical environments. The primary concern is bridging the gap between technical explanations and clinical usefulness: systems must be not only accurate but also interpretable by clinicians with varying levels of familiarity with AI.

- Practical Application: The paper underscores the need for explainable models tailored to specific medical imaging tasks, acknowledging that solutions may have to be customized to the requirements of each diagnostic context.

- Future Research Directions: The authors identify a need for further research to refine existing explainability frameworks and to build automated systems that integrate seamlessly into clinical workflows.
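
To make "feature attribution" concrete, below is a minimal sketch of one simple, model-agnostic attribution technique, occlusion sensitivity: hide one patch of the image at a time and record how much the model's score drops. It is an illustration chosen here, not necessarily a method the authors single out. It assumes a hypothetical `model` callable that maps a 2-D grayscale array to a scalar score for the class of interest; `occlusion_map`, `toy_model`, and the synthetic image are all hypothetical names and data used only for the example.

```python
import numpy as np


def occlusion_map(model, image, patch=8, stride=8, baseline=0.0):
    """Occlusion-sensitivity attribution for a 2-D grayscale image.

    Slides a `patch` x `patch` square of `baseline` values over the image and
    records how much the model's score drops when each region is hidden.
    `model` is assumed to map an (H, W) array to a scalar score for the class
    of interest (a hypothetical interface chosen for illustration).
    """
    h, w = image.shape
    base_score = model(image)
    heat = np.zeros((h, w), dtype=float)
    counts = np.zeros((h, w), dtype=float)
    for top in range(0, h - patch + 1, stride):
        for left in range(0, w - patch + 1, stride):
            occluded = image.copy()
            occluded[top:top + patch, left:left + patch] = baseline
            drop = base_score - model(occluded)
            heat[top:top + patch, left:left + patch] += drop
            counts[top:top + patch, left:left + patch] += 1
    # Average over overlapping patches; pixels never covered stay at 0.
    return np.divide(heat, counts, out=np.zeros_like(heat), where=counts > 0)


def toy_model(img):
    # Hypothetical stand-in for a classifier: its "lesion score" is the mean
    # intensity of a fixed 16x16 region and ignores everything else.
    return float(img[8:24, 8:24].mean())


image = np.zeros((32, 32))
image[8:24, 8:24] = 1.0  # bright synthetic "lesion"
saliency = occlusion_map(toy_model, image, patch=8, stride=8)
print(saliency[8:24, 8:24].mean(), saliency[:8].max())  # 0.25 0.0
```

The resulting map is nonzero only over the region the toy model actually uses. Occlusion needs nothing but forward passes, which makes it applicable to any black-box model, at the cost of one forward pass per patch; gradient-based saliency methods are cheaper but require access to the model's gradients.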

The paper's main contribution is its thorough examination of XAI in medical image analysis, a topic that is pivotal for building acceptance and trust among healthcare professionals. With better explainability, these models could be integrated more widely into clinical practice, ultimately improving diagnostic processes and patient outcomes.

Looking forward, this work suggests several avenues for future research and development. Continued exploration of user-centered design in XAI methods will be essential to make these models usable in clinical settings. Standardized evaluation metrics for explainability will also be crucial for assessing how effective and reliable the explanations are. As AI technology evolves, explainable models are expected to play a growing role in medical diagnostics, fostering closer collaboration between AI researchers and clinical practitioners.
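
As one example of what an explainability metric can look like, here is a minimal sketch of a deletion-style faithfulness check, a kind of perturbation test proposed in the XAI literature; the paper summarized here does not prescribe it. Pixels are removed in order of decreasing saliency and the model's score is tracked; if the map really points at what the model relies on, the score should collapse quickly, giving a small area under the curve. The `model` interface, `deletion_auc`, `toy_model`, and the synthetic maps are hypothetical choices made for illustration.

```python
import numpy as np


def deletion_auc(model, image, saliency, steps=10, baseline=0.0):
    """Deletion-style faithfulness check for a saliency map.

    Pixels are set to `baseline` in order of decreasing saliency, in `steps`
    roughly equal chunks, and the model score is recorded after each chunk.
    Returns the area under that score curve (x normalized to [0, 1]); a lower
    value means the map found the pixels the model actually relies on.
    """
    order = np.argsort(saliency.ravel())[::-1]  # most salient pixels first
    flat = image.astype(float).ravel()          # astype copies; input untouched
    n = flat.size
    scores = [model(flat.reshape(image.shape))]
    for k in range(1, steps + 1):
        cutoff = int(round(k * n / steps))
        flat[order[:cutoff]] = baseline
        scores.append(model(flat.reshape(image.shape)))
    scores = np.asarray(scores)
    # Trapezoidal rule with uniform spacing 1 / steps.
    return float(np.sum((scores[:-1] + scores[1:]) / 2.0) / steps)


def toy_model(img):
    # Hypothetical classifier score: mean intensity of a fixed 16x16 region.
    return float(img[8:24, 8:24].mean())


image = np.zeros((32, 32))
image[8:24, 8:24] = 1.0
good_map = image.copy()  # highlights exactly the region the model uses
bad_map = 1.0 - image    # highlights only the irrelevant background
good = deletion_auc(toy_model, image, good_map)
bad = deletion_auc(toy_model, image, bad_map)
print(good < bad)  # True
```

A score like this measures only whether an explanation is faithful to the model; it says nothing about whether clinicians find the explanation useful, which is why user-centered evaluation remains a separate and complementary question.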