Overview

Retrieval-Augmented Generation (RAG) is a novel approach in LLMing that significantly enhances the ability of generative LLMs to perform knowledge-intensive tasks. This approach combines the advantages of large pre-trained models with the retrieval of factual information from non-parametric (external) data sources like Wikipedia. RAG models can be fine-tuned end-to-end and have been shown to outperform existing systems on multiple tasks, especially in open domain question answering (QA), where they set new benchmarks.

Methodology

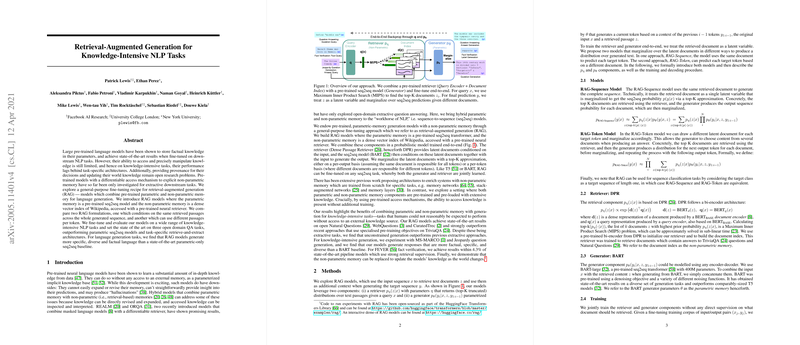

RAG employs a two-stage retrieval-generated architecture that first involves finding relevant documents using a retriever model, then these documents are fed into a seq2seq generator that produces the final output. The retriever uses a bi-encoder architecture to calculate the relevance of documents from a dense vector index of Wikipedia. The generator is a BART-large model, which combines the retrieved documents and the input to generate an output sequence. Distinctly, RAG can predict each token in the output using a different retrieved document, allowing for a rich and diverse generation.

Experimental Results

The paper shares a series of experimental validations across various NLP tasks. On open-domain QA datasets like Natural Questions, TriviaQA, WebQuestions, and CuratedTrec, RAG models establish new state-of-the-art performance. Impressively, in the absence of the correct answer in retrieved documents, RAG still managed an 11.8% accuracy, showcasing its ability to rely on parametric knowledge stored in the generator model. For the FEVER fact verification task, which requires the validation of claims using Wikipedia content, RAG shows performance within a close range of more complex models that have access to stronger supervision signals.

Implications and Future Work

RAG's general-purpose fine-tuning approach could revolutionize how we handle knowledge-intensive tasks in NLP by leveraging both parametric and non-parametric knowledge sources. The approach is particularly beneficial for scenarios where providing updated information is critical, as the non-parametric memory (like the Wikipedia index) can be easily updated or replaced without retraining the entire model. Moreover, the fact that it can generate plausible answers even when the explicit information is missing from the external knowledge source, suggests independence from certain types of training data and robustness to knowledge drift.

The research showcases the potential of RAG for diverse application areas — from more informed, reliable chatbots to robust models for information retrieval that goes beyond fixed datasets. The future could see these models being applied and further tuned for specific professional domains like legal, medical, or scientific research, improving access to and the synthesis of complex information.