XGLUE: Benchmark Dataset for Cross-lingual NLP Tasks

The advent of pre-training plus fine-tuning in NLP has enabled significant strides in achieving state-of-the-art results, especially for cross-lingual tasks. The paper introduces XGLUE, a comprehensive benchmark designed to evaluate and enhance the capabilities of cross-lingual pre-trained models. XGLUE distinguishes itself from its predecessor, GLUE, by offering a wider variety of tasks across multiple languages, thereby addressing both language understanding and generation. This benchmark is crucial for advancing cross-lingual model capabilities and addressing the limitations of current monolingual resources, particularly for low-resource languages.

Contribution and Features of XGLUE

The contribution of XGLUE is twofold:

- Diverse Tasks: The benchmark presents 11 cross-lingual tasks, including single-input understanding tasks, pair-input understanding tasks, and generation tasks. This diversity extends beyond the natural language understanding tasks provided by GLUE. While XTREME, a concurrent effort, addresses a similar domain of cross-lingual tasks, XGLUE distinguishes itself by including both understanding and generation scenarios, introducing tasks in real-world applications such as search and ad relevance.

- Extended Baseline Models: An extension of the cross-lingual pre-trained model, Unicoder, provides a robust baseline for these tasks. Unicoder is evaluated against several models like Multilingual BERT and XLM-R, demonstrating its capability in handling both language understanding and generation across multiple languages.

Datasets and Tasks

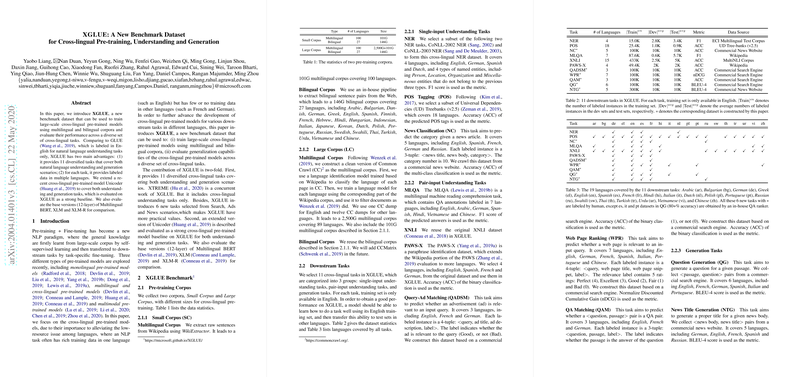

XGLUE encompasses a variety of datasets curated from multilingual and bilingual corpora. The pre-training corpus includes a Small Corpus (SC) covering 27 languages and a Large Corpus (LC) encompassing a more extensive multilingual dataset. The benchmark addresses 19 languages in total, spanning a variety of natural language processing tasks:

- Single-input Understanding Tasks: These tasks include Named Entity Recognition (NER), Part-of-Speech (POS) tagging, and News Classification (NC).

- Pair-input Understanding Tasks: Multilingual QA (MLQA), Cross-Lingual Natural Language Inference (XNLI), and Question-Answering Matching (QAM), among others, are part of this group.

- Generation Tasks: Tasks such as Question Generation (QG) and News Title Generation (NTG) require the model to generate coherent sequences in multiple languages.

Evaluation and Baseline Models

The paper provides a thorough evaluation of several pre-trained models, with Unicoder taking a notable position due to its adaptation to both SC and LC datasets. Evaluations indicate:

- Baseline Performance: Unicoder outperforms both M-BERT and XLM-R in most tasks, indicating that leveraging both multilingual and bilingual corpora with tasks like Translation LLMs (TLM) improves cross-lingual efficacy.

- Generation Capabilities: The introduction of multilingual denoising auto-encoding (xDAE) and future n-gram prediction (xFNP) in Unicoder underscores its strong performance in generation tasks, outperforming BERT and similar architectures.

Implications and Future Directions

XGLUE's addition of generation tasks represents a significant expansion of the cross-lingual research space, providing benchmarks that encourage the development of models capable of generating natural and coherent outputs across diverse languages. This development indicates promising directions for improving machine translation and multilingual conversational AI systems.

The findings in the XGLUE benchmark suggest areas for further research, particularly in optimizing pivot-language selection and exploring multi-language and multi-task fine-tuning. These strategies can significantly enhance model performance across languages and reduce the resource disparity across languages.

In conclusion, XGLUE is a substantial contribution to the field of cross-lingual NLP, providing a comprehensive framework and dataset to facilitate the development of more efficient, capable, and inclusive LLMs. This benchmark serves a critical role in advancing NLP capabilities across diverse languages, setting the stage for future innovations and applications.