An Analysis of Cross-Lingual Representation Learning with XLM-R

The paper presents an extensive study of XLM-R, a transformer-based multilingual masked language model trained on more than two terabytes of filtered CommonCrawl data in 100 languages, with a focus on cross-lingual understanding. The authors examine the challenges of scaling such models across many languages, especially low-resource ones, and the methodology they use to address them.

Multilingual Training Strategies

The paper compares several settings for fine-tuning and evaluating multilingual models: Cross-lingual Transfer, TRANSLATE-TEST, TRANSLATE-TRAIN, and TRANSLATE-TRAIN-ALL. The goal is to measure how accurate each setting is across languages:

- Cross-lingual Transfer: Fine-tunes a multilingual model on the English training set only and evaluates it zero-shot on the other languages.

- TRANSLATE-TEST: Machine-translates every test set into English and evaluates an English-only model.

- TRANSLATE-TRAIN: Machine-translates the English training set into each target language and fine-tunes a separate multilingual model on each translated set.

- TRANSLATE-TRAIN-ALL: Fine-tunes a single multilingual model on all training sets at once, i.e. the English set together with its translations.
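The four settings differ only in which data the model is fine-tuned on and which data it is evaluated on. The sketch below makes that data flow explicit. It is a minimal illustration, not the authors' pipeline: `translate` is a hypothetical stand-in for a machine translation system (it merely tags each example), `Plan` and `make_plan` are hypothetical names, and TRANSLATE-TRAIN-ALL is assumed to include the original English set alongside its translations.

```python
from dataclasses import dataclass


def translate(examples: list[str], src: str, tgt: str) -> list[str]:
    """Hypothetical stand-in for a machine translation system: it only tags each example."""
    return [f"[{src}->{tgt}] {ex}" for ex in examples]


@dataclass
class Plan:
    model: str         # kind of model that gets fine-tuned
    train: list[str]   # fine-tuning data
    test: list[str]    # evaluation data for the target language, as the model sees it


def make_plan(setting: str, en_train: list[str], test_sets: dict[str, list[str]], target: str) -> Plan:
    target_test = test_sets[target]
    if setting == "cross-lingual-transfer":
        # Fine-tune on English only; evaluate zero-shot on the target language.
        return Plan("multilingual", en_train, target_test)
    if setting == "translate-test":
        # Translate the target-language test set into English; use an English-only model.
        return Plan("english-only", en_train, translate(target_test, target, "en"))
    if setting == "translate-train":
        # Translate the English training set into the target language; one model per language.
        return Plan("multilingual", translate(en_train, "en", target), target_test)
    if setting == "translate-train-all":
        # One model trained on English plus its translations into every other language (assumed).
        train = list(en_train)
        for lang in test_sets:
            if lang != "en":
                train += translate(en_train, "en", lang)
        return Plan("multilingual", train, target_test)
    raise ValueError(f"unknown setting: {setting}")


en_train = ["example 1", "example 2"]
test_sets = {"en": ["en test"], "fr": ["fr test"], "de": ["de test"]}
print(len(make_plan("translate-train-all", en_train, test_sets, "fr").train))  # 6
```

The TRANSLATE-TRAIN-ALL plan holds 2 English examples plus 2 French and 2 German translations, which is why a single model sees every language during fine-tuning.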

Empirical Results

The authors compare XLM-R with existing models, including mBERT, XLM, and Unicoder. The evaluation covers cross-lingual classification (XNLI, reported as accuracy), question answering (MLQA, reported as F1 and exact match), and named entity recognition (CoNLL, reported as F1), with scores averaged across languages.

XNLI Performance

Using TRANSLATE-TRAIN-ALL, XLM-R reaches an average XNLI accuracy of 83.6%, outperforming the prior models listed in Table 1. Per-language highlights include:

- English: 89.1% accuracy

- French: 85.1% accuracy

- German: 85.7% accuracy

The small drop from English to the other languages suggests that XLM-R learns strong cross-lingual representations.
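The per-language numbers above can be turned into a gap relative to English, a simple way to quantify how well performance carries over across languages. This minimal sketch uses only the three languages listed; the reported 83.6% is an unweighted average over all 15 XNLI languages, so averaging just these three gives a higher figure. The function names are illustrative.

```python
# XNLI accuracies (%) for XLM-R with TRANSLATE-TRAIN-ALL, as listed above.
scores = {"en": 89.1, "fr": 85.1, "de": 85.7}


def transfer_gap(scores: dict[str, float], pivot: str = "en") -> dict[str, float]:
    """Accuracy drop of each language relative to the pivot language (English)."""
    return {lang: round(scores[pivot] - acc, 1) for lang, acc in scores.items() if lang != pivot}


def macro_average(scores: dict[str, float]) -> float:
    """Unweighted mean over languages, the usual way cross-lingual averages are reported."""
    return sum(scores.values()) / len(scores)


print(transfer_gap(scores))             # {'fr': 4.0, 'de': 3.4}
print(round(macro_average(scores), 1))  # 86.6 (three languages only, not the 15-language 83.6)
```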

MLQA Performance

On MLQA, XLM-R achieves the best F1 and EM (exact match) scores among the compared models across languages, outperforming mBERT and XLM-15 in zero-shot transfer from the English SQuAD training data to the other languages (Table 2).

For example, XLM-R recorded:

- F1/EM for English: 80.6/67.8

- F1/EM for Spanish: 74.1/56.0

- F1/EM for Arabic: 63.1/43.5
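F1 and EM follow the SQuAD convention: EM checks whether the normalized prediction equals the normalized gold answer, and F1 measures token overlap between them. The sketch below assumes English-style normalization (lowercasing, stripping ASCII punctuation and the articles a/an/the) and a single gold answer. The official MLQA evaluation script adds language-specific normalization, so treat this as a simplified illustration rather than the scorer used in the paper.

```python
import re
import string
from collections import Counter

PUNCT = set(string.punctuation)


def normalize(text: str) -> str:
    """SQuAD-style normalization: lowercase, drop ASCII punctuation and a/an/the, collapse spaces."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in PUNCT)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, gold: str) -> float:
    return float(normalize(prediction) == normalize(gold))


def f1(prediction: str, gold: str) -> float:
    pred_tokens = normalize(prediction).split()
    gold_tokens = normalize(gold).split()
    # Multiset intersection counts each shared token at most min(count_pred, count_gold) times.
    overlap = sum((Counter(pred_tokens) & Counter(gold_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(gold_tokens)
    return 2 * precision * recall / (precision + recall)


print(exact_match("The Eiffel Tower", "eiffel tower"))  # 1.0
print(f1("Eiffel Tower Paris", "Eiffel Tower"))          # ≈ 0.8
```

Per-language scores such as 80.6/67.8 correspond to these per-question values averaged over the test set and expressed as percentages.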

Named Entity Recognition

On CoNLL named entity recognition (Table 3), XLM-R achieves higher F1 scores than mBERT and is competitive with prior state-of-the-art systems. For example:

- English: 92.92

- Dutch: 92.53

- German: 85.81
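CoNLL NER F1 is computed at the entity level: a predicted entity counts as correct only if both its span and its type match a gold entity exactly. The sketch below is a simplified version of that scoring, assuming IOB2 tags (every entity starts with `B-`); an `I-` tag that does not continue an entity of the same type is treated as starting a new one. `extract_entities` and `entity_f1` are hypothetical helper names, and reported scores such as 92.92 correspond to this F1 expressed as a percentage.

```python
def extract_entities(tags: list[str]) -> set[tuple[str, int, int]]:
    """Collect (type, start, end) entity spans from IOB2 tags; end is exclusive."""
    entities = set()
    start, etype = None, None
    for i, tag in enumerate(tags + ["O"]):  # trailing "O" flushes the final entity
        continues = tag.startswith("I-") and tag[2:] == etype
        if not continues:
            if etype is not None:
                entities.add((etype, start, i))
            # B- (or a stray I-) opens a new entity; O closes without opening one.
            start, etype = (i, tag[2:]) if tag != "O" else (None, None)
    return entities


def entity_f1(gold: list[list[str]], pred: list[list[str]]) -> float:
    """Micro-averaged entity-level F1 over sentences: exact span and type match required."""
    tp = n_pred = n_gold = 0
    for gold_tags, pred_tags in zip(gold, pred):
        g, p = extract_entities(gold_tags), extract_entities(pred_tags)
        tp += len(g & p)
        n_pred += len(p)
        n_gold += len(g)
    if tp == 0:
        return 0.0
    precision, recall = tp / n_pred, tp / n_gold
    return 2 * precision * recall / (precision + recall)


gold = [["B-PER", "I-PER", "O", "B-LOC"]]
pred = [["B-PER", "I-PER", "O", "B-ORG"]]  # right person span, wrong type for the location
print(entity_f1(gold, pred))  # 0.5
```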

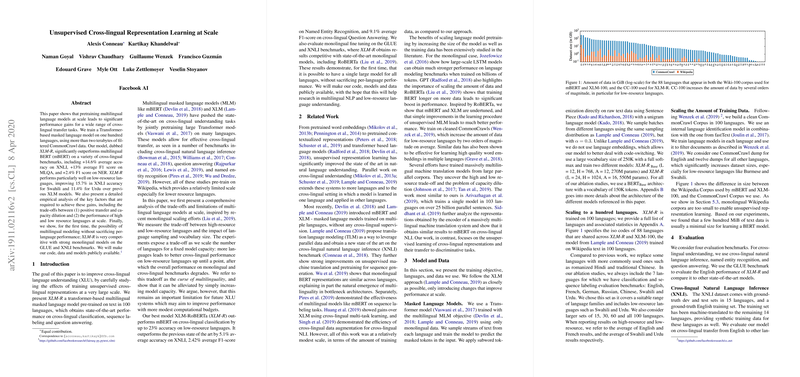

Training Corpus: CommonCrawl vs. Wikipedia

The switch from Wikipedia to CommonCrawl data is a key factor in XLM-R's improved performance. The CommonCrawl corpus is substantially larger and more diverse than Wikipedia, which particularly benefits low-resource languages (Figure 2).

For instance:

- Vietnamese: 137.3 GiB of data

- Swahili: 1.6 GiB of data

- Tamil: 12.2 GiB of data

This additional data mitigates the limited coverage of low-resource languages in earlier Wikipedia-trained models and supports more balanced multilingual models.
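Corpus size alone would let high-resource languages dominate training, so XLM-R, following XLM, samples training languages from a smoothed distribution q_i = p_i^α / Σ_j p_j^α, where p_i is language i's share of the data; the paper reports α = 0.3. The sketch below applies that formula to the three languages listed above only, which is a purely illustrative restriction (the real distribution covers all 100 languages); `sampling_probs` is a hypothetical name. Setting α = 1 recovers the raw proportions, and lowering α shifts probability mass toward low-resource languages such as Swahili.

```python
def sampling_probs(sizes_gib: dict[str, float], alpha: float = 0.3) -> dict[str, float]:
    """Exponentially smoothed language sampling: q_i = p_i**alpha / sum_j p_j**alpha."""
    total = sum(sizes_gib.values())
    # p_i: each language's share of the raw data
    shares = {lang: size / total for lang, size in sizes_gib.items()}
    # Raising shares to a power below 1 flattens the distribution
    weights = {lang: share ** alpha for lang, share in shares.items()}
    norm = sum(weights.values())
    return {lang: w / norm for lang, w in weights.items()}


# CommonCrawl sizes (GiB) listed above; using only three languages is illustrative.
sizes = {"Vietnamese": 137.3, "Swahili": 1.6, "Tamil": 12.2}
raw = sampling_probs(sizes, alpha=1.0)
smoothed = sampling_probs(sizes, alpha=0.3)
for lang in sizes:
    print(f"{lang}: raw {raw[lang]:.3f} -> smoothed {smoothed[lang]:.3f}")
```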

Implications and Future Directions

The findings have both theoretical and practical implications for cross-lingual research and applications. XLM-R's strong performance across diverse tasks points toward more inclusive models that can process a wide range of languages, including those with limited training data.

Future research should explore:

- Further scaling of model capacity while maintaining efficiency.

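- Better training techniques for handling interference between languages (what the authors call the curse of multilinguality), where adding more languages to a fixed-capacity model eventually hurts per-language performance.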

- Optimization of tokenization methods to improve representation across languages.

In conclusion, the paper advances multilingual representation learning with XLM-R and sets a new state of the art on several cross-lingual benchmarks. Its systematic approach and thorough empirical evaluation support the development of more inclusive and capable multilingual NLP systems.