An Evaluation of Transformer-Based Pre-trained Models for Data Augmentation in NLP

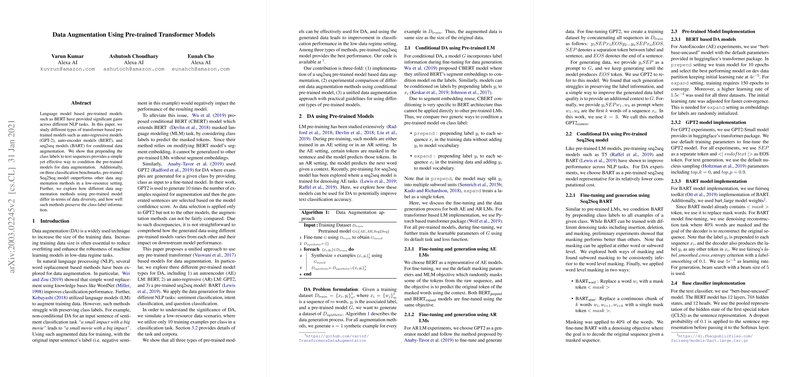

In the paper "Data Augmentation Using Pre-trained Transformer Models," the authors examine how different transformer-based pre-trained language models can be used for conditional data augmentation in NLP tasks. The work compares auto-regressive (AR), auto-encoder (AE), and sequence-to-sequence (Seq2Seq) models on how much they improve text classification accuracy in low-data scenarios. The models assessed are GPT-2, BERT, and BART, which represent the AR, AE, and Seq2Seq architectures, respectively.

Key Contributions and Methodology

The research lays out a unified framework that can use any pre-trained transformer model for data augmentation in low-resource NLP tasks. The authors focus on sentiment classification, intent classification, and question classification across three different text benchmarks. A central contribution of the paper is the use of class labels prepended to text sequences as a conditioning mechanism for data augmentation, which the authors find to be a simple yet effective way to condition the pre-trained models.

- Application of pre-trained models: The paper implements three pre-trained model types: BERT as an AE model, GPT-2 as an AR model, and BART as a Seq2Seq model. The models are fine-tuned with their respective objectives, such as masked language modeling for BERT and conditional generation tasks for GPT-2.

- Focus on label conditioning: The paper evaluates two primary techniques for conditioning pre-trained models on class labels: prepending labels to text sequences and expanding the model vocabulary with the labels. This conditioning is crucial for preserving class label information during data augmentation.

- Experimental setup: The authors use a simulated low-resource scenario with 10 or 50 training examples per class, evaluating the performance enhancements afforded by the augmented data through a BERT-based classifier. The paper also assesses the intrinsic qualities of generated data, emphasizing semantic fidelity and diversity.
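The low-resource setup and the label-prepending step described above can be sketched in a few lines of plain Python. This is a minimal illustration, not the authors' code: the function names, the `[SEP]` separator string, and the toy sentences are all assumptions made for the example, and a real pipeline would use the separator token of the chosen model's tokenizer.

```python
import random
from collections import defaultdict

SEP = "[SEP]"  # assumed separator string; the real token depends on the model


def subsample_per_class(examples, k, seed=0):
    """Keep at most k (text, label) pairs per label to simulate low-resource data."""
    rng = random.Random(seed)
    by_label = defaultdict(list)
    for text, label in examples:
        by_label[label].append(text)
    subset = []
    for label in sorted(by_label):
        texts = by_label[label]
        chosen = rng.sample(texts, min(k, len(texts)))
        subset.extend((text, label) for text in chosen)
    return subset


def prepend_label(text, label, sep=SEP):
    """Condition a sequence on its class by placing the label before the text."""
    return f"{label} {sep} {text}"


# Toy data, invented for illustration only
data = [
    ("great movie", "positive"),
    ("loved every minute", "positive"),
    ("a real delight", "positive"),
    ("terrible plot", "negative"),
    ("fell asleep halfway", "negative"),
]
low_resource = subsample_per_class(data, k=2)
conditioned = [prepend_label(text, label) for text, label in low_resource]
```

The conditioned strings are what a model would be fine-tuned on, so that at generation time a label prefix steers the output toward the desired class.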

Empirical Findings

The experimental results indicate that Seq2Seq models, particularly BART, outperform AE and AR models in terms of classification performance, in addition to retaining a satisfactory balance of data diversity and class fidelity. The BART model's ability to leverage varying denoising objectives, such as word or span masking, offers a degree of flexibility advantageous in improving classification outcomes.
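The difference between word masking and span masking can be illustrated with a small sketch. It assumes whitespace tokenization, a free `ratio` parameter, and that a masked span collapses into a single mask token; these are simplifying assumptions for the example, and the helper names are hypothetical rather than taken from the paper.

```python
import random

MASK = "<mask>"  # mask token string, used here as a plain placeholder


def _num_to_mask(tokens, ratio):
    """Number of tokens to mask: at least one, at most all of them."""
    return min(len(tokens), max(1, round(len(tokens) * ratio)))


def mask_words(tokens, ratio, rng):
    """Word masking: replace individual tokens at random positions with MASK."""
    if not tokens:
        return []
    n = _num_to_mask(tokens, ratio)
    positions = set(rng.sample(range(len(tokens)), n))
    return [MASK if i in positions else tok for i, tok in enumerate(tokens)]


def mask_span(tokens, ratio, rng):
    """Span masking: replace one contiguous chunk of tokens with a single MASK."""
    if not tokens:
        return []
    n = _num_to_mask(tokens, ratio)
    start = rng.randrange(len(tokens) - n + 1)
    return tokens[:start] + [MASK] + tokens[start + n:]


tokens = "the service was slow and rude".split()
word_masked = mask_words(tokens, 0.4, random.Random(0))
span_masked = mask_span(tokens, 0.4, random.Random(0))
```

In a denoising setup, the masked sequence (with its label prefix) would be the encoder input and the original sequence the decoder target; word masking keeps the sequence length, while span masking shortens it and leaves the model free to fill in a variable number of words.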

- Semantic fidelity and diversity: The paper evaluates semantic fidelity by using a BERT-based classifier to measure how often generated texts preserve their intended label, and it separately assesses the diversity of the generated data. BART with span masking is shown to strike a better balance between fidelity and diversity than EDA, back translation, and other approaches.

- Baseline comparisons: Back translation emerges as a robust baseline, often surpassing the pre-trained model-based augmentation methods in terms of label fidelity. This underlines how well traditional translation models retain meaning during data augmentation.
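The two intrinsic measures discussed above can be approximated as follows. This is a rough sketch under stated assumptions: the classifier is a hypothetical keyword stand-in for a fine-tuned BERT model, and type-token ratio is used as a simple diversity proxy that may not match the paper's exact computation.

```python
def label_preservation_accuracy(generated, classify):
    """Fraction of (text, intended_label) pairs whose predicted label matches."""
    if not generated:
        return 0.0
    hits = sum(1 for text, label in generated if classify(text) == label)
    return hits / len(generated)


def type_token_ratio(texts):
    """Unique tokens divided by total tokens, using lowercased whitespace tokens."""
    tokens = [tok.lower() for text in texts for tok in text.split()]
    return len(set(tokens)) / len(tokens) if tokens else 0.0


def toy_classifier(text):
    # Hypothetical stand-in for a classifier fine-tuned on the task data
    return "negative" if "bad" in text.lower() else "positive"


# Invented generated samples paired with the label they were conditioned on
generated = [
    ("really good film", "positive"),
    ("really bad film", "negative"),
    ("not my favourite", "negative"),
]
fidelity = label_preservation_accuracy(generated, toy_classifier)
diversity = type_token_ratio(text for text, _ in generated)
```

Higher fidelity means the augmented text more often keeps its class; higher type-token ratio means more varied wording. The trade-off between the two is what the comparison across augmentation methods is meant to expose.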

Theoretical and Practical Implications

Theoretically, the findings underscore the versatility of Seq2Seq models in data augmentation tasks within NLP, highlighting their potential to surpass AE and AR models when paired with appropriate label-conditioning strategies. Practically, these insights support the development of more effective data augmentation techniques for low-data settings, which matters for real-world applications where extensive labeled datasets may not be available.

Future Directions

The unified augmentation approach presented in this paper opens avenues for extending these methods to more complex NLP tasks. Future research could focus on optimizing model-specific hyperparameters to fully exploit the potential of each architecture across varying dataset characteristics and task requirements. The paper also suggests that advances in latent space manipulation and co-training could be integrated with the current augmentation methods to further enhance model robustness and performance.

In conclusion, this paper contributes a valuable perspective on utilizing transformer-based models for data augmentation in NLP, offering practical guidelines and setting a foundation for subsequent explorations into model architecture-specific enhancements for data-limited scenarios.