The paper "Meta-Embeddings Based On Self-Attention" proposes an innovative approach to improving LLMing performance through the use of meta-embeddings. The research introduces a novel model known as the "Duo," which is a meta-embedding mechanism utilizing self-attention to enhance the capabilities of text classification and machine translation systems.

Key Contributions and Innovations:

- Duo Meta-Embedding Model:

- The paper presents the Duo model, a meta-embedding approach that leverages the self-attention mechanism. This model utilizes multiple pre-trained word embeddings and integrates them to enhance LLMing tasks.

- Efficiency and Parameter Reduction:

- Despite having fewer than 0.4 million parameters, the Duo mechanism achieves state-of-the-art results on text classification benchmarks, such as the 20-Newsgroups (20NG). This parameter efficiency is achieved by employing weight sharing in the multi-head attention layers.

- LLMing and Text Classification:

- In text classification tasks, the Duo model improves performance by exploiting the information contained in two separately trained embeddings (e.g., GloVe and fastText). Each embedding provides independent, complementary information, which the model utilizes to gain better results in classification tasks.

- Machine Translation:

- The paper introduces the first sequence-to-sequence machine translation model that employs more than one word embedding. The Duo-enhanced Transformer outperforms the standard Transformer model in both accuracy and convergence speed, notably on the WMT 2014 English-to-French translation task.

- General Applicability:

- The Duo mechanism is designed to be flexible and can be integrated into various Transformer-based models, including those tailored for long-sequence learning, such as Transformer-XL and other advanced variants.

- Improved Convergence and Performance:

- The experiments reveal that the meta-embedding approach not only elevates performance in terms of BLEU scores in translation tasks but also accelerates convergence. This suggests that the meta-embedding strategy effectively captures richer semantic information from multiple embeddings.

Technical Insights:

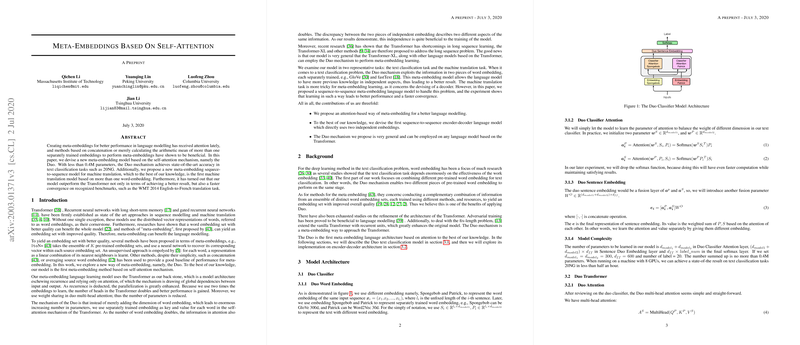

- Duo Classifier Architecture:

The Duo Classifier employs independently trained embeddings as keys and values in the self-attention mechanism of a Transformer. Importantly, this setup allows for a broader representation of the input, capturing more nuanced aspects of the data.

- Duo Transformer:

The Duo multi-head attention differentiates itself by leveraging different embeddings for queries, keys, and values, enhancing the ability to depict various semantic aspects. This setup, combined with parameter sharing, reduces complexity and enhances performance.

- Layer Normalization Enhancement:

A unique modification called Duo Layer Normalization synergizes learning across the different embedding dimensions, facilitating efficient cross-information flow. This modification contributes to robust model performance without excessively increasing complexity.

The paper collectively establishes that the proposed meta-embedding mechanisms, complemented by the Duo architecture, significantly enhance LLMs' efficacy, exemplified in both text classification and machine translation tasks. The research elucidates the benefits of integrating multiple embeddings into self-attention mechanisms, arguably paving the way for further exploration in meta-embedding and LLMing.