Analysis of "Channel Pruning via Automatic Structure Search"

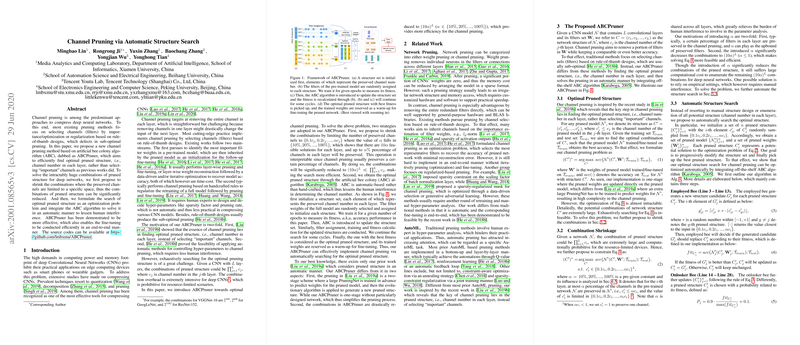

The paper "Channel Pruning via Automatic Structure Search" presents an approach to pruning deep convolutional neural networks (CNNs) that focuses on determining the optimal pruned structure rather than on selecting important channels. The method, named ABCPruner, uses the artificial bee colony (ABC) algorithm to search automatically for an efficient network structure. The paper addresses a limitation of traditional channel pruning methods, which place heavy emphasis on selecting specific channels according to predetermined heuristics and can therefore arrive at suboptimal pruning decisions.

Core Contributions

- Optimal Structure Discovery: Unlike previous methods that focus on identifying and preserving specific "important" channels, ABCPruner redefines the objective as finding the optimal pruned structure, defined as the number of channels to preserve in each network layer.

- Efficient Search Space Reduction: The method shrinks the search space by restricting the number of channels preserved in each layer to a small set of percentages of the original channel count, which sharply reduces the number of candidate pruning configurations that must be considered.

- Automatic Optimization via ABC Algorithm: ABCPruner uses the ABC algorithm to automate the search, reducing the human intervention needed for hyperparameter tuning and making the pruning process more practical and less time-consuming.
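
The second and third contributions can be illustrated with a minimal sketch. This is not the authors' implementation. It assumes that each layer's keep level is an integer from 1 to `alpha`, read as "keep that many tenths of the layer's channels", and it uses a hypothetical `toy_fitness` in place of whatever quality measure a real pipeline would use, such as the validation accuracy of the pruned network. The function names, the colony parameters, and the simplified neighbour update are all illustrative choices.

```python
import math
import random


def search_space_sizes(channels, alpha):
    """Return (unrestricted, restricted) counts of candidate structures."""
    unrestricted = math.prod(channels)   # any count from 1..c_j in layer j
    restricted = alpha ** len(channels)  # only `alpha` keep levels per layer
    return unrestricted, restricted


def abc_search(num_layers, alpha, fitness, colony_size=6, cycles=30, limit=3, seed=0):
    """Toy ABC search; `fitness` must be non-negative, higher is better."""
    assert colony_size >= 2, "each source needs a distinct partner"
    rng = random.Random(seed)

    def random_structure():
        return [rng.randint(1, alpha) for _ in range(num_layers)]

    sources = [random_structure() for _ in range(colony_size)]
    scores = [fitness(s) for s in sources]
    trials = [0] * colony_size  # consecutive failed updates per source

    def try_improve(i):
        # Move source i relative to a random partner; keep the better of the two.
        partner = rng.choice([j for j in range(colony_size) if j != i])
        candidate = [
            min(alpha, max(1, round(a + rng.uniform(-1, 1) * (a - b))))
            for a, b in zip(sources[i], sources[partner])
        ]
        score = fitness(candidate)
        if score > scores[i]:
            sources[i], scores[i], trials[i] = candidate, score, 0
        else:
            trials[i] += 1

    best_score, best = max(zip(scores, sources))
    best = list(best)
    for _ in range(cycles):
        for i in range(colony_size):  # employed bees: one update per source
            try_improve(i)
        # Onlooker bees: revisit sources with probability proportional to score.
        weights = scores if sum(scores) > 0 else None
        for i in rng.choices(range(colony_size), weights=weights, k=colony_size):
            try_improve(i)
        for i in range(colony_size):  # scout bees: abandon stale sources
            if trials[i] > limit:
                sources[i] = random_structure()
                scores[i] = fitness(sources[i])
                trials[i] = 0
        cycle_score, cycle_best = max(zip(scores, sources))
        if cycle_score > best_score:
            best_score, best = cycle_score, list(cycle_best)
    return best, best_score


# Hypothetical "ideal" keep levels, used only to give the toy fitness a peak.
target = [3, 5, 2, 7, 4]


def toy_fitness(structure):
    return 1.0 / (1.0 + sum(abs(a - b) for a, b in zip(structure, target)))


best, best_score = abc_search(num_layers=5, alpha=7, fitness=toy_fitness)
full, reduced = search_space_sizes([64, 128, 256, 512, 512], alpha=7)
```

In this toy setting the restricted space has `7 ** 5` candidates, against `2 ** 39` when every channel count is allowed. That gap is why the restriction matters once each fitness evaluation involves training a network.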

Empirical Results and Insights

The paper provides comprehensive evaluations on widely used networks, including VGGNet, GoogLeNet, and several ResNet variants, on the CIFAR-10 and ILSVRC-2012 datasets. The key findings show that ABCPruner achieves substantial reductions in parameters, FLOPs, and channel counts without appreciable loss of accuracy:

- For VGGNet-16 on CIFAR-10, it reduced parameters by 88.68% while improving model accuracy slightly.

- On ResNet architectures applied to ILSVRC-2012, ABCPruner demonstrated a balanced trade-off between model accuracy and computational efficiency, especially in deeper architectures like ResNet-50 and ResNet-152.
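
To see why a modest cut in channels can yield a large cut in parameters, the following sketch counts the weights of a small stack of convolutional layers before and after pruning. The four-layer stack, the 3x3 kernels, and the uniform 50% keep level are hypothetical values chosen for illustration. They are not VGGNet-16 and do not reproduce any figure from the paper.

```python
def conv_params(channels, in_channels=3, kernel=3):
    """Count weights in a plain stack of k x k conv layers (biases ignored)."""
    total = 0
    prev = in_channels
    for c in channels:
        total += prev * c * kernel * kernel  # each filter spans all input channels
        prev = c
    return total


def pruned_structure(channels, keep_levels):
    """Apply per-layer keep levels given in tenths (5 means keep 50%)."""
    return [max(1, c * v // 10) for c, v in zip(channels, keep_levels)]


original = [64, 128, 256, 512]  # hypothetical layer widths
levels = [5, 5, 5, 5]           # hypothetical structure: keep half of every layer
pruned = pruned_structure(original, levels)

# Fraction of weights removed by moving from `original` to `pruned`.
reduction = 1 - conv_params(pruned) / conv_params(original)
```

Because a layer's weight count depends on both its input and its output width, halving every layer removes roughly three quarters of the weights in this example. Only the first layer, whose input is the fixed 3-channel image, shrinks linearly.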

Comparative Evaluation

ABCPruner was benchmarked against other state-of-the-art methods, both manual and automated, such as ThiNet and MetaPruning. It showed superior performance in FLOPs reduction and accuracy retention while requiring fewer epochs for training and fine-tuning. Notably, ABCPruner's one-stage pruning and fine-tuning process was more effective than the two-stage approaches of some automatic methods.

Implications and Future Directions

ABCPruner is a meaningful advance in neural network pruning: it demonstrates that a pruned network's effectiveness depends heavily on identifying the optimal pruned structure rather than on heuristic-based channel selection. Practically, this implies a more streamlined and potentially more adaptable framework for deploying deep CNNs on resource-constrained devices. Theoretically, it reinforces the view that, beyond optimizing network weights, architectural choices are crucial for efficient model design.

Future research could explore applying ABCPruner to more complex neural architectures and to real-time settings, expanding the scope of channel pruning in adaptive neural network deployment. Hybrid approaches that combine the simplicity and speed of ABCPruner with other optimization algorithms may also further improve the efficiency of network pruning.

In conclusion, "Channel Pruning via Automatic Structure Search" shifts the focus of channel pruning toward automatically searching for the optimal pruned structure, a meaningful step forward for CNN compression.