Unmasking DeepFakes with Simple Features

This paper presents an approach to detecting AI-generated fake images, known as DeepFakes, based on classical frequency domain analysis. The authors combine frequency components with a straightforward classifier to differentiate between real and fake face images. The method stands out for achieving high accuracy with minimal training data, and it performs well even in unsupervised settings.

The paper begins by addressing the urgent need for efficient DeepFake detection mechanisms, given the proliferation of fake digital content enabled by advances in deep generative models. Despite the realism achieved by modern deep learning techniques, the paper observes that fake images often exhibit artifacts that are invisible to the naked eye but detectable in other domains, such as the frequency domain.

Methodology

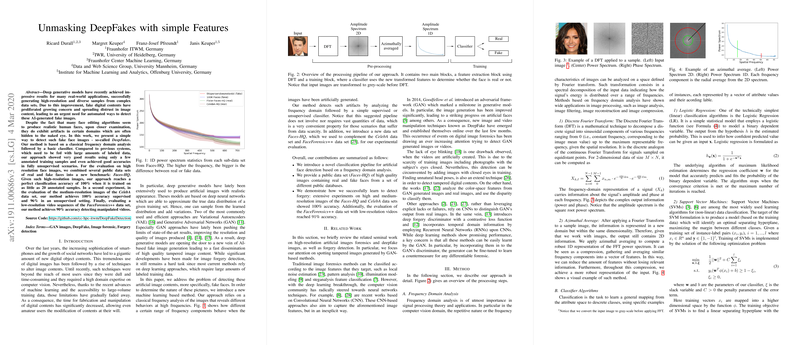

The core of the proposed method involves transforming images from the spatial domain to the frequency domain using the Discrete Fourier Transform (DFT). Azimuthal averaging, that is, averaging the power spectrum over rings of equal radial frequency, then compresses the 2D frequency information into a robust 1D power spectrum representation. By working in the frequency domain, the method exploits measurable differences in high-frequency behavior between real and fake images, which serve as a dependable feature for classification.
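The transform-then-average step can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function names `azimuthal_average` and `spectrum_feature` are hypothetical, the input is assumed to be a single-channel (grayscale) array, and rings are formed by truncating the radius to an integer, which is one of several reasonable binning choices.

```python
import numpy as np

def azimuthal_average(power_2d):
    """Average a centered 2D power spectrum over rings of equal integer radius.

    Hypothetical helper: radius is measured from the array center and
    truncated to an integer to form the ring index.
    """
    h, w = power_2d.shape
    cy, cx = h // 2, w // 2
    y, x = np.indices((h, w))
    ring = np.hypot(y - cy, x - cx).astype(int)  # ring index per pixel
    sums = np.bincount(ring.ravel(), weights=power_2d.ravel())
    counts = np.bincount(ring.ravel())
    return sums / np.maximum(counts, 1)  # guard against empty rings

def spectrum_feature(gray_image):
    """Return a 1D power spectrum for a 2D grayscale image (assumed input)."""
    # 2D DFT, shifted so the zero frequency sits at the array center.
    freq = np.fft.fftshift(np.fft.fft2(gray_image))
    power = np.abs(freq) ** 2
    return azimuthal_average(power)

# Usage: a random 64x64 array standing in for a grayscale face crop.
rng = np.random.default_rng(0)
feature = spectrum_feature(rng.random((64, 64)))
```

The resulting vector has one entry per radial frequency bin, so its length depends only on the image size, which is what makes it convenient as a fixed-length input to a simple classifier.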

The classifier component is flexible, accommodating both supervised and unsupervised learning scenarios. The authors test multiple classifiers, including Support Vector Machines (SVM), Logistic Regression, and K-Means clustering, demonstrating the method's versatility across different learning paradigms.
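To make the unsupervised setting concrete, the sketch below runs a plain two-cluster k-means on 1D spectrum features. Everything here is an illustrative assumption rather than the paper's setup: the data are synthetic log-power spectra in which one class is given an artificial offset in the highest frequency bins, and `two_means` and `synthetic_spectra` are hypothetical helper names.

```python
import numpy as np

def two_means(features, n_iter=50):
    """Plain 2-cluster k-means (Lloyd's algorithm) on the rows of `features`."""
    # Deterministic init (an assumption of this sketch): the samples with the
    # smallest and largest feature sums serve as the starting centers.
    totals = features.sum(axis=1)
    centers = features[[totals.argmin(), totals.argmax()]].astype(float)
    for _ in range(n_iter):
        # Distance of every sample to both centers, then nearest-center labels.
        dists = np.linalg.norm(features[:, None, :] - centers[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        new_centers = np.array([
            features[labels == k].mean(axis=0) if np.any(labels == k) else centers[k]
            for k in range(2)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels, centers

def synthetic_spectra(n, tail_boost, rng, n_bins=64):
    """Synthetic log-power spectra; NOT real data, for illustration only."""
    freqs = np.arange(1, n_bins + 1)
    base = -2.0 * np.log(freqs)                  # power decaying with frequency
    tail = tail_boost * (freqs > 0.75 * n_bins)  # offset in the top quarter of bins
    return base + tail + rng.normal(0.0, 0.1, size=(n, n_bins))

rng = np.random.default_rng(0)
real = synthetic_spectra(50, 0.0, rng)  # stand-in for real-image spectra
fake = synthetic_spectra(50, 1.0, rng)  # stand-in with altered high frequencies
X = np.vstack([real, fake])
y = np.array([0] * 50 + [1] * 50)

labels, _ = two_means(X)
# Cluster ids are arbitrary, so score against both possible label assignments.
accuracy = max(np.mean(labels == y), np.mean(labels != y))
```

In the supervised setting, the same feature matrix would instead be passed, together with a small set of labels, to a classifier such as an SVM or logistic regression.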

Experimental Evaluation

The authors conduct extensive experiments using large datasets composed of both real and fake faces, including a newly introduced high-resolution dataset called Faces-HQ. The results are compelling:

- The proposed method achieves perfect binary classification accuracy (100%) for high-resolution face images using as few as 20 labeled training examples when tested on the Faces-HQ dataset.

- For medium-resolution images from the CelebA dataset, the method maintains 100% accuracy in a supervised setting and 96% in an unsupervised scenario.

- When applied to low-resolution video data from the FaceForensics++ dataset, the method achieves 90% accuracy in detecting manipulated videos, underscoring its robustness even when confronted with lower resolution content.

Implications and Future Directions

The paper's findings have significant implications for image forensics, particularly for DeepFake detection. The ability to distinguish genuine from artificially manipulated content using minimal training data offers a promising solution for settings where labeled data is scarce.

The theoretical contributions of this work lie in the demonstration that simple frequency domain features can be sufficiently informative to distinguish forged images effectively. This challenges the common reliance on data-intensive deep learning methods and opens possibilities for novel detection strategies that emphasize feature economy and interpretability.

In terms of future developments, the authors' method lays the groundwork for applying frequency domain analysis to other forms of AI-generated content, potentially extending beyond facial images. Furthermore, exploring more intricate or adaptive frequency domain transformations could yield even more refined results.

Overall, this paper offers a substantial contribution to the field of digital forensics, with practical applications in safeguarding against the misuse of AI-generated content. As deep generative models continue to evolve, lightweight and efficient detection methods like the one proposed here will be crucial assets in maintaining digital content integrity.