Comprehensive Evaluation and Insight into Detecting AI-Synthesized Human Face Images

Introduction

Advances in generative AI and deep learning have made synthesized human face images markedly more realistic, raising concerns about their potential misuse. Considerable progress has been made in detecting manipulations of existing images (deepfakes), but detecting entirely synthesized faces poses its own challenges, sharpened by the emergence of Diffusion Models (DMs), which are known for photorealistic outputs. This paper constructs a benchmark to evaluate how well state-of-the-art detectors generalize across images produced by different generative models, including Generative Adversarial Networks (GANs) and DMs. It also examines detection through frequency domain analysis and shows that training detectors on frequency representations significantly improves detection performance on synthesized human faces.

Benchmark Development

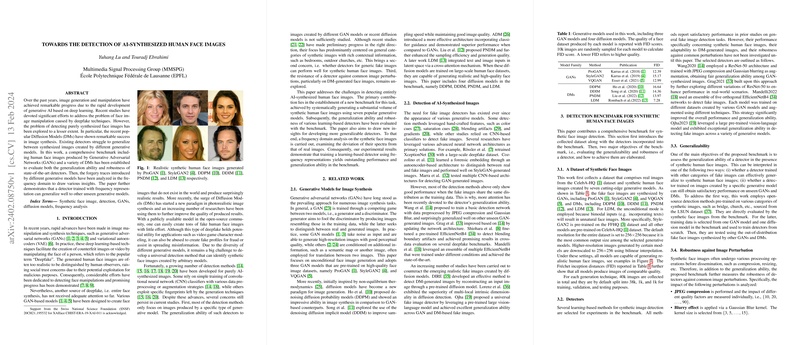

The core of this work is a benchmark of synthetic images generated by seven generative models, covering both GANs and DMs. The benchmark probes two questions: how well existing detectors generalize to synthetic faces produced by different generation techniques, and how robust they are to common image perturbations such as compression and noise.

- Dataset and Generative Models: The benchmark covers three GAN models (ProGAN, StyleGAN2, VQGAN) and four diffusion models (DDPM, DDIM, PNDM, LDM). The synthetic faces are generated using the CelebA-HQ dataset, which keeps them realistic and makes the detection task challenging.

- Detectors in Benchmark: Four existing fake image detectors with strong reported performance are evaluated on the benchmark. The results highlight how difficult it is to apply models trained on generic data to the specific case of synthetic human faces.
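The cross-generator evaluation described above can be sketched roughly as follows. This is a minimal illustration, not the paper's evaluation code: `evaluate_across_generators`, `score_fn`, and the 0.5 threshold are hypothetical names and choices, and the detector is assumed to be any callable that maps a batch of images to per-image "fake" scores in [0, 1].

```python
import numpy as np


def evaluate_across_generators(score_fn, real_images, fake_sets, threshold=0.5):
    """Return balanced real-vs-fake accuracy per generator.

    score_fn    -- hypothetical detector: (N, H, W, C) array -> N scores,
                   where a higher score means "more likely synthetic"
    real_images -- (N, H, W, C) array of real faces
    fake_sets   -- dict mapping a generator name (e.g. "ProGAN", "DDPM")
                   to an (M, H, W, C) array of its synthetic faces
    threshold   -- assumed decision threshold on the score
    """
    # Real images are correct when the detector does NOT flag them.
    real_flagged = np.asarray(score_fn(real_images)) >= threshold
    real_correct = int(np.sum(~real_flagged))

    results = {}
    for name, fakes in fake_sets.items():
        # Fake images are correct when the detector flags them.
        fake_flagged = np.asarray(score_fn(fakes)) >= threshold
        fake_correct = int(np.sum(fake_flagged))
        total = len(real_images) + len(fakes)
        results[name] = (real_correct + fake_correct) / total
    return results
```

Reporting one number per generator, rather than a single pooled accuracy, makes it visible when a detector handles one family (say, GANs) well but fails on another (say, diffusion models).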

Insights from Frequency Domain Analysis

A central contribution of this work is its study of fake image detection in the frequency domain. Whereas spatial analysis looks for patterns and discrepancies in the image content itself, frequency domain analysis examines the image's spectrum for anomalies introduced by the generative process.

- Forgery Traces in Frequency Domain: The analysis reveals distinct signatures in the frequency spectra of synthetic faces, particularly those generated by diffusion models, which often evade detection in spatial examinations.

- Frequency Representation for Detector Training: Building on this insight, the paper demonstrates that detectors trained on frequency representations of images show a marked improvement in detecting synthetic faces across a variety of generative models.
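As a rough illustration of what a frequency representation can look like, the sketch below computes a centered log-magnitude spectrum with a 2D FFT and a radially averaged profile of it. The summary does not specify which transform the paper uses, so the FFT, the `log1p` scaling, the grayscale conversion, and the function names `log_magnitude_spectrum` and `radial_profile` are all assumptions made for this example.

```python
import numpy as np


def log_magnitude_spectrum(image):
    """Centered log-magnitude spectrum of an (H, W) or (H, W, C) image."""
    image = np.asarray(image, dtype=np.float64)
    if image.ndim == 3:
        # Assumption: average the channels to get a single grayscale plane.
        image = image.mean(axis=2)
    # fftshift moves the zero-frequency (DC) term to the array center.
    spectrum = np.fft.fftshift(np.fft.fft2(image))
    # log1p compresses the large dynamic range of the magnitudes.
    return np.log1p(np.abs(spectrum))


def radial_profile(spectrum):
    """Average a centered 2D spectrum over rings of equal integer radius."""
    h, w = spectrum.shape
    y, x = np.indices((h, w))
    radius = np.hypot(y - h // 2, x - w // 2).astype(int)
    sums = np.bincount(radius.ravel(), weights=spectrum.ravel())
    counts = np.bincount(radius.ravel())
    return sums / np.maximum(counts, 1)
```

Under these assumptions, the 2D output of `log_magnitude_spectrum` could be fed to a detector in place of the raw pixels, while `radial_profile` gives a compact 1D curve that is convenient for comparing the average spectra of real and synthetic faces.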

Performance and Generalization Ability

The experimental results expose the limits of existing detection methods, particularly their difficulty generalizing across different synthetic generation techniques and staying robust under image perturbations. Generalization improves significantly when detectors are trained on frequency representations.

- Detector Evaluation: Among tested detectors, a notable variation in effectiveness is observed, with models trained on frequency representations outperforming their counterparts trained on raw images.

- Robustness Against Perturbations: The benchmark also evaluates the selected detectors against various image perturbations, underscoring the importance of robustness in practical applications of synthetic image detection.
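The two perturbations named in the benchmark description, compression and noise, can be sketched as below. The specific settings are assumptions for illustration only (JPEG quality 75, Gaussian noise with standard deviation 5 on a 0 to 255 scale), as are the helper names `jpeg_compress` and `add_gaussian_noise`; the JPEG round trip relies on the Pillow library.

```python
import io

import numpy as np
from PIL import Image


def jpeg_compress(image, quality=75):
    """Round-trip an (H, W, 3) uint8 image through in-memory JPEG encoding."""
    buffer = io.BytesIO()
    Image.fromarray(image).save(buffer, format="JPEG", quality=quality)
    buffer.seek(0)
    return np.array(Image.open(buffer).convert("RGB"))


def add_gaussian_noise(image, sigma=5.0, seed=0):
    """Add zero-mean Gaussian noise to a uint8 image and clip to [0, 255]."""
    rng = np.random.default_rng(seed)
    noisy = image.astype(np.float64) + rng.normal(0.0, sigma, size=image.shape)
    return np.clip(np.rint(noisy), 0, 255).astype(np.uint8)
```

A robustness check would apply each perturbation to the test images at several strengths and re-run the detector, recording how accuracy changes relative to the unperturbed images.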

Future Directions

This work provides a starting point for future research on detecting AI-synthesized human faces and highlights the effectiveness of frequency domain analysis. The demonstrated approach supports the development of more resilient detectors and offers a way to improve existing models.

Conclusion

The detection of AI-synthesized human face images presents considerable challenges, accentuated by the evolving capabilities of generative models. This paper contributes a valuable benchmark for the evaluation of detection methods and introduces an innovative approach leveraging frequency domain analysis to improve detection performance. As generative technologies advance, continued adaptation and enhancement of detection methodologies will remain crucial in mitigating potential misuses of synthesized imagery.