Text Summarization with Pretrained Encoders

"Text Summarization with Pretrained Encoders" by Yang Liu and Mirella Lapata, focuses on applying Bidirectional Encoder Representations from Transformers (BERT) for text summarization tasks. The paper proposes a unified framework for both extractive and abstractive summarization leveraging the capabilities of pretrained LLMs.

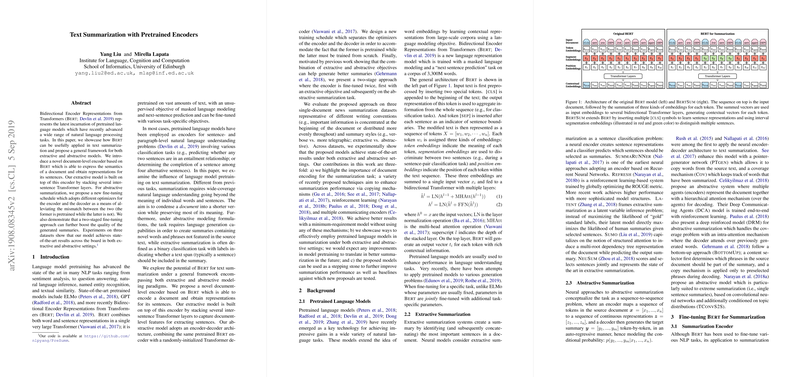

Document-Level Encoder Based on BERT

The cornerstone of this paper is a novel document-level encoder called "BertSum", which is a modification of the BERT architecture augmented for summarization tasks. Traditional BERT models are designed for token-level tasks, making their adaptation to summarization non-trivial. The authors resolve this by inserting multiple [CLS] tokens to demarcate sentence boundaries and by using interval segmentation embeddings to distinguish these sentences within a document. This allows BertSum to generate sentence-level representations essential for the summarization task.

Extractive Summarization Model

For extractive summarization, the paper introduces "BertSumExt," which employs inter-sentence Transformer layers stacked atop the BertSum encoder. These additional layers facilitate the capture of document-level features, crucial for extracting summary-worthy sentences. The extractive model treats the task as a binary classification problem, assigning a label to each sentence in a document to indicate its inclusion in the summary. Extensive testing on the CNN/DailyMail and New York Times (NYT) datasets demonstrates that BertSumExt significantly outperforms prior extractive summarization models.

Abstractive Summarization Model

In the context of abstractive summarization, the researchers implement an encoder-decoder architecture. The encoder is the same BertSum model, while the decoder is a randomly initialized 6-layer Transformer. Recognizing the potential training instability caused by the mismatch between the pretrained encoder and the randomly initialized decoder, the authors propose a novel fine-tuning schedule that employs distinct optimizers for the encoder and decoder, each with tailored learning rates and warmup steps. Furthermore, they suggest a two-stage fine-tuning strategy, initially training the encoder with an extractive objective before switching to the abstractive objective. Models like "BertSumAbs" and "BertSumExtAbs" exhibit robust performance across datasets in this setting, often matching or surpassing state-of-the-art results.

Evaluation and Human Assessment

Experiments conducted on the CNN/DailyMail, NYT, and XSum datasets reveal that the proposed models consistently reach new performance benchmarks, particularly in terms of ROUGE scores. BertSum-based models outperform both extractive and abstractive state-of-the-art summarization systems.

Beyond automatic evaluation, human assessments via a question-answering (QA) paradigm and Best-Worst Scaling provide additional evidence of the superior quality and informativeness of abstracts generated by BertSum models compared to existing baselines.

Implications and Future Work

The implications of incorporating pretrained encoders like BERT in summarization are manifold. Practically, it signifies a step towards more intelligent and nuanced automated summarization systems capable of handling diverse document styles and summary lengths. Theoretically, it underscores the importance of hierarchical and contextual embeddings in understanding and condensing extensive textual data.

The paper opens several avenues for future exploration. One potential direction is extending the current framework to multi-document summarization tasks, addressing challenges related to diverse information integration across documents. Another area worth exploring is enhancing the abstractive capabilities of BertSum by investigating more sophisticated decoder architectures or integrating attention mechanisms.

In conclusion, this research solidifies the role of pretrained LLMs in advancing text summarization technology, illustrating their adaptability, robustness, and potential as foundational components for future innovations in natural language processing.