An Overview of Fast Abstractive Summarization with Reinforce-Selected Sentence Rewriting

The paper "Fast Abstractive Summarization with Reinforce-Selected Sentence Rewriting" (Chen and Bansal, ACL 2018) presents a hybrid approach to document summarization that combines extractive and abstractive methods. The model first selects salient sentences from the source document (extraction) and then rewrites each selected sentence (abstraction) to produce a concise summary. This design aims to keep the strengths of both paradigms while addressing problems that end-to-end abstractive models face on long documents, namely redundancy and slow inference, without giving up language fluency.

Model Architecture and Training

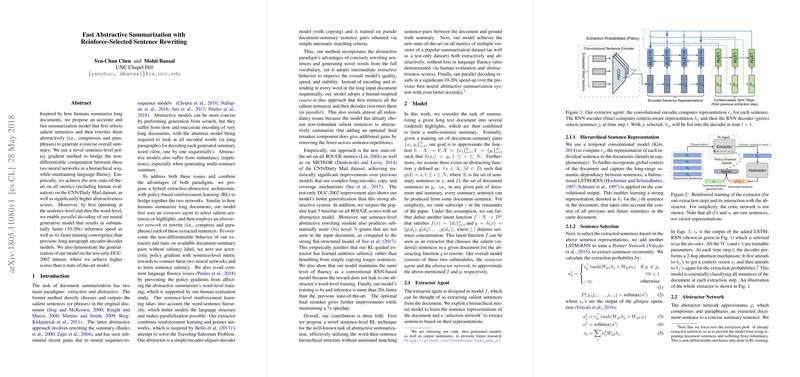

The model operates in two stages: extraction and abstraction. For extraction, the paper introduces an agent built on a hierarchical neural model that selects the most relevant sentences one at a time. The agent is trained with a novel sentence-level policy gradient method based on actor-critic reinforcement learning (RL): the abstractor's rewrites of the selected sentences are scored with ROUGE against the reference summary, and these scores serve as rewards from which the agent learns sentence saliency.
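To make the reward signal more concrete, here is a minimal sketch of how per-step rewards, discounted returns, and actor-critic advantages could be computed. It assumes, as a simplification, that the sentence selected at step t is rewarded by the ROUGE-L F1 between its rewrite and the t-th reference summary sentence. It uses a hand-rolled, whitespace-tokenized ROUGE-L instead of the official ROUGE toolkit, and the critic's value estimates are passed in as plain numbers. All function names and the discount value `gamma` are illustrative and are not taken from the authors' code.

```python
# Sketch only: simplified ROUGE-L (whitespace tokens, no stemming) and
# hypothetical helper names; not the official ROUGE toolkit or the authors' code.
from typing import List


def lcs_length(a: List[str], b: List[str]) -> int:
    """Length of the longest common subsequence, via row-by-row dynamic programming."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b):
            curr.append(prev[j] + 1 if x == y else max(prev[j + 1], curr[j]))
        prev = curr
    return prev[-1]


def rouge_l_f1(candidate: str, reference: str) -> float:
    """ROUGE-L F1 from LCS precision and recall (assumed simplification)."""
    cand, ref = candidate.split(), reference.split()
    lcs = lcs_length(cand, ref)
    if lcs == 0:
        return 0.0
    precision, recall = lcs / len(cand), lcs / len(ref)
    return 2 * precision * recall / (precision + recall)


def discounted_returns(rewards: List[float], gamma: float) -> List[float]:
    """R_t = r_t + gamma * R_{t+1}, accumulated from the last step backwards."""
    returns: List[float] = []
    running = 0.0
    for r in reversed(rewards):
        running = r + gamma * running
        returns.append(running)
    return returns[::-1]


def advantages(returns: List[float], values: List[float]) -> List[float]:
    """Advantage = observed return minus the critic's baseline estimate."""
    return [ret - v for ret, v in zip(returns, values)]


if __name__ == "__main__":
    # Toy data: one rewrite per extraction step, aligned with reference sentences.
    rewrites = ["police arrested the suspect on friday", "the storm closed schools"]
    references = ["the suspect was arrested by police on friday", "schools were closed by the storm"]
    rewards = [rouge_l_f1(g, s) for g, s in zip(rewrites, references)]
    rets = discounted_returns(rewards, gamma=0.95)  # gamma chosen arbitrarily
    print(advantages(rets, values=[0.5, 0.3]))  # hypothetical critic outputs
```

A positive advantage means the chosen sentence led to a better-than-expected rewrite, so the actor is pushed toward choosing it again; the critic's baseline reduces the variance of that signal.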

In the abstraction stage, each selected sentence is compressed and paraphrased by an encoder-aligner-decoder model with a copy mechanism, which lets the decoder generate new words while also copying rare or out-of-vocabulary words, such as names, directly from the source sentence. Because the extractor picks distinct sentences and the abstractor only has to rewrite one short sentence at a time, separating selection from rewriting reduces redundancy and improves fluency.
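The copy mechanism can be illustrated with the widely used pointer-generator mixture, in which a gate `p_gen` blends the decoder's vocabulary distribution with the attention (alignment) weights over source tokens. Treat this as an assumed, simplified formulation rather than a transcription of the paper's equations; `copy_distribution`, `p_gen`, and the toy inputs are hypothetical.

```python
# Sketch of a pointer-generator-style copy mixture. The formulation and all
# names here are assumptions for illustration, not the paper's exact equations.
from typing import Dict, List


def copy_distribution(
    p_vocab: Dict[str, float],
    attention: List[float],
    source_tokens: List[str],
    p_gen: float,
) -> Dict[str, float]:
    """P(w) = p_gen * P_vocab(w) + (1 - p_gen) * (attention mass on source copies of w)."""
    if not 0.0 <= p_gen <= 1.0:
        raise ValueError("p_gen must lie in [0, 1]")
    if len(attention) != len(source_tokens):
        raise ValueError("expected one attention weight per source token")
    final = {w: p_gen * p for w, p in p_vocab.items()}
    for weight, token in zip(attention, source_tokens):
        # Words missing from the vocabulary (e.g. rare names) get mass only by copying.
        final[token] = final.get(token, 0.0) + (1.0 - p_gen) * weight
    return final


if __name__ == "__main__":
    p_vocab = {"the": 0.5, "said": 0.3, "officials": 0.2}  # toy decoder output
    source = ["Nakamura", "said", "the"]  # "Nakamura" is out of vocabulary
    attention = [0.7, 0.2, 0.1]  # toy aligner weights over the source sentence
    dist = copy_distribution(p_vocab, attention, source, p_gen=0.6)
    print(sorted(dist.items(), key=lambda kv: -kv[1]))
```

The out-of-vocabulary name receives nonzero probability purely through copying, which is what allows a rewriter to keep crucial entities that a fixed output vocabulary would miss.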

Empirical Results

On the CNN/Daily Mail dataset, the model achieves new state-of-the-art results on all ROUGE metrics and on METEOR, and human evaluation confirms that its summaries remain fluent. It is also substantially faster: inference is 10-20x faster and training converges 4x faster than with prior long-paragraph encoder-decoder models. The model also generalizes well to the test-only DUC-2002 dataset, where it scores higher than a state-of-the-art abstractive system.

Theoretical and Practical Implications

Because the abstractor rewrites each extracted sentence independently, the rewrites can be decoded in parallel, which accounts for much of the speedup. This makes the hybrid approach particularly relevant for real-time summarization, where latency is critical.
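The parallelism argument can be sketched as a two-stage pipeline: extract once, then rewrite every selected sentence independently. In this sketch `extract` and `rewrite` are hypothetical stand-ins for the trained extractor and abstractor, and a thread pool is used only to make the independence of the rewrites explicit.

```python
# Sketch of the extract-then-rewrite control flow. `extract` and `rewrite`
# are hypothetical stand-ins for the trained extractor and abstractor.
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def summarize(
    sentences: List[str],
    extract: Callable[[List[str]], List[int]],
    rewrite: Callable[[str], str],
    max_workers: int = 4,
) -> List[str]:
    # Stage 1: the extractor chooses which sentences to keep (indices into `sentences`).
    selected = [sentences[i] for i in extract(sentences)]
    # Stage 2: each rewrite depends only on its own sentence, so the calls can run
    # concurrently; Executor.map returns results in input order.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(rewrite, selected))


def toy_extract(sentences: List[str]) -> List[int]:
    # Hypothetical heuristic: keep the two longest sentences, in document order.
    longest = sorted(range(len(sentences)), key=lambda i: len(sentences[i]), reverse=True)[:2]
    return sorted(longest)


def toy_rewrite(sentence: str) -> str:
    # Hypothetical "compression": keep only the clause before the first comma.
    return sentence.split(",")[0].rstrip(".").strip() + "."


if __name__ == "__main__":
    doc = [
        "A storm hit the coast on Monday, officials said.",
        "Schools closed.",
        "Thousands lost power, according to the utility company.",
    ]
    print(summarize(doc, toy_extract, toy_rewrite))
```

In a real neural system the parallelism would more likely come from batching all selected sentences through the abstractor in a single forward pass on a GPU; the thread pool here just shows that no rewrite waits on another.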

On the methodological side, training the sentence extractor with reinforcement learning offers a way around the non-differentiable step in this kind of summarization: the hard selection of sentences that connects the extractor to the abstractor. This approach might encourage further use of RL in other NLP tasks that require structured output.
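The key idea behind sidestepping the non-differentiable selection can be shown with the score-function (REINFORCE-style) gradient for a single discrete choice, scaled by an advantage as in actor-critic training. The sketch assumes the extractor's scores over candidate sentences are available as a plain list of logits; it is a generic illustration of the technique, not the authors' implementation, and the function names are hypothetical.

```python
# Sketch of a score-function (REINFORCE-style) gradient for one discrete
# sentence choice. The logits are assumed to come from some upstream scorer;
# this is an illustration, not the authors' implementation.
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def policy_gradient_wrt_logits(
    logits: List[float], chosen: int, advantage: float
) -> List[float]:
    """Gradient of advantage * log softmax(logits)[chosen] with respect to the logits.

    Equals advantage * (one_hot(chosen) - softmax(logits)). The sampled index only
    picks which log-probability is differentiated; nothing is differentiated
    through the discrete selection itself.
    """
    probs = softmax(logits)
    return [advantage * ((1.0 if i == chosen else 0.0) - p) for i, p in enumerate(probs)]


if __name__ == "__main__":
    logits = [1.0, 2.0, 0.5]  # toy scores for three candidate sentences
    grad = policy_gradient_wrt_logits(logits, chosen=0, advantage=0.8)
    # Gradient ascent with a positive advantage raises the chosen sentence's logit.
    print([round(g, 4) for g in grad])
```

Because the gradient is taken of the log-probability rather than of the selection, the reward (for instance a ROUGE score computed on the abstractor's output) can be any black-box function of the chosen sentences.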

Future Directions

The paper opens up several avenues for future research. Making the abstractive module operate on even more abstract representations, so that redundancy can be reduced further, may improve summary quality. Extending the model to datasets with more varied styles and formats would also strengthen the evidence for its generalization and broaden its applicability.

The release of the source code, pretrained models, and output summaries promotes transparency and supports continued research in the NLP community. Follow-up work might adapt the model to other languages or apply it to other domains, such as legal or biomedical documents.

In conclusion, the paper effectively blends extractive and abstractive summarization techniques into a novel and efficient approach to document summarization. Its contributions to speed, scalability, and fluency set a new benchmark for future research in the field.