Analysis of Multi-headed Attention: Efficiency and Importance in NLP Models

The paper "Are Sixteen Heads Really Better than One?" (Michel, Levy, and Neubig, NeurIPS 2019) investigates how much each head of multi-headed attention (MHA) actually contributes in two widely used models: a Transformer for machine translation and BERT. MHA has been integral to state-of-the-art results in tasks such as machine translation and natural language inference, and the work asks whether all of those heads are really necessary.

Key Observations and Methodologies

The central finding of the paper is that many attention heads in these models are redundant. The authors show that a large fraction of heads can be removed at test time, after training, without significantly hurting performance. This holds for both models and their tasks, suggesting that trained models do not make full use of the capacity that multiple heads provide.

Experimental Setup

Two primary models are considered:

- WMT Model: A "large" Transformer model for English-to-French machine translation, trained on the WMT2014 dataset. Performance is measured by BLEU on newstest2013 and newstest2014.

- BERT Model: A pre-trained BERT base model, fine-tuned on the MultiNLI dataset for natural language inference. Performance is measured using accuracy on the validation set.

Ablation Studies

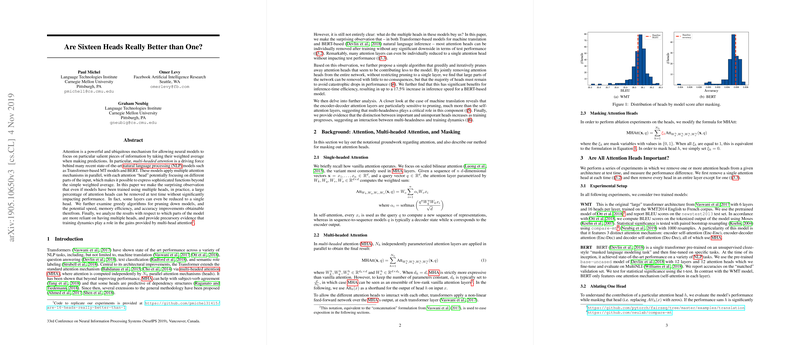

Single Head Ablation

The authors remove one attention head at a time at test time, keeping all other heads intact, and measure the impact on performance. Most heads turn out to be individually dispensable. For instance, in the encoder self-attention layers of the WMT model, removing a head produced a statistically significant change in BLEU for only 8 of the 96 heads. BERT's accuracy likewise remained stable when heads were masked one at a time.
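To make the ablation concrete, here is a minimal NumPy sketch of one self-attention layer in which each head's output is multiplied by a gate in a `head_mask` vector, so setting one entry to 0 removes that head. The function name, the toy sizes, and the random weights are illustrative assumptions; the paper scores each ablation by BLEU or accuracy, whereas this sketch only prints how far the layer output moves.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_attention(x, Wq, Wk, Wv, Wo, head_mask):
    """Self-attention over x of shape (seq, d_model).

    Wq, Wk, Wv: (n_heads, d_model, d_head); Wo: (n_heads, d_head, d_model).
    head_mask: (n_heads,) gates; 0.0 removes a head, 1.0 keeps it.
    """
    n_heads, _, d_head = Wq.shape
    out = np.zeros_like(x)
    for h in range(n_heads):
        q, k, v = x @ Wq[h], x @ Wk[h], x @ Wv[h]
        attn = softmax(q @ k.T / np.sqrt(d_head))
        # Project this head's output back to d_model and scale it by its gate
        out += head_mask[h] * (attn @ v @ Wo[h])
    return out

# Toy layer with random weights (illustrative sizes only)
rng = np.random.default_rng(0)
n_heads, d_model, d_head, seq = 4, 16, 4, 5
x = rng.normal(size=(seq, d_model))
Wq, Wk, Wv = (rng.normal(scale=d_model ** -0.5, size=(n_heads, d_model, d_head)) for _ in range(3))
Wo = rng.normal(scale=d_head ** -0.5, size=(n_heads, d_head, d_model))

full = multi_head_attention(x, Wq, Wk, Wv, Wo, np.ones(n_heads))
for h in range(n_heads):
    mask = np.ones(n_heads)
    mask[h] = 0.0  # ablate head h, keep all others
    ablated = multi_head_attention(x, Wq, Wk, Wv, Wo, mask)
    print(f"head {h}: output change {np.linalg.norm(full - ablated):.3f}")
```

Because the layer output is a gated sum over heads, ablating head h subtracts exactly that head's contribution, which is what makes one-at-a-time masking a clean test.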

Layer-wise Pruning to a Single Head

The authors then reduced one layer at a time to its single most useful head, leaving the rest of the model unchanged. Many layers can operate with just one head at test time, but the encoder-decoder attention layers of the WMT model are more sensitive to this ablation, which suggests that these layers rely more on having multiple heads.
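The single-head experiment amounts to a search over which head to keep in a given layer. This sketch assumes a hypothetical `evaluate` callback that maps a head mask for the layer to a dev-set score; the `toy_evaluate` stand-in (distance to the unpruned layer output) is a placeholder for BLEU or accuracy, not what the paper used, and only one layer is modeled.

```python
import numpy as np

def keep_single_head(n_heads, keep):
    # Gate vector that zeroes every head in the layer except `keep`
    mask = np.zeros(n_heads)
    mask[keep] = 1.0
    return mask

def best_single_head(evaluate, n_heads):
    """Try each head as the layer's only survivor and return (best_head, score).

    `evaluate` is a hypothetical callback mapping a head mask for this layer
    to a dev-set score (e.g. BLEU or accuracy); higher is better.
    """
    scores = [evaluate(keep_single_head(n_heads, h)) for h in range(n_heads)]
    best = int(np.argmax(scores))
    return best, scores[best]

# Toy stand-in: per-head outputs of one layer, shape (n_heads, seq, d_model)
rng = np.random.default_rng(0)
head_outputs = rng.normal(size=(8, 5, 16))
full = head_outputs.sum(axis=0)

def toy_evaluate(mask):
    # Negative distance to the unpruned layer output: closer is better
    layer_out = np.einsum("h,hsd->sd", mask, head_outputs)
    return -np.linalg.norm(full - layer_out)

head, score = best_single_head(toy_evaluate, n_heads=8)
print(head, round(score, 3))
```

Comparing the best single-head score against the full layer's score, layer by layer, is what reveals which layers (such as encoder-decoder attention) lose the most.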

Iterative Pruning Algorithm

To study the cumulative effect of removing many heads, the authors propose a greedy pruning procedure driven by a per-head importance score: the expected absolute gradient of the loss with respect to a gate on that head's output. The score is inspired by prior work on pruning, needs only a forward and backward pass per example to estimate, and lets heads be ranked and removed systematically.
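As we read the paper, each head's output is multiplied by a gate ξ_h, so the layer output is Σ_h ξ_h·Att_h(x), and the importance of head h is I_h = E_x |∂L(x)/∂ξ_h| evaluated with all gates at 1, then normalized within each layer. By the chain rule, ∂L/∂ξ_h is the dot product of the head's output with the gradient of the loss with respect to the layer output. The sketch below assumes a toy squared-error loss so that gradient can be written by hand in NumPy; a real model would obtain it from automatic differentiation, and `head_importance` is a hypothetical name.

```python
import numpy as np

def head_importance(head_outputs, targets):
    """Estimate I_h = E_x |dL/dxi_h| for one layer, with all gates xi_h = 1.

    head_outputs: (n_examples, n_heads, d) output of each head after projection.
    targets: (n_examples, d). Toy loss (an assumption for illustration):
    L(x) = 0.5 * ||sum_h xi_h * o_h(x) - target(x)||^2.
    """
    y = head_outputs.sum(axis=1)            # layer output with every gate at 1
    grad_y = y - targets                    # dL/dy for the squared loss
    # Chain rule: dL/dxi_h = o_h(x) . dL/dy, one value per example and head
    grads = np.einsum("nhd,nd->nh", head_outputs, grad_y)
    scores = np.abs(grads).mean(axis=0)     # expectation over examples
    return scores / np.linalg.norm(scores)  # l2-normalize within the layer

rng = np.random.default_rng(0)
outputs = rng.normal(size=(32, 4, 8))  # 32 examples, 4 heads, d = 8 (toy data)
targets = rng.normal(size=(32, 8))
print(head_importance(outputs, targets))  # lowest scores are pruning candidates
```

The absolute value is taken per example before averaging, so positive and negative gradients do not cancel out.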

The iterative pruning process shows that:

- Up to 40% of heads in BERT and 20% in the WMT model can be pruned without noticeable degradation in performance.

- Beyond these thresholds, performance drops sharply, indicating that a critical mass of heads is needed to maintain accuracy.
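The greedy loop itself can be sketched as follows, assuming a hypothetical `score_fn` that returns per-head importance for the current, partially pruned model (for example, a gradient-based score like the one described earlier, recomputed on held-out data). The 10% step and 50% cap are illustrative defaults, and re-scoring after every round is one reasonable choice rather than a detail confirmed by the paper.

```python
import numpy as np

def iterative_prune(score_fn, n_heads, step_frac=0.1, max_frac=0.5):
    """Greedy head pruning sketch.

    score_fn(mask) -> importance array of shape (n_heads,), computed on the
    currently pruned model (hypothetical callback). Each round removes
    step_frac of all heads, lowest importance first, until max_frac are gone.
    Returns the head mask after every round.
    """
    mask = np.ones(n_heads)
    per_step = max(1, int(round(step_frac * n_heads)))
    target = int(round(max_frac * n_heads))
    history = []
    while n_heads - mask.sum() < target:
        # Already-pruned heads get +inf so they are never selected again
        scores = np.where(mask > 0, score_fn(mask), np.inf)
        k = min(per_step, target - int(n_heads - mask.sum()))
        drop = np.argsort(scores)[:k]
        mask[drop] = 0.0
        history.append(mask.copy())
    return history

# Usage with fixed toy importances: head i has importance i
importances = np.arange(20, dtype=float)
masks = iterative_prune(lambda m: importances, n_heads=20)
print([int(m.sum()) for m in masks])  # heads remaining after each round
```

In practice one would evaluate BLEU or accuracy after each round and keep the last mask before performance falls off, which is how thresholds like the 20% and 40% figures are read off a pruning curve.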

Efficiency Gains

Pruning also pays off in efficiency, because removed heads no longer need to be computed. The authors report up to a 17.5% increase in inference speed for the pruned BERT model, which makes head pruning attractive for deployments constrained by speed or memory.
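Masking a head only zeroes its contribution; to actually save compute, a pruned head's slices of the query, key, value, and output projection matrices can be deleted so that every matrix multiply shrinks. The NumPy sketch below assumes a fused layout in which head h owns columns h*d_head to (h+1)*d_head of W_q, W_k, W_v and the matching rows of W_o (a common convention, assumed here). It does not measure wall-clock time; any real speedup would depend on hardware and batch size.

```python
import numpy as np

def softmax(x):
    # Numerically stable softmax over the last axis
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)

def mha(x, Wq, Wk, Wv, Wo, n_heads):
    """Fused self-attention: head h owns columns h*d_head:(h+1)*d_head of
    Wq/Wk/Wv and the same rows of Wo (an assumed layout)."""
    seq = x.shape[0]
    d_head = Wq.shape[1] // n_heads

    def split(m):  # (seq, n_heads*d_head) -> (n_heads, seq, d_head)
        return m.reshape(seq, n_heads, d_head).transpose(1, 0, 2)

    q, k, v = split(x @ Wq), split(x @ Wk), split(x @ Wv)
    attn = softmax(q @ k.transpose(0, 2, 1) / np.sqrt(d_head))
    ctx = (attn @ v).transpose(1, 0, 2).reshape(seq, n_heads * d_head)
    return ctx @ Wo

def prune_heads(Wq, Wk, Wv, Wo, keep, d_head):
    """Physically delete heads not listed in `keep` by slicing the projections."""
    idx = np.concatenate([np.arange(h * d_head, (h + 1) * d_head) for h in keep])
    return Wq[:, idx], Wk[:, idx], Wv[:, idx], Wo[idx, :]

rng = np.random.default_rng(0)
n_heads, d_head, d_model, seq = 4, 8, 32, 6
x = rng.normal(size=(seq, d_model))
Wq, Wk, Wv = (rng.normal(scale=d_model ** -0.5, size=(d_model, n_heads * d_head)) for _ in range(3))
Wo = rng.normal(scale=(n_heads * d_head) ** -0.5, size=(n_heads * d_head, d_model))

keep = [0, 2]  # surviving heads, chosen arbitrarily for the example
small = prune_heads(Wq, Wk, Wv, Wo, keep, d_head)
y = mha(x, *small, n_heads=len(keep))
print(small[0].shape)  # (32, 16): half of the query-projection columns remain
```

The sliced layer computes exactly the sum of the surviving heads' contributions, so its output matches the masked layer; only the amount of work differs.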

Analysis of Importance and Training Dynamics

Further analysis highlights that encoder-decoder attention layers in machine translation models are more reliant on multiple heads compared to self-attention layers. Additionally, the importance of heads appears to crystallize early in the training process, and the distinction between critical and redundant heads grows as training progresses.

Theoretical and Practical Implications

The findings suggest several implications for both theory and practice:

- Theoretical: The results challenge the assumption that every attention head contributes substantially to a trained MHA-based model, since many can be removed at test time. They also point to an interplay between training dynamics and head importance.

- Practical: Head pruning lets practitioners deploy smaller, faster models with little or no loss in performance, as long as pruning stays below the thresholds noted above. This matters most in real-world applications where computational resources are limited.

Future Research Directions

This paper opens several avenues for future exploration:

- Improved Pruning Techniques: Methods that adjust the number of heads dynamically during training, rather than only pruning after training, could yield models that are even smaller or faster.

- Cross-Task Generalization: Investigating the generalizability of head importance across a broader array of NLP tasks can further refine the pruning strategies.

- Training Dynamics: A deeper examination of the phases of training could provide more insights into why certain heads become crucial earlier and how this process can be controlled.

In summary, "Are Sixteen Heads Really Better than One?" offers a rigorous analysis of the necessity of multiple attention heads in Transformer and BERT models, highlighting significant opportunities for model optimization. This work underscores the balance between theoretical model capacity and practical operational efficiency, providing a roadmap for future research and development in efficient NLP model design.