Few-Shot NLG with Pre-Trained Language Model: A Comprehensive Review

The paper "Few-Shot NLG with Pre-Trained Language Model," authored by Zhiyu Chen et al., explores few-shot natural language generation (NLG) from structured data. It addresses a central limitation of neural NLG systems: their dependence on large training datasets, which is often prohibitive in real-world applications.

Research Context and Motivation

Neural end-to-end NLG models, while successful in domains with large datasets, are frequently impractical in applications where data is scarce. This paper proposes a new paradigm of few-shot NLG, aiming to significantly reduce human annotation effort and data requirements while maintaining reasonable performance levels. The central motivation is to emulate how humans derive concise textual representations from tabular data using minimal examples, thereby expanding NLG's applicability across diverse domains.

Methodological Approach

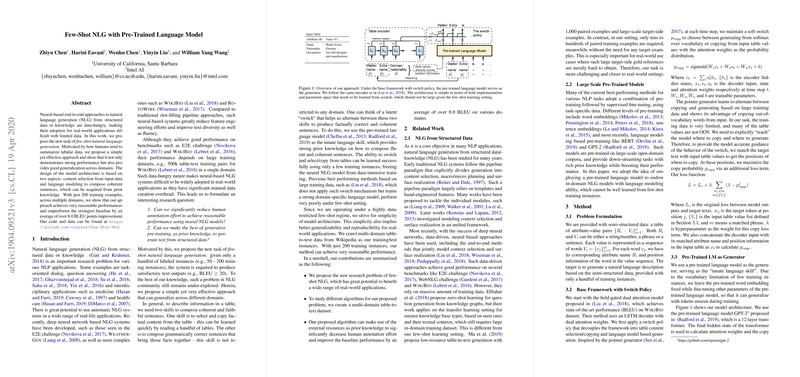

The authors introduce a structured approach that combines content selection from the input data with language modeling to produce coherent text. Their model relies on a language model pre-trained on extensive corpora (e.g., GPT-2) for fluent generation, and is fine-tuned to copy factual content from input tables, a skill that can be learned from only a few instances.
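To make the table input concrete, the sketch below flattens an attribute-value table into tokens tagged with their attribute name and position, which is one common way to expose table content to a sequence model. It is an illustration only: the function name `linearize_table`, the triple layout, and the sample record are assumptions and are not taken from the paper.

```python
def linearize_table(table):
    """Flatten {attribute: value} pairs into (attribute, position, token) triples.

    Hypothetical helper for illustration; the paper's exact input
    encoding may differ.
    """
    triples = []
    for attribute, value in table.items():
        # Split each value into whitespace tokens and record where each
        # token sits inside its attribute (1-based position).
        for position, token in enumerate(value.split(), start=1):
            triples.append((attribute, position, token))
    return triples


# Toy record, made up for this example.
record = {"name": "Ada Lovelace", "occupation": "mathematician"}
for triple in linearize_table(record):
    print(triple)
```

Keeping the attribute name and position next to each token lets a copy mechanism point at a specific table cell rather than at an undifferentiated bag of words.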

The architecture is notable for its simplicity, emphasizing a switch policy mechanism that toggles between generating text and copying from the table. This strategy is supplemented by a copy switch loss function to ensure accurate content copying, which is crucial given the constraints on training data size.
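The toggle between generating and copying can be illustrated with a small sketch in the style of pointer-generator networks. It is not the authors' implementation: the function names, the toy probabilities, and the weight `lam` are assumptions, and the penalty shown is one plausible form of a copy switch loss that would be added to the usual generation loss.

```python
def mix_distributions(p_copy, vocab_probs, attention, table_tokens):
    """Blend the generation and copy distributions for one decoding step.

    p_copy       : probability of copying at this step (0..1)
    vocab_probs  : dict token -> probability from the language model
    attention    : attention weights over table tokens (sum to 1)
    table_tokens : table tokens aligned with `attention`
    """
    # Generation path: scale the language model's distribution.
    final = {tok: (1.0 - p_copy) * p for tok, p in vocab_probs.items()}
    # Copy path: add attention mass to the tokens present in the table.
    for tok, weight in zip(table_tokens, attention):
        final[tok] = final.get(tok, 0.0) + p_copy * weight
    return final


def copy_switch_loss(p_copy_steps, target_tokens, table_tokens, lam=1.0):
    """Penalize a low copy probability on target tokens found in the table.

    Assumed form: lam * sum(1 - p_copy) over target positions whose
    token appears among the table values.
    """
    table_set = set(table_tokens)
    return lam * sum(
        1.0 - p
        for p, tok in zip(p_copy_steps, target_tokens)
        if tok in table_set
    )


# Toy decoding step, values made up for this example.
table_tokens = ["1950", "London"]
step = mix_distributions(
    p_copy=0.5,
    vocab_probs={"was": 0.6, "born": 0.4},
    attention=[0.75, 0.25],
    table_tokens=table_tokens,
)
print(step)

# Toy target sequence: "1950" and "London" should have been copied.
penalty = copy_switch_loss([0.9, 0.2, 0.6], ["born", "1950", "London"], table_tokens)
print(penalty)
```

Because the penalty only fires on tokens that occur in the table, it gives the switch a direct training signal even when very few examples are available, which is the situation the review describes.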

Experimental Validation

The model was evaluated on three domains: human biographical data, book summaries, and song descriptions. The proposed method improved on the baselines by more than 8.0 BLEU points on average and outperformed a strong domain-specific language model under few-shot conditions. Notably, the model delivered reasonable performance with only 200 training instances, which indicates its efficiency in low-resource settings.

Numerical and Comparative Insights

The quantitative results show significant gains over existing methods, such as the baseline model from prior work, which cannot leverage a pre-trained language model. Human evaluation corroborates these findings: the model generates text that is more factually correct and more grammatically fluent than that of the other approaches. This strengthens the case for deploying NLG in domains where annotated training data is scarce.

Implications and Future Directions

The implications of this research are manifold. Practically, it paves the way for more efficient deployment of NLG systems in real-world scenarios, making it particularly relevant for domains like healthcare, where data privacy and scarcity are pressing concerns. Theoretically, the integration of content selection mechanisms and pre-trained models highlights the growing importance of transfer learning in NLP.

Future research could explore extending this methodology to other types of structured inputs such as knowledge graphs and investigate more sophisticated switch mechanisms. Additionally, adapting this approach to even more challenging zero-shot scenarios could further bridge the gap between AI capabilities and human-like understanding.

Conclusion

In sum, this paper advances the field of NLG with a novel approach to few-shot learning, demonstrating that pre-trained language models coupled with a content selection mechanism can yield substantial improvements in text generation tasks with limited data. This line of research not only enhances the utility of NLG systems but also enriches the toolkit available to NLP researchers seeking scalable and efficient solutions.