RGB Image-Based Mapless Robot Navigation With Deep Reinforcement Learning

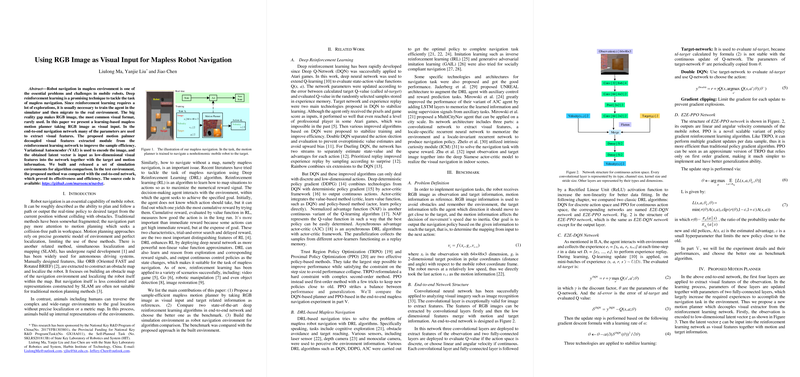

This paper addresses a significant challenge in robotics: efficient robot navigation in mapless environments using RGB images as the primary sensory input. Earlier navigation paradigms have relied on precise localization and mapping techniques such as SLAM to build obstacle maps, which limits their applicability because they depend on detailed geometric models of the environment. In contrast, this work explores deep reinforcement learning (DRL) that uses RGB images as its visual input to navigate without explicit mapping.

The authors propose a framework that decouples visual feature extraction from the reinforcement learning network. They employ a Variational Autoencoder (VAE) to encode RGB images into a latent space that captures environmental features at reduced dimensionality. This decoupling is central to improving sample efficiency, a long-standing bottleneck in DRL, which typically requires large amounts of exploration data.
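To make the latent encoding concrete, the following is a minimal sketch of the two operations that define a standard VAE bottleneck: the reparameterized sample and the KL regularizer against a unit Gaussian. It is not the authors' implementation. The 4-dimensional latent and the `mu` and `log_var` values are hypothetical stand-ins for what a convolutional encoder would output for one RGB frame.

```python
import math
import random

def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1), elementwise."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    """KL(N(mu, diag(sigma^2)) || N(0, I)), summed over latent dimensions."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv
                     for m, lv in zip(mu, log_var))

# Hypothetical encoder output for one image (assumed values, not from the paper).
mu = [0.5, -1.0, 0.0, 2.0]
log_var = [0.0, -2.0, 0.0, -4.0]

z = reparameterize(mu, log_var, random.Random(0))  # latent fed to the policy
kl = kl_to_standard_normal(mu, log_var)            # regularization term of the VAE loss
```

In a decoupled setup of this kind, the policy network would consume the low-dimensional latent (commonly `mu` or a sample `z`) instead of raw pixels, so the reinforcement learning stage does not also have to learn visual features.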

The paper compares two DRL approaches for the navigation task, one based on DQN and the other on PPO. The VAE handles the visual observations, while the learned DRL policy outputs velocity commands for the nonholonomic mobile robot. Experiments in simulated environments serve as a benchmark for evaluating the performance and efficiency gains of the proposed algorithm.
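As an illustration of what a velocity command means for a nonholonomic robot, the sketch below integrates the standard unicycle kinematic model for one time step. The summary does not state which robot model or time step the paper uses, so the function name `unicycle_step`, the Euler integration, and the numeric values are assumptions chosen for clarity.

```python
import math

def unicycle_step(x, y, theta, v, omega, dt):
    """Advance a unicycle-model pose by one Euler step.

    v is the linear velocity along the heading and omega the angular
    velocity. The robot cannot move sideways, which is the nonholonomic
    constraint.
    """
    x_new = x + v * math.cos(theta) * dt
    y_new = y + v * math.sin(theta) * dt
    # Wrap the heading into [-pi, pi).
    theta_new = (theta + omega * dt + math.pi) % (2.0 * math.pi) - math.pi
    return x_new, y_new, theta_new

# Hypothetical command: drive forward at 1 m/s for 0.5 s without turning.
pose = unicycle_step(0.0, 0.0, 0.0, v=1.0, omega=0.0, dt=0.5)
```

A policy that outputs `(v, omega)` pairs therefore steers the robot only through its heading, which is why the action space is usually described in terms of linear and angular velocity.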

Key Contributions and Results

- New Motion Planner: The primary contribution is a mapless motion planner that takes RGB images as input. It decouples visual feature extraction from the policy network, which significantly improves sample efficiency: it requires only about one-quarter to one-third of the samples needed by conventional end-to-end networks to achieve similar success rates in simulated environments.

- Algorithm Comparison and Selection: By testing two distinct DRL algorithms (E2E-DQN and E2E-PPO), the paper demonstrates the superiority of the PPO-based approach, evidenced by faster convergence and reduced sample requirements.

- Simulation Framework: The authors release a set of navigation environments, contributing to the field by enabling public benchmarking and further research.
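For readers less familiar with the two algorithm families compared above, the sketch below shows the textbook update quantities that distinguish them: the one-step temporal-difference target used by DQN and the per-sample clipped surrogate used by PPO. These are the standard formulations, not the paper's code, and the discount factor and `clip_eps=0.2` are assumed illustrative values.

```python
def dqn_td_target(reward, next_q_values, gamma, done):
    """One-step Q-learning target: r + gamma * max_a' Q(s', a').

    No bootstrapping is applied when the episode has terminated.
    """
    return reward if done else reward + gamma * max(next_q_values)

def ppo_clipped_term(ratio, advantage, clip_eps=0.2):
    """Per-sample PPO surrogate: min(r * A, clip(r, 1 - eps, 1 + eps) * A).

    ratio is pi_new(a|s) / pi_old(a|s); clipping limits how far one
    update can move the policy away from the one that collected the data.
    """
    clipped = max(1.0 - clip_eps, min(ratio, 1.0 + clip_eps))
    return min(ratio * advantage, clipped * advantage)

# Hypothetical numbers for illustration only.
target = dqn_td_target(reward=1.0, next_q_values=[0.0, 2.0], gamma=0.5, done=False)
surrogate = ppo_clipped_term(ratio=1.5, advantage=1.0)
```

DQN learns action values over a discrete action set, whereas PPO optimizes the policy directly and also handles continuous actions, which is one reason the two can differ in convergence behavior on velocity-control tasks.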

Implications and Future Prospects

The methodology of using RGB images for navigation opens new horizons for real-world applications where exhaustive mapping is impractical or impossible. The approach is likely to stimulate further research into more efficient and robust DRL algorithms tailored for robotics, potentially incorporating adaptive tuning of network architectures or hybrid sensory information to enhance performance.

The sample efficiency demonstrated by the decoupled feature extraction approach holds promise for handling more complex visual data and for applying the algorithm in real-world environments beyond simulation. Furthermore, the use of VAEs paves the way for more advanced generative models in robotics, which could support better adaptation and generalization across varied settings.

As research progresses, future work may investigate multisensory integration, safety assurances in unpredictable environments, and deployment at scale, addressing both theoretical and practical challenges in autonomous navigation.

This paper represents a noteworthy step toward bridging the gap between simulated training environments and practical deployment in real-world robotics, using RGB images for robust and efficient mapless navigation.