KBQA: Learning Question Answering over QA Corpora and Knowledge Bases

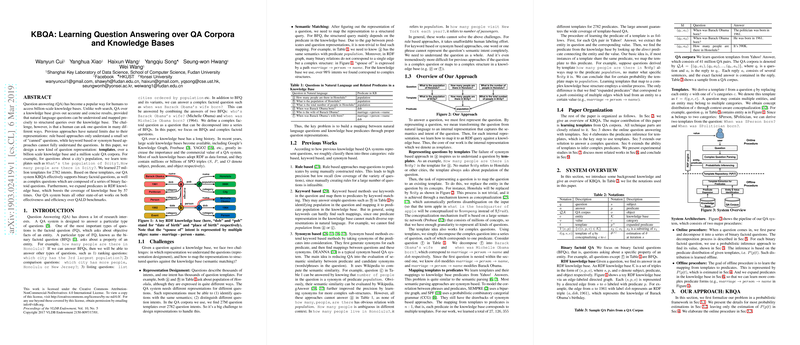

The paper "KBQA: Learning Question Answering over QA Corpora and Knowledge Bases" explores innovative methods for question answering (QA) by building a framework that effectively leverages both QA corpora and structured knowledge bases. This approach aims to address the inherent challenges of understanding natural language questions and mapping them to precise, structured queries over large-scale RDF knowledge bases. The authors propose a new model termed "KBQA" which utilizes templates to solve the problem of semantic matching between a user's question and the predicates present in a knowledge base.

Key Contributions and Methodologies

- Template-Based Representation: The paper introduces a novel idea of representing questions using templates. These templates are derived by replacing entities in the questions with their corresponding concepts, creating an abstract representation that can capture the semantic meaning of various question forms. This method allows the system to understand and categorize a plethora of question expressions that essentially ask the same information, hence enhancing coverage.

- Learning from QA Corpora: The authors leverage a large-scale QA corpus, such as Yahoo! Answers, to learn mappings from natural language questions to question templates and subsequently to RDF predicates. This learning process involves identifying frequently occurring natural language patterns and associating them with structured knowledge base queries.

- Expanded Predicate Coverage: Recognizing the complex structure of RDF graphs where predicates can represent multi-hop relationships between entities, KBQA includes expanded predicates that cover such cases. This significantly improves the system's ability to extract information from RDF graphs, expanding the coverage of the QA system beyond direct factoid queries.

- Handling Complex Questions: KBQA is designed not only to handle binary factoid questions but also to decompose complex questions into sequences of such simpler questions. This decomposition allows the system to answer intricate queries that would otherwise be challenging through a single query process.

Validation and Results

The paper validates its approach using several well-known benchmarks such as QALD, Freebase, and WebQuestions. KBQA demonstrates high precision in identifying correct predicates for given templates, outperforming existing state-of-the-art QA systems. Notably, the use of templates for representation significantly enhances the system’s precision compared to keyword or synonym-based approaches, which often struggle with the breadth of human language.

Implications and Theoretical Development

The introduction of templates as a representation mechanism in KBQA has important theoretical implications in the field of question answering. By abstracting and categorizing question forms, the model effectively bridges the gap between unstructured natural language input and structured query output. This approach also offers a robust framework for future enhancements in AI, particularly in expanding the system to include more complex language understanding capabilities.

Future Directions in AI

The concepts introduced in this paper open new avenues for research. As AI systems become more sophisticated in natural language processing, integrating deeper semantic understanding and context-awareness will be key to advancing QA systems. Furthermore, the exploration of expanded predicates will increasingly have to utilize machine learning techniques to automatically infer richer, deeper linkages within knowledge bases. Extending the current model to handle more diverse datasets and incorporating multi-lingual capabilities could also enhance the robustness and applicability of systems like KBQA in diverse real-world scenarios.

In conclusion, this paper presents a significant step forward in building effective QA systems over RDF knowledge bases, with a practical focus on improving precision and coverage by bridging the gap between natural language and structured data.