Overview of MUREL: Multimodal Relational Reasoning for Visual Question Answering

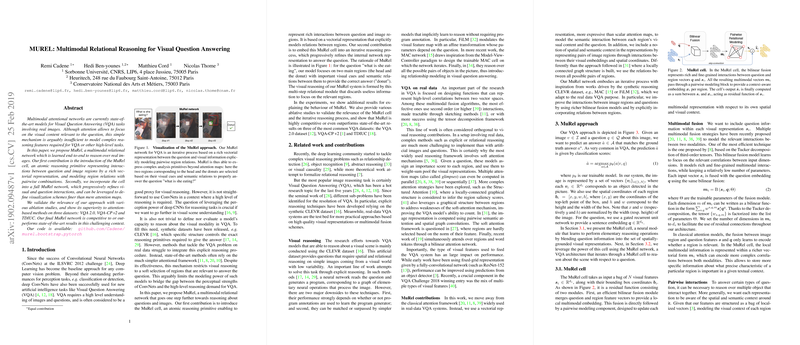

The paper "MUREL: Multimodal Relational Reasoning for Visual Question Answering" introduces an innovative architecture known as MuRel, designed to enhance the capabilities of Visual Question Answering (VQA) systems. The core contribution lies in the development of a Multimodal Relational (MuRel) cell that serves as an atomic reasoning unit, facilitating complex interactions between question features and image regions through a vectorial representation.

The authors address a limitation of traditional attention mechanisms in VQA tasks, which, while effective at identifying relevant regions in an image, may lack the capacity for complex reasoning required in high-level tasks. To overcome this, MuRel introduces a robust end-to-end multimodal relational network. The approach is validated through extensive experimentation, demonstrating superior performance over existing attention-based methods across multiple datasets, including VQA 2.0, VQA-CP v2, and TDIUC.

Key Contributions

- MuRel Cell: The introduction of the MuRel cell marks a significant advancement in modeling interactions between questions and image regions. It utilizes rich vectorial representations to capture region relationships through pairwise combinations. This allows for more granular reasoning than what is typically possible with mere attention maps.

- Iterative Refinement Process: The MuRel network leverages its cell structure to iteratively refine the representation of visual and textual interactions. This progressive refinement is critical for complex scene understanding and reasoning, and it distinguishes MuRel from more simplistic attention frameworks.

- Quantitative Validation: The MuRel network demonstrated competitive or superior performance metrics on the VQA 2.0 dataset, achieving notable improvements in accuracy over traditional attention-based models. Key metrics include a competitive overall accuracy on both test-dev and test-standard splits, emphasizing its effectiveness.

- Visualization Methods: Beyond accuracy improvements, MuRel introduces visualization schemes that provide insights into the decision-making process of the model. These schemes surpass traditional attention maps by highlighting complex region relationships pertinent to the task, thereby supporting model interpretability.

Experimental Findings

The paper presents a thorough experimental validation of the MuRel network's components:

- Comparative Performance: MuRel outperformed strong attention-based baselines using identical image features, achieving accuracy gains in contexts like the VQA-CP v2 dataset, where it mitigates linguistic biases.

- Ablation Studies: Experiments confirm the utility of both pairwise modeling and iterative reasoning within the MuRel framework, highlighting their contributions to overall performance improvements.

- Number of Iterations: The impact of iterative processing is analyzed, providing evidence that increasing the number of MuRel cell iterations generally enhances model accuracy for most question types, particularly those involving counting.

Implications and Future Directions

The introduction of the MuRel network signifies a step forward in the field of multimodal reasoning within AI, particularly for VQA applications. The model's ability to refine visual question answering processes through enhanced interaction between image regions and question semantics portends broader applications in domains requiring sophisticated scene and context comprehension.

From a theoretical standpoint, the MuRel approach could pave the way for future exploration into more complex multimodal fusion strategies and relational reasoning techniques. Practically, the principles underlying MuRel may be adapted and extended to other AI tasks that necessitate intricate understanding of visual and textual data.

In conclusion, MuRel's introduction represents a meaningful contribution to VQA methodologies, offering both performance enhancements and novel insights into the potential of multimodal relational networks. As such, it sets a promising trajectory for ongoing research efforts aimed at bridging perception and reasoning in AI systems.