RaAM: A Relation-aware Attention Model for Visual Question Answering

The paper "RaAM: A Relation-aware Attention Model for Visual Question Answering" proposes a framework that improves visual question answering (VQA) by modeling the relations between objects in an image. Its relation encoder captures both explicit and implicit visual relationships, so that semantic and spatial information is built directly into the image representation.

Key Contributions

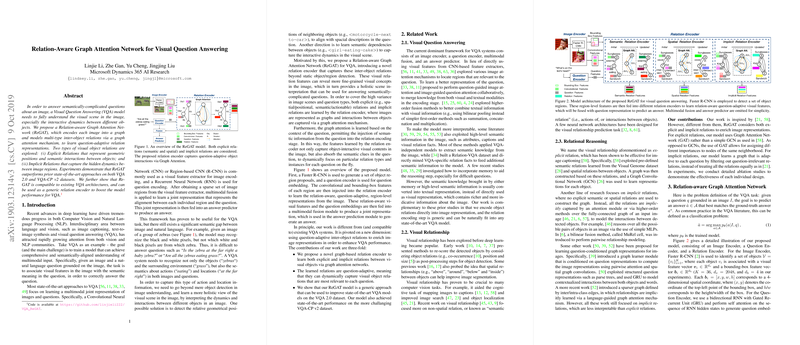

The core contribution of the paper is the Relation-aware Attention Model (RaAM), a graph-based attention mechanism. It represents each image as a graph whose nodes are objects detected by a Faster R-CNN and whose edges encode the relations between those objects. The model addresses two types of relations:

- Explicit Relations: These are spatial and semantic relations with predefined labels, obtained from classifiers pre-trained on datasets such as Visual Genome.

- Implicit Relations: These relations are learned in a question-adaptive manner, capturing latent interactions without predefined semantic or spatial categories.
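The two kinds of graph described above can be sketched as follows. This is a minimal illustration under stated assumptions and not the paper's implementation. `Box` values stand in for Faster R-CNN detections. The hypothetical `spatial_label` function replaces the pre-trained relation classifiers with simple geometric rules based on containment and IoU. The labels "inside", "covers" and "overlaps" and the 0.5 IoU threshold are illustrative choices. Pairs that receive no label are pruned from the explicit graph, while the implicit graph keeps every ordered pair of distinct objects.

```python
from dataclasses import dataclass
from itertools import permutations
from typing import Dict, List, Optional, Tuple


@dataclass
class Box:
    """Axis-aligned bounding box: (x1, y1) top-left, (x2, y2) bottom-right."""
    x1: float
    y1: float
    x2: float
    y2: float

    def area(self) -> float:
        return max(0.0, self.x2 - self.x1) * max(0.0, self.y2 - self.y1)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two boxes."""
    inter_w = max(0.0, min(a.x2, b.x2) - max(a.x1, b.x1))
    inter_h = max(0.0, min(a.y2, b.y2) - max(a.y1, b.y1))
    inter = inter_w * inter_h
    union = a.area() + b.area() - inter
    return inter / union if union > 0 else 0.0


def contains(outer: Box, inner: Box) -> bool:
    return (outer.x1 <= inner.x1 and outer.y1 <= inner.y1
            and inner.x2 <= outer.x2 and inner.y2 <= outer.y2)


def spatial_label(a: Box, b: Box, overlap_thresh: float = 0.5) -> Optional[str]:
    """Hypothetical rule-based stand-in for a relation classifier.

    Returns the relation of object a to object b, or None for "no relation".
    """
    if contains(b, a):
        return "inside"
    if contains(a, b):
        return "covers"
    if iou(a, b) >= overlap_thresh:
        return "overlaps"
    return None


def explicit_graph(boxes: List[Box]) -> Dict[Tuple[int, int], str]:
    """Pruned, labeled graph: keep only ordered pairs that receive a label."""
    edges: Dict[Tuple[int, int], str] = {}
    for i, j in permutations(range(len(boxes)), 2):
        label = spatial_label(boxes[i], boxes[j])
        if label is not None:
            edges[(i, j)] = label
    return edges


def implicit_graph(num_objects: int) -> List[Tuple[int, int]]:
    """Fully connected, unlabeled graph over all ordered pairs of distinct objects."""
    return list(permutations(range(num_objects), 2))


boxes = [Box(0, 0, 10, 10), Box(2, 2, 5, 5), Box(20, 20, 30, 30)]
print(explicit_graph(boxes))   # {(0, 1): 'covers', (1, 0): 'inside'}
print(len(implicit_graph(3)))  # 6
```

The edges are ordered pairs because explicit relations are directional. If object 1 is inside object 0, then object 0 covers object 1.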

RaAM is designed to extend existing VQA architectures rather than replace them, enhancing their performance by infusing richer relational information.
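One way to picture this plug-in design is a relation encoder placed between the detector features and an unchanged fusion module. The sketch below makes several assumptions:

- Region and question vectors share a dimension.
- The two fusion functions are hypothetical toys, far simpler than BUTD, MUTAN or BAN.
- `toy_relation_encoder` is a hypothetical placeholder for a real relation encoder, and it ignores the question.

```python
from typing import Callable, List

Vector = List[float]
Encoder = Callable[[List[Vector], Vector], List[Vector]]
Fusion = Callable[[List[Vector], Vector], Vector]


def identity_encoder(regions: List[Vector], question: Vector) -> List[Vector]:
    """Baseline: pass detector region features through unchanged."""
    return [list(r) for r in regions]


def toy_relation_encoder(regions: List[Vector], question: Vector) -> List[Vector]:
    """Hypothetical relation encoder: add to each region the mean of all other
    regions (uniform weights over a fully connected graph). Ignores the question."""
    n = len(regions)
    if n < 2:
        return identity_encoder(regions, question)
    totals = [sum(col) for col in zip(*regions)]
    return [[x + (t - x) / (n - 1) for x, t in zip(r, totals)] for r in regions]


def mean_product_fusion(regions: List[Vector], question: Vector) -> Vector:
    """Toy fusion: mean-pool regions, then elementwise product with the question."""
    pooled = [sum(col) / len(regions) for col in zip(*regions)]
    return [p * q for p, q in zip(pooled, question)]


def max_sum_fusion(regions: List[Vector], question: Vector) -> Vector:
    """Toy fusion: max-pool regions, then elementwise sum with the question."""
    pooled = [max(col) for col in zip(*regions)]
    return [p + q for p, q in zip(pooled, question)]


def vqa_forward(regions: List[Vector], question: Vector,
                fusion: Fusion, encoder: Encoder = identity_encoder) -> Vector:
    """The encoder keeps one vector per region, so any fusion still applies."""
    return fusion(encoder(regions, question), question)


regions = [[1.0, 2.0], [3.0, 4.0]]
question = [1.0, 1.0]
for fusion in (mean_product_fusion, max_sum_fusion):
    print(fusion.__name__,
          vqa_forward(regions, question, fusion),
          vqa_forward(regions, question, fusion, toy_relation_encoder))
```

The only contract here is that the encoder returns one feature vector per region with an unchanged shape. Because of that, the downstream fusion code does not need to change when the encoder is swapped in.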

Methodology

The method encodes the image representation through both explicit and implicit relations:

- Explicit Relation Encoding: The method builds pruned graphs whose edges carry spatial relations (e.g., "on" or "inside") or semantic relations (e.g., "holding" or "sitting on") between objects. A Graph Attention Network (GAT) then attends over these relations, assigning each one a weight that depends on the question.

- Implicit Relation Encoding: Here the graph is fully connected, and attention weights between objects are computed from their visual and bounding-box features. The question embedding is concatenated to the object features, so the learned implicit relations adapt to the language cues in each question.
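Both encoders rely on question-aware attention over a graph. The sketch below shows a single question-adaptive attention step in plain Python. It is an illustration under assumptions and not the paper's formulation.

- It uses single-head scaled dot-product scoring, whereas the original GAT uses an additive score with a LeakyReLU.
- `w_query`, `w_key` and `w_value` are illustrative projection matrices.
- The optional `edge_bias` is a hypothetical hook. It could carry a per-relation-label term for the explicit graph or a bounding-box geometry term for the implicit graph.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(w: Matrix, x: Sequence[float]) -> Vector:
    """Multiply matrix w (rows x cols) by vector x (length cols)."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def softmax(logits: Sequence[float]) -> Vector:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def question_adaptive_attention(
    node_feats: List[Vector],
    question: Vector,
    edges: List[Tuple[int, int]],
    w_query: Matrix,
    w_key: Matrix,
    w_value: Matrix,
    edge_bias: Optional[Dict[Tuple[int, int], float]] = None,
) -> List[Vector]:
    """One single-head attention step over a graph.

    edges holds (i, j) pairs meaning "node i attends to neighbor j".
    edge_bias (hypothetical) adds a per-edge term to the logits; adding
    log(w) is equivalent to multiplying that edge's unnormalized weight by w.
    """
    bias = edge_bias or {}
    # Concatenate the question embedding to every object feature.
    h = [list(v) + list(question) for v in node_feats]
    queries = [matvec(w_query, x) for x in h]
    keys = [matvec(w_key, x) for x in h]
    values = [matvec(w_value, x) for x in h]
    scale = math.sqrt(len(queries[0]))

    neighbors: Dict[int, List[int]] = {i: [] for i in range(len(h))}
    for i, j in edges:
        neighbors[i].append(j)

    out: List[Vector] = []
    for i, nbrs in neighbors.items():
        if not nbrs:
            out.append(values[i])  # assumption: isolated nodes keep their own projection
            continue
        logits = [
            sum(a * b for a, b in zip(queries[i], keys[j])) / scale + bias.get((i, j), 0.0)
            for j in nbrs
        ]
        alpha = softmax(logits)  # attention weights over node i's neighbors
        out.append([
            sum(a * values[j][c] for a, j in zip(alpha, nbrs))
            for c in range(len(values[i]))
        ])
    return out


identity = [[1.0 if r == c else 0.0 for c in range(3)] for r in range(3)]
feats = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
edges = [(0, 1), (0, 2)]  # node 0 attends to nodes 1 and 2
# A large bias on edge (0, 2) makes node 0 attend almost entirely to node 2.
out = question_adaptive_attention(feats, [0.5], edges, identity, identity, identity,
                                  edge_bias={(0, 2): 50.0})
print(out)
```

The edge list controls how far each object can attend:

- Passing the pruned explicit edges restricts each object to its labeled neighbors.
- Passing a fully connected edge list lets every object attend to every other object.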

The model is evaluated on VQA 2.0 and on the more challenging VQA-CP v2, whose training and test splits have different answer distributions. The results show that RaAM is compatible with state-of-the-art VQA systems and improves them, including on VQA-CP v2.

Empirical Evaluation

The empirical results support RaAM's effectiveness, with accuracy improvements reported when it is added to existing models such as BUTD, MUTAN and BAN. The reported gains include higher accuracy for the implicit-relation variant and further improvements when explicit and implicit relations are combined.
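This summary does not say how the explicit and implicit relation models are combined. One simple option is late fusion, sketched here purely as an assumption: train a separate model for each relation type and take a weighted sum of their answer distributions. The model names (`spatial`, `semantic`, `implicit`), the probabilities and the weights are all hypothetical. In practice such weights would be tuned on held-out data.

```python
import math
from typing import Dict

Distribution = Dict[str, float]


def combine_answer_distributions(
    dists: Dict[str, Distribution], weights: Dict[str, float]
) -> Distribution:
    """Weighted sum of per-model answer distributions (late fusion).

    dists maps a model name to {answer: probability}; weights maps the same
    names to non-negative weights that must sum to 1.
    """
    if set(dists) != set(weights):
        raise ValueError("dists and weights must cover the same models")
    if any(w < 0 for w in weights.values()) or not math.isclose(sum(weights.values()), 1.0):
        raise ValueError("weights must be non-negative and sum to 1")
    combined: Distribution = {}
    for name, dist in dists.items():
        for answer, p in dist.items():
            combined[answer] = combined.get(answer, 0.0) + weights[name] * p
    return combined


def predict(combined: Distribution) -> str:
    """Return the answer with the highest combined probability."""
    return max(combined, key=combined.get)


dists = {
    "spatial": {"yes": 0.6, "no": 0.4},
    "semantic": {"yes": 0.3, "no": 0.7},
    "implicit": {"yes": 0.55, "no": 0.45},
}
weights = {"spatial": 0.3, "semantic": 0.3, "implicit": 0.4}
combined = combine_answer_distributions(dists, weights)
print(predict(combined))  # no  (yes ~ 0.49, no ~ 0.51)
```

Late fusion leaves each relation-specific model untouched. The trade-off is that every model must be run at inference time.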

Theoretical and Practical Implications

Theoretically, the RaAM framework highlights the value of integrating multiple types of relations, which narrows the semantic gap between the visual and textual modalities. Practically, it offers a versatile add-on for existing VQA systems that adapts to different multimodal fusion methods without requiring wholesale architectural changes.

Future Directions

Future work could explore more refined multimodal fusion strategies and better ways to balance the use of each relation type across different question types. In addition, visualized attention maps like those shown in the paper could give deeper insight into how relations influence the model's decisions.

Overall, the paper contributes an approach to VQA that builds relational understanding into image representations, improving their semantic alignment with the textual question.