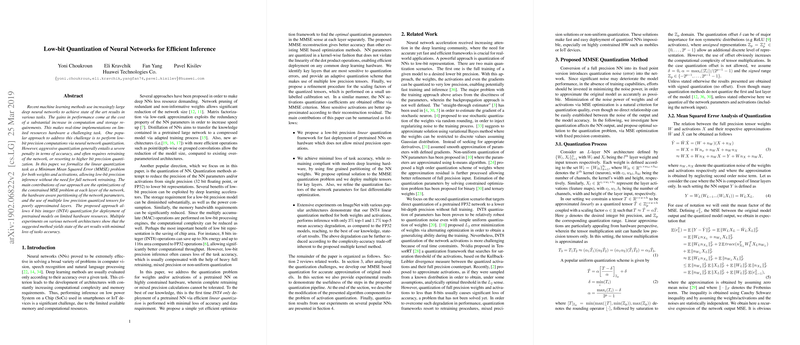

Low-bit Quantization of Neural Networks for Efficient Inference

The paper under review, "Low-bit Quantization of Neural Networks for Efficient Inference," addresses a pressing problem in neural network deployment: reducing computational and memory demands without significantly sacrificing model accuracy. As networks grow in size and complexity, efficient inference on devices with limited hardware becomes increasingly important. The authors formalize linear quantization of both weights and activations as a Minimum Mean Squared Error (MMSE) problem, which enables low-bit deployment without requiring full retraining of the network.
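To make the MMSE formulation concrete, the following is a minimal sketch for a symmetric, signed k-bit uniform quantizer. It chooses the scale that minimizes the mean squared error between a tensor and its quantized version. The grid search over clipping thresholds and the function names `quantize` and `mmse_scale` are illustrative assumptions, not the authors' solver.

```python
import numpy as np


def quantize(x, scale, bits):
    """Symmetric uniform quantizer: round x / scale to a signed integer grid,
    clip to the representable range, and return the dequantized values."""
    qmin, qmax = -(2 ** (bits - 1)), 2 ** (bits - 1) - 1
    q = np.clip(np.round(x / scale), qmin, qmax)
    return scale * q


def mmse_scale(x, bits, num_candidates=100):
    """Pick the scale that minimizes the mean squared quantization error.

    Candidate clipping thresholds run from 1% to 100% of max|x|. The last
    candidate is plain max-abs scaling, so the result is never worse than it.
    """
    max_abs = float(np.max(np.abs(x)))
    if max_abs == 0.0:
        return 1.0  # all-zero tensor: any scale reproduces it exactly
    qmax = 2 ** (bits - 1) - 1
    best_scale, best_mse = max_abs / qmax, np.inf
    for frac in np.linspace(0.01, 1.0, num_candidates):
        scale = frac * max_abs / qmax
        mse = np.mean((x - quantize(x, scale, bits)) ** 2)
        if mse < best_mse:
            best_scale, best_mse = scale, mse
    return best_scale


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    w = rng.standard_normal(10_000)  # stand-in for a layer's weights
    s_max = np.max(np.abs(w)) / 7    # max-abs scale for INT4 (qmax = 7)
    s_opt = mmse_scale(w, bits=4)
    print("max-abs MSE:", np.mean((w - quantize(w, s_max, 4)) ** 2))
    print("MMSE    MSE:", np.mean((w - quantize(w, s_opt, 4)) ** 2))
```

Clipping a few outliers buys a finer step size for the bulk of the distribution. This is why the MMSE scale is typically smaller than the max-abs scale at low bit widths.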

The framework rests on several core contributions. First, the authors solve a constrained MSE problem at each layer, choosing the quantization parameters that minimize the error between the full-precision and quantized tensors rather than relying on a fixed rule. Second, they use kernel-wise quantization, which quantizes each kernel separately while preserving the linearity needed for efficient matrix operations: because a per-kernel scale can be factored out of each dot product, the bulk of the computation remains a plain integer matrix multiplication that maps well onto common hardware such as systolic arrays. This matters because it allows the quantized models to run on low-power devices such as IoT systems and smartphones.
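The sketch below illustrates why kernel-wise scaling stays hardware friendly. If row i of a weight matrix is approximated as s_i times an integer row Q_i, and the input as s_x times an integer vector q_x, then each output is s_i * s_x * (Q_i · q_x), so the expensive part is an all-integer product. It assumes symmetric max-abs scales and treats a layer as a matrix-vector product. The helper names are hypothetical, and the paper selects its scales by MMSE rather than max-abs.

```python
import numpy as np


def quantize_rows(W, bits):
    """Kernel-wise quantization: each row of W (one output kernel) gets its own
    max-abs scale. Returns integer codes Q and scales s with W ≈ s[:, None] * Q."""
    qmax = 2 ** (bits - 1) - 1
    max_abs = np.max(np.abs(W), axis=1)
    s = np.where(max_abs > 0, max_abs / qmax, 1.0)
    Q = np.clip(np.round(W / s[:, None]), -qmax - 1, qmax).astype(np.int32)
    return Q, s


def quantize_tensor(x, bits):
    """Per-tensor quantization with a single max-abs scale (used here for activations)."""
    qmax = 2 ** (bits - 1) - 1
    max_abs = float(np.max(np.abs(x)))
    s = max_abs / qmax if max_abs > 0 else 1.0
    q = np.clip(np.round(x / s), -qmax - 1, qmax).astype(np.int32)
    return q, s


rng = np.random.default_rng(0)
# 64 kernels whose ranges differ by up to 20x, the case where one shared scale hurts.
W = rng.standard_normal((64, 128)) * rng.uniform(0.1, 2.0, size=(64, 1))
x = rng.standard_normal(128)

Q, s_w = quantize_rows(W, bits=4)
q_x, s_x = quantize_tensor(x, bits=4)

acc = Q @ q_x            # pure integer matrix-vector product (the hardware-friendly part)
y_hat = s_w * s_x * acc  # one floating-point rescale per output kernel
y_ref = W @ x            # full-precision reference
```

Comparing `y_hat` with `y_ref` gives the end-to-end error. With per-kernel scales, kernels with a small range no longer inherit the coarse step size dictated by the largest kernel.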

A standout element of the framework is its use of multiple low-precision quantized tensors for layers that a single low-bit tensor approximates poorly. Representing such a layer with more than one quantized tensor lowers its quantization error and mitigates the accuracy loss that usually accompanies aggressive quantization. For the most challenging layers, this takes the form of a dual quantization strategy, in which a second low-bit tensor refines the approximation provided by the first.
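One simple way to realize "multiple low-precision tensors" is residual quantization: quantize the tensor, then quantize what the first pass missed with a second low-bit tensor. The sketch below does this with max-abs scales. It is an illustrative assumption, not necessarily the exact decomposition the authors use, and the function names are hypothetical.

```python
import numpy as np


def quantize_tensor(x, bits):
    """Per-tensor symmetric quantization with a max-abs scale; returns (codes, scale)."""
    qmax = 2 ** (bits - 1) - 1
    max_abs = float(np.max(np.abs(x)))
    s = max_abs / qmax if max_abs > 0 else 1.0
    q = np.clip(np.round(x / s), -qmax - 1, qmax).astype(np.int32)
    return q, s


def dual_quantize(x, bits):
    """Approximate x as s1 * q1 + s2 * q2, where the second low-bit tensor
    encodes the residual left by the first (a residual-quantization sketch)."""
    q1, s1 = quantize_tensor(x, bits)
    residual = x - s1 * q1
    q2, s2 = quantize_tensor(residual, bits)
    return (q1, s1), (q2, s2)


rng = np.random.default_rng(0)
w = rng.standard_normal(4096)  # stand-in for a hard-to-quantize layer

(q1, s1), (q2, s2) = dual_quantize(w, bits=4)
single = s1 * q1
dual = s1 * q1 + s2 * q2
print("single INT4 MSE:", np.mean((w - single) ** 2))
print("dual   INT4 MSE:", np.mean((w - dual) ** 2))
```

With max-abs scaling the residual never exceeds half a step of the first pass, so the second tensor can use a much finer scale. The cost is a second integer product for the layer, since w · x ≈ s1 (q1 · x) + s2 (q2 · x), which is why such a scheme is best reserved for the layers that need it.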

The experiments demonstrate the approach across a range of architectures. With INT4 quantization, the models lost on average only 3% top-1 and 1.7% top-5 accuracy relative to their full-precision counterparts. These results show that aggressive quantization can preserve strong accuracy, and they compare favorably with existing methods that rely heavily on retraining or on higher precision.

These findings matter for both practical deployment and theoretical understanding. For deployment, the approach reduces memory and computational overhead on edge devices, improving speed and efficiency without a substantial loss of accuracy. Theoretically, it deepens the field's understanding of how quantization affects neural networks and challenges assumptions about how far bit widths can be lowered.

Future directions suggested by this work include extending quantization to other network components and processes, such as gradients during training. Exploring adaptive quantization thresholds or better distributional assumptions for activations could also improve the balance between quantization aggressiveness and accuracy retention.

In conclusion, this paper presents a comprehensive and technically sound solution that meets the current and future needs of deploying neural networks on constrained hardware. Its insights lay a foundation for future work on optimizing quantization thresholds and bringing more of a network's functionality under the low-bit framework. The research community can use these findings to extend the lifespan of existing architectures and to strengthen neural inference in edge and mobile environments.