Spider: A Large-Scale Human-Labeled Dataset for Complex and Cross-Domain Semantic Parsing and Text-to-SQL Task

The paper presents Spider, a large-scale, human-labeled dataset for complex, cross-domain semantic parsing and text-to-SQL. Earlier datasets mostly focus on a single database and simple queries. Spider instead spans many databases and includes much more challenging SQL. This makes it a key resource for evaluating and improving models that must generalize to unseen database schemas and handle complex logical structure.

Dataset Composition and Annotation

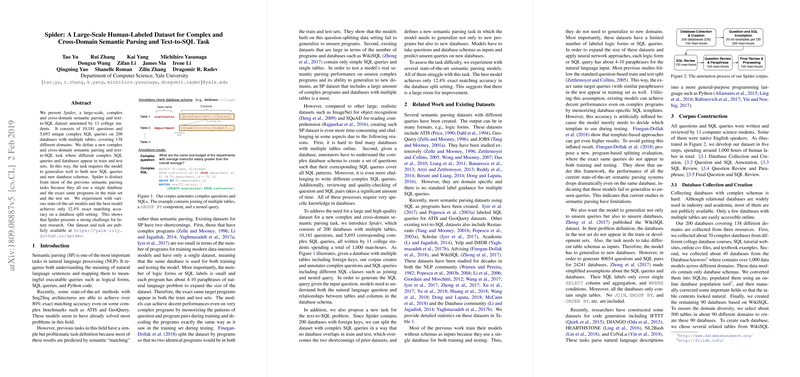

Spider contains 10,181 questions and 5,693 unique SQL queries over 200 databases, each with multiple tables. These databases cover 138 distinct domains, which gives the dataset broad variability and makes it a demanding test for semantic parsing models. The queries cover a wide range of SQL components, including JOIN, GROUP BY, ORDER BY, and nested subqueries. Earlier datasets such as WikiSQL largely lack these elements, because each WikiSQL question targets a single table and its SQL query involves no joins.
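To make "complex SQL" concrete, the following minimal sketch builds a tiny in-memory SQLite database and runs one query that combines a JOIN, a nested subquery, and GROUP BY. The `singer`/`concert` schema and its rows are hypothetical, chosen only for illustration. They are not taken from Spider.

```python
import sqlite3

# Hypothetical two-table schema (illustration only, not a real Spider database).
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE singer (singer_id INTEGER PRIMARY KEY, name TEXT, country TEXT, age INTEGER);
CREATE TABLE concert (concert_id INTEGER PRIMARY KEY,
                      singer_id INTEGER REFERENCES singer(singer_id),
                      year INTEGER);
INSERT INTO singer VALUES (1, 'Ana', 'France', 30), (2, 'Ben', 'France', 45),
                          (3, 'Caro', 'Spain', 25), (4, 'Dan', 'Spain', 50);
INSERT INTO concert VALUES (1, 1, 2014), (2, 2, 2014), (3, 2, 2015),
                           (4, 3, 2015), (5, 4, 2015);
""")

# "For each country, how many concerts were given by singers older than
# the average singer?"  JOIN + nested subquery + GROUP BY in one query.
query = """
SELECT s.country, COUNT(*)
FROM singer AS s JOIN concert AS c ON s.singer_id = c.singer_id
WHERE s.age > (SELECT AVG(age) FROM singer)
GROUP BY s.country
ORDER BY s.country
"""
rows = conn.execute(query).fetchall()
print(rows)  # [('France', 2), ('Spain', 1)]
```

A single-table, join-free query of the kind WikiSQL targets could not express this question. A model would need to link "singers" and "concerts" to two tables and compose three clauses correctly.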

The dataset was annotated by 11 college students over approximately 1,000 man-hours. The annotation process emphasized coverage of SQL patterns, consistency, and clarity of the questions. Each question is paired with a SQL query, and many of these queries are logically complex. Models must therefore not only understand natural language but also map it to an accurate SQL representation.

Task Definition and Assumptions

Spider defines a new semantic parsing and text-to-SQL task that evaluates how well models generalize to both new SQL patterns and unseen database schemas. The databases used for testing do not appear in training, so a model cannot succeed by memorizing particular schemas. This setting mirrors real-world applications, in which database structures and queries vary widely.
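The key idea behind evaluating on unseen schemas is to split by database rather than by example. The sketch below shows that principle. It assumes each example is a dict with a `db_id` key (the field name used in Spider's released JSON files). The function name `split_by_database` and its parameters are hypothetical. Spider ships its own fixed splits, so this code only illustrates why no database may appear on both sides.

```python
import random
from collections import defaultdict


def split_by_database(examples, test_fraction=0.2, seed=0):
    """Hypothetical helper: split so that no db_id appears in both train and test."""
    by_db = defaultdict(list)
    for ex in examples:
        by_db[ex["db_id"]].append(ex)

    # Shuffle database ids (not individual examples) deterministically.
    db_ids = sorted(by_db)
    random.Random(seed).shuffle(db_ids)

    n_test = max(1, int(len(db_ids) * test_fraction))
    test_dbs = set(db_ids[:n_test])

    train = [ex for db in db_ids if db not in test_dbs for ex in by_db[db]]
    test = [ex for db in db_ids if db in test_dbs for ex in by_db[db]]
    return train, test
```

A random per-example split would let the same schema leak into both sets, which measures generalization to new questions only, not to new databases.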

Several assumptions were made for the task:

- Value prediction is not evaluated, so the task focuses on generating the correct SQL structure.

- Queries requiring extensive common sense or external knowledge were omitted.

- Table and column names were kept clear and explicit to avoid ambiguous interpretations.

Together, these choices keep the task focused on the core semantic parsing abilities of models.
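One way to picture "value prediction is not evaluated" is to mask literal values before comparing queries, so that two queries differing only in their constants count as structurally identical. The regex-based `mask_values` function below is a hypothetical sketch under that assumption, not the procedure used by Spider's official evaluation. It also masks numbers such as `LIMIT 3`, which a real evaluator might treat differently.

```python
import re

# Hypothetical pattern: a single-quoted string (with '' escapes) or a bare
# numeric literal. \b keeps digits inside identifiers such as t1 untouched.
_VALUE = re.compile(r"'(?:[^']|'')*'|\b\d+(?:\.\d+)?\b")


def mask_values(sql: str) -> str:
    """Replace literal values with a placeholder so only the structure remains."""
    return _VALUE.sub("VALUE", sql)


print(mask_values("SELECT name FROM singer WHERE age > 30 AND country = 'France'"))
# SELECT name FROM singer WHERE age > VALUE AND country = VALUE
```

After masking, a prediction that uses `age > 41` and `'Spain'` has the same structure as a gold query that uses `age > 30` and `'France'`.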

Evaluation Metrics

The evaluation framework includes three metrics: Component Matching, Exact Matching, and Execution Accuracy.

- Component Matching computes exact-match F1 scores for individual SQL components such as SELECT, WHERE, and GROUP BY.

- Exact Matching checks whether the predicted query as a whole is correct, that is, whether every one of its components matches the gold query.

- Execution Accuracy executes the predicted and gold queries and compares their outputs. It complements the other metrics by crediting predictions that are logically correct even when their syntactic structure differs from the gold query.
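The three metrics can be sketched in a few lines. The code below assumes a query has already been decomposed into a dict that maps each component name (for example `"select"` or `"where"`) to a set of hypothetical sub-items. It makes no attempt to parse SQL. Comparing sets makes the matching insensitive to the order of items within a clause. The execution check compares result rows as multisets, which is a simplification: it ignores row order even for queries with ORDER BY. All function names here are illustrative, not Spider's official evaluation code.

```python
import sqlite3
from collections import Counter


def component_f1(pred: set, gold: set) -> float:
    """F1 between predicted and gold sub-items of one SQL component."""
    if not pred and not gold:
        return 1.0  # component absent from both queries
    overlap = len(pred & gold)
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(gold)
    return 2 * precision * recall / (precision + recall)


def exact_match(pred: dict, gold: dict) -> bool:
    """Correct only if every component matches the gold query as a set."""
    keys = pred.keys() | gold.keys()
    return all(pred.get(k, set()) == gold.get(k, set()) for k in keys)


def execution_match(conn, pred_sql: str, gold_sql: str) -> bool:
    """Run both queries and compare result multisets (row order ignored)."""
    try:
        pred_rows = conn.execute(pred_sql).fetchall()
    except sqlite3.Error:
        return False  # an invalid prediction cannot be execution-correct
    gold_rows = conn.execute(gold_sql).fetchall()
    return Counter(pred_rows) == Counter(gold_rows)
```

For example, with the gold SELECT set `{"name"}` and a predicted set `{"name", "age"}`, precision is 1/2 and recall is 1, giving an F1 of 2/3, while `exact_match` fails. Conversely, `x > 1` and `x >= 2` over an integer column are structurally different but return the same rows. Execution accuracy accepts them where Exact Matching would not. The flip side is that two different queries can coincidentally produce the same output on a particular database, so execution results can overestimate correctness.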

Query difficulty is assigned by predefined criteria based on the number of SQL components, selections, and conditions. These criteria place each query in one of four levels: easy, medium, hard, or extra hard.
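The general idea of count-based difficulty levels can be shown with a small heuristic. The keyword list, the weighting of nested SELECTs, and the score thresholds below are all hypothetical and chosen only for illustration. They are not Spider's actual hardness criteria, which the paper defines more precisely.

```python
import re

# Hypothetical keyword list and thresholds; NOT Spider's official criteria.
_COMPONENTS = ("JOIN", "GROUP BY", "ORDER BY", "HAVING", "LIMIT",
               "INTERSECT", "UNION", "EXCEPT")


def difficulty(sql: str) -> str:
    """Bucket a query by counting clauses, conditions, and extra SELECTs."""
    s = " ".join(sql.upper().split())  # normalize case and whitespace
    n_components = sum(s.count(kw) for kw in _COMPONENTS)
    n_nested = s.count("SELECT") - 1  # subqueries or set-operation branches
    n_conditions = s.count("WHERE") + len(re.findall(r"\b(?:AND|OR)\b", s))
    score = n_components + n_conditions + 2 * n_nested
    if score <= 1:
        return "easy"
    if score <= 3:
        return "medium"
    if score <= 5:
        return "hard"
    return "extra hard"


print(difficulty("SELECT count(*) FROM singer"))                           # easy
print(difficulty("SELECT name FROM singer WHERE age > 30 ORDER BY age"))  # medium
```

Weighting nested SELECTs more heavily reflects the intuition that composing subqueries is harder than adding one more clause to a flat query.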

Baseline Model Experiments

The authors evaluated several then state-of-the-art models on Spider, including Seq2Seq variants, SQLNet, and TypeSQL. The results highlight how challenging the dataset is. For instance, TypeSQL performed far worse when it had to generalize to new databases, reaching only 8.2% exact matching accuracy in the database split setting. This result reflects the core difficulty of the task: models must interpret unseen database schemas and reason about the relationships among their tables.

Implications and Future Directions

Spider sets a new benchmark for semantic parsing and text-to-SQL, emphasizing cross-domain generalization and complex logical forms. The low scores of existing models leave substantial room for improvement. They point future research toward approaches that better understand database schemas, handle nested queries robustly, and generalize more reliably to new domains.

Moreover, Spider's broad database coverage and diverse queries make it a valuable resource for both training and evaluation. They are likely to encourage advances in model architectures and training methods for complex and varied SQL generation.

Conclusion

Spider raises the bar for semantic parsing and text-to-SQL. It addresses the limitations of previous datasets and proposes rigorous evaluation metrics. As a result, it provides a solid foundation for future research on model understanding and robustness across diverse and complex database environments.