Analysis of "TVQA: Localized, Compositional Video Question Answering"

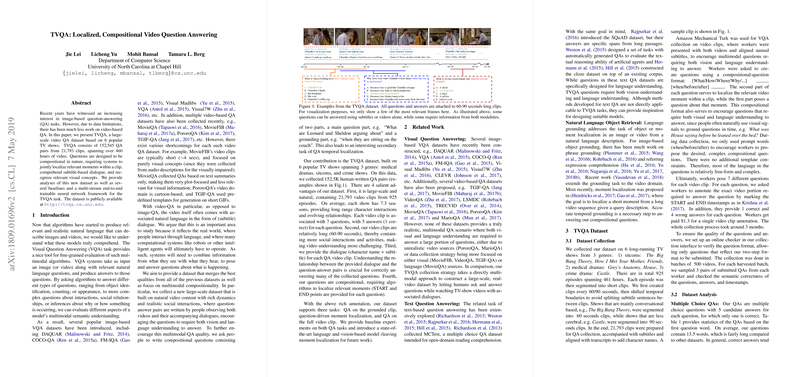

This paper addresses multimodal video question answering (QA), extending a research landscape that has predominantly focused on image-based QA. The authors introduce TVQA, a large-scale video QA dataset comprising 152,545 QA pairs from 21,793 video clips (about seven questions per clip), gathered from six popular TV shows. The dataset spans diverse genres and provides rich multimodal data, including subtitles and visual content, which pose unique challenges and opportunities for developing advanced QA systems.

Contributions and Methodology

The primary contribution of the paper is the TVQA dataset itself, which aims to overcome the limitations of previous video-based QA datasets. Its large scale, diversity of genres, and combination of visual content with subtitle dialogue set it apart. The authors also introduce a structured question design that requires models to jointly localize the relevant video moments and understand both the dialogue and the visual concepts.
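To make the structured question design concrete, the sketch below splits a question into a main part and a localization part. It assumes the two-part template "[What/How/Where/Why/...] ___ [when/before/after] ___" as recalled from the TVQA paper; the template is not spelled out in this summary, and the names `ComposedQuestion` and `split_question` are hypothetical helpers, not part of any released TVQA code.

```python
import re
from typing import NamedTuple, Optional


class ComposedQuestion(NamedTuple):
    main: str                     # what is being asked
    connector: Optional[str]      # "when", "before", or "after" (None if absent)
    localization: Optional[str]   # the moment in the clip the question refers to


# Requires whitespace before the connector, so a question that merely
# starts with "When ..." is not split at its first word.
_CONNECTOR = re.compile(r"\s+(when|before|after)\s+", re.IGNORECASE)


def split_question(question: str) -> ComposedQuestion:
    """Split on the first temporal connector; an assumed heuristic, not the authors' parser."""
    match = _CONNECTOR.search(question)
    if match is None:
        return ComposedQuestion(question.strip(), None, None)
    return ComposedQuestion(
        main=question[:match.start()].strip(),
        connector=match.group(1).lower(),
        localization=question[match.end():].strip().rstrip("?").strip(),
    )


# Illustrative question in the style of the dataset.
parts = split_question(
    "What is Sheldon holding when he is talking to Howard about the sword?"
)
```

Here `parts.main` holds the part to be answered and `parts.localization` describes the moment a model would need to find in the clip first.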

The dataset facilitates exploration into three main tasks:

- Answering questions based on localized video clips.

- Temporal localization driven by questions.

- Answering questions using the entire video clip context.
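For the temporal localization task, a predicted span has to be compared with an annotated one. A common choice is temporal intersection-over-union (IoU); the summary does not say which metric the authors use, so the sketch below is an assumption, and the spans in it are made up for illustration.

```python
def temporal_iou(pred, gold):
    """Intersection-over-union of two (start, end) spans, in seconds."""
    pred_start, pred_end = pred
    gold_start, gold_end = gold
    # Overlap is zero when the spans do not intersect.
    intersection = max(0.0, min(pred_end, gold_end) - max(pred_start, gold_start))
    union = (pred_end - pred_start) + (gold_end - gold_start) - intersection
    return intersection / union if union > 0 else 0.0


# Hypothetical spans: the model predicts 10s-20s, the annotation says 15s-25s.
score = temporal_iou((10.0, 20.0), (15.0, 25.0))
```

The two spans overlap for 5 seconds out of a combined 15, so `score` is one third. Identical spans score 1.0 and disjoint spans score 0.0.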

The authors propose a multi-stream neural network that integrates several data modalities (regional visual features, visual concept features, and subtitles), each encoded with a bidirectional LSTM (bi-LSTM). The framework models the interplay between video content and natural language in order to predict the answer.
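The final step of a multi-stream design can be sketched without the encoders: each stream yields one score per candidate answer, and the streams are then combined. The sketch below assumes the combination is a plain sum followed by a softmax, and assumes five candidate answers per question. The bi-LSTM encoders are not implemented, the stream names are labels chosen for illustration, and the scores are made-up placeholders.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_streams(stream_scores):
    """Sum per-answer scores across streams; return (probabilities, predicted index)."""
    num_answers = len(next(iter(stream_scores.values())))
    fused = [0.0] * num_answers
    for name, scores in stream_scores.items():
        if len(scores) != num_answers:
            raise ValueError(f"stream {name!r} has {len(scores)} scores, expected {num_answers}")
        for i, s in enumerate(scores):
            fused[i] += s
    probs = softmax(fused)
    prediction = max(range(num_answers), key=probs.__getitem__)
    return probs, prediction


# Placeholder scores for one question (not real model outputs).
stream_scores = {
    "regional_visual": [0.2, 1.1, 0.3, -0.5, 0.0],
    "visual_concept": [0.1, 0.9, 1.4, -0.2, 0.1],
    "subtitle": [0.0, 1.5, 0.2, 0.3, -0.1],
}
probs, prediction = fuse_streams(stream_scores)
```

With these placeholder scores the second answer (index 1) has the largest summed score. Summing keeps the streams independent, so a modality can be dropped by removing its entry from the dictionary.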

Results and Baseline Comparisons

The paper reports results for several baselines, including Retrieval, Nearest Neighbor Search (NNS), and the proposed multi-stream neural network. Notably, human performance is highest when both video and subtitles are available and remains well above that of the models, underlining how challenging the task is for machine understanding.
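The idea behind a nearest-neighbor baseline can be illustrated in a few lines: embed the context and each candidate answer, then pick the answer closest to the context. The sketch below uses bag-of-words counts and cosine similarity as a stand-in for whatever sentence representations the authors use; it is not their implementation, and the context and answers are invented examples.

```python
import math
from collections import Counter


def bag_of_words(text):
    """Lowercased whitespace tokens with their counts."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[word] * b[word] for word in a if word in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def nearest_answer(context, answers):
    """Return the index of the answer most similar to the context, plus all similarities."""
    ctx = bag_of_words(context)
    sims = [cosine(ctx, bag_of_words(answer)) for answer in answers]
    best = max(range(len(answers)), key=sims.__getitem__)
    return best, sims


# Invented subtitle line and candidate answers.
context = "she put the coffee cup on the table and left the room"
answers = [
    "he opened the window",
    "she put the cup on the table",
    "they ordered pizza",
    "he was reading a book",
    "she called her sister",
]
best, sims = nearest_answer(context, answers)
```

Such a baseline relies on word overlap alone, which is one reason it serves as a lower bound rather than a competitive system.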

Numerically, the proposed model achieves its highest accuracy when all modalities (video and subtitles) are combined, which shows the advantage of using the full context. In particular, the visual concept features prove especially useful, in line with the expectation that integrated multimodal processing matters for this task.

Implications and Future Directions

The TVQA dataset opens new avenues for research into AI systems capable of complex inferential reasoning across modalities. It encourages the development of models with a more human-like understanding of the social dynamics, interactions, and narrative structures inherent in TV show data. Furthermore, the results suggest that better temporal localization and richer interaction between the multimodal data streams could narrow the performance gap between machine models and human benchmarks.

The dataset's availability also provides a robust platform for investigating more nuanced aspects of QA models, including contextual and linguistic reasoning, object and scene recognition, and enhanced temporal understanding.

Anticipated future directions include exploring better temporal cues and integrating knowledge bases to improve reasoning capabilities and advance the frontier of AI in real-world environments. Overall, the TVQA dataset represents a significant step forward in video understanding and QA research, promising substantial contributions to both theoretical insights and practical applications in artificial intelligence.