Heterogeneous Memory Enhanced Multimodal Attention Model for Video Question Answering

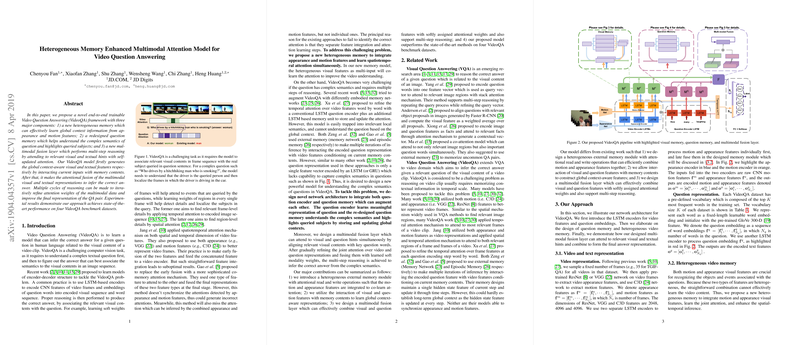

The paper introduces a framework for Video Question Answering (VideoQA), a task that requires modeling complex semantics in both the textual and visual modalities. The proposed architecture has three main components: a heterogeneous memory that integrates visual features, a redesigned question memory that handles complex linguistic content, and a multimodal fusion layer that performs iterative reasoning.

At the core of the approach is a heterogeneous memory that jointly attends to motion and appearance features. It targets a shortcoming of earlier methods, which fused these features either too early or too late in the processing pipeline. The paper criticizes, for example, the early fusion used in models such as ST-VQA, arguing that it yields suboptimal performance because attention over the two feature types is not synchronized. The heterogeneous memory instead accepts multiple input types and uses attentional read and write operations to learn joint spatial-temporal attention.
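The read and write operations described above can be illustrated with a minimal sketch. It is not the paper's formulation: the class name `HeterogeneousMemory`, the dot-product addressing, the slot initialization, and the interpolating write rule are all assumptions chosen for brevity. The sketch only shows the general idea of one memory that receives both appearance and motion inputs and is accessed through attention weights.

```python
import math

def softmax(scores):
    # Numerically stable softmax over a list of floats.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

class HeterogeneousMemory:
    """Toy memory whose slots are shared by appearance and motion inputs.

    Hypothetical simplification: addressing is plain dot-product attention.
    """

    def __init__(self, num_slots, dim):
        # Distinct initial contents so that slots can be told apart.
        self.slots = [[0.1 * (i + 1)] * dim for i in range(num_slots)]

    def _address(self, key):
        # Attention weights over slots for a given key vector.
        return softmax([dot(slot, key) for slot in self.slots])

    def write(self, appearance, motion):
        dim = len(appearance)
        # Attention over the two input types, scored against the mean slot.
        summary = [sum(s[j] for s in self.slots) / len(self.slots) for j in range(dim)]
        alpha = softmax([dot(appearance, summary), dot(motion, summary)])
        content = [alpha[0] * a + alpha[1] * m for a, m in zip(appearance, motion)]
        # Attention over slots decides how strongly each slot is updated.
        weights = self._address(content)
        for w, slot in zip(weights, self.slots):
            for j in range(dim):
                slot[j] = (1 - w) * slot[j] + w * content[j]
        return weights

    def read(self, query):
        # Attention-weighted sum of slot contents.
        weights = self._address(query)
        dim = len(self.slots[0])
        return [sum(w * slot[j] for w, slot in zip(weights, self.slots)) for j in range(dim)]

def encode_video(appearance_seq, motion_seq, num_slots=3):
    # Write both feature types at every time step, then read a joint summary.
    memory = HeterogeneousMemory(num_slots, len(appearance_seq[0]))
    outputs = []
    for app, mot in zip(appearance_seq, motion_seq):
        memory.write(app, mot)
        outputs.append(memory.read(app))
    return outputs
```

Because both feature types are written into the same slots at each time step, a later read sees them together, which is the synchronization property the paragraph attributes to the heterogeneous memory.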

Complementing the visual side is a redesigned question memory network. It is motivated by the observation that a single hidden state, as in an LSTM encoder, struggles to capture the global context of a complex question. With a dedicated memory, the paper claims, the model can better distinguish which subject is being asked about in a question that mentions several, a distinction that matters for accurate VideoQA.
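To make the contrast with a single hidden state concrete, here is a rough sketch of a content-addressed question memory. It assumes one slot per word and dot-product attention; the function names `build_question_memory` and `read_question_memory` are hypothetical, and the paper's actual memory design may differ. The point is only that every word stays individually addressable instead of being compressed into one vector.

```python
import math

def softmax(scores):
    # Numerically stable softmax over a list of floats.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def build_question_memory(word_vectors):
    # Assumption: one slot per word, so no word is overwritten by later ones.
    return [list(v) for v in word_vectors]

def read_question_memory(memory, query):
    # Attention weights show which words the query focuses on.
    weights = softmax([dot(slot, query) for slot in memory])
    dim = len(memory[0])
    context = [sum(w * slot[j] for w, slot in zip(weights, memory)) for j in range(dim)]
    return context, weights

# Usage: a query aligned with the first word puts most weight on that word.
words = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
memory = build_question_memory(words)
context, weights = read_question_memory(memory, [2.0, 0.0, 0.0])
```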

The multimodal fusion layer uses an LSTM controller to iteratively refine attention weights over the visual and textual inputs, which supports the multi-step reasoning that VideoQA requires. Each iteration lets the model revisit both modalities in light of what it has already attended to, and the reported results suggest an advantage over attention models that do not integrate the modalities in this way.
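The iterative loop can be sketched as follows. This is a simplified illustration, not the paper's fusion layer: a plain `tanh` recurrent update stands in for the LSTM controller, and the names `attend` and `fuse`, the additive combination, and the default of three steps are assumptions.

```python
import math

def softmax(scores):
    # Numerically stable softmax over a list of floats.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def attend(memory, query):
    # Attention-weighted sum of memory slots for the given query.
    weights = softmax([dot(slot, query) for slot in memory])
    dim = len(memory[0])
    return [sum(w * slot[j] for w, slot in zip(weights, memory)) for j in range(dim)]

def fuse(visual_memory, question_memory, num_steps=3):
    # Assumption: both memories hold vectors of the same dimension.
    dim = len(visual_memory[0])
    state = [0.0] * dim  # controller state; zero state gives uniform attention
    history = []
    for _ in range(num_steps):
        v = attend(visual_memory, state)
        q = attend(question_memory, state)
        # Simplified controller: a tanh update replaces the LSTM cell.
        state = [math.tanh(s + vi + qi) for s, vi, qi in zip(state, v, q)]
        history.append(state)
    return state, history
```

The first step attends uniformly because the controller state starts at zero; later steps use the updated state as the query, so the attention over both memories shifts from one iteration to the next.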

Experiments on four benchmark datasets support the approach, with the paper reporting state-of-the-art results. On TGIF-QA, the method lowers the loss on the counting task and raises accuracy on the action task. The end-to-end trainable architecture also outperforms existing models on MSVD-QA and MSRVTT-QA, across question types ranging from "what" and "who" to "when" and "where."

The implications of this research are both practical and theoretical. Practically, the architecture is a candidate for applications that require joint understanding of video and language, such as automated video annotation and interactive virtual assistants. Theoretically, it offers a template for systems that integrate heterogeneous data types into a single reasoning process.

In conclusion, the paper's main contributions are its heterogeneous memory and its multimodal interaction mechanism. Future research may build on them with more sophisticated memory networks and reasoning procedures, possibly incorporating reinforcement learning and evaluating on larger, more complex datasets.