Towards an Intelligent Edge: Wireless Communication Meets Machine Learning

The paper "Towards an Intelligent Edge: Wireless Communication Meets Machine Learning" by Guangxu Zhu and colleagues examines the intersection of wireless communication and machine learning, which the authors term "edge learning." This emerging field builds on the growing deployment of smart mobile and IoT devices to push AI-enabled applications toward the network edge rather than central cloud infrastructure.

Key Insights and Implications

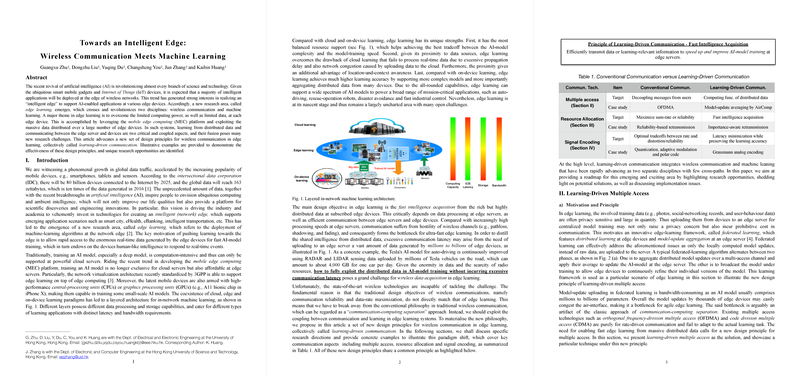

The core motivation for edge learning is to leverage data proximity for rapid AI model training, addressing the challenges of limited computational power and data availability at individual devices. The concept envisages a layered architecture combining cloud, edge, and on-device learning paradigms to balance latency, bandwidth, and processing capabilities. This layered architecture aims to facilitate diverse AI-powered applications, from smart cities to industrial control systems.

A pivotal aspect of the paper is the notion of "learning-driven communication," which proposes a paradigm shift from traditional wireless communication principles. In conventional schemes, communication reliability and data-rate maximization are prioritized. However, these do not align with the requirements of edge learning, where the primary goal is fast intelligence acquisition from distributed data. This approach suggests breaking the "communication-computing separation" by integrating learning processes into communication itself.

Numerical Results and Claims

The paper provides illustrative examples to support its design principles, focusing on three major areas:

- Learning-Driven Multiple Access: The paper discusses federated learning, which mitigates privacy concerns and reduces communication costs by exchanging model updates rather than transmitting raw data. A case study comparing AirComp (over-the-air computation) with conventional OFDMA shows that AirComp drastically reduces latency (up to 1000x) without compromising accuracy, a significant claim that highlights the potential for rapid model updates in dynamic environments.

- Learning-Driven Radio Resource Management (RRM): RRM traditionally optimizes for spectrum efficiency, but edge learning demands consideration of data importance. An importance-aware retransmission scheme is proposed, enhancing model accuracy by allocating resources based on data criticality. Experimental results suggest that this approach improves learning performance compared to conventional retransmission.

- Learning-Driven Signal Encoding: This facet integrates feature extraction with encoding processes. The introduction of Grassmann analog encoding (GAE) enables robust, CSI-free data transmission, markedly reducing latency while maintaining high classification rates, especially in high-mobility scenarios.
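
The AirComp idea in the first item can be illustrated with a toy simulation. The sketch below is not the paper's implementation: it assumes ideal channel-inversion power control (every device's analog signal arrives with unit gain), real-valued updates, and a slot-counting latency model that ignores quantization and coding. The function names `aircomp_average` and `symbol_slots` are hypothetical. In this simplified model the latency ratio equals the number of devices; the larger gains reported in the paper depend on details not modeled here.

```python
import random


def aircomp_average(local_updates, noise_std=0.0, rng=None):
    """Estimate the average of local updates from one superimposed transmission.

    Assumption: ideal channel inversion, so the multiple-access channel
    delivers the plain sum of the transmitted analog signals.
    """
    rng = rng or random.Random(0)
    num_devices = len(local_updates)
    dim = len(local_updates[0])
    # All devices transmit at once; the channel itself adds their signals.
    received = [sum(update[i] for update in local_updates) for i in range(dim)]
    # Additive receiver noise, one sample per received symbol.
    received = [y + rng.gauss(0.0, noise_std) for y in received]
    # The server only needs the average, so it rescales the received sum.
    return [y / num_devices for y in received]


def symbol_slots(num_devices, dim, scheme):
    """Toy latency count in symbol slots (hypothetical, for illustration)."""
    if scheme == "aircomp":
        return dim                # every slot is shared by all devices
    if scheme == "orthogonal":
        return dim * num_devices  # each device needs its own slots
    raise ValueError(f"unknown scheme: {scheme}")


updates = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
print(aircomp_average(updates))  # noiseless case: [3.0, 4.0]
print(symbol_slots(100, 1000, "orthogonal") // symbol_slots(100, 1000, "aircomp"))  # 100
```

The design point is that the server never recovers any individual update: it only needs their average, which the superposition property of the wireless channel provides in a single transmission round.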

Future Directions and Challenges

The paper identifies several research directions and challenges, underscoring the nascent nature of edge learning:

- Noise as a Resource: Treating noise not merely as a hindrance but as a potential aid to training robustness departs from traditional communication assumptions.

- Mobility Management: Handling transient connections and handovers between mobile devices and edge servers remains a hurdle, especially in heterogeneous networks.

- Cloud-Edge Collaboration: Integrating cloud and edge computing strengths could forge more comprehensive AI models, albeit with challenges in minimizing data exchange.

- Signal Encoding: Further research into efficient gradient-data and motion-data encoding could lead to substantial improvements in communication efficiency.

Conclusion

The pursuit of an "intelligent edge" presents a fertile ground for transformative research, bridging communication and machine learning. The paper lays the groundwork for redesigning communication protocols to support efficient edge learning, focusing on latency and resource optimization. As AI applications proliferate, convergence in these areas is critical for realizing the potential of ubiquitous and responsive edge intelligence.