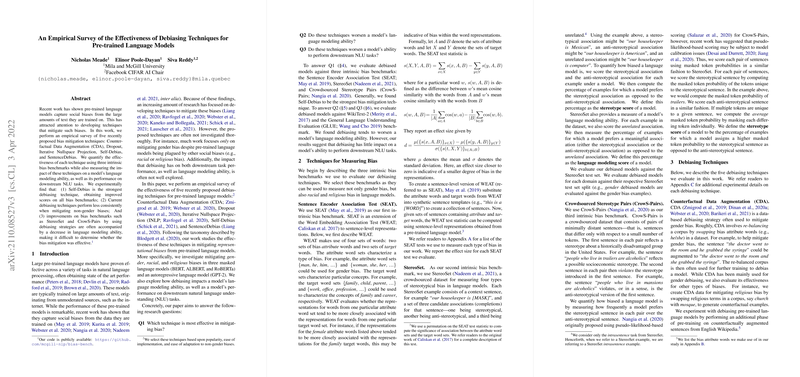

An Empirical Survey of the Effectiveness of Debiasing Techniques for Pre-trained Language Models

The paper "An Empirical Survey of the Effectiveness of Debiasing Techniques for Pre-trained Language Models" by Meade, Poole-Dayan, and Reddy evaluates five debiasing methods applied to pre-trained language models. Because these models absorb social biases from the large, largely unmoderated text corpora they are trained on, a number of methods have been proposed to mitigate those biases, and the paper examines how well they actually work.

Key Findings and Methodology

The researchers surveyed five debiasing methods: Counterfactual Data Augmentation (CDA), Dropout, Iterative Nullspace Projection (INLP), Self-Debias, and SentenceDebias. They evaluated these methods on three intrinsic bias benchmarks (SEAT, StereoSet, and CrowS-Pairs) and also measured each method's impact on language modeling ability and on downstream NLU tasks.
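Of the five methods, CDA is the easiest to picture: it rebalances training data by adding copies of sentences in which words tied to a demographic group are swapped for their counterparts, and the model is then trained further on the augmented corpus. The following is a minimal sketch for binary gender, assuming a hypothetical hand-written word-pair list (`WORD_PAIRS`) and hypothetical helper names (`counterfactual`, `augment`); it is an illustration of the idea, not the implementation used in the paper.

```python
import re

# Hypothetical, deliberately tiny list of gendered word pairs. Real CDA setups
# use much larger curated lists; ambiguous words such as "her" (which can map
# to "him" or "his") are left out here so every swap is unambiguous.
WORD_PAIRS = [
    ("he", "she"), ("man", "woman"), ("men", "women"), ("boy", "girl"),
    ("father", "mother"), ("son", "daughter"), ("king", "queen"),
]

# Build a bidirectional lookup so each word maps to its counterpart.
SWAP = {}
for a, b in WORD_PAIRS:
    SWAP[a] = b
    SWAP[b] = a

# Match whole words only, case-insensitively.
PATTERN = re.compile(r"\b(" + "|".join(SWAP) + r")\b", re.IGNORECASE)


def counterfactual(sentence: str) -> str:
    """Swap every listed gendered word for its counterpart, keeping capitalization."""
    def replace(match: re.Match) -> str:
        word = match.group(0)
        swapped = SWAP[word.lower()]
        return swapped.capitalize() if word[0].isupper() else swapped

    return PATTERN.sub(replace, sentence)


def augment(corpus: list[str]) -> list[str]:
    """Keep each original sentence and add its counterfactual when it differs."""
    augmented = []
    for sentence in corpus:
        augmented.append(sentence)
        flipped = counterfactual(sentence)
        if flipped != sentence:
            augmented.append(flipped)
    return augmented


print(augment(["He said the boy would become king.", "The weather is nice."]))
# ['He said the boy would become king.', 'She said the girl would become queen.', 'The weather is nice.']
```

The whole-word, case-insensitive pattern keeps "he" from matching inside "the" or "weather"; a production word list would also need a rule for ambiguous forms such as "her".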

The following research questions guided this paper:

- Which debiasing technique is most effective in mitigating bias?

- Do these techniques affect a model's language modeling ability?

- How do these techniques influence a model’s ability to perform downstream NLU tasks?

The paper’s key findings are:

- Self-Debias emerged as the strongest debiasing technique, improving scores on all the bias benchmarks it was evaluated on. Notably, it remained effective across gender, racial, and religious biases.

- There is a clear trade-off: improvements on bias benchmarks often coincide with a decline in language modeling ability, which makes it difficult to tell whether the bias was actually mitigated.

- Surprisingly, debiasing appears to have little adverse effect on downstream NLU performance, suggesting that fine-tuning may restore the competencies a model needs to solve the task.
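Self-Debias needs no retraining: it compares the model's next-token probabilities with and without a prompt that encourages a particular bias, and suppresses tokens that become more likely under the biased prompt. The sketch follows our reading of the original proposal by Schick et al. (2021): for each token w, Δ = p(w | x) − max over biased prompts of p(w | prompt, x), and the token's probability is multiplied by 1 if Δ ≥ 0 and by exp(λ·Δ) otherwise, then renormalized. Distributions are plain dictionaries here rather than model outputs; the function name and toy numbers are hypothetical, and the default λ = 50 is an assumption.

```python
import math


def self_debias(p_plain: dict[str, float],
                p_biased: list[dict[str, float]],
                decay: float = 50.0) -> dict[str, float]:
    """Rescale a next-token distribution in the spirit of Self-Debias.

    p_plain:  the model's next-token probabilities given the input alone.
    p_biased: one distribution per bias-encouraging prompt, each obtained by
              prefixing the same input with that prompt (must be non-empty).
    decay:    the decay constant lambda; the default of 50 is an assumption.
    """
    if not p_biased:
        raise ValueError("need at least one bias-encouraging distribution")
    scaled = {}
    for token, p in p_plain.items():
        # A negative delta means some biased prompt makes this token more likely.
        delta = p - max(dist.get(token, 0.0) for dist in p_biased)
        # Leave the token alone if delta >= 0; otherwise decay it exponentially.
        alpha = 1.0 if delta >= 0 else math.exp(decay * delta)
        scaled[token] = alpha * p
    # Renormalize so the result is again a probability distribution.
    total = sum(scaled.values())
    return {token: s / total for token, s in scaled.items()}


plain = {"kind": 0.5, "lazy": 0.3, "tall": 0.2}
biased = [{"kind": 0.2, "lazy": 0.7, "tall": 0.1}]
print(self_debias(plain, biased))
```

In this toy case "lazy", which the bias-encouraging prompt boosts from 0.3 to 0.7, is pushed to nearly zero probability, while "kind" and "tall" keep their original 5:2 ratio after renormalization.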

Implications and Future Directions

This paper has practical implications for deploying language models in sensitive applications where bias mitigation is paramount. Because debiasing can degrade a model's core language modeling ability, practitioners should weigh any reduction in bias against the accompanying loss in language proficiency.
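The StereoSet benchmark itself offers one way to express this trade-off as a single number. Its authors (Nadeem et al.) define an idealized CAT score, ICAT = lms · min(ss, 100 − ss) / 50, which rewards a high language modeling score (lms) together with a stereotype score (ss) close to 50. The Python sketch computes it; the function name and all scores are made up for illustration and are not results from the surveyed paper.

```python
def icat(lms: float, ss: float) -> float:
    """StereoSet's idealized CAT score.

    lms: language modeling score, in percent (ideal 100).
    ss:  stereotype score, in percent (ideal 50, i.e. no preference between
         stereotypical and anti-stereotypical associations).
    """
    if not (0.0 <= lms <= 100.0 and 0.0 <= ss <= 100.0):
        raise ValueError("lms and ss must be percentages in [0, 100]")
    # min(ss, 100 - ss) / 50 equals 1 at ss = 50 and falls to 0 at ss = 0 or 100.
    return lms * min(ss, 100.0 - ss) / 50.0


# Made-up scores for illustration only (not results from the paper).
print(icat(lms=85.0, ss=62.0))  # before debiasing                  -> 64.6
print(icat(lms=78.0, ss=55.0))  # less bias, small LM loss          -> 70.2
print(icat(lms=60.0, ss=50.0))  # "perfect" ss, large LM loss       -> 60.0
```

In the third case the stereotype score is ideal, yet ICAT is lower than in the second because the language modeling score fell so far. A model that chose at random would also land near ss = 50, which is why a bias score on its own can overstate successful mitigation.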

On a theoretical level, the paper argues for further work on self-debiasing methods, which exploit a model's own knowledge to reduce bias without substantially degrading performance. It also highlights the need for reliable, culturally inclusive bias benchmarks that go beyond the current datasets, which focus on North American social biases.

As these findings suggest, extending debiasing techniques to multilingual settings and to a wider range of social biases remains an important direction for future research. Progress on bias mitigation would advance fairness in NLP and help build AI systems that serve people across diverse cultures and languages.

Conclusion

The survey by Meade et al. makes a substantial contribution to understanding how effective debiasing techniques for language models actually are. While Self-Debias shows promise, finding methods that reduce bias without compromising language modeling ability remains an open research problem. As language technologies spread across sectors, mitigating their biases becomes increasingly important, and further innovation in debiasing approaches is needed.