This paper explores the use of deep representation learning for automatically detecting vulnerabilities directly from source code, aiming to improve upon traditional static analysis tools that often suffer from high false positive rates or require extensive manual configuration (Russell et al., 2018). The core idea is to learn vector representations (embeddings) of code functions that capture syntactic and semantic patterns indicative of vulnerabilities.

Problem Addressed:

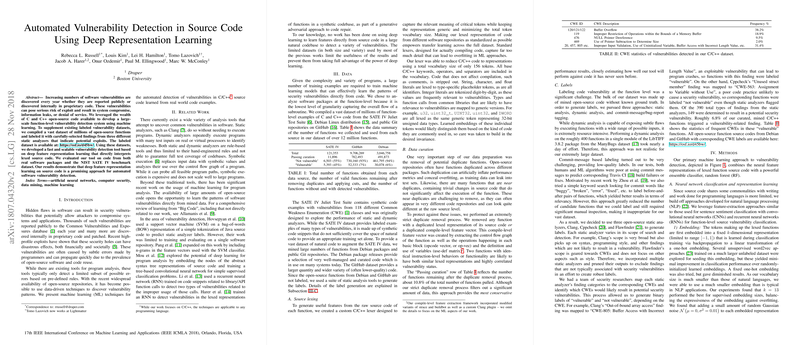

The primary goal is to create a system that automatically identifies security vulnerabilities (such as buffer overflows and SQL injection, commonly categorized by CWE identifiers) in C/C++ source code at the function level. This addresses the limitations of manual code review (slow and expensive) and of traditional static analysis tools (often noisy or incomplete).

Methodology:

- Data Preparation:

  - The researchers curated a dataset comprising C/C++ functions labeled as either vulnerable or not vulnerable. This dataset was primarily sourced from the Software Assurance Reference Dataset (SARD), which contains synthetic examples of specific CWEs, and potentially augmented with code from real-world projects.

  - Each function's source code was processed to create a suitable input representation for neural networks. This involved:

    - Parsing the code (likely using tools like Clang) to obtain an Abstract Syntax Tree (AST) or a token sequence.

    - Normalizing or simplifying the representation, e.g., by removing comments, standardizing variable names (anonymization), and converting the code/AST into a sequential format suitable for RNNs or CNNs.

- Model Architecture:

  - The paper focuses on deep learning models to learn representations. While specific architectures might vary, common approaches discussed in this context include:

    - Recurrent Neural Networks (RNNs), particularly LSTMs or GRUs: These models process the sequential representation of the code (e.g., token sequences) to capture long-range dependencies and sequential patterns.

    - Convolutional Neural Networks (CNNs): CNNs can be applied to sequences of token embeddings to learn local patterns indicative of vulnerabilities.

    - Embedding Layer: An initial layer maps code tokens (or AST nodes) to dense vectors (embeddings). These embeddings are learned during training.

  - The output of the representation learning model (the learned vector for a function) is then fed into a classification layer (e.g., a dense layer with a sigmoid activation) to predict the probability of the function being vulnerable.
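The normalization and sequencing steps listed under Data Preparation can be illustrated with a minimal sketch. It assumes a token list like the one a lexer would produce. The small keyword set, the `VAR_n` placeholder scheme, and the helper names `anonymize` and `encode` are illustrative assumptions, not details taken from the paper.

```python
# Illustrative sketch: anonymize identifiers, then map tokens to integer IDs.
import re

# Small, deliberately incomplete keyword set for demonstration (an assumption).
C_KEYWORDS = {"int", "char", "void", "return", "if", "else", "for", "while", "sizeof"}
IDENTIFIER = re.compile(r"[A-Za-z_]\w*")


def anonymize(tokens):
    """Replace each distinct non-keyword identifier with VAR_0, VAR_1, ..."""
    mapping = {}
    out = []
    for tok in tokens:
        if IDENTIFIER.fullmatch(tok) and tok not in C_KEYWORDS:
            if tok not in mapping:
                mapping[tok] = f"VAR_{len(mapping)}"
            out.append(mapping[tok])
        else:
            out.append(tok)
    return out


def encode(tokens, vocab, max_len):
    """Map tokens to IDs (0 = padding, 1 = unknown), then pad or truncate."""
    ids = [vocab.get(tok, 1) for tok in tokens][:max_len]
    return ids + [0] * (max_len - len(ids))


tokens = ["int", "add", "(", "int", "a", ",", "int", "b", ")",
          "{", "return", "a", "+", "b", ";", "}"]
normalized = anonymize(tokens)
# IDs 0 and 1 are reserved, so real tokens start at 2.
vocab = {tok: i + 2 for i, tok in enumerate(sorted(set(normalized)))}
ids = encode(normalized, vocab, max_len=20)
```

This sketch also renames function names such as `add`. A practical pipeline might instead keep the names of well-known library calls (for example `strcpy`), since they can carry a useful signal.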


```mermaid
graph TD
    A[Source Code Function] --> B(Preprocessing);
    B -- "Token Sequence / AST" --> C(Embedding Layer);
    C --> D{"Deep Learning Model (RNN/CNN)"};
    D -- "Learned Representation (Vector)" --> E(Classification Layer);
    E -- "Vulnerable / Not Vulnerable" --> F[Prediction Output];

    subgraph Data Preparation
        A
        B
    end

    subgraph Model
        C
        D
        E
    end
```
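The pipeline above (embedding layer, deep model, classification layer) can be sketched as a single forward pass. This is a dependency-free illustration of the CNN variant with randomly initialized weights, not the paper's implementation. All sizes and names (`forward`, `make_matrix`, `VOCAB_SIZE`, and so on) are assumptions, and a real model would be trained with a framework such as PyTorch or TensorFlow.

```python
# Illustrative forward pass: embedding -> 1D convolution -> max pool -> sigmoid.
# Weights are random here; a real model would learn them during training.
import math
import random


def make_matrix(rows, cols, rng):
    """Create a rows x cols matrix of small random weights."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


def forward(token_ids, embedding, filters, out_weights, out_bias):
    """Return an estimated vulnerability probability for one function."""
    # Embedding layer: look up one dense vector per token ID.
    embedded = [embedding[t] for t in token_ids]
    width = len(filters[0])  # Number of consecutive tokens each filter spans.
    features = []
    for filt in filters:
        # Slide the filter over the sequence and apply ReLU.
        activations = []
        for start in range(len(embedded) - width + 1):
            total = 0.0
            for offset in range(width):
                vec = embedded[start + offset]
                total += sum(w * x for w, x in zip(filt[offset], vec))
            activations.append(max(0.0, total))
        # Global max pooling keeps the strongest response per filter.
        features.append(max(activations))
    # Classification layer: one dense unit with a sigmoid activation.
    logit = sum(w * f for w, f in zip(out_weights, features)) + out_bias
    return 1.0 / (1.0 + math.exp(-logit))


rng = random.Random(0)
VOCAB_SIZE, EMBED_DIM, NUM_FILTERS, FILTER_WIDTH = 50, 8, 4, 3
embedding = make_matrix(VOCAB_SIZE, EMBED_DIM, rng)
filters = [make_matrix(FILTER_WIDTH, EMBED_DIM, rng) for _ in range(NUM_FILTERS)]
out_weights = [rng.uniform(-0.1, 0.1) for _ in range(NUM_FILTERS)]

# Token IDs for one (hypothetical) preprocessed function.
probability = forward([2, 7, 3, 9, 4, 4, 12, 5], embedding, filters, out_weights, 0.0)
```

The input sequence must contain at least `FILTER_WIDTH` tokens. Shorter functions would need padding first.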

Key Findings:

The deep learning models outperformed traditional static analysis tools and simpler machine learning baselines (such as bag-of-words models) at detecting vulnerabilities within the authors' dataset. Evaluations of this kind typically report:

- High Area Under the ROC Curve (AUC) scores, indicating good discrimination between vulnerable and non-vulnerable functions.

- Improved F1-scores, showing a better balance between precision and recall compared to baselines.

- The ability of the learned representations to capture complex patterns that rule-based systems might miss.
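To make the two metrics above concrete, the following sketch computes F1 and ROC AUC by hand. The labels and scores are made-up toy values, not results from the paper, and the function names are illustrative.

```python
# Illustrative metric computation on made-up labels and model scores.

def f1_score(labels, scores, threshold=0.5):
    """F1 for the positive (vulnerable) class at a fixed decision threshold."""
    preds = [s >= threshold for s in scores]
    tp = sum(1 for y, p in zip(labels, preds) if y == 1 and p)
    fp = sum(1 for y, p in zip(labels, preds) if y == 0 and p)
    fn = sum(1 for y, p in zip(labels, preds) if y == 1 and not p)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def roc_auc(labels, scores):
    """AUC as the probability that a random positive outranks a random negative."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    # Ties between a positive and a negative count as half a win.
    wins = sum(1.0 if p > n else (0.5 if p == n else 0.0) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


labels = [1, 0, 1, 0, 0, 1]           # 1 = vulnerable, 0 = not vulnerable
scores = [0.9, 0.2, 0.6, 0.7, 0.1, 0.4]

auc = roc_auc(labels, scores)          # 7 of 9 positive/negative pairs ranked correctly
f1 = f1_score(labels, scores)          # precision = recall = 2/3 at threshold 0.5
```

AUC is threshold-independent, while F1 depends on the chosen cutoff, which is one reason the two are usually reported together.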

Practical Implementation & Applications:

- Integration into DevSecOps: Such a model can be integrated into Continuous Integration/Continuous Deployment (CI/CD) pipelines. Upon code commits, the tool can automatically scan changed functions for potential vulnerabilities, providing rapid feedback to developers.

- Code Auditing Assistance: Security auditors can use the tool to prioritize functions for manual review, focusing on those flagged as potentially vulnerable by the model.

- Training Data: Implementing this requires a substantial, well-labeled dataset. Creating or obtaining such data is a major challenge. Using synthetic data like SARD is a starting point, but models perform best when trained on or fine-tuned with real-world code.

- Preprocessing Pipeline: A robust parser and preprocessing pipeline is essential. For C/C++, using libraries like `libclang` is common. The choice of representation (tokens, AST nodes, graph structures) impacts complexity and performance.

```python
# Pseudocode for preprocessing a C function
import clang.cindex


def function_to_tokens(source_code_str):
    """Parses C code and returns a sequence of tokens."""
    index = clang.cindex.Index.create()
    # TU = Translation Unit
    tu = index.parse(
        'temp.c',
        args=['-std=c11'],
        unsaved_files=[('temp.c', source_code_str)],
        options=clang.cindex.TranslationUnit.PARSE_DETAILED_PROCESSING_RECORD,
    )
    tokens = []
    # Traverse the AST or directly get tokens
    for token in tu.get_tokens(extent=tu.cursor.extent):
        # Simple tokenization: just use the spelling
        # More advanced: filter, normalize, use token kinds
        tokens.append(token.spelling)
    return tokens


# Example usage:
# c_function = "int add(int a, int b) { return a + b; }"
# token_sequence = function_to_tokens(c_function)
# print(token_sequence)
# ['int', 'add', '(', 'int', 'a', ',', 'int', 'b', ')', '{', 'return', 'a', '+', 'b', ';', '}']
```

- Computational Resources: Training these deep learning models requires significant GPU resources and time. Inference might also require GPUs for acceptable speed on large codebases.

- Limitations:

  - Generalization: Models may struggle with vulnerability types or coding patterns not well-represented in the training data.

  - Interpretability: Understanding why a model flags a function can be difficult, although techniques like attention mechanisms can provide some insight.

  - Inter-procedural Analysis: The function-level approach might miss vulnerabilities that arise from interactions between multiple functions. More advanced graph-based models aim to address this but add complexity.
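The CI/CD and auditing uses described in this list both reduce to ranking functions by model score and applying a threshold. A minimal sketch follows. The function names, scores, `fail_threshold`, and `top_k` are hypothetical values chosen for illustration, and a real deployment would tune them against its own false-positive tolerance.

```python
# Illustrative triage step for a CI job or an audit queue.

def triage(scores_by_function, fail_threshold=0.9, top_k=3):
    """Rank functions by model score and decide whether to fail the build."""
    ranked = sorted(scores_by_function.items(), key=lambda kv: kv[1], reverse=True)
    # Auditors review the highest-scoring functions first.
    review_queue = [name for name, _ in ranked[:top_k]]
    # The build fails if any function meets or exceeds the threshold.
    should_fail = any(score >= fail_threshold for _, score in ranked)
    return review_queue, should_fail


# Hypothetical model scores for functions touched by a commit.
scores = {"parse_header": 0.95, "copy_name": 0.72, "log_event": 0.08, "init_pool": 0.31}
queue, fail = triage(scores)
```

In a pipeline, the script would typically exit with a non-zero status when `fail` is true so that the CI system marks the commit for attention.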

In summary, the paper presents a promising deep learning-based approach for automated vulnerability detection in source code, offering potential improvements over traditional methods. However, practical implementation requires careful consideration of data acquisition, preprocessing, model training, and inherent limitations like generalization and interpretability.