A Unified Model for Extractive and Abstractive Summarization Using Inconsistency Loss

The paper "A Unified Model for Extractive and Abstractive Summarization Using Inconsistency Loss" presents a novel approach integrating both extractive and abstractive summarization methods to improve the overall quality of automated text summarization. The approach combines sentence-level attention from extractive models with word-level dynamic attention from abstractive models, complemented by a newly introduced inconsistency loss function.

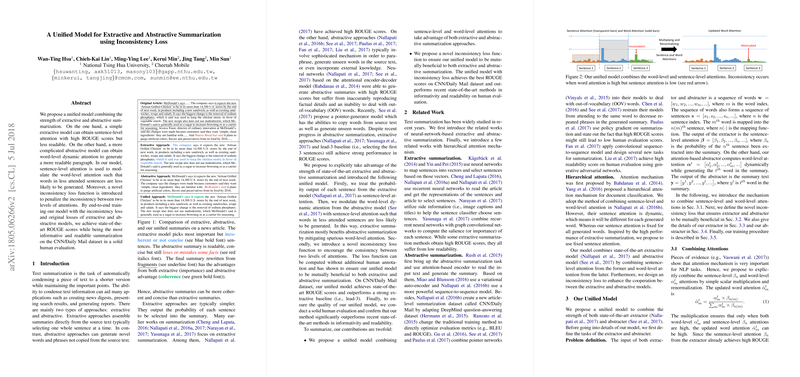

The proposed integration uses sentence-level attention probabilities from a pre-trained extractive model to modulate the word-level attention in an abstractive summarization model. This modulation reduces the likelihood of generating words from sentences that received little attention in the extractive phase, with the aim of producing coherent and informative summaries. The inconsistency loss penalizes disagreement between the two levels of attention, that is, cases where strongly attended words sit in weakly attended sentences, which encourages the two models to reinforce each other.
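The modulation and the penalty described above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the paper's implementation: the function names, the `word_to_sent` index mapping, the default `top_k` value, and the `eps` smoothing term are all hypothetical. The formulas are one reading of the description: each word's attention is scaled by the attention of its sentence and renormalized, and the loss is the negative log of the mean product of word and sentence attention over the most attended words at each decoder step.

```python
import math


def modulate_word_attention(word_attn, sent_attn, word_to_sent):
    """Scale each word's attention by its sentence's attention, then renormalize.

    word_attn:    attention over source words at one decoder step (sums to 1)
    sent_attn:    extractor's attention (importance) per source sentence
    word_to_sent: word_to_sent[m] is the index of the sentence containing word m
    """
    scaled = [a * sent_attn[word_to_sent[m]] for m, a in enumerate(word_attn)]
    total = sum(scaled)
    if total == 0.0:
        # Assumption: fall back to the unmodulated attention if everything is zeroed out.
        return list(word_attn)
    return [s / total for s in scaled]


def inconsistency_loss(word_attn_steps, sent_attn, word_to_sent, top_k=3, eps=1e-12):
    """Average, over decoder steps, of -log(mean of word_attn * sent_attn over the top-k words).

    The loss is small when the most attended words lie in highly attended
    sentences and large when they lie in weakly attended ones.
    """
    step_losses = []
    for word_attn in word_attn_steps:
        # Indices of the top-k most attended words at this step.
        top = sorted(range(len(word_attn)), key=lambda m: word_attn[m], reverse=True)[:top_k]
        agreement = sum(word_attn[m] * sent_attn[word_to_sent[m]] for m in top) / len(top)
        step_losses.append(-math.log(agreement + eps))
    return sum(step_losses) / len(step_losses)


# Toy example: three source words, the first two in sentence 0, the last in sentence 1.
word_to_sent = [0, 0, 1]
sent_attn = [0.9, 0.1]  # the extractor considers sentence 0 far more important

modulated = modulate_word_attention([0.5, 0.3, 0.2], sent_attn, word_to_sent)

consistent = inconsistency_loss([[0.8, 0.1, 0.1]], sent_attn, word_to_sent, top_k=1)
inconsistent = inconsistency_loss([[0.1, 0.1, 0.8]], sent_attn, word_to_sent, top_k=1)
```

In the toy example, the word in the weakly attended sentence loses attention mass after modulation, and the decoder step that focuses on that word incurs a larger loss than the step that focuses on a word in the important sentence.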

The approach is evaluated on the CNN/Daily Mail dataset, where it achieves state-of-the-art ROUGE scores across multiple configurations. Notably, the unified model, trained end-to-end with the inconsistency loss, surpasses strong baselines, including the lead-3 method, in informativeness and readability, as measured by both ROUGE metrics and human assessments. The inconsistency loss reduces the inconsistency rate from 20% to approximately 4%, bringing word-level attention into closer alignment with sentence importance.

The paper addresses limitations inherent to extractive and abstractive summarization when each is used on its own. Extractive summarization selects entire sentences verbatim, which often yields high ROUGE scores but low readability because the selected sentences do not always cohere. Conversely, abstractive summarization can in principle generate more readable and coherent text, but it struggles to reproduce source details faithfully and to handle out-of-vocabulary words. The unified model combines the two approaches to capitalize on their strengths while mitigating their individual weaknesses.

This work has implications for NLP and automated text summarization more broadly. By establishing a framework in which extractive and abstractive techniques reinforce each other, it suggests directions for future exploration, such as extending the unified approach to text types beyond news articles or reducing the model's complexity for real-time applications.

Overall, the paper makes progress towards more effective and human-like summarization systems by explicitly constraining the relationship between the extractive and abstractive processes and by introducing a penalty mechanism that keeps them consistent with each other. The gains in ROUGE performance and human evaluations highlight the potential of this integrated strategy as a foundation for future summarization models, possibly paving the way for deeper integration of other NLP tasks.