Overview of NVIDIA Tensor Core Programmability, Performance, and Precision

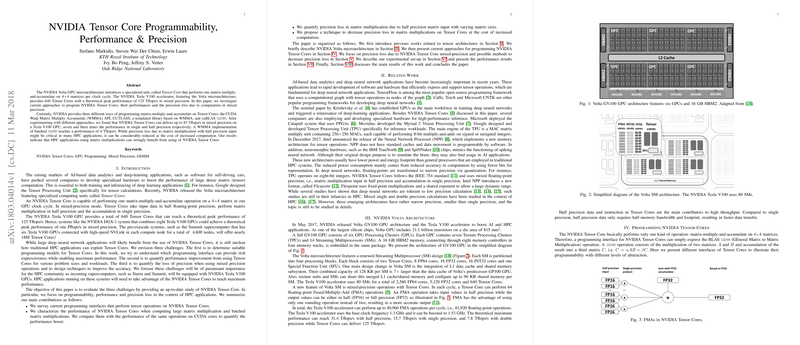

The paper "NVIDIA Tensor Core Programmability, Performance, Precision" provides a detailed examination of the NVIDIA Volta GPU microarchitecture, particularly focusing on the Tensor Core units and their applicability within high-performance computing (HPC). Introduced in the NVIDIA Tesla V100 accelerator, Tensor Cores are designed to enhance the computational efficiency of matrix-multiply-and-accumulate (MMA) operations using mixed precision. The paper evaluates several aspects critical to understanding Tensor Cores: programming models, performance metrics, and precision trade-offs.

Programming NVIDIA Tensor Cores

The implementation of Tensor Cores involves three primary programming interfaces:

- CUDA Warp Matrix Multiply Accumulate (WMMA): This API provides direct but low-level control over Tensor Core operations. It is currently limited to fixed-size matrix operations, and each operation is issued cooperatively by all threads of a CUDA warp, so kernels must be organized around warps to reach good performance.

- CUTLASS: As a templated library, CUTLASS builds on WMMA to offer more flexible matrix multiplication strategies with varying tiling and pipelining options to optimize performance.

- cuBLAS: The cuBLAS library allows higher-level programming, with mathematical optimizations abstracted away from the user. Specific settings enable the use of Tensor Cores for general matrix-matrix multiplication (GEMM) operations.

The exploration of these programming interfaces indicates a maturing ecosystem, although further development could improve the expressiveness and efficiency of deploying Tensor Cores in varied computational settings.
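To make the fixed-size, tile-by-tile nature of these interfaces concrete, the following is a minimal sketch in plain Python, not CUDA. It emulates one tile-level multiply-accumulate with half-precision inputs and single-precision accumulation, then composes a larger GEMM from such tiles, much as a WMMA kernel or a CUTLASS tiling would. The tile edge of 16, the function names (`mma_tile`, `gemm_tiled`), and the rounding model are assumptions made for illustration; real hardware may accumulate in a different order.

```python
import struct

TILE = 16  # assumed tile edge: one 16x16x16 multiply-accumulate per call


def to_half(x):
    """Round x to the nearest IEEE half-precision value."""
    return struct.unpack("e", struct.pack("e", x))[0]


def to_single(x):
    """Round x to the nearest IEEE single-precision value."""
    return struct.unpack("f", struct.pack("f", x))[0]


def mma_tile(a, b, c):
    """Return a*b + c for TILE x TILE tiles: half inputs, single accumulation."""
    d = []
    for i in range(TILE):
        row = []
        for j in range(TILE):
            acc = to_single(c[i][j])
            for k in range(TILE):
                # The product of two half values is exact in single precision.
                acc = to_single(acc + to_half(a[i][k]) * to_half(b[k][j]))
            row.append(acc)
        d.append(row)
    return d


def tile(m, r, c):
    """Extract the TILE x TILE block of m whose top-left corner is (r, c)."""
    return [row[c:c + TILE] for row in m[r:r + TILE]]


def gemm_tiled(a, b, n):
    """C = A*B for n x n matrices (n a multiple of TILE), built from mma_tile."""
    out = [[0.0] * n for _ in range(n)]
    for i in range(0, n, TILE):
        for j in range(0, n, TILE):
            acc = [[0.0] * TILE for _ in range(TILE)]
            for k in range(0, n, TILE):
                acc = mma_tile(tile(a, i, k), tile(b, k, j), acc)
            for r in range(TILE):
                out[i + r][j:j + TILE] = acc[r]
    return out
```

In this model the caller is responsible for splitting the problem into tiles, which mirrors the division of labor between WMMA (one tile operation) and the higher-level libraries (tiling and scheduling).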

Performance Analysis

The theoretical peak performance of the Tensor Cores in the Tesla V100 GPU is stated at 125 Tflops/s in mixed precision. The results presented indicate that Tensor Cores can reach approximately 83 Tflops/s under optimal conditions, representing a sixfold increase over single-precision MMAs on CUDA cores. Notably, the efficiency of Tensor Cores in executing batched GEMM operations enables significant speed-ups for workloads involving numerous small matrix multiplications, which are common in applications such as the Fast Multipole Method.

Despite the impressive performance gains, the observed peak does not fully align with theoretical values, underscoring potential areas for optimization, particularly in memory traffic management and kernel execution alongside traditional CUDA cores.
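The gap between measured and theoretical performance can be quantified with a short calculation. The sketch below reproduces the 125 Tflops/s peak from hardware figures that are not stated in the text above and are assumed here from NVIDIA's published V100 specifications (640 Tensor Cores, 64 fused multiply-adds per Tensor Core per clock, 1530 MHz boost clock), then computes the fraction of peak that 83 Tflops/s represents. The function names are hypothetical.

```python
# Assumed V100 figures (not from the text above): NVIDIA's published specs.
TENSOR_CORES = 640        # 8 per SM x 80 SMs
FMA_PER_CLOCK = 64        # one 4x4x4 multiply-accumulate per Tensor Core per clock
FLOPS_PER_FMA = 2         # one multiply plus one add
BOOST_CLOCK_HZ = 1.53e9

STATED_PEAK_TFLOPS = 125.0  # figure quoted in the text
MEASURED_TFLOPS = 83.0      # figure quoted in the text


def peak_tflops():
    """Theoretical mixed-precision peak derived from the assumed specs."""
    flops = TENSOR_CORES * FMA_PER_CLOCK * FLOPS_PER_FMA * BOOST_CLOCK_HZ
    return flops / 1e12


def fraction_of_peak(measured=MEASURED_TFLOPS, peak=STATED_PEAK_TFLOPS):
    """Share of the stated peak reached by the measured performance."""
    return measured / peak


if __name__ == "__main__":
    print(f"derived peak: {peak_tflops():.1f} Tflops/s")
    print(f"fraction of stated peak: {fraction_of_peak():.1%}")
```

Under these assumptions the measured result corresponds to roughly two thirds of the stated peak, which is the headroom the optimization remarks above refer to.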

Precision Considerations and Trade-Offs

Tensor Cores operate in a mixed-precision regime: inputs are supplied in half precision, while accumulation occurs in single precision. Half-precision inputs reduce memory bandwidth usage and raise throughput, but a half-precision value carries only an 11-bit significand (about three decimal digits), so the resulting rounding errors can be substantial, especially as matrix sizes grow. To mitigate this precision loss, the paper proposes a refinement technique that corrects the error through additional computational passes.

The analysis demonstrates that employing these techniques can reduce error by an order of magnitude, though this benefit comes at the cost of increased computational workload and associated execution time. The balance between precision and performance remains crucial for adopting Tensor Core acceleration in HPC use cases.
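The trade-off can be illustrated with a small emulation. The sketch below is one plausible reading of the refinement idea, not necessarily the paper's exact formulation: the part of each input lost in the half-precision conversion is kept as a residual matrix, and extra mixed-precision products involving the residuals are added back. All names (`mixed_matmul`, `residual`, `refined_matmul`, `demo`) and the test matrices are hypothetical, and the rounding model (pure Python, `struct` format `e` for half precision) only approximates real Tensor Core behavior.

```python
import math
import struct


def to_half(x):
    """Round x to the nearest IEEE half-precision value."""
    return struct.unpack("e", struct.pack("e", x))[0]


def to_single(x):
    """Round x to the nearest IEEE single-precision value."""
    return struct.unpack("f", struct.pack("f", x))[0]


def mixed_matmul(a, b):
    """A*B with inputs rounded to half and accumulation in single precision."""
    inner, cols = len(b), len(b[0])
    out = []
    for a_row in a:
        row = []
        for j in range(cols):
            acc = 0.0
            for k in range(inner):
                acc = to_single(acc + to_half(a_row[k]) * to_half(b[k][j]))
            row.append(acc)
        out.append(row)
    return out


def residual(m):
    """R = M - half(M): the part of M lost by the half-precision conversion."""
    return [[x - to_half(x) for x in row] for row in m]


def refined_matmul(a, b):
    """Approximate (Ah + Ra)(Bh + Rb) with four mixed-precision products."""
    ra, rb = residual(a), residual(b)
    # Sum the smallest terms first to limit rounding in the final additions.
    terms = [mixed_matmul(ra, rb), mixed_matmul(ra, b),
             mixed_matmul(a, rb), mixed_matmul(a, b)]
    out = terms[0]
    for t in terms[1:]:
        out = [[to_single(x + y) for x, y in zip(r1, r2)]
               for r1, r2 in zip(out, t)]
    return out


def max_error(c, a, b):
    """Largest absolute deviation of c from a double-precision reference."""
    err = 0.0
    for i, row in enumerate(c):
        for j, value in enumerate(row):
            exact = sum(a[i][k] * b[k][j] for k in range(len(b)))
            err = max(err, abs(value - exact))
    return err


def demo(n=16):
    """Return (plain error, refined error) for two deterministic n x n inputs."""
    a = [[to_single(math.sin(i * n + j + 1)) for j in range(n)] for i in range(n)]
    b = [[to_single(math.cos(i * n + j + 1)) for j in range(n)] for i in range(n)]
    return (max_error(mixed_matmul(a, b), a, b),
            max_error(refined_matmul(a, b), a, b))
```

Calling `demo()` returns the maximum error with and without refinement. The refined variant performs four products instead of one, which is the additional workload the paragraph above describes; dropping the residual-times-residual term would trade a little accuracy for one fewer pass.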

Implications and Future Directions

The research elucidates both the advantages and constraints of incorporating NVIDIA Tensor Cores within high-performance computing infrastructure. NVIDIA's continued development of CUDA APIs and associated libraries will be instrumental in maximizing Tensor Core utility across diverse applications, whether in deep learning or traditional HPC.

For HPC applications, performance gains must be weighed carefully against acceptable levels of precision loss, particularly when scientific accuracy cannot be compromised. Future studies should focus on optimizing memory usage and computational kernel designs, and on investigating hybrid techniques that leverage both Tensor Core and CUDA core operations. Expanding the repertoire of single- and mixed-precision refinement methods will also strengthen the Tensor Core's role in computational science and engineering tasks.