Self-Attention with Relative Position Representations

The Transformer architecture introduced by Vaswani et al. has become a dominant approach for many NLP tasks, particularly machine translation. In this paper, Peter Shaw, Jakob Uszkoreit, and Ashish Vaswani extend the Transformer's self-attention mechanism to incorporate representations of relative positions (distances between sequence elements). They report consistent BLEU improvements over absolute position encodings on the WMT 2014 English-to-German and English-to-French translation tasks.

Introduction

Sequence-to-sequence models have traditionally relied on recurrent neural networks (RNNs) or convolutional neural networks (CNNs). Both capture positional information implicitly: RNNs through their sequential structure along the time dimension, and CNNs through the relative positions covered by each convolution kernel. The Transformer, in contrast, relies entirely on self-attention, which is otherwise insensitive to token order, so positional information must be added to its inputs explicitly. This paper proposes integrating relative position information directly into the self-attention mechanism, which may also help the model generalize to sequence lengths not seen during training.

Background

Self-attention lets every position in a sequence attend to every other position, so the Transformer can model dependencies between tokens regardless of their distance. The original Transformer adds sinusoidal position encodings to its input embeddings; Vaswani et al. hypothesized that these could help the model extrapolate to sequence lengths longer than those seen during training. These encodings, however, represent absolute positions, so relative distances between tokens are available to the model only implicitly. This limitation motivates the proposed method.
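For contrast with the relative scheme, here is a minimal NumPy sketch of sinusoidal absolute encodings, assuming the formula from Vaswani et al. and an even model dimension. The function name `sinusoidal_encoding` is hypothetical; each row depends only on a token's absolute index.

```python
import numpy as np


def sinusoidal_encoding(n_positions: int, d_model: int) -> np.ndarray:
    """Absolute sinusoidal position encodings, following Vaswani et al.:

    PE[pos, 2i]     = sin(pos / 10000 ** (2i / d_model))
    PE[pos, 2i + 1] = cos(pos / 10000 ** (2i / d_model))
    """
    if d_model % 2 != 0:
        raise ValueError("this sketch assumes an even d_model")
    positions = np.arange(n_positions)[:, None]              # (n, 1)
    two_i = np.arange(0, d_model, 2)[None, :]                # (1, d_model / 2)
    angles = positions / np.power(10000.0, two_i / d_model)  # (n, d_model / 2)
    pe = np.zeros((n_positions, d_model))
    pe[:, 0::2] = np.sin(angles)  # even dimensions
    pe[:, 1::2] = np.cos(angles)  # odd dimensions
    return pe


# Row `pos` is added to the embedding of the token at absolute index `pos`.
pe = sinusoidal_encoding(n_positions=50, d_model=16)
```

Vaswani et al. chose this form because, for any fixed offset, `PE[pos + offset]` is a linear function (a rotation within each sine/cosine pair) of `PE[pos]`. Relative distance is therefore recoverable in principle, but the model never receives it directly.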

Proposed Architecture

Relation-aware Self-Attention

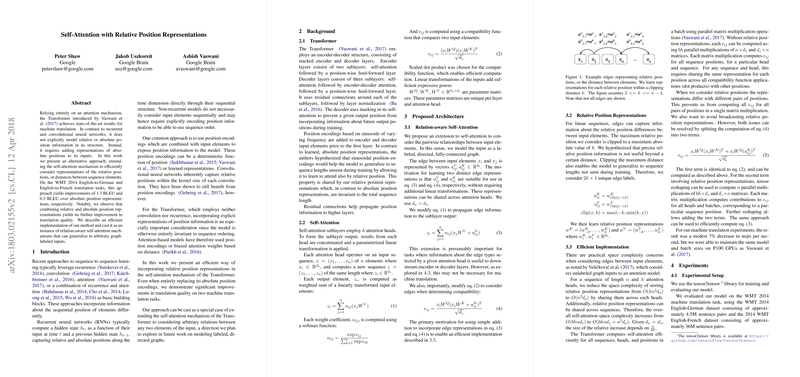

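The core idea is to extend self-attention to consider pairwise relationships between input elements, treating the input as a labeled, directed, fully connected graph. The edge from input element $x_i$ to $x_j$ is represented by two vectors, $a^V_{ij}, a^K_{ij} \in \mathbb{R}^{d_a}$, which encode their relative position and are integrated into the attention computation. With per-head projections $W^Q, W^K, W^V \in \mathbb{R}^{d_x \times d_z}$ and a sequence of length $n$, the modified equations are:

$$e_{ij} = \frac{x_i W^Q \left(x_j W^K + a^K_{ij}\right)^\top}{\sqrt{d_z}}$$

$$\alpha_{ij} = \frac{\exp e_{ij}}{\sum_{l=1}^{n} \exp e_{il}}$$

$$z_i = \sum_{j=1}^{n} \alpha_{ij} \left(x_j W^V + a^V_{ij}\right)$$

Compared with standard self-attention, $a^K_{ij}$ adds relative position information to the compatibility score $e_{ij}$, and $a^V_{ij}$ adds it to the output $z_i$; setting both to zero recovers the original equations. Because the edge vectors are added to projected keys and values, they share the head dimension, $d_a = d_z$.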
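The relation-aware computation, in which $a^K_{ij}$ is added to the keys when computing the compatibility $e_{ij}$ and $a^V_{ij}$ is added to the values when forming $z_i$, can be sketched for a single head in NumPy. This is a minimal illustration, assuming the edge vectors are already materialized as dense `(n, n, d_z)` arrays; the function names are hypothetical, not the authors' code.

```python
import numpy as np


def softmax(scores: np.ndarray) -> np.ndarray:
    """Row-wise softmax over the last axis, shifted for numerical stability."""
    shifted = scores - scores.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


def relation_aware_attention(x, W_q, W_k, W_v, a_k, a_v):
    """Single-head relation-aware self-attention.

    x:             (n, d_x) input sequence
    W_q, W_k, W_v: (d_x, d_z) projection matrices
    a_k, a_v:      (n, n, d_z) edge vectors; a_k[i, j] stands for a^K_ij
    returns:       (n, d_z) outputs z_i
    """
    q, k, v = x @ W_q, x @ W_k, x @ W_v  # each (n, d_z)
    d_z = q.shape[-1]
    # e_ij = q_i . (k_j + a^K_ij) / sqrt(d_z); k[None] broadcasts k_j over i
    e = np.einsum("id,ijd->ij", q, k[None, :, :] + a_k) / np.sqrt(d_z)
    alpha = softmax(e)  # normalize over j for each query i
    # z_i = sum_j alpha_ij (v_j + a^V_ij)
    return np.einsum("ij,ijd->id", alpha, v[None, :, :] + a_v)
```

With both edge tensors set to zero this reduces to ordinary scaled dot-product attention, and a constant $a^V_{ij}$ simply shifts every output by that constant because each row of attention weights sums to one. The dense per-pair tensors are for clarity; they are exactly what the efficient implementation avoids storing per head.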

Relative Position Representations

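For linear sequences, edges capture the relative position difference between input elements. The authors clip the maximum relative distance to a value $k$, hypothesizing that precise relative position information is not useful beyond a certain distance; they also argue that clipping lets the model generalize to sequence lengths not seen during training. They therefore learn only $2k + 1$ distinct edge labels, $w^K = (w^K_{-k}, \ldots, w^K_{k})$ and $w^V = (w^V_{-k}, \ldots, w^V_{k})$, each in $\mathbb{R}^{d_a}$, and set:

$$a^K_{ij} = w^K_{\mathrm{clip}(j-i,\,k)}, \qquad a^V_{ij} = w^V_{\mathrm{clip}(j-i,\,k)}, \qquad \mathrm{clip}(x, k) = \max(-k, \min(k, x))$$

Because the edge vectors depend only on the clipped offset $j - i$, the same $2k + 1$ vectors serve sequences of any length.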
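The clipping and lookup can be sketched in a few lines of NumPy. This assumes the $2k + 1$ labels are stored as rows of a table ordered from offset $-k$ to $+k$; the helper names and the random initialization are hypothetical stand-ins for learned parameters.

```python
import numpy as np


def clipped_relative_indices(n: int, k: int) -> np.ndarray:
    """idx[i, j] = clip(j - i, k) + k, the row of w_{clip(j-i,k)} in a (2k+1)-row table."""
    pos = np.arange(n)
    rel = pos[None, :] - pos[:, None]  # rel[i, j] = j - i
    return np.clip(rel, -k, k) + k     # shift [-k, k] to [0, 2k]


def lookup_edges(table: np.ndarray, n: int, k: int) -> np.ndarray:
    """Gather a[i, j] = w_{clip(j - i, k)} from a (2k + 1, d_a) table of edge labels."""
    if table.shape[0] != 2 * k + 1:
        raise ValueError("table must have 2k + 1 rows")
    return table[clipped_relative_indices(n, k)]  # (n, n, d_a)


# Hypothetical example with k = 2 and d_a = 4. In training, w_K would be learned;
# here it is random. The same table serves any sequence length n.
k, d_a = 2, 4
w_K = np.random.default_rng(0).normal(size=(2 * k + 1, d_a))
a_K_short = lookup_edges(w_K, n=5, k=k)   # (5, 5, 4)
a_K_long = lookup_edges(w_K, n=40, k=k)   # (40, 40, 4), no new parameters
```

Every pair farther apart than $k$ in either direction maps to the first or last row of the table, so a length-40 sequence reuses the same five vectors as a length-5 one.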

Efficient Implementation

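Relative position representations add computational cost, chiefly memory: storing $a^K_{ij}$ and $a^V_{ij}$ separately for each of $h$ attention heads would take $O(h n^2 d_a)$ space for a sequence of length $n$. The authors share the representations across heads, reducing this to $O(n^2 d_a)$, and also share them across the sequences in a batch. To keep the computation in efficient batched matrix multiplications, they split the compatibility score into two terms:

$$e_{ij} = \frac{x_i W^Q \left(x_j W^K\right)^\top + x_i W^Q \left(a^K_{ij}\right)^\top}{\sqrt{d_z}}$$

The first term is the standard dot-product logit. For batch size $b$, the second term can be computed for all heads and batch elements at once by reshaping the queries to an $n \times bh \times d_z$ tensor and multiplying it by $a^K$ transposed to $n \times d_z \times n$. The same approach applies to the value term of $z_i$.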
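The two-term split, with $e_{ij} = \left(q_i k_j^\top + q_i (a^K_{ij})^\top\right)/\sqrt{d_z}$ where $q_i = x_i W^Q$ and $k_j = x_j W^K$, can be sketched in NumPy. This is a minimal illustration under assumed shapes: per-head queries, keys, and values already projected into `(b, h, n, d_z)` arrays, and a single `(n, n, d_z)` tensor of relative vectors shared by every head and batch element. The function names are hypothetical, not the authors' code. The key step regroups rows by query position $i$, so one batched matmul over $i$ covers all $bh$ rows.

```python
import numpy as np


def relative_logits_efficient(q, k, a_k):
    """Compatibility logits with relative keys shared across batch and heads.

    q, k: (b, h, n, d_z) per-head queries and keys
    a_k:  (n, n, d_z) relative key vectors, one copy for all b * h rows
    returns: (b, h, n, n) logits e_ij
    """
    b, h, n, d_z = q.shape
    # Term 1: ordinary dot-product logits as one batched matmul.
    term1 = q @ np.swapaxes(k, -1, -2)                       # (b, h, n, n)
    # Term 2: group queries by position i, then (n, b*h, d_z) @ (n, d_z, n).
    q_by_pos = q.transpose(2, 0, 1, 3).reshape(n, b * h, d_z)
    term2 = q_by_pos @ np.swapaxes(a_k, -1, -2)              # (n, b*h, n)
    term2 = term2.reshape(n, b, h, n).transpose(1, 2, 0, 3)  # (b, h, n, n)
    return (term1 + term2) / np.sqrt(d_z)


def relative_values_efficient(alpha, v, a_v):
    """z_i = sum_j alpha_ij (v_j + a^V_ij), with a^V shared across batch and heads.

    alpha: (b, h, n, n) attention weights; v: (b, h, n, d_z); a_v: (n, n, d_z)
    """
    b, h, n, _ = alpha.shape
    term1 = alpha @ v                                         # (b, h, n, d_z)
    alpha_by_pos = alpha.transpose(2, 0, 1, 3).reshape(n, b * h, n)
    term2 = alpha_by_pos @ a_v                                # (n, b*h, d_z)
    term2 = term2.reshape(n, b, h, -1).transpose(1, 2, 0, 3)  # (b, h, n, d_z)
    return term1 + term2
```

Only one `(n, n, d_z)` copy of each relative tensor is ever stored. A naive version that adds $a^K_{ij}$ to every key for every head and batch element would materialize a `(b, h, n, n, d_z)` array instead, although it produces the same numbers.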

Experimental Results

The authors evaluate their model on the WMT 2014 English-to-German (EN-DE) and English-to-French (EN-FR) translation tasks. Relative position representations improve BLEU over the baseline Transformer with absolute position encodings in every configuration:

- For EN-DE, the relative position encodings improved BLEU scores by 0.3 (base model) and 1.3 (big model).

- For EN-FR, the improvements were 0.5 (base model) and 0.3 (big model).

Combining relative and absolute position representations did not yield further improvements, suggesting that relative position information alone may be sufficient for these tasks.

Further Experiments

The paper also varies the clipping distance $k$ and runs ablations over the components of the proposed mechanism. For $k \ge 2$ there was little variation in BLEU, supporting the hypothesis that precise relative position information is not needed beyond a certain distance. Ablating the edge representations suggests that relative position information in the compatibility function ($a^K_{ij}$) matters more than in the values ($a^V_{ij}$), and that using it only when computing compatibility may be sufficient; the authors note that further work is needed to determine whether this holds for other tasks.
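The ablation can be pictured as switching $a^K_{ij}$ or $a^V_{ij}$ off in relation-aware attention. Below is a minimal NumPy sketch; `ablated_attention`, `relative_edges`, `equivariant`, and the `use_a_k` / `use_a_v` flags are hypothetical names chosen for illustration, and the edge tables are random rather than learned. It also checks a related, general property: with both switches off and no absolute encodings, self-attention is permutation-equivariant, so reversing the input tokens merely reverses the outputs and the model receives no word-order signal.

```python
import numpy as np


def softmax(scores):
    shifted = scores - scores.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


def relative_edges(table, n, k):
    """a[i, j] = table[clip(j - i, k) + k] for a (2k + 1, d) table."""
    pos = np.arange(n)
    return table[np.clip(pos[None, :] - pos[:, None], -k, k) + k]


def ablated_attention(x, W_q, W_k, W_v, a_k, a_v, use_a_k=True, use_a_v=True):
    """Relation-aware attention; the hypothetical use_a_k / use_a_v switches drop
    relative positions from the compatibility scores or from the values."""
    q, k, v = x @ W_q, x @ W_k, x @ W_v
    a_k = a_k if use_a_k else np.zeros_like(a_k)
    a_v = a_v if use_a_v else np.zeros_like(a_v)
    e = np.einsum("id,ijd->ij", q, k[None] + a_k) / np.sqrt(q.shape[-1])
    return np.einsum("ij,ijd->id", softmax(e), v[None] + a_v)


rng = np.random.default_rng(2)
n, d_x, d_z, clip_k = 6, 8, 4, 2
x = rng.normal(size=(n, d_x))
W_q, W_k, W_v = (rng.normal(size=(d_x, d_z)) for _ in range(3))
a_k = relative_edges(rng.normal(size=(2 * clip_k + 1, d_z)), n, clip_k)
a_v = relative_edges(rng.normal(size=(2 * clip_k + 1, d_z)), n, clip_k)
reverse = np.arange(n)[::-1]


def equivariant(use_a_k, use_a_v):
    """True if reversing the input tokens only reverses the outputs."""
    def run(inp):
        return ablated_attention(inp, W_q, W_k, W_v, a_k, a_v, use_a_k, use_a_v)
    return np.allclose(run(x[reverse]), run(x)[reverse])


print(equivariant(False, False))  # no position information at all
print(equivariant(True, False))   # relative positions in the compatibility only
```

With both switches off the check always holds, because the attention sum over $j$ does not depend on token order. Once $a^K_{ij}$ is included, reversing the sequence negates every offset $j - i$, so unless the learned table happens to be symmetric in the offset, the outputs change and word order becomes visible to the model.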

Implications and Future Work

Integrating relative position representations into self-attention yields consistent gains on these machine translation tasks. The authors see the relation-aware formulation as a starting point for extending the Transformer to arbitrary directed, labeled graph inputs, and for experimenting with nonlinear compatibility functions that combine input and edge representations. For both extensions, they identify efficient implementation as a key consideration.

Conclusion

This paper extends the Transformer by incorporating relative position representations into self-attention, improving machine translation quality. The results show that relative position information can be modeled effectively inside the attention mechanism itself, and the relation-aware formulation points toward richer graph-structured inputs.