SuperNeurons: Dynamic GPU Memory Management for Deep Learning

The paper "SuperNeurons: Dynamic GPU Memory Management for Training Deep Neural Networks" addresses a pressing challenge in deep learning: the limited GPU memory available for training large, complex neural networks. This limitation forces practitioners either to simplify their network architectures, which may compromise accuracy, or to adopt non-trivial strategies for distributing computation across multiple GPUs, which diverts effort away from the core machine learning problem.

Overview of Contributions

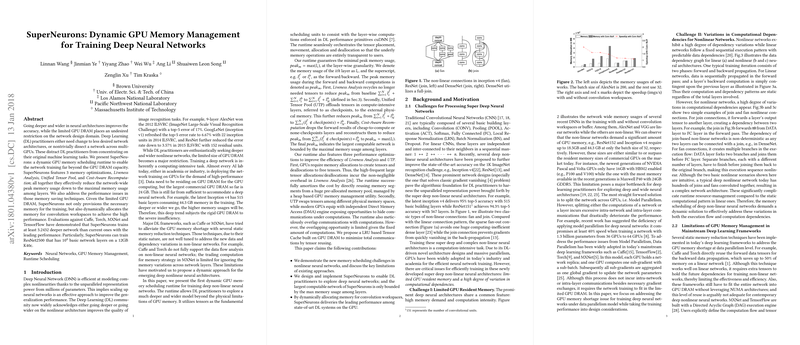

SuperNeurons presents a dynamic GPU memory scheduling runtime that lets networks train beyond the physical limits of GPU DRAM. The runtime introduces three core memory optimization techniques that together substantially reduce peak memory usage:

- Liveness Analysis: This optimization recycles memory by tracking when each tensor is last used and freeing it as soon as no later forward or backward computation needs it, which significantly reduces the network's peak memory usage.

- Unified Tensor Pool (UTP): The UTP offloads tensors from GPU DRAM to external memory (such as CPU DRAM) and schedules these transfers dynamically so that they overlap with computation, trading extra data movement for lower GPU memory usage with little loss of speed.

- Cost-Aware Recomputation: This technique selectively recomputes parts of the network during back-propagation instead of keeping their outputs in memory, reducing memory usage while adding minimal computational overhead, so that training speed remains competitive.
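The liveness idea from the first bullet can be illustrated with a minimal sketch, assuming a toy model: one training step is a fixed list of operations (forward ops followed by backward ops), each reading named tensors and writing one new tensor, with hypothetical sizes in arbitrary units. The sketch records each tensor's last use, then simulates execution with and without freeing dead tensors. The names `Op`, `last_uses`, and `peak_memory` are illustrative and are not taken from the SuperNeurons code.

```python
from typing import Dict, FrozenSet, List, NamedTuple, Tuple


class Op(NamedTuple):
    name: str
    inputs: Tuple[str, ...]  # tensors this op reads
    output: str              # tensor this op writes


def last_uses(ops: List[Op]) -> Dict[str, int]:
    """Index of the last op that touches each tensor (reads it, or writes it if never read)."""
    last: Dict[str, int] = {}
    for i, op in enumerate(ops):
        for t in op.inputs:
            last[t] = i
        last.setdefault(op.output, i)
    return last


def peak_memory(ops: List[Op], sizes: Dict[str, int], use_liveness: bool,
                keep: FrozenSet[str] = frozenset()) -> int:
    """Simulate execution and return the peak total size of resident tensors."""
    last = last_uses(ops)
    produced = {op.output for op in ops}
    # Graph inputs (e.g. the data batch) are resident before the first op runs.
    live = {t for op in ops for t in op.inputs if t not in produced}
    peak = sum(sizes[t] for t in live)
    for i, op in enumerate(ops):
        live.add(op.output)  # inputs and output coexist while the op runs
        peak = max(peak, sum(sizes[t] for t in live))
        if use_liveness:
            # Free every tensor whose last use has passed, except ones we must keep.
            live = {t for t in live if last[t] > i or t in keep}
    return peak


# Hypothetical two-layer network: forward, loss gradient, then backward.
ops = [
    Op("fwd1", ("x",), "a1"),
    Op("fwd2", ("a1",), "a2"),
    Op("loss_grad", ("a2",), "g2"),  # gradient w.r.t. a2
    Op("bwd2", ("g2", "a1"), "g1"),  # backward of fwd2 needs its input a1
    Op("bwd1", ("g1", "x"), "gx"),   # backward of fwd1 needs its input x
]
sizes = {"x": 1, "a1": 2, "a2": 2, "g2": 2, "g1": 2, "gx": 1}

print(peak_memory(ops, sizes, use_liveness=False))              # 10
print(peak_memory(ops, sizes, use_liveness=True, keep={"gx"}))  # 7
```

On this five-op graph, peak usage drops from 10 to 7 units because `a2`, `a1`, and `g2` are released as soon as no later step reads them, instead of accumulating until the end of the step.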
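The benefit of overlapping offloads with computation, as described for the UTP, can be estimated with a simplified two-stream model. This is an assumption for illustration, not the UTP's actual scheduler: the GPU computes layers back to back, a single copy engine offloads each layer's output once that layer finishes, and the forward pass ends when both streams are idle. Per-layer times are hypothetical, and a transfer time of 0 marks a layer whose output is not offloaded. The function name `forward_time` is illustrative.

```python
from typing import Sequence


def forward_time(compute: Sequence[float], transfer: Sequence[float], overlap: bool = True) -> float:
    """Forward-pass time under a toy model with one compute stream and one copy engine.

    compute[i]  -- time to run layer i on the GPU
    transfer[i] -- time to offload layer i's output to host memory (0 = not offloaded)
    """
    if not overlap:
        # Serial baseline: every offload blocks the next layer.
        return float(sum(compute) + sum(transfer))
    compute_done = 0.0  # time at which the compute stream finishes the current layer
    copy_free = 0.0     # time at which the copy engine becomes idle
    for c, x in zip(compute, transfer):
        compute_done += c
        # The offload starts once the layer's output exists and the copy engine is free;
        # meanwhile the compute stream moves on to the next layer.
        start = max(compute_done, copy_free)
        copy_free = start + x
    return max(compute_done, copy_free)


print(forward_time([4, 4, 4], [2, 2, 2], overlap=False))  # 18.0
print(forward_time([4, 4, 4], [2, 2, 2]))                 # 14.0
print(forward_time([1, 1, 1], [3, 3, 3]))                 # 10.0
```

When each transfer is shorter than the following layer's compute, the copies hide behind computation and only the last one is exposed (14 versus 18 time units above); when transfers dominate, the copy engine becomes the bottleneck, which is why a scheduler has to be selective about what it offloads.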

Each of these techniques serves the paper's overarching goal: enabling the training of deeper and wider neural networks. The authors report that SuperNeurons can train ResNet2500, a network with roughly 10^4 basic layers, on a single 12 GB GPU, far beyond what contemporary frameworks could fit within the same memory.

Numerical Highlights

Through extensive evaluations, the authors report that SuperNeurons can train models three times deeper than those supported by existing frameworks such as TensorFlow, Caffe, MXNet, and Torch. This result follows from its dynamic memory management, which reduces the network-wide peak memory usage to roughly the largest memory requirement of any single layer, a substantial improvement under typical deep learning resource constraints.
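One way to see how recomputation can hold peak memory near the largest single-layer requirement is a toy per-segment choice. The model below is an illustrative assumption, not the paper's exact algorithm: the layers between two checkpoints form a segment, and checkpoint activations are assumed to live off the GPU (for example, offloaded), so they are not counted. A "speed-centric" option recomputes the segment once and keeps all its activations; a "memory-centric" option recomputes from the checkpoint for every backward step and holds one activation at a time. The cost-aware rule picks the speed-centric option whenever the segment fits under a given per-layer peak `layer_peak`, and the memory-centric option otherwise. The function names, sizes, and cost counts are hypothetical.

```python
from typing import List, Tuple

Plan = List[Tuple[str, float, int]]  # (strategy, segment peak memory, layer forwards recomputed)


def plan_recomputation(segments: List[List[float]], layer_peak: float) -> Plan:
    """Choose a recomputation strategy per segment under a toy memory/cost model."""
    plan: Plan = []
    for acts in segments:
        n = len(acts)
        seg_mem = sum(acts)
        if seg_mem <= layer_peak:
            # Speed-centric: one forward pass over the segment, keep every activation.
            plan.append(("speed", seg_mem, n))
        else:
            # Memory-centric: for backward step j, recompute layers 1..j from the
            # checkpoint, keeping one activation at a time (toy memory model).
            plan.append(("memory", max(acts), n * (n + 1) // 2))
    return plan


def plan_peak(plan: Plan) -> float:
    """Peak memory of the whole plan is the worst segment's peak."""
    return max(mem for _, mem, _ in plan)


segments = [[1.0, 1.0], [4.0, 4.0, 4.0]]
plan = plan_recomputation(segments, layer_peak=4.0)
print(plan)                                         # [('speed', 2.0, 2), ('memory', 4.0, 6)]
print(plan_peak(plan), sum(map(sum, segments)))     # 4.0 14.0
```

In this example the peak falls from 14 units (keeping every activation) to 4, the largest single activation, while only the oversized segment pays the quadratic recomputation cost; small segments keep the cheaper speed-centric path.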

Practical and Theoretical Implications

Practically, SuperNeurons matters for both academic research and industrial applications, particularly in fields where model accuracy benefits from greater architectural depth and complexity, such as computer vision and natural language processing. Theoretically, this work points to new ways for deep learning frameworks to handle dynamic memory allocation and encourages further exploration of memory-efficient algorithms.

Future Directions

While SuperNeurons significantly extends the capacity for training large models on limited hardware, the applicability of its techniques to evolving hardware architectures (e.g., Google's TPU or future GPUs with novel memory hierarchies) would be a pertinent direction for future research. Furthermore, extending this approach to other critical constraints in deep learning, such as energy consumption or distributed training across heterogeneous hardware, could improve the robustness and scalability of neural network training in diverse environments.

In summary, SuperNeurons represents a substantial step forward in GPU memory management, expanding what deep learning practitioners can train on a fixed hardware budget and providing a foundation for future advancements in the field.