Semantic Textual Similarity: Multilingual and Cross-lingual Advances at SemEval-2017

The paper "SemEval-2017 Task 1: Semantic Textual Similarity Multilingual and Cross-lingual Focused Evaluation" reports the findings of the 2017 semantic textual similarity (STS) shared task. The task evaluates sentence-level semantic similarity on multilingual and cross-lingual sentence pairs, covering Arabic, Spanish, and Turkish alongside English. It serves as a benchmark for measuring how well current NLP methods carry over from English to other languages and to cross-lingual settings.

Overview of the STS Task

Semantic textual similarity (STS) measures the degree of semantic equivalence between two sentences. It is relevant to machine translation (MT), summarization, question answering (QA), and semantic search. Unlike textual entailment and paraphrase detection, which are usually framed as classification, STS uses a graded scale (0 to 5 in the STS shared tasks), so it can capture partial overlap in meaning rather than a yes-or-no decision.
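To make the idea of a graded score concrete, here is a minimal sketch of a word-overlap similarity mapped onto a graded range. It is an illustration only, not a system described in the paper: the function names `binary_bow_cosine` and `graded_score`, the regex tokenizer, and the linear rescaling by `scale_max` are all assumptions made for this example.

```python
import math
import re


def binary_bow_cosine(sentence_a: str, sentence_b: str) -> float:
    """Cosine similarity between binary bag-of-words vectors, in [0, 1]."""
    # Hypothetical tokenizer: lowercase, then split on word characters.
    tokens_a = set(re.findall(r"\w+", sentence_a.lower()))
    tokens_b = set(re.findall(r"\w+", sentence_b.lower()))
    if not tokens_a or not tokens_b:
        return 0.0
    # For binary vectors the dot product is the number of shared word types,
    # and each vector norm is the square root of its number of word types.
    shared = len(tokens_a & tokens_b)
    return shared / math.sqrt(len(tokens_a) * len(tokens_b))


def graded_score(sentence_a: str, sentence_b: str, scale_max: float = 5.0) -> float:
    """Rescale the cosine to a graded range (scale_max is an illustrative choice)."""
    return scale_max * binary_bow_cosine(sentence_a, sentence_b)


if __name__ == "__main__":
    print(graded_score("A man is playing a guitar.", "A man plays the guitar."))
    print(graded_score("A man is playing a guitar.", "The stock market fell."))
```

A score like this rewards shared vocabulary only, so it cannot tell apart sentences that use the same words with different meanings; it is shown here to contrast a graded output with a binary label, not as a competitive method.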

Task Objectives

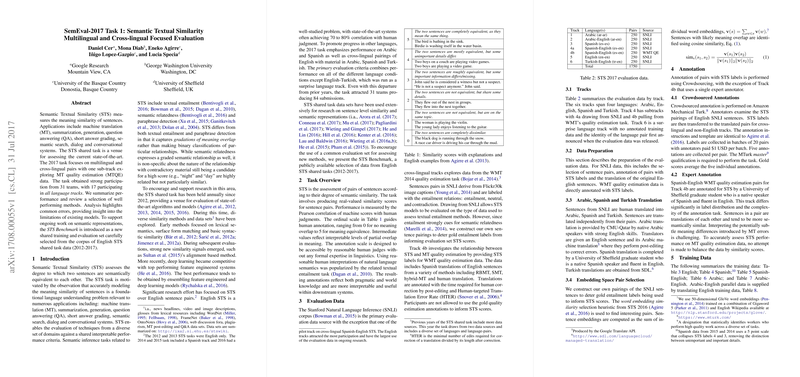

The 2017 iteration of the STS shared task extended the traditionally English-focused evaluation to Arabic, Spanish, and Turkish, in both monolingual and cross-lingual settings. Specifically, the task included:

- Monolingual tracks in Arabic, Spanish, and English.

- Cross-lingual tracks pairing English with Arabic, Spanish, and Turkish.

- An additional sub-track exploring MT quality estimation (MTQE).

Evaluation Data and Methodology

Data for the 2017 evaluation were drawn primarily from the Stanford Natural Language Inference (SNLI) corpus, supplemented by data from the WMT 2014 quality estimation task for the MTQE track. Human STS labels were collected from crowdsourced workers and expert annotators and serve as the gold standard for evaluation.

Participants and Results

The task garnered significant participation, with 31 teams and 84 submissions. Among these, 17 teams participated in all language tracks. The primary evaluation metric was Pearson correlation between machine-assigned scores and human annotations. The overall top performers were ECNU, BIT, and HCTI, whose methods involved sophisticated ensembles of feature-engineered models and deep learning approaches. These methods utilized a variety of techniques, such as:

- Lexical similarity

- Syntactic features

- Semantic alignments

- Embeddings from deep neural networks

The top system from ECNU, for instance, applied an ensemble of models combining Random Forest, Gradient Boosting, and XGBoost regression techniques, enriched by various semantic and syntactic features, and complemented by deep learning models using LSTM and deep averaging networks.
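As a rough illustration of how an ensemble combines several models, the sketch below averages the scores of independent scorers. This is not ECNU's implementation: plain averaging is assumed here as one common way to combine regressors, and the type alias `Scorer`, the function `ensemble_score`, and the two toy scorers are hypothetical stand-ins for trained models.

```python
from statistics import fmean
from typing import Callable, Sequence

# A scorer maps a sentence pair to a similarity score (hypothetical interface).
Scorer = Callable[[str, str], float]


def ensemble_score(scorers: Sequence[Scorer], sentence_a: str, sentence_b: str) -> float:
    """Average the predictions of several scorers for one sentence pair."""
    if not scorers:
        raise ValueError("at least one scorer is required")
    return fmean([scorer(sentence_a, sentence_b) for scorer in scorers])


def jaccard_scorer(sentence_a: str, sentence_b: str) -> float:
    """Toy scorer: word-set Jaccard overlap rescaled to 0..5."""
    a, b = set(sentence_a.lower().split()), set(sentence_b.lower().split())
    return 5.0 * len(a & b) / len(a | b) if a | b else 0.0


def length_ratio_scorer(sentence_a: str, sentence_b: str) -> float:
    """Toy scorer: ratio of shorter to longer word count rescaled to 0..5."""
    n_a, n_b = len(sentence_a.split()), len(sentence_b.split())
    return 5.0 * min(n_a, n_b) / max(n_a, n_b) if max(n_a, n_b) else 0.0


if __name__ == "__main__":
    pair = ("a dog runs in the park", "a dog is running in a park")
    print(ensemble_score([jaccard_scorer, length_ratio_scorer], *pair))
```

In a real system each scorer would be a trained regressor or neural model, and the combination could be weighted or learned rather than a plain mean.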

Comparative Analysis

Performance varied considerably across tracks, with higher correlations on monolingual tracks than on cross-lingual ones. The hardest tracks were the cross-lingual ones involving Turkish and Arabic, which points to areas needing further research. Correlations were also lower on the MTQE sub-track, which suggests that similarity judgments do not transfer easily to sentence pairs drawn from MT output.
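The correlations compared here are Pearson correlations between system scores and human annotations, computed separately for each track. A minimal sketch of that computation follows. The helper names `pearson_r` and `per_track_pearson`, the track labels, and all numbers in the toy data are made up for illustration and are not results from the paper.

```python
import math
from typing import Dict, Sequence, Tuple


def pearson_r(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length score lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant scores")
    return cov / math.sqrt(var_x * var_y)


def per_track_pearson(
    tracks: Dict[str, Tuple[Sequence[float], Sequence[float]]]
) -> Dict[str, float]:
    """Compute Pearson's r for each track from (system, gold) score lists."""
    return {name: pearson_r(system, gold) for name, (system, gold) in tracks.items()}


if __name__ == "__main__":
    # Toy data only: (system scores, human scores) per hypothetical track.
    toy_tracks = {
        "en-en": ([4.8, 1.0, 3.1, 0.2], [5.0, 1.5, 3.0, 0.0]),
        "tr-en": ([2.0, 3.5, 1.0, 4.0], [4.0, 3.0, 0.5, 2.5]),
    }
    for track, r in per_track_pearson(toy_tracks).items():
        print(f"{track}: r = {r:.3f}")
```

Because Pearson's r is invariant to linear rescaling, a system is rewarded for ranking and spacing pairs like the annotators do, not for matching the exact numeric range of the human scores.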

Introduction of the STS Benchmark

To support ongoing and future research, the paper introduces the STS Benchmark, a curated selection of English STS data from the 2012-2017 tasks. The dataset gives researchers a common basis for evaluating new models and comparing them with earlier ones.

Implications and Future Directions

The findings from SemEval-2017 show that semantic textual similarity remains considerably harder outside monolingual English. The broad participation and the range of methods submitted reflect the community's interest in multilingual and cross-lingual evaluation. Areas for further work include model robustness in low-resource languages, better cross-lingual representation learning, and closer integration of MT and STS evaluation.

Continued refinement of benchmarks like the STS Benchmark will make emerging approaches easier to compare. This matters for extending what NLP models can do beyond English and for building applications that serve a wider range of languages.