Analysis of "Virtual to Real Reinforcement Learning for Autonomous Driving"

This paper addresses one of the central challenges in autonomous driving: training reinforcement learning (RL) models so that they transfer safely and effectively from virtual simulation to real-world driving. The authors propose a novel realistic translation network that aims to bridge the visual domain gap between virtual and real environments. The method makes virtual training more applicable by ensuring that the visual inputs to RL models closely resemble those seen in the real world.

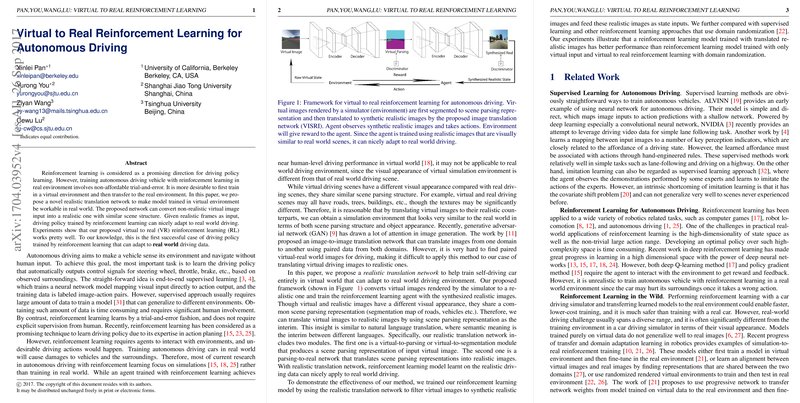

The authors approach the problem with a two-part image translation technique built on Generative Adversarial Networks (GANs). The first stage converts virtual images into scene parsing representations, and the second stage translates these parsing representations into realistic images. The result is a synthesized dataset that keeps the scene structure of the driving environment and looks like real-world imagery, while retaining the safety and flexibility of training in a virtual simulator. Autonomous driving policies can then be trained with RL on this realistic imagery, which mitigates the discrepancies that typically arise when a model trained in a virtual environment is deployed in the real world.
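The two-stage flow described above can be sketched as a simple composition of functions. This is only an illustrative sketch: the names `toy_parser`, `toy_renderer`, and `translate`, the two-class label set, and the brightness threshold are all hypothetical stand-ins, and in the paper both stages are learned networks rather than hand-written rules.

```python
from typing import Callable, List, Tuple

# Hypothetical types: an RGB image as rows of (r, g, b) tuples, and a
# parsing map as rows of integer class labels.
Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]
Parsing = List[List[int]]

ROAD, OTHER = 0, 1  # illustrative label set, not the paper's


def toy_parser(virtual: Image) -> Parsing:
    """Stand-in for stage 1 (virtual image -> scene parsing).

    Assumption: dark pixels are treated as road. A real system would use
    a learned segmentation network here.
    """
    return [[ROAD if sum(px) < 384 else OTHER for px in row] for row in virtual]


def toy_renderer(parsing: Parsing) -> Image:
    """Stand-in for stage 2 (scene parsing -> realistic image).

    Assumption: each class is painted with one fixed colour. A real system
    would use a trained GAN generator here.
    """
    palette = {ROAD: (90, 90, 95), OTHER: (140, 170, 120)}
    return [[palette[label] for label in row] for row in parsing]


def translate(
    virtual: Image,
    parser: Callable[[Image], Parsing],
    renderer: Callable[[Parsing], Image],
) -> Image:
    """Chain the two stages: virtual frame -> parsing -> realistic frame."""
    return renderer(parser(virtual))


# Usage: a 1x2 virtual frame with one dark and one bright pixel.
frame = [[(10, 10, 10), (250, 250, 250)]]
realistic = translate(frame, toy_parser, toy_renderer)
```

Passing the stages in as callables keeps the intermediate parsing representation explicit, which is the point of the design: the second stage only ever sees scene structure, not the virtual renderer's textures.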

The key experimental results indicate that models trained on the synthesized realistic images outperform those trained purely on virtual data or with domain randomization when transferred to real-world inputs. Specifically, the paper reports a substantial improvement in the accuracy of steering action prediction across different driving scenarios. RL policies trained on the synthesized data come closer to the performance benchmark set by supervised learning models trained directly on large amounts of real-world data, while requiring significantly less manual data collection.
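To make the notion of steering action prediction accuracy concrete, here is a minimal sketch of one way such a metric could be computed. The three-way discretization, the `dead_zone` threshold, the sign convention (negative means left), and the function names are assumptions for illustration, not the paper's evaluation protocol.

```python
from typing import Sequence


def steering_class(angle: float, dead_zone: float = 0.05) -> str:
    """Map a continuous steering value to a coarse action label.

    Assumption: negative values steer left, positive values steer right,
    and values within +/- dead_zone count as going straight.
    """
    if angle < -dead_zone:
        return "left"
    if angle > dead_zone:
        return "right"
    return "straight"


def action_accuracy(
    predicted: Sequence[float],
    ground_truth: Sequence[float],
    dead_zone: float = 0.05,
) -> float:
    """Fraction of frames where predicted and labeled actions agree."""
    if len(predicted) != len(ground_truth):
        raise ValueError("predicted and ground_truth must have equal length")
    if not predicted:
        raise ValueError("need at least one frame")
    hits = sum(
        steering_class(p, dead_zone) == steering_class(g, dead_zone)
        for p, g in zip(predicted, ground_truth)
    )
    return hits / len(predicted)


# Usage: the last frame disagrees (right vs. left), so 3 of 4 frames match.
score = action_accuracy([-0.3, 0.0, 0.4, 0.2], [-0.1, 0.02, 0.5, -0.2])
```

Comparing discretized actions rather than raw angles means small numerical differences inside the same action class do not count as errors.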

These findings underscore the potential of synthesized data to accelerate and improve RL training for autonomous driving. The translation step narrows the visual gap between simulation and reality, allowing RL agents to generalize better when they encounter complex, varied real-world inputs. The approach also avoids the risks of real-world trial-and-error learning, which reinforcement learning methods would otherwise require.

In terms of technical contributions, the paper provides a detailed exploration of image-to-image translation within the GAN framework, adapted to this setting. It acknowledges that the translated outputs show limited variability and suggests future work on generating outputs with more diverse appearance, which would further reduce possible biases in the inputs seen by the RL agent.
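For orientation, a commonly used objective for GAN-based image-to-image translation is the conditional GAN loss combined with an L1 reconstruction term. It is given here as an assumed, generic formulation rather than as the paper's exact loss. The symbols are illustrative: $x$ is the input (for example a parsing map), $y$ the corresponding real image, $z$ a noise vector, $G$ the generator, $D$ the discriminator, and $\lambda$ a weighting hyperparameter.

$$
\mathcal{L}_{\mathrm{cGAN}}(G, D) = \mathbb{E}_{x,y}\big[\log D(x, y)\big] + \mathbb{E}_{x,z}\big[\log\big(1 - D(x, G(x, z))\big)\big]
$$

$$
\mathcal{L}_{L1}(G) = \mathbb{E}_{x,y,z}\big[\lVert y - G(x, z) \rVert_1\big], \qquad
G^{*} = \arg\min_{G} \max_{D} \; \mathcal{L}_{\mathrm{cGAN}}(G, D) + \lambda \, \mathcal{L}_{L1}(G)
$$

In formulations of this kind, the generator can learn to largely ignore $z$, so each input maps to nearly a single output. That is one plausible source of the limited output variability noted above.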

Looking forward, enhancing the robustness of this domain adaptation framework could spur practical, widespread application in training autonomous vehicles entirely within virtual environments. Incorporating additional real-world complexities and diversifying input-output mappings will be essential steps toward refining this promising framework for autonomous vehicle deployment.