Overview of "Video Captioning with Transferred Semantic Attributes"

The paper "Video Captioning with Transferred Semantic Attributes" presents a novel approach for generating natural language descriptions of videos, a task that bridges the areas of computer vision and natural language processing. The proposed method integrates semantic attributes learned from both images and videos into a Long Short-Term Memory (LSTM) framework, termed LSTM-TSA, with the goal of improving video captioning.

Methodology

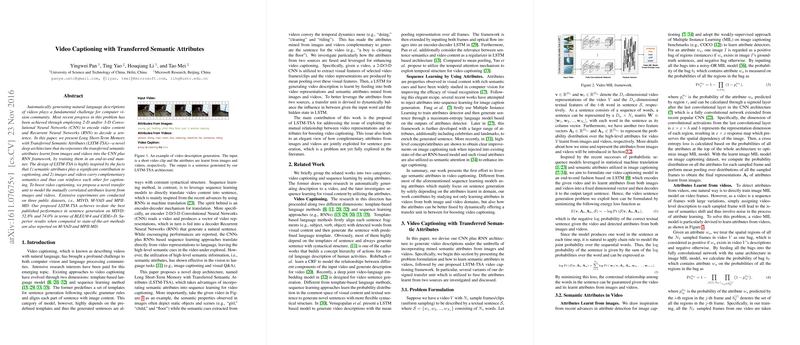

The core idea behind LSTM-TSA is to use semantic attributes, which act as high-level semantic cues, to enhance the conventional CNN-RNN video captioning architecture. The method rests on two observations:

- Semantic Importance: Semantic attributes are crucial for effective captioning because they encode high-level, meaningful information that raw visual features alone may not make explicit.

- Complementary Semantics: Images and videos contribute complementary semantic information. Images are typically better at capturing static scenes and objects, whereas videos convey dynamic actions and temporal changes. By fusing these attributes, the model can generate richer and more contextually relevant captions.

To implement this, the authors devise a transfer mechanism that integrates the attributes into the LSTM dynamically, based on the current input and the state of the network. This is achieved through a 'transfer unit' that regulates how much the image-derived and video-derived attributes each influence the model at a given step.
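
As a rough illustration of this gating idea, and not the paper's actual equations, the sketch below computes a single gate from the current input and the previous hidden state, then uses it to blend image-derived and video-derived attribute scores. The function names `transfer_gate` and `fuse_attributes`, the use of one scalar gate, and all weights and vectors are assumptions made for illustration only; the real transfer unit is integrated into the LSTM and learned end to end.

```python
import math


def sigmoid(z):
    """Logistic function, maps any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def dot(u, v):
    """Dot product of two equal-length lists."""
    return sum(a * b for a, b in zip(u, v))


def transfer_gate(x_t, h_prev, w_x, w_h, bias):
    """Hypothetical scalar gate driven by the current input x_t and the
    previous hidden state h_prev (an assumption, not the paper's formula)."""
    return sigmoid(dot(w_x, x_t) + dot(w_h, h_prev) + bias)


def fuse_attributes(a_img, a_vid, gate):
    """Blend image-derived and video-derived attribute scores.
    gate close to 1 favours image attributes, close to 0 favours video ones."""
    return [gate * ai + (1.0 - gate) * av for ai, av in zip(a_img, a_vid)]


# Toy values, chosen only to make the example runnable.
a_img = [0.9, 0.1, 0.3]   # e.g. scores for static objects and scenes
a_vid = [0.2, 0.8, 0.3]   # e.g. scores for actions and motion
x_t = [0.5, -1.0]         # current input (toy embedding)
h_prev = [0.0, 0.25]      # previous hidden state (toy)

g = transfer_gate(x_t, h_prev, w_x=[1.0, 0.5], w_h=[0.3, -0.2], bias=0.0)
fused = fuse_attributes(a_img, a_vid, g)
print(g, fused)
```

Because the gate depends on `x_t` and `h_prev`, the blend can change from one decoding step to the next, which is the property the paragraph above describes.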

Experimental Results

The LSTM-TSA model was evaluated on three public datasets: MSVD, M-VAD, and MPII-MD. The headline numerical result is:

- On the MSVD dataset, it reached a BLEU@4 score of 52.8% and a CIDEr-D score of 74.0%, which were the best reported results at the time of publication.

Compared with several state-of-the-art models such as LSTM-E, GRU-RCN and HRNE, LSTM-TSA consistently performed better across the reported metrics. The paper also shows that attributes from a single source (images or videos alone) already improve sentence generation, and that the joint model generally outperforms the single-source variants.
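
For readers unfamiliar with BLEU@4, the following is a minimal sketch of a sentence-level, unsmoothed version of the metric: the geometric mean of clipped 1- to 4-gram precisions, multiplied by a brevity penalty. It is an illustration only and is not the evaluation code behind the numbers above, which are corpus-level scores; the function names `ngrams` and `bleu4` and the example sentences are made up for this sketch.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Count all n-grams of length n in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu4(candidate, references):
    """Sentence-level BLEU@4 without smoothing (returns 0.0 if any
    n-gram order has no match)."""
    log_precisions = []
    for n in range(1, 5):
        cand_counts = ngrams(candidate, n)
        total = sum(cand_counts.values())
        if total == 0:
            return 0.0
        # For each n-gram, the largest count seen in any single reference.
        max_ref = Counter()
        for ref in references:
            for gram, count in ngrams(ref, n).items():
                max_ref[gram] = max(max_ref[gram], count)
        # Clip candidate counts so repeated n-grams are not over-rewarded.
        clipped = sum(min(count, max_ref[gram])
                      for gram, count in cand_counts.items())
        if clipped == 0:
            return 0.0
        log_precisions.append(math.log(clipped / total))
    # Brevity penalty, using the reference length closest to the candidate.
    c = len(candidate)
    r = min((abs(len(ref) - c), len(ref)) for ref in references)[1]
    bp = 1.0 if c > r else math.exp(1.0 - r / c)
    return bp * math.exp(sum(log_precisions) / 4)


reference = "a man is playing a guitar".split()
candidate = "a man is playing".split()
print(bleu4(candidate, [reference]))
```

In this toy case every n-gram in the candidate appears in the reference, so the score is determined by the brevity penalty alone, which penalizes the candidate for being shorter than the reference.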

Theoretical and Practical Implications

Theoretically, this work underscores the value of transferring semantic attributes into deep learning architectures for video captioning. The approach argues for using image-centric and video-centric semantic understanding jointly rather than in isolation. It opens pathways for further exploration of multi-source knowledge fusion in neural networks, potentially influencing related tasks such as multi-modal translation and holistic scene understanding.

Practically, LSTM-TSA advances automatic video annotation, which has applications in media content retrieval, autonomous surveillance systems, and assistive technologies for the visually impaired.

Future Directions

The authors hint at future enhancements, including an attention mechanism that would refine how the model focuses on different video segments while generating descriptions. This direction aligns with ongoing trends in neural attention research, and exploring it could lead to more precise video understanding systems. Additionally, extending the approach to longer narratives or sequences of descriptions remains an open challenge that could make automatic video narration more diverse and detailed.

In conclusion, "Video Captioning with Transferred Semantic Attributes" contributes a compelling framework for video captioning, both demonstrating promising results and offering a foundation for future development in video understanding algorithms.