Overview of "Jointly Modeling Embedding and Translation to Bridge Video and Language"

The paper proposes Long Short-Term Memory with Visual-Semantic Embedding (LSTM-E), a framework for automatic video description generation. The central problem is generating natural language sentences that are both coherent and relevant to the video content, which is a challenging task in multimedia processing.

Framework and Methodology

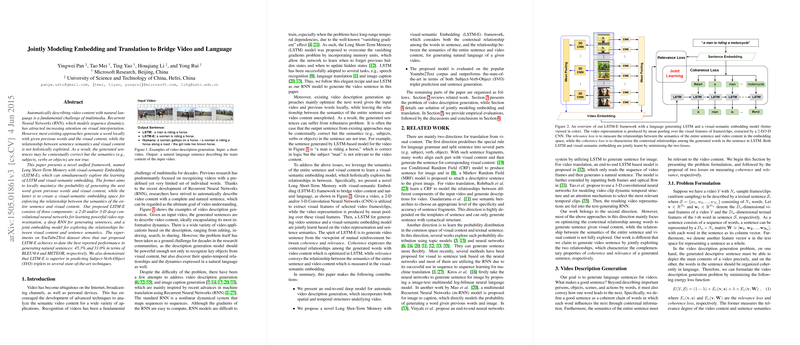

LSTM-E combines two techniques: Long Short-Term Memory (LSTM) networks and visual-semantic embedding models. The LSTM models the local relationship between each word, the words before it, and the video, while the embedding models the global relationship between the whole sentence and the video content.

- LSTM Component: The LSTM models the sequential dependencies in the sentence, predicting the next word from the previous words and the visual cues. In effect it is a probabilistic sequence model optimized for coherence.

- Visual-Semantic Embedding: Video and text features are both mapped into a joint embedding space, so that the semantic alignment between the entire sentence and the video content can be measured directly. This embedding checks the relevance of the generated sentence as a whole, which improves the semantic accuracy of the description.
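
The two objectives described above can be combined into a single training loss. The following is a minimal sketch, not the paper's implementation: it assumes the relevance term is a squared Euclidean distance between the embedded video and sentence vectors, the coherence term is the negative log-likelihood of the sentence's words, and the two are mixed by a hypothetical tradeoff weight `lam`. All function and parameter names are illustrative.

```python
import math
from typing import Sequence


def relevance_loss(video_emb: Sequence[float], sentence_emb: Sequence[float]) -> float:
    """Squared Euclidean distance between video and sentence in the joint space."""
    return sum((v - s) ** 2 for v, s in zip(video_emb, sentence_emb))


def coherence_loss(word_probs: Sequence[float]) -> float:
    """Negative log-likelihood of the reference words.

    word_probs[t] is the probability the language model assigned to the
    correct word at step t, given the previous words and the video.
    """
    return -sum(math.log(p) for p in word_probs)


def joint_loss(
    video_emb: Sequence[float],
    sentence_emb: Sequence[float],
    word_probs: Sequence[float],
    lam: float = 0.5,  # hypothetical tradeoff weight, assumed to lie in [0, 1]
) -> float:
    """Weighted sum of the global (relevance) and local (coherence) terms."""
    return (1.0 - lam) * relevance_loss(video_emb, sentence_emb) + lam * coherence_loss(
        word_probs
    )
```

Under this sketch, `lam=0` trains only the embedding distance, while `lam=1` optimizes only the word-level likelihood; intermediate values trade off the two.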

The framework has three key components:

- 2-D and 3-D deep CNNs for feature extraction from video data.

- A deep RNN for sentence generation.

- A joint embedding model that aligns the video representation with sentence semantics.
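
A rough sketch of how the three components might fit together at inference time. It assumes per-frame CNN features have already been extracted, averages them into one video vector (mean pooling is an assumption here), projects that vector into the embedding space with a hypothetical matrix, and decodes word by word. `toy_next_word_probs` is a stand-in for a trained LSTM, and greedy decoding is chosen for brevity rather than taken from the paper.

```python
from typing import Callable, Dict, List, Sequence

Vector = List[float]


def mean_pool(frame_features: Sequence[Vector]) -> Vector:
    """Average per-frame CNN features into a single video-level vector."""
    n = len(frame_features)
    return [sum(column) / n for column in zip(*frame_features)]


def project(matrix: Sequence[Vector], x: Vector) -> Vector:
    """Linear map into the joint embedding space (matrix-vector product)."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in matrix]


def greedy_decode(
    video_emb: Vector,
    next_word_probs: Callable[[Vector, List[str]], Dict[str, float]],
    max_len: int = 20,
    end_token: str = "<eos>",
) -> List[str]:
    """Pick the most probable next word until the end token or max_len."""
    words: List[str] = []
    for _ in range(max_len):
        probs = next_word_probs(video_emb, words)
        word = max(probs, key=probs.get)
        if word == end_token:
            break
        words.append(word)
    return words


def toy_next_word_probs(video_emb: Vector, prefix: List[str]) -> Dict[str, float]:
    """Hypothetical stand-in for the LSTM: follows a fixed script, ignores the video."""
    script = ["a", "man", "runs", "<eos>"]
    target = script[min(len(prefix), len(script) - 1)]
    return {w: (0.7 if w == target else 0.1) for w in script}


# Two frames with 2-D features, and an illustrative 2x2 embedding matrix.
video_vector = mean_pool([[1.0, 2.0], [3.0, 4.0]])
video_embedding = project([[1.0, 0.0], [0.0, 2.0]], video_vector)
sentence = greedy_decode(video_embedding, toy_next_word_probs)
```

In a real system the stand-in would be replaced by the trained sentence generator, and the frame features would come from the 2-D and 3-D CNNs.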

Experimental Results

On the YouTube2Text dataset, LSTM-E outperformed the models it was compared against, reaching BLEU@4 and METEOR scores of 45.3% and 31.0%, respectively. It also predicted Subject-Verb-Object (SVO) triplets more accurately than contemporary techniques.
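
For readers unfamiliar with the metric, BLEU@4 is based on the overlap of 1- to 4-grams between a generated sentence and a reference. The sketch below is a simplified sentence-level version with a single reference and no smoothing. Reported scores are normally computed at corpus level against multiple references, so this is illustrative only and will not reproduce the numbers above.

```python
import math
from collections import Counter
from typing import Sequence


def ngrams(tokens: Sequence[str], n: int) -> Counter:
    """Count all contiguous n-grams in a token sequence."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu(candidate: Sequence[str], reference: Sequence[str], max_n: int = 4) -> float:
    """Sentence-level BLEU against one reference, without smoothing."""
    log_precisions = []
    for n in range(1, max_n + 1):
        cand_counts = ngrams(candidate, n)
        ref_counts = ngrams(reference, n)
        # Clipped matches: each candidate n-gram counts at most as often
        # as it appears in the reference.
        overlap = sum(min(count, ref_counts[gram]) for gram, count in cand_counts.items())
        total = sum(cand_counts.values())
        if overlap == 0 or total == 0:
            return 0.0  # without smoothing, any zero precision gives zero BLEU
        log_precisions.append(math.log(overlap / total))

    # Brevity penalty: penalize candidates shorter than the reference.
    c, r = len(candidate), len(reference)
    brevity_penalty = 1.0 if c > r else math.exp(1.0 - r / c)
    # Geometric mean of the n-gram precisions, scaled by the penalty.
    return brevity_penalty * math.exp(sum(log_precisions) / max_n)


score = bleu("a man is running".split(), "a man is running fast".split())
```

Here every n-gram of the candidate appears in the reference, so the score is determined by the brevity penalty alone.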

Implications and Future Directions

Combining LSTM with visual-semantic embedding lets the model optimize coherence and semantic relevance together rather than coherence alone. Generating complete sentences from video content is useful for applications such as video indexing, retrieval, and content generation.

Future work could use deeper RNN architectures and larger training datasets to improve robustness and performance. Exploring alternative video representations and extending the framework to more diverse video types may also broaden its range of applications.

In summary, LSTM-E aligns video content with natural language by modeling both local word sequences and global semantic relationships, with strong results on YouTube2Text. Further work on this framework could advance multimedia comprehension and interaction.