An Analytical Review of "TextBoxes: A Fast Text Detector with a Single Deep Neural Network"

Scene text detection and recognition are important in many computer vision applications, such as geolocation, autonomous driving, and image-based data extraction. In "TextBoxes: A Fast Text Detector with a Single Deep Neural Network", Minghui Liao, Baoguang Shi, Xiang Bai, Xinggang Wang, and Wenyu Liu introduce a text detector built on a single fully convolutional network. The paper reports improvements in both detection speed and accuracy over previous methods.

Contributions and Architecture

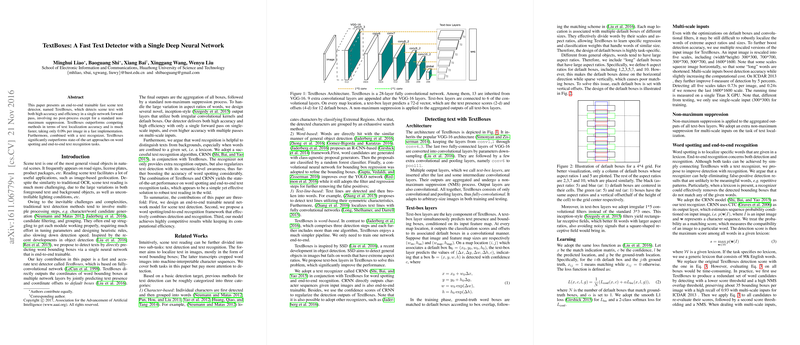

The primary contribution of this paper is the proposal and empirical validation of the TextBoxes architecture, a 28-layer fully convolutional network (FCN). A large part of the network is inherited from VGG-16, specifically the layers from conv1_1 to conv4_3. VGG-16's last two fully connected layers are converted into convolutional layers, and additional convolutional and pooling layers (conv6 to pool11) are appended. Because every layer is convolutional, the whole detector can be trained end to end and applied to input images of arbitrary size.

The architecture's core innovation is the text-box layer. Text-box layers are attached to several intermediate feature maps and, at every map location, predict both a text/non-text score and coordinate offsets for a set of default boxes. To match the elongated shape of words, they use irregular 1×5 convolutional kernels instead of the standard 3×3 kernels, giving receptive fields that are wider than they are tall and therefore better suited to long words. The network thus outputs word bounding-box coordinates directly; the outputs of all text-box layers are aggregated and filtered by standard non-maximum suppression (NMS).
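To make the text-box layer's output concrete, here is a minimal sketch of how one feature-map location's predictions could be turned into scored boxes. It assumes the SSD-style box parameterization that TextBoxes builds on (center offsets scaled by the default box size, log-space width and height offsets) and 12 default boxes per location (six aspect ratios, each with and without a vertical offset), which is our reading of the paper. The flat channel layout and the helper names `decode`, `softmax2`, and `decode_location` are hypothetical illustrations, not the authors' code.

```python
import math
from typing import List, Tuple

# Hypothetical layout: for each of the 12 default boxes at one feature-map
# location, the layer emits 2 class scores (non-text, text) followed by
# 4 offsets, i.e. 12 * (2 + 4) = 72 values per location.
NUM_DEFAULT_BOXES = 12
CHANNELS_PER_BOX = 2 + 4

Box = Tuple[float, float, float, float]  # (cx, cy, w, h)


def decode(default: Box, offsets: Tuple[float, float, float, float]) -> Box:
    """Apply SSD-style regression offsets to a default box in center form."""
    x0, y0, w0, h0 = default
    dx, dy, dw, dh = offsets
    return (x0 + w0 * dx, y0 + h0 * dy, w0 * math.exp(dw), h0 * math.exp(dh))


def softmax2(non_text: float, text: float) -> float:
    """Probability of the "text" class from two raw scores (stable softmax)."""
    m = max(non_text, text)
    e_non, e_text = math.exp(non_text - m), math.exp(text - m)
    return e_text / (e_non + e_text)


def decode_location(defaults: List[Box], outputs: List[float],
                    threshold: float = 0.5) -> List[Tuple[float, Box]]:
    """Turn one location's flat output vector into (score, box) detections."""
    assert len(defaults) == NUM_DEFAULT_BOXES
    assert len(outputs) == NUM_DEFAULT_BOXES * CHANNELS_PER_BOX
    detections = []
    for i, default in enumerate(defaults):
        chunk = outputs[i * CHANNELS_PER_BOX:(i + 1) * CHANNELS_PER_BOX]
        score = softmax2(chunk[0], chunk[1])
        if score >= threshold:  # keep only boxes likely to contain text
            dx, dy, dw, dh = chunk[2:]
            detections.append((score, decode(default, (dx, dy, dw, dh))))
    return detections
```

The detections from every location and every text-box layer would then be pooled and passed to NMS.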

Technical Details

TextBoxes detects words directly, rather than detecting characters or whole text lines and then grouping or splitting them. This keeps the model simple: detection takes a single network pass followed by NMS, with no multi-stage post-processing, which is a major source of its speed. Because words tend to be much wider than they are tall, the default boxes use a range of aspect ratios (1, 2, 3, 5, 7, and 10). Boxes with large aspect ratios are densely packed horizontally but sparse vertically, so each default box is also duplicated with a vertical offset to cover text that would otherwise fall between rows of boxes.
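The default-box layout described above can be sketched as follows for a single feature-map cell. The aspect ratios come from the paper; the SSD-style sizing rule (width `s·√a`, height `s/√a`, so every box has area `s²`) and the half-cell size of the vertical shift are assumptions made for illustration, and `default_boxes_for_cell` is a hypothetical helper.

```python
import math
from typing import List, Tuple

ASPECT_RATIOS = (1, 2, 3, 5, 7, 10)  # aspect ratios listed in the paper


def default_boxes_for_cell(col: int, row: int, map_size: int, scale: float,
                           vertical_offset: float = 0.5
                           ) -> List[Tuple[float, float, float, float]]:
    """Default boxes (cx, cy, w, h), normalized to [0, 1], for one cell.

    Assumptions: SSD-style sizing (w = s*sqrt(a), h = s/sqrt(a)) and a
    hypothetical vertical shift of half a cell for the offset copies.
    """
    cell = 1.0 / map_size
    cx = (col + 0.5) * cell  # cell center, normalized coordinates
    cy = (row + 0.5) * cell
    boxes = []
    for a in ASPECT_RATIOS:
        w = scale * math.sqrt(a)
        h = scale / math.sqrt(a)
        boxes.append((cx, cy, w, h))                           # centered in the cell
        boxes.append((cx, cy + vertical_offset * cell, w, h))  # vertically shifted copy
    return boxes
```

With six aspect ratios and one shifted copy each, every cell gets 12 default boxes; the shifted copies fill the vertical gaps between rows that wide, short boxes would otherwise leave.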

To further improve detection accuracy, the authors run the detector on multiple rescaled copies of each input image (ranging from 300×300 to 1600×1600) and merge the detections from all scales with a single non-maximum suppression step. Multi-scale inference improves detection performance, but it is considerably more expensive than a single pass, since the network must be run once per scale.
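The multi-scale merging step can be sketched as: run the detector at each input size, map its boxes back to the original image resolution, pool everything, and apply greedy NMS once. This is a minimal illustration, not the authors' implementation: `run_detector` is a hypothetical stand-in for "resize the image and run the network", and the IoU threshold of 0.45 is an assumed value borrowed from common SSD settings.

```python
from typing import Callable, List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels
Detection = Tuple[float, Box]            # (score, box)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(dets: List[Detection], iou_threshold: float = 0.45) -> List[Detection]:
    """Greedy NMS: keep the best-scoring box, drop boxes that overlap a kept one."""
    kept: List[Detection] = []
    for score, box in sorted(dets, key=lambda d: d[0], reverse=True):
        if all(iou(box, k[1]) <= iou_threshold for k in kept):
            kept.append((score, box))
    return kept


def multiscale_detect(image_size: Tuple[int, int],
                      scales: Sequence[Tuple[int, int]],
                      run_detector: Callable[[Tuple[int, int]], List[Detection]],
                      iou_threshold: float = 0.45) -> List[Detection]:
    """Detect at several input sizes, rescale boxes to the original image, then NMS."""
    img_w, img_h = image_size
    pooled: List[Detection] = []
    for in_w, in_h in scales:
        sx, sy = img_w / in_w, img_h / in_h  # factors back to original resolution
        for score, (x1, y1, x2, y2) in run_detector((in_w, in_h)):
            pooled.append((score, (x1 * sx, y1 * sy, x2 * sx, y2 * sy)))
    return nms(pooled, iou_threshold)
```

If the same word is found at two scales, both detections map to nearly the same box in the original image and NMS keeps only the higher-scoring one. Running NMS once over the pooled set, rather than per scale, is what lets the scales complement each other.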

Numerical Results

TextBoxes performs strongly on several standard benchmarks. For text localization, it achieves an F-measure of 0.85 on the ICDAR 2011 and ICDAR 2013 datasets, outperforming methods such as FCRNall+filts and Deep2Text. It is also fast: single-scale inference takes about 0.09 seconds per image, or roughly 11 images per second, which makes it a practical candidate for real-time applications.
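For reference, the F-measure is the harmonic mean of precision and recall, and the throughput figure follows directly from the per-image time. The sketch below shows only this final arithmetic; the benchmark protocols decide how detections are matched to ground truth before precision and recall are counted, which is not modeled here. The precision and recall values in the usage line are illustrative, not results from the paper.

```python
def f_measure(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (F1 score)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def images_per_second(seconds_per_image: float) -> float:
    """Throughput implied by a per-image inference time."""
    return 1.0 / seconds_per_image


# Illustrative values only: P = 0.9, R = 0.8 gives F of about 0.847.
print(round(f_measure(0.9, 0.8), 3), round(images_per_second(0.09), 1))
```

Because the harmonic mean is pulled toward the smaller of the two values, a high F-measure requires both precision and recall to be high.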

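Combining TextBoxes with the CRNN text recognizer yields state-of-the-art results in word spotting and end-to-end text recognition. On ICDAR 2013, for instance, the combined system achieves F-measures of 0.87, 0.92, and 0.85 under the strong, weak, and generic lexicon settings, respectively. In these settings the recognized words are constrained by a per-image list of 100 words (strong), all words in the test set (weak), or a generic dictionary of about 90k words (generic), so the results indicate that performance remains robust as the lexicon grows.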
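To illustrate what "recognition with a lexicon" means, the sketch below snaps a raw recognition string to the closest lexicon word by Levenshtein edit distance. This is a deliberately simplified substitute: CRNN's own lexicon-based decoding selects candidates by sequence probability rather than by plain nearest-neighbor matching, and `snap_to_lexicon` is a hypothetical helper. The sketch does show why a smaller lexicon helps: fewer candidate words means fewer near-misses to confuse with the correct one.

```python
from typing import Sequence


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance via dynamic programming, keeping two rows."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        cur = [i]
        for j, cb in enumerate(b, start=1):
            cur.append(min(prev[j] + 1,                # delete ca
                           cur[j - 1] + 1,             # insert cb
                           prev[j - 1] + (ca != cb)))  # substitute (free if equal)
        prev = cur
    return prev[-1]


def snap_to_lexicon(prediction: str, lexicon: Sequence[str]) -> str:
    """Return the lexicon word closest to the raw prediction (case-insensitive).

    Ties are broken by lexicon order, because min() keeps the first minimum.
    """
    pred = prediction.lower()
    return min(lexicon, key=lambda word: edit_distance(pred, word.lower()))


print(snap_to_lexicon("H0TEL", ["hotel", "motel", "hostel"]))  # hotel
```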

Implications

In practice, TextBoxes is well suited to settings where fast and reliable text detection is essential, such as real-time image annotation, automatic license plate recognition, and text extraction from natural scenes for augmented reality applications. More broadly, this work shows the potential of fully convolutional architectures augmented with specialized layers for addressing domain-specific challenges.

Future Directions

While TextBoxes sets a new benchmark in text detection, several areas warrant further exploration. Its default boxes are axis-aligned rectangles, so future work should extend the model to multi-oriented text and integrate the recognition component more tightly into the detection framework. Evaluating its robustness on more diverse and challenging datasets would also clarify its operational limits.

Conclusion

The TextBoxes paper is a significant step forward in scene text detection, improving accuracy while remaining fast. Its architectural and methodological contributions open the way to broader applications and to future research on more complex and dynamic text detection problems. Through thorough empirical validation, the authors convincingly demonstrate the merits and potential of their approach.