Multi-Oriented Text Detection with Fully Convolutional Networks: An Expert Overview

The paper "Multi-Oriented Text Detection with Fully Convolutional Networks" presents a method for detecting text in natural images, with a focus on text that varies in orientation, language, and font. It introduces a multi-stage pipeline that combines local character-level cues with global region-level context to improve detection accuracy.

Methodology

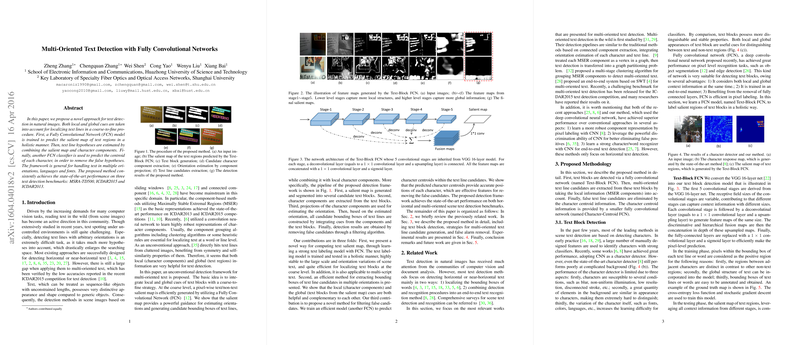

The proposed framework leverages Fully Convolutional Networks (FCNs) to generate text salient maps and process text line candidates efficiently. The approach is divided into several key stages:

- Text Salient Map Generation: The method starts by training an FCN to predict salient maps of text regions. This network processes images holistically, capturing both local and global text features to generate pixel-wise text/non-text predictions efficiently.

- Text Line Hypothesis Generation: Text line hypotheses are generated by combining the text blocks indicated by the salient map with character components extracted using MSER (Maximally Stable Extremal Regions). This step explicitly accounts for arbitrary orientations, which many traditional methods neglect and which restricts them to mostly horizontal text.

- Orientation Estimation and Candidate Extraction: A projection-based method estimates the text orientation from the character components within each detected text block, so that the extracted candidates are aligned with the actual text direction.

- False Hypotheses Filtering: An additional FCN is trained to predict character centroids, refining the text line candidates by removing false detections. This step ensures that the text detection is both accurate and applicable to texts with diverse orientations and scales.
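
The projection-based orientation step can be illustrated with a small sketch. The version below is a simplification under stated assumptions, not the paper's implementation: it represents each character component by its center point, sweeps a fixed set of candidate angles, and for each angle counts how many centers fall inside a narrow band around a single line. The function name `estimate_orientation` and the parameters `num_angles` and `band` are hypothetical.

```python
import math


def estimate_orientation(centers, num_angles=36, band=4.0):
    """Return (angle, support) for the line direction supported by the most centers.

    centers: list of (x, y) character-component centers in one text block.
    angle is in radians within [-pi/2, pi/2), measured in image coordinates;
    support is the number of centers inside a band of width `band`
    around a single line with that direction.
    """
    best_angle, best_support = 0.0, 0
    for k in range(num_angles):
        theta = -math.pi / 2 + k * math.pi / num_angles
        # Unit normal to a line whose direction is theta.
        nx, ny = -math.sin(theta), math.cos(theta)
        # Centers on the same line share (almost) the same offset along the normal.
        offsets = sorted(x * nx + y * ny for x, y in centers)
        lo = 0
        for hi in range(len(offsets)):
            # Shrink the window until it is no wider than the band.
            while offsets[hi] - offsets[lo] > band:
                lo += 1
            if hi - lo + 1 > best_support:
                best_angle, best_support = theta, hi - lo + 1
    return best_angle, best_support


# Ten synthetic centers along a line tilted by 30 degrees.
centers = [
    (5 + t * math.cos(math.pi / 6), 20 + t * math.sin(math.pi / 6))
    for t in range(0, 100, 10)
]
angle, support = estimate_orientation(centers)
print(round(math.degrees(angle)), support)  # 30 10
```

The sliding window over sorted offsets avoids the bin-boundary artifacts of a fixed histogram. A real system would likely project component pixels rather than centers and use a finer angular step.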

Experimental Results

The method is evaluated on three benchmark datasets: MSRA-TD500, ICDAR2015, and ICDAR2013. The reported results show higher precision and recall than the approaches it is compared against, indicating that the framework handles both horizontal and multi-oriented text.

- MSRA-TD500: The method achieves a precision of 0.83 and a recall of 0.67, handling varied orientations robustly and improving on previous methods.

- ICDAR Datasets: The framework also performs well on the ICDAR2013 and ICDAR2015 benchmarks, maintaining high accuracy even in the challenging incidental scene text setting.
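
For reference, precision and recall are commonly summarized by their harmonic mean, the F-measure. The helper below (a hypothetical name, `f_measure`) applies the standard formula to the MSRA-TD500 figures quoted above. It illustrates the arithmetic only and does not reproduce any benchmark's box-matching protocol.

```python
def f_measure(precision, recall):
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# MSRA-TD500 figures from the summary above.
print(round(f_measure(0.83, 0.67), 2))  # 0.74
```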

Contributions and Implications

This research contributes a holistic framework that combines local character components with global region-level predictions for improved text detection. Using FCNs for both semantic region labeling and character centroid prediction yields a more robust architecture that copes with the diverse orientations and complexity of natural scenes.
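
To make the centroid-based filtering idea concrete, here is a minimal sketch. It assumes the second FCN outputs a 2D probability map of character centroids, and it simplifies each candidate to an axis-aligned box (the paper's candidates are oriented). The names `centroid_peaks` and `keep_candidate` and the thresholds `min_prob`, `min_chars`, and `min_mean` are hypothetical choices for illustration, not values from the paper.

```python
def centroid_peaks(prob_map, box, min_prob=0.5):
    """Return (x, y, p) for strict local maxima of prob_map inside box.

    prob_map: 2D list of centroid probabilities, indexed [row][column].
    box: (x0, y0, x1, y1), inclusive pixel bounds of a candidate.
    """
    x0, y0, x1, y1 = box
    h, w = len(prob_map), len(prob_map[0])
    peaks = []
    for y in range(max(y0, 0), min(y1, h - 1) + 1):
        for x in range(max(x0, 0), min(x1, w - 1) + 1):
            p = prob_map[y][x]
            if p < min_prob:
                continue
            # Compare against the 8-neighbourhood, clipped to the map.
            neighbours = [
                prob_map[ny][nx]
                for ny in range(max(y - 1, 0), min(y + 1, h - 1) + 1)
                for nx in range(max(x - 1, 0), min(x + 1, w - 1) + 1)
                if (ny, nx) != (y, x)
            ]
            if all(p > q for q in neighbours):
                peaks.append((x, y, p))
    return peaks


def keep_candidate(prob_map, box, min_chars=2, min_mean=0.6):
    """Keep a candidate if it holds enough confident centroid peaks."""
    peaks = centroid_peaks(prob_map, box)
    if len(peaks) < min_chars:
        return False
    mean_p = sum(p for _, _, p in peaks) / len(peaks)
    return mean_p >= min_mean


# Toy map: three confident centroids on one row.
toy = [[0.0] * 9 for _ in range(5)]
for col in (1, 4, 7):
    toy[2][col] = 0.9
print(keep_candidate(toy, (0, 0, 8, 4)))  # True
print(keep_candidate(toy, (0, 0, 2, 4)))  # False
```

A fuller version would also check that the detected centroids line up with the estimated text orientation, which this sketch leaves out.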

Furthermore, separating text block detection from text line candidate generation, which relies on MSER and orientation estimation, marks a methodological advance in scene text detection and extends the method to real-world settings where text appears in arbitrary orientations.
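
The text block detection stage can be pictured as thresholding the salient map and grouping connected pixels into blocks. The sketch below assumes exactly that, with a hypothetical function name `text_blocks`, a hypothetical `threshold` of 0.5, and 4-connectivity. The paper's actual block extraction may differ.

```python
from collections import deque


def text_blocks(salient_map, threshold=0.5):
    """Return bounding boxes (x0, y0, x1, y1) of 4-connected regions
    whose salient-map value is at least `threshold`.

    salient_map: 2D list of text probabilities, indexed [row][column].
    """
    h, w = len(salient_map), len(salient_map[0])
    seen = [[False] * w for _ in range(h)]
    boxes = []
    for sy in range(h):
        for sx in range(w):
            if seen[sy][sx] or salient_map[sy][sx] < threshold:
                continue
            # Breadth-first flood fill from an unvisited text pixel.
            queue = deque([(sx, sy)])
            seen[sy][sx] = True
            x0 = x1 = sx
            y0 = y1 = sy
            while queue:
                x, y = queue.popleft()
                x0, x1 = min(x0, x), max(x1, x)
                y0, y1 = min(y0, y), max(y1, y)
                for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                    if (0 <= nx < w and 0 <= ny < h and not seen[ny][nx]
                            and salient_map[ny][nx] >= threshold):
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            boxes.append((x0, y0, x1, y1))
    return boxes


# Toy salient map with two separate text regions.
toy = [
    [0.9, 0.8, 0.0, 0.0, 0.0, 0.0],
    [0.7, 0.9, 0.0, 0.0, 0.6, 0.7],
    [0.0, 0.0, 0.0, 0.0, 0.8, 0.9],
]
print(text_blocks(toy))  # [(0, 0, 1, 1), (4, 1, 5, 2)]
```

Character components and orientation estimation would then operate inside each returned block.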

Limitations and Future Directions

Although the method performs strongly, challenges remain in detecting highly curved text and in reaching real-time processing speeds. Scenes with heavy clutter or reflections also leave room for improvement. Future work could integrate more sophisticated learned techniques for component extraction and explore end-to-end architectures that combine text detection with recognition.

Overall, this approach presents a valuable contribution to text detection methodologies, paving the way for more comprehensive and adaptable scene text recognition systems.