SoundNet: Learning Sound Representations from Unlabeled Video

The paper "SoundNet: Learning Sound Representations from Unlabeled Video" by Aytar, Vondrick, and Torralba tackles the problem of learning robust acoustic representations without labeled audio. Its key idea is to exploit the natural synchronization between vision and sound in unlabeled video: the authors use established visual recognition models to transfer discriminative knowledge into a deep audio network, with the goal of improving natural sound recognition.

Methodological Approach

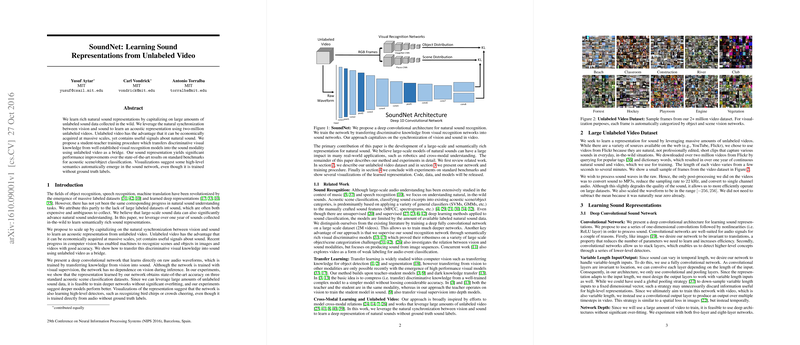

The primary contribution of this work is SoundNet, a deep convolutional network that operates directly on raw audio waveforms and learns sound representations through visual transfer learning. The approach follows a student-teacher scheme: established visual networks act as teachers and the sound network is the student. Because sight and sound are synchronized in unlabeled video, the teachers' predictions on a video's frames can serve as training targets for the student, which only hears the accompanying audio.
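A key property of a network built from convolutions and pooling over raw samples is that it has no fixed input size: the length of its output simply scales with the length of the clip. The minimal sketch below illustrates this in plain Python. The filter counts, widths, strides, and function names (`conv_layer`, `toy_waveform_net`, and so on) are hypothetical toy choices, not SoundNet's actual configuration, and the filters are random rather than trained.

```python
import math
import random


def conv1d(signal, kernel, stride=1, padding=0):
    """1D cross-correlation (what CNN libraries call convolution) with zero padding and stride."""
    padded = [0.0] * padding + list(signal) + [0.0] * padding
    width = len(kernel)
    return [
        sum(w * x for w, x in zip(kernel, padded[start:start + width]))
        for start in range(0, len(padded) - width + 1, stride)
    ]


def max_pool1d(signal, size, stride):
    """Keep the maximum of each window of `size` samples, moving `stride` samples at a time."""
    return [max(signal[i:i + size]) for i in range(0, len(signal) - size + 1, stride)]


def conv_layer(channels, filters, stride, padding):
    """Multi-channel 1D convolution followed by ReLU.

    channels: C input signals of equal length.
    filters:  F filters, each holding one kernel per input channel.
    Returns F output signals.
    """
    outputs = []
    for per_channel_kernels in filters:
        responses = [conv1d(ch, k, stride, padding) for ch, k in zip(channels, per_channel_kernels)]
        summed = [sum(vals) for vals in zip(*responses)]  # add contributions of all input channels
        outputs.append([max(0.0, v) for v in summed])     # ReLU
    return outputs


def random_filters(rng, n_out, n_in, width):
    """Hypothetical untrained filters with weights drawn uniformly from [-1, 1]."""
    return [[[rng.uniform(-1.0, 1.0) for _ in range(width)] for _ in range(n_in)] for _ in range(n_out)]


def toy_waveform_net(waveform, seed=0):
    """Hypothetical two-layer network on a raw waveform (toy sizes, not SoundNet's)."""
    rng = random.Random(seed)
    x = [list(waveform)]  # a single input channel: the raw samples
    x = conv_layer(x, random_filters(rng, 4, 1, 8), stride=2, padding=4)
    x = [max_pool1d(ch, size=4, stride=4) for ch in x]
    x = conv_layer(x, random_filters(rng, 8, 4, 4), stride=2, padding=2)
    return x  # 8 channels; the length of the time axis depends on the input length


short_clip = [math.sin(0.05 * t) for t in range(1000)]
long_clip = [math.sin(0.05 * t) for t in range(2000)]
for clip in (short_clip, long_clip):
    features = toy_waveform_net(clip)
    print(len(clip), "samples ->", len(features), "channels x", len(features[0]), "time steps")
# prints:
# 1000 samples -> 8 channels x 63 time steps
# 2000 samples -> 8 channels x 126 time steps
```

Doubling the clip length roughly doubles the number of output time steps while the channel count stays fixed, which is why such a network can accept variable-length audio without cropping or resizing.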

Key Aspects of the Network:

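- Deep Convolutional Architecture: SoundNet is built from one-dimensional convolutions, and the authors experiment with five-layer and eight-layer variants. Because the network is fully convolutional, it accepts audio of variable length and exploits the translation invariance of sounds in time.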

- Student-Teacher Training: The network learns to recognize acoustic scenes and objects by minimizing the KL divergence between the output distributions of the visual recognition networks (computed on video frames) and those of the sound network (computed on the synchronized audio).

- Unlabeled Video Data: Training uses over two million unlabeled videos from Flickr, relying on the temporal alignment of sight and sound within each video rather than on human annotation.
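The student-teacher objective can be sketched in a few lines. As we read the paper, the loss sums KL(teacher || student) over the visual teachers, with the sound network producing one output distribution per teacher. The teacher names, the tiny class lists, and the function names below are hypothetical; a real implementation would compute this loss inside a deep-learning framework so that gradients flow back into the sound network.

```python
import math


def softmax(logits):
    """Convert raw scores to a probability distribution (shifted by the max for stability)."""
    top = max(logits)
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) = sum_i p_i * log(p_i / q_i); categories with p_i == 0 contribute nothing."""
    return sum(pi * math.log(pi / max(qi, eps)) for pi, qi in zip(p, q) if pi > 0)


def student_teacher_loss(teacher_probs, student_logits):
    """Sum over teachers of KL(teacher distribution || student distribution).

    teacher_probs:  {teacher name: class probabilities predicted from the video frame}
    student_logits: {teacher name: raw scores from the sound network's output for that teacher}
    """
    return sum(
        kl_divergence(probs, softmax(student_logits[name]))
        for name, probs in teacher_probs.items()
    )


# Toy example with two hypothetical teachers and tiny label sets.
teachers = {"objects": [0.7, 0.2, 0.1], "scenes": [0.9, 0.1]}
matching_student = {name: [math.log(p) for p in probs] for name, probs in teachers.items()}
uninformed_student = {"objects": [0.0, 0.0, 0.0], "scenes": [0.0, 0.0]}

print(student_teacher_loss(teachers, matching_student))    # ~0: student reproduces both teachers
print(student_teacher_loss(teachers, uninformed_student))  # > 0: uniform guesses are penalized
```

Summing one KL term per teacher is what lets several visual networks supervise the same audio clip at once; each teacher only constrains the student output that corresponds to its own label set.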

Experimental Evaluation

Datasets and Baselines:

The authors evaluate SoundNet on three standard benchmarks: DCASE, ESC-50, and ESC-10. DCASE targets acoustic scene classification, while ESC-50 and its ten-class subset ESC-10 cover environmental sound categories such as animal sounds, human non-speech sounds, and urban noises. Results are compared against prior state-of-the-art methods, including baselines that pair hand-crafted features such as MFCCs with conventional classifiers such as SVMs.
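On these benchmarks, SoundNet is used as a fixed feature extractor: as we understand the evaluation protocol, hidden-layer activations are fed to a linear SVM trained on each benchmark's labels. The sketch below mirrors the shape of that pipeline with labeled substitutions: the feature maps are toy, hypothetical values; averaging over time is an assumed way to obtain fixed-length vectors from variable-length clips; and a nearest-centroid classifier stands in for the linear SVM so the example needs no third-party libraries.

```python
def time_average(feature_map):
    """Average a (channels x time) feature map over time, giving one value per channel.

    Clips of different durations therefore map to vectors of the same length.
    """
    return [sum(channel) / len(channel) for channel in feature_map]


def fit_centroids(vectors, labels):
    """Nearest-centroid 'training': the mean feature vector of each class."""
    sums, counts = {}, {}
    for vec, label in zip(vectors, labels):
        acc = sums.setdefault(label, [0.0] * len(vec))
        for i, value in enumerate(vec):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}


def predict(centroids, vec):
    """Return the label whose centroid is closest in squared Euclidean distance."""
    return min(centroids, key=lambda label: sum((c - v) ** 2 for c, v in zip(centroids[label], vec)))


def accuracy(predicted, actual):
    """Fraction of predictions that match the true labels."""
    return sum(p == a for p, a in zip(predicted, actual)) / len(actual)


# Hypothetical 2-channel feature maps of varying length, standing in for hidden-layer activations.
train_set = [
    ([[1.0, 1.0, 1.0], [0.0, 0.0, 0.0]], "rain"),
    ([[1.0, 1.0], [0.0, 0.2]], "rain"),
    ([[0.0, 0.0, 0.0, 0.0], [1.0, 1.0, 1.0, 1.0]], "dog"),
    ([[0.1, 0.0], [1.0, 1.0]], "dog"),
]
test_set = [
    ([[0.9, 0.8], [0.1, 0.0]], "rain"),
    ([[0.0, 0.0, 0.2], [0.9, 0.9, 0.9]], "dog"),
]

centroids = fit_centroids([time_average(f) for f, _ in train_set], [y for _, y in train_set])
predictions = [predict(centroids, time_average(f)) for f, _ in test_set]
print(predictions, accuracy(predictions, [y for _, y in test_set]))  # ['rain', 'dog'] 1.0
```

The design point is that the learned network stays frozen and only a shallow classifier is fit per benchmark, so accuracy reflects the quality of the transferred representation rather than extra task-specific training.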

Results:

- DCASE: SoundNet achieves 88% classification accuracy, outperforming the best baseline by roughly 10 percentage points.

- ESC-50/ESC-10: SoundNet reaches 74.2% accuracy on ESC-50 and 92.2% on ESC-10, approaching, though still below, the human accuracy reported for these datasets.
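The DCASE margin is easier to appreciate as a change in error rate. Taking the roughly 10-point margin at face value places the best baseline near 78%, an approximation derived from the figures above rather than a number quoted from the paper:

$$
e_{\text{baseline}} \approx 1 - 0.78 = 0.22, \qquad e_{\text{SoundNet}} = 1 - 0.88 = 0.12,
$$

$$
\frac{e_{\text{baseline}} - e_{\text{SoundNet}}}{e_{\text{baseline}}} \approx \frac{0.22 - 0.12}{0.22} \approx 0.45
$$

Under this approximation, SoundNet removes nearly half of the best baseline's classification errors.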

Analysis and Implications

SoundNet delivers substantial improvements over prior state-of-the-art methods in natural sound classification. The results underscore the value of large-scale unlabeled data for learning stronger sound representations. Several aspects of the approach are especially informative:

- Deep Networks: The eight-layer model yields better representations than the five-layer model, supporting the view that greater depth improves feature extraction in the sound domain.

- Visual Supervision: The transfer of semantic knowledge from visual models proves effective, with combined supervision from ImageNet and Places networks yielding the highest performance.

- Unlabeled Data Utilization: The large-scale unlabeled video dataset is pivotal; training solely on labeled data led to significant overfitting, particularly for deeper architectures.

Future Directions

This work opens several avenues for future research and practical applications:

- Multi-Modal Learning: Future studies could explore integrating additional sensory modalities beyond vision and sound, such as tactile or olfactory data, to create more comprehensive multi-modal networks.

- Real-World Applications: Robust sound recognition could benefit autonomous systems, auditory perception in robotics, and user experiences in multimedia and augmented reality applications.

- Scalability and Richer Models: Because the sound network learns from the visual teachers' predictions, larger datasets and stronger visual models could yield richer and more semantically meaningful sound representations.

In conclusion, SoundNet is an effective methodology for sound representation learning and sets a compelling precedent for combining unlabeled data with cross-modal transfer learning. Its findings mark a significant step toward the automatic semantic understanding of natural sounds, with implications for both the theory and practice of machine learning.