A Diversity-Promoting Objective Function for Neural Conversation Models

The paper "A Diversity-Promoting Objective Function for Neural Conversation Models" by Jiwei Li et al. addresses a prevalent issue in sequence-to-sequence (Seq2Seq) neural network models for conversational response generation: the propensity of these models to produce overly safe, generic responses regardless of the input. The authors argue that the traditional maximum likelihood estimation (MLE) objective function is suboptimal for this task and introduce Maximum Mutual Information (MMI) as an alternative objective function.

Motivations and Contributions

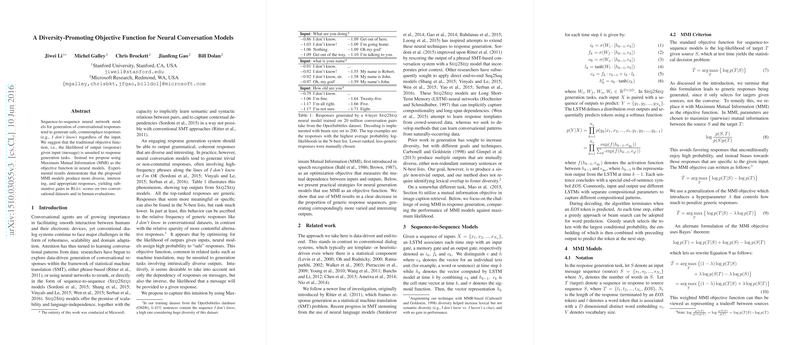

Conventional Seq2Seq models optimize the likelihood of generating a response given an input, which often leads to high-probability but dull responses such as "I don't know" or "I'm not sure." The premise of this paper is that these models fail to account for the diversity and specificity required in meaningful conversational interactions. To address this, the authors suggest the use of MMI, which considers both the dependency of responses on inputs and the inverse dependency of inputs on responses, thus favoring responses that are informative and diverse.
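The role of the "inverse" dependency can be made concrete with a short derivation. This is a sketch based on the standard definition of pointwise mutual information and Bayes' rule, not a quotation from the paper. The symbols are notation chosen here: $S$ is the source message, $T$ a candidate response, and $\lambda$ a weight on the penalty term.

$$
\begin{aligned}
\hat{T} &= \arg\max_T \log \frac{p(S, T)}{p(S)\,p(T)} = \arg\max_T \{\log p(T|S) - \log p(T)\} \\
\hat{T}_\lambda &= \arg\max_T \{\log p(T|S) - \lambda \log p(T)\} \\
&= \arg\max_T \{(1-\lambda)\log p(T|S) + \lambda \log p(S|T) - \lambda \log p(S)\} \\
&= \arg\max_T \{(1-\lambda)\log p(T|S) + \lambda \log p(S|T)\}
\end{aligned}
$$

The third line substitutes $\log p(T) = \log p(T|S) + \log p(S) - \log p(S|T)$, and the last line drops $\lambda \log p(S)$ because it does not depend on $T$. The second line penalizes responses that are likely regardless of the input, while the last line rewards responses from which the input can be predicted.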

Methodology

The paper outlines two variations of the MMI objective:

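- MMI-antiLM: This formulation penalizes frequent, safe responses by incorporating an anti-language-model (anti-LM) term into the optimization criterion, $\log p(T|S) - \lambda \log p(T)$, where $S$ is the source message, $T$ is the candidate response, and $\lambda$ controls the strength of the penalty. However, because the language-model term $\log p(T)$ also captures fluency, penalizing it can sometimes lead to ungrammatical outputs. To mitigate this, the authors adjust the weight of the anti-LM term dynamically during decoding.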

- MMI-bidi: This version reranks N-best lists generated by a standard Seq2Seq model using a combined score of target given source, $\log p(T|S)$, and source given target, $\log p(S|T)$. Because candidates are first generated by the forward Seq2Seq model and only then reranked by their mutual compatibility with the input, only well-formed responses are considered.
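The two scoring rules above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the function names, the default `lam` value, and the assumption that log-probabilities have already been computed by some forward model, backward model, and language model are all hypothetical. The sketch uses the weighted form `(1 - lam) * log p(T|S) + lam * log p(S|T)` for reranking and omits any length term or decoding-time adjustment.

```python
from typing import List, Tuple


def mmi_antilm_score(log_p_t_given_s: float, log_p_t: float, lam: float = 0.5) -> float:
    """Anti-LM score: log p(T|S) - lam * log p(T).

    A response that is likely under the language model alone (generic)
    has a high log p(T) and is therefore penalized.
    """
    return log_p_t_given_s - lam * log_p_t


def mmi_bidi_score(log_p_t_given_s: float, log_p_s_given_t: float, lam: float = 0.5) -> float:
    """Bidirectional score: (1 - lam) * log p(T|S) + lam * log p(S|T)."""
    return (1.0 - lam) * log_p_t_given_s + lam * log_p_s_given_t


def rerank_nbest(
    candidates: List[Tuple[str, float, float]], lam: float = 0.5
) -> List[Tuple[str, float]]:
    """Rerank an N-best list by the bidirectional score.

    Each candidate is (text, log p(T|S), log p(S|T)). The log-probabilities
    are assumed to come from separately trained forward and backward models.
    Returns (text, score) pairs sorted from best to worst.
    """
    scored = [(text, mmi_bidi_score(fwd, bwd, lam)) for text, fwd, bwd in candidates]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Made-up log-probabilities for illustration only.
example_candidates = [
    ("i don't know", -2.0, -9.0),             # likely, but says little about the input
    ("i am heading to the gym", -3.0, -4.0),  # less likely, but predicts the input well
]
```

With `lam=0.0` the ranking reduces to the forward likelihood alone and the generic candidate wins; with `lam=0.5` the backward term moves the more specific candidate to the top of the list.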

Experimental Setup

The proposed methods are evaluated on two datasets: the Twitter Conversation Triple Dataset and the OpenSubtitles dataset. Various metrics, including BLEU scores and measures of lexical diversity (distinct-1 and distinct-2), are used to assess performance. Human evaluations are also conducted to provide qualitative insights.
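The lexical diversity measures can be illustrated with a short sketch. It assumes that distinct-n is the number of distinct n-grams across all generated responses divided by the total number of generated tokens, and it uses lowercased whitespace tokenization. The function name `distinct_n` and the tokenization are choices made here for illustration; some implementations divide by the total number of n-grams instead.

```python
from typing import Iterable, Set, Tuple


def distinct_n(responses: Iterable[str], n: int) -> float:
    """Distinct n-grams over all responses, divided by total generated tokens.

    Tokenization is lowercased whitespace splitting (an assumption).
    Returns 0.0 when there are no tokens.
    """
    seen: Set[Tuple[str, ...]] = set()
    total_tokens = 0
    for response in responses:
        tokens = response.lower().split()
        total_tokens += len(tokens)
        # Collect every contiguous n-gram in this response.
        for i in range(len(tokens) - n + 1):
            seen.add(tuple(tokens[i:i + n]))
    return len(seen) / total_tokens if total_tokens else 0.0


# Toy system output: two identical generic replies and one specific reply.
sample_responses = ["i don't know", "i don't know", "see you at noon"]
distinct_1 = distinct_n(sample_responses, 1)
distinct_2 = distinct_n(sample_responses, 2)
```

Repeated generic replies add tokens to the denominator without adding new n-grams, so a system that keeps answering "i don't know" scores low on both measures.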

Results

The results demonstrate substantial improvements in the diversity and specificity of the generated responses:

- Twitter Dataset: The MMI-bidi model achieves a BLEU score of 5.22, outperforming the baseline Seq2Seq model as well as existing methods such as SMT and SMT+neural reranking, both of which use a much larger training dataset.

- OpenSubtitles Dataset: The MMI-antiLM model shows a significant increase in BLEU scores (1.74 compared to the baseline 1.28) and a dramatic improvement in lexical diversity, highlighting its ability to generate more interesting and varied responses.

Implications and Future Work

The paper's findings imply that optimizing for mutual information between inputs and responses can effectively mitigate the issue of generic outputs in conversational AI. This approach can enhance user experience by producing more engaging and relevant interactions.

The theoretical implications extend beyond conversational modeling to any task requiring the generation of diverse outputs. For example, image description, question answering, and other domains involving Seq2Seq models could benefit from the insights provided.

Future work could explore integrating more contextual and user-specific information into the MMI models to further enhance the relevance and personalization of responses. Additionally, extending the MMI objective to multi-turn conversations and other interactive AI tasks would be a valuable direction for research.

In conclusion, this paper provides a robust solution to a critical challenge in conversational AI, offering practical and theoretical contributions that can influence a variety of neural generation tasks. The MMI-based objectives represent a significant step forward in creating conversational agents capable of maintaining engaging and informative dialogues.