A Content-Introducing Approach to Generative Short-Text Conversation

The paper "Sequence to Backward and Forward Sequences: A Content-Introducing Approach to Generative Short-Text Conversation" presents a method for improving neural network-based generative dialogue systems. To address the prevalent problem of generic, non-informative responses, the authors propose a model called seq2BF, or "sequence to backward and forward sequences," which explicitly introduces content into the reply to produce more meaningful conversations.

Key Contributions

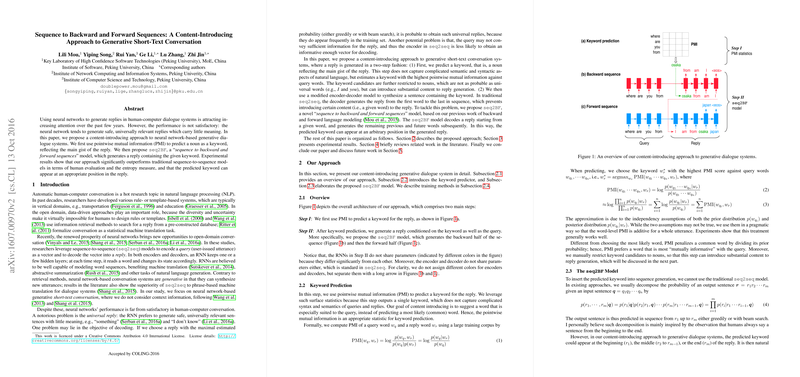

- Content-Introducing Approach: The authors introduce a two-step methodology to incorporate content into responses. Initially, Pointwise Mutual Information (PMI) is utilized to predict a salient keyword (specifically, a noun) that encapsulates the core idea of the expected reply. This keyword serves as an anchor around which the generated reply is structured.

- Seq2BF Model: The seq2BF model generates replies in a non-linear fashion, starting from the keyword and generating both backward and forward sequences to complete the response. This model diverges from traditional sequence-to-sequence (seq2seq) models by allowing the predicted keyword to appear naturally at any position in the generated sentence.

- Empirical Validation: The authors report that seq2BF outperforms traditional seq2seq approaches. The evidence comes from human evaluation and from entropy, a metric of the information diversity of generated replies.
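The keyword-prediction step from the first contribution can be illustrated with a minimal sketch. It assumes PMI is estimated from co-occurrence counts over (query, reply) pairs and that a candidate is scored by summing its PMI with each query word; both choices, along with the names `fit_pmi`, `predict_keyword`, and the toy data, are assumptions of this example rather than details taken from the summary above. The candidate list is assumed to be pre-filtered to nouns.

```python
import math
from collections import Counter


def fit_pmi(pairs):
    """Estimate PMI(query word, reply word) from (query, reply) token pairs."""
    n = len(pairs)
    q_count, r_count, joint = Counter(), Counter(), Counter()
    for query, reply in pairs:
        q_words, r_words = set(query), set(reply)
        q_count.update(q_words)
        r_count.update(r_words)
        joint.update((wq, wr) for wq in q_words for wr in r_words)

    def pmi(wq, wr):
        c = joint[(wq, wr)]
        if c == 0:
            # Simplification (assumption): an unseen pair contributes 0
            # instead of negative infinity.
            return 0.0
        p_joint = c / n
        return math.log(p_joint / ((q_count[wq] / n) * (r_count[wr] / n)))

    return pmi


def predict_keyword(query, candidates, pmi):
    """Pick the candidate (assumed to be a noun) with the highest summed PMI."""
    return max(candidates, key=lambda wr: sum(pmi(wq, wr) for wq in query))


# Hypothetical toy corpus of (query, reply) pairs.
toy_pairs = [
    (["i", "like", "coffee"], ["espresso", "is", "great"]),
    (["coffee", "please"], ["espresso", "or", "latte"]),
    (["i", "like", "tea"], ["jasmine", "is", "great"]),
    (["tea", "please"], ["jasmine", "or", "oolong"]),
]
toy_pmi = fit_pmi(toy_pairs)
keyword = predict_keyword(["i", "like", "coffee"], ["jasmine", "espresso"], toy_pmi)
```

On this toy corpus, "espresso" co-occurs with "coffee" more often than chance would predict, so it scores higher than "jasmine". PMI tends to favor rare words, so a real system would likely need frequency thresholds on the candidate vocabulary.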

Evaluation Metrics and Results

To assess the approach, the authors used both subjective and objective evaluation. Human evaluation was conducted under pointwise and pairwise annotation schemes, and it indicated that seq2BF with PMI-predicted keywords produces more contextually appropriate replies than the baseline models. Entropy served as the objective metric for the diversity of generated responses; seq2BF showed marked improvements over seq2seq on this measure, addressing the critical shortcoming of universally safe but trivial replies.
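As a rough illustration of how an entropy-style metric separates generic from specific replies, the sketch below computes the average information per word (in bits) under a unigram distribution. The exact formula used in the paper is not given here, so the unigram model, the probability floor for unseen words, and the names `unigram_probs` and `mean_entropy` are all assumptions of this example.

```python
import math
from collections import Counter


def unigram_probs(corpus):
    """Relative word frequencies over a tokenized reference corpus."""
    counts = Counter(w for sentence in corpus for w in sentence)
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()}


def mean_entropy(replies, probs, floor=1e-9):
    """Average -log2 p(w) over all words in the replies (bits per word).

    `floor` is an assumed fallback probability for unseen words.
    """
    words = [w for reply in replies for w in reply]
    return -sum(math.log2(probs.get(w, floor)) for w in words) / len(words)


# Hypothetical reference corpus: "a" is frequent, "b" and "c" are rarer.
probs = unigram_probs([["a", "a", "b", "c"]])
generic = mean_entropy([["a"]], probs)        # only the frequent word
specific = mean_entropy([["b", "c"]], probs)  # only the rarer words
```

Replies built from frequent words score lower, which matches the intuition that "safe" replies carry little information.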

Discussion and Implications

Using PMI for keyword prediction introduces richer content into dialogue generation and thereby addresses a limitation of standard seq2seq models, which frequently default to non-specific responses. This suggests that enriching the semantic content of replies can make dialogue systems more interactive and engaging.

The seq2BF model offers flexible sequence generation, because the predicted keyword is not tied to a fixed position in the sentence. This capability is useful for AI-driven conversation systems, as it lets them generate more varied and content-rich responses.
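The way a reply is assembled around the keyword can be sketched as follows. The two decoders are stand-ins for trained neural generators: `backward_decoder` is assumed to emit the words to the left of the keyword in right-to-left order, and `forward_decoder` is assumed to continue from the completed first half. The function names, signatures, and toy decoders are hypothetical and only illustrate the control flow.

```python
from typing import Callable, List

# A decoder takes (query tokens, context tokens) and returns generated tokens.
Decoder = Callable[[List[str], List[str]], List[str]]


def seq2bf_generate(
    query: List[str],
    keyword: str,
    backward_decoder: Decoder,
    forward_decoder: Decoder,
) -> List[str]:
    """Assemble a reply around `keyword` from a backward and a forward pass."""
    # Backward pass: words left of the keyword, produced right-to-left.
    backward = backward_decoder(query, [keyword])
    first_half = list(reversed(backward)) + [keyword]
    # Forward pass: continue left-to-right, conditioned on the first half.
    forward = forward_decoder(query, first_half)
    return first_half + forward


# Toy decoders that return fixed outputs in place of trained models.
def toy_backward(query: List[str], context: List[str]) -> List[str]:
    return ["an", "want", "i"]


def toy_forward(query: List[str], context: List[str]) -> List[str]:
    return ["with", "milk"]


reply = seq2bf_generate(
    ["what", "do", "you", "want"], "espresso", toy_backward, toy_forward
)
```

Because the backward pass may produce any number of words, including none, the keyword can land at the start, middle, or end of the reply.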

Future Directions

Future work can explore the integration of more sophisticated keyword prediction techniques, potentially leveraging advanced neural sentence models to enrich the quality of content introduced during response generation. Additionally, extending seq2BF to other natural language generation tasks, such as generative question answering, presents an intriguing avenue for enhancing context generation in dialogue systems. This would involve leveraging external knowledge bases or datasets to further tailor responses according to the user's context and intent.

In summary, the paper lays a robust foundation for advancing the field of generative dialogue by embedding meaningful content into AI-generated responses, thus aligning system outputs more closely with human conversational norms.