An End-to-End Trainable Neural Network for Image-based Sequence Recognition and Its Application to Scene Text Recognition

Introduction

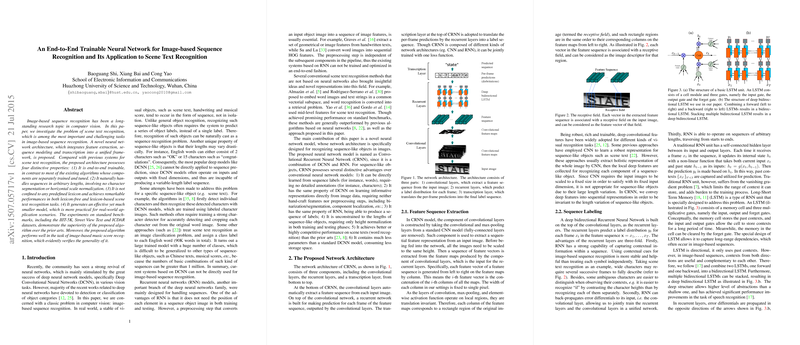

The paper presents a Convolutional Recurrent Neural Network (CRNN) for recognizing sequence-like objects in images. The model combines feature extraction, sequence modeling, and transcription in a single framework that is trained end to end. This design avoids a limitation of earlier methods, whose components (e.g., character detection and character recognition in scene text) were typically trained and tuned separately.

Approach and Methodology

The CRNN architecture incorporates three distinct components:

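- Convolutional Layers: These layers extract a sequence of feature vectors from the input image. The CRNN keeps the convolutional and max-pooling layers of a standard CNN but drops the fully connected layers, so images of different widths yield feature sequences of different lengths. Each feature vector is the concatenation of one column taken across all feature maps, so the sequence runs from left to right over the image.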

- Recurrent Layers: Built from Long Short-Term Memory (LSTM) units, the recurrent layers predict a label distribution for every frame of the feature sequence. Stacked bidirectional LSTMs let each prediction use context from both the left and the right of the frame, which improves sequence prediction.

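- Transcription Layer: This layer converts the per-frame predictions of the recurrent layers into a final label sequence using Connectionist Temporal Classification (CTC). It supports a lexicon-free mode and a lexicon-based mode, in which the output is restricted to a word from a given lexicon.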
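The hand-off between the three components can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the paper's implementation: the feature map is a nested C×H×W list, the recurrent layers are replaced by hand-written per-frame probabilities, class 0 is assumed to be the blank symbol of Connectionist Temporal Classification (CTC), and `map_to_sequence` and `greedy_ctc_decode` are hypothetical helper names. The decoder covers only the lexicon-free mode: it keeps the most probable class at each frame, merges consecutive repeats, and then removes blanks.

```python
from typing import List, Sequence


def map_to_sequence(feature_map: List[List[List[float]]]) -> List[List[float]]:
    """Turn a C x H x W feature map into W frame vectors of length C * H.

    Frame t concatenates column t of every feature map, so the sequence
    runs from left to right across the image.
    """
    channels, height, width = len(feature_map), len(feature_map[0]), len(feature_map[0][0])
    return [
        [feature_map[c][h][t] for c in range(channels) for h in range(height)]
        for t in range(width)
    ]


def greedy_ctc_decode(frame_probs: Sequence[Sequence[float]], alphabet: str) -> str:
    """Lexicon-free transcription: take the best class per frame, then collapse.

    Assumption (hypothetical convention): class 0 is the CTC blank and
    class i >= 1 stands for alphabet[i - 1].
    """
    best = [max(range(len(p)), key=lambda k: p[k]) for p in frame_probs]
    chars = []
    prev = None
    for k in best:
        # Merge consecutive repeats and drop blanks; a blank between two
        # identical labels keeps them separate ("a-a" -> "aa").
        if k != prev and k != 0:
            chars.append(alphabet[k - 1])
        prev = k
    return "".join(chars)


# Toy 2-channel, 2-row, 3-column feature map -> 3 frames of length 4.
fmap = [[[1, 2, 3], [4, 5, 6]], [[7, 8, 9], [10, 11, 12]]]
print(map_to_sequence(fmap))  # [[1, 4, 7, 10], [2, 5, 8, 11], [3, 6, 9, 12]]

# Hand-written per-frame probabilities standing in for the recurrent layers
# (columns: blank, "a", "b").
frames = [
    [0.1, 0.8, 0.1],
    [0.2, 0.7, 0.1],
    [0.9, 0.05, 0.05],
    [0.1, 0.1, 0.8],
    [0.3, 0.1, 0.6],
]
print(greedy_ctc_decode(frames, "ab"))  # ab
```

The lexicon-based mode would additionally restrict the output to lexicon words; that search is not shown here.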

Training and Implementation

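The CRNN is trained end to end on pairs of images and their label sequences. The training objective is the negative log-likelihood of the ground-truth label sequence given the network's per-frame predictions, summed over the training set. The transcription layer obtains this conditional probability by summing over all frame-level alignments that reduce to the label sequence. Optimization uses ADADELTA, which sets per-dimension learning rates automatically, so no learning rate has to be tuned by hand; the authors also found that it converged faster than the momentum method.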
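The objective can be written as O = -Σ log p(l_i | y_i), summed over training pairs (I_i, l_i), where y_i is the per-frame output the network produces for image I_i. The sketch below computes one term of that sum with the standard Connectionist Temporal Classification (CTC) forward recursion in plain probability space and checks it against brute-force enumeration of every frame-level path. The function names, the toy probabilities, and the blank-at-index-0 convention are assumptions for illustration; practical implementations work in log space to avoid underflow on long sequences.

```python
import itertools
import math
from typing import Sequence

BLANK = 0  # assumption: class 0 of every frame distribution is the CTC blank


def ctc_neg_log_likelihood(frame_probs: Sequence[Sequence[float]], label: Sequence[int]) -> float:
    """Return -log p(label | y) using the CTC forward (alpha) recursion.

    frame_probs[t][k] is the softmax probability of class k at frame t;
    label lists non-blank class indices (each >= 1).
    """
    ext = [BLANK]
    for k in label:
        ext += [k, BLANK]  # interleave blanks: "ab" -> "-a-b-"
    S = len(ext)
    alpha = [0.0] * S
    alpha[0] = frame_probs[0][ext[0]]  # a path may start with a blank...
    if S > 1:
        alpha[1] = frame_probs[0][ext[1]]  # ...or with the first label
    for probs in frame_probs[1:]:
        new = [0.0] * S
        for s in range(S):
            total = alpha[s] + (alpha[s - 1] if s >= 1 else 0.0)
            # Skipping the blank at s - 1 is allowed only between two different labels.
            if s >= 2 and ext[s] != BLANK and ext[s] != ext[s - 2]:
                total += alpha[s - 2]
            new[s] = total * probs[ext[s]]
        alpha = new
    # Valid paths end on the last label or on the trailing blank.
    p = alpha[-1] + (alpha[-2] if S > 1 else 0.0)
    return -math.log(p)


def brute_force_prob(frame_probs, label):
    """p(label | y) by enumerating and collapsing every path (exponential; toy sizes only)."""
    total = 0.0
    for path in itertools.product(range(len(frame_probs[0])), repeat=len(frame_probs)):
        collapsed = [k for i, k in enumerate(path) if k != BLANK and (i == 0 or k != path[i - 1])]
        if collapsed == list(label):
            total += math.prod(frame_probs[t][k] for t, k in enumerate(path))
    return total


frames = [[0.5, 0.3, 0.2], [0.4, 0.4, 0.2], [0.3, 0.2, 0.5]]  # columns: blank, 1, 2
nll = ctc_neg_log_likelihood(frames, [1, 2])
print(round(math.exp(-nll), 6))  # 0.268
print(math.isclose(math.exp(-nll), brute_force_prob(frames, [1, 2])))  # True
```

If a label cannot be aligned to the frames at all (for example, more characters than the frames can hold), p is 0 and `math.log` raises `ValueError`.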

Experimental Evaluation

Datasets

Four standard benchmarks for scene text recognition were used: ICDAR 2003 (IC03), ICDAR 2013 (IC13), IIIT 5k-word (IIIT5k), and Street View Text (SVT). The CRNN was trained on a synthetic dataset of 8 million images and tested on real-world datasets without fine-tuning.

Results

The CRNN demonstrated superior or highly competitive performance across various benchmarks:

- IIIT5k: Achieved 97.6% accuracy with a 50-word lexicon.

- SVT: Reached 96.4% accuracy with a 50-word lexicon.

- IC03: Obtained 98.7% accuracy with a 50-word lexicon.

- IC13: Scored 86.7% accuracy in lexicon-free mode.

Notably, the CRNN is not tied to a predefined dictionary, yet it outperformed or matched methods that are, and it performed well in both lexicon-free and lexicon-based recognition.

Application to Optical Music Recognition (OMR)

The paper extends the CRNN framework to Optical Music Recognition, treating it as a sequence recognition task. The network was trained on a corpus of musical score images, with data augmentation to improve robustness. The CRNN clearly outperformed two commercial OMR systems, Capella Scan and PhotoScore, at recognizing pitch sequences on both synthetic and real-world data.

Comparisons and Implications

The paper compares the CRNN with other methods on several attributes: end-to-end trainability, use of convolutional features, no need for character-level ground truth, and the ability to handle an unconstrained lexicon. With 8.3 million parameters, the CRNN model is compact, which makes it efficient and suitable for mobile devices.

Conclusion

The CRNN framework integrates convolutional and recurrent layers to handle sequence recognition tasks in images. It attains high accuracy and robustness without explicit character segmentation or character-level annotation. Its success in both scene text and music score recognition demonstrates the CRNN's versatility and potential for broader applications. Future work could focus on further optimizing the CRNN for speed and deploying it in diverse real-world scenarios.