A Comprehensive Analysis of Mask TextSpotter for Scene Text Recognition

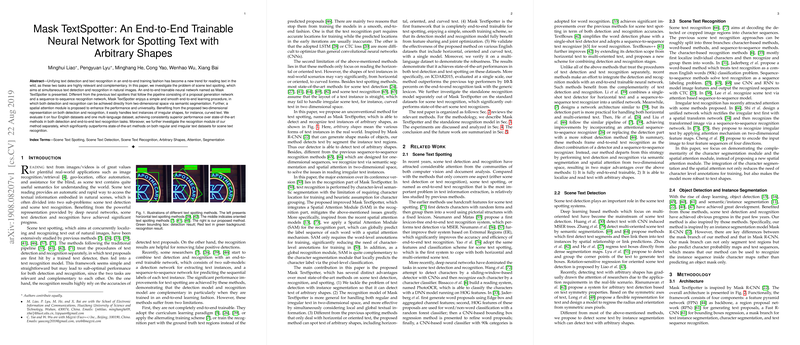

The paper "Mask TextSpotter: An End-to-End Trainable Neural Network for Spotting Text with Arbitrary Shapes" by Minghui Liao et al. addresses the problem of detecting and recognizing text in natural images. It introduces Mask TextSpotter, an end-to-end trainable neural network that performs both detection and recognition for text of arbitrary shape, including curved text.

Key Contributions

Traditional text spotting pipelines typically use separate modules for proposal generation and sequence recognition. Mask TextSpotter instead takes a semantic segmentation approach, so that both detection and recognition operate directly in two-dimensional space, which lets it handle irregularly shaped text instances. The architecture is simple to train, and its Spatial Attention Module (SAM) significantly reduces the dependency on character-level annotations. SAM applies a spatial attention mechanism over the two-dimensional feature map to improve recognition, especially for irregularly shaped text.
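To make the idea of attending in two-dimensional space concrete, here is a minimal sketch of a single spatial attention step in plain Python. It is not the paper's SAM: the function name `spatial_attention`, the use of an unprojected dot product as the score, and the toy feature values are all assumptions made for illustration. A real module would use learned projections and a recurrent decoder state as the query.

```python
import math

def spatial_attention(features, query):
    """One attention step over an H x W grid of C-dimensional feature vectors.

    Hypothetical simplification: scores are raw dot products with the query,
    with no learned projections.
    """
    # Score every spatial position by its dot product with the query vector.
    scores = [[sum(f * q for f, q in zip(cell, query)) for cell in row]
              for row in features]
    # Softmax over all H*W positions (subtract the max for numerical stability).
    peak = max(max(row) for row in scores)
    exps = [[math.exp(s - peak) for s in row] for row in scores]
    total = sum(sum(row) for row in exps)
    weights = [[e / total for e in row] for row in exps]
    # Context vector: attention-weighted sum of the feature vectors.
    channels = len(query)
    context = [0.0] * channels
    for feature_row, weight_row in zip(features, weights):
        for cell, w in zip(feature_row, weight_row):
            for c in range(channels):
                context[c] += w * cell[c]
    return weights, context

# Toy 2 x 2 feature map with 2 channels; the bottom-right cell matches the query best.
features = [[[1.0, 0.0], [0.0, 1.0]],
            [[0.0, 0.0], [5.0, 0.0]]]
weights, context = spatial_attention(features, query=[1.0, 0.0])
```

Because the weights are normalized over the whole grid rather than along a one-dimensional sequence, the attended position can follow a curved line of characters without first rectifying the text.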

Experimental Evaluation

Mask TextSpotter is evaluated on multiple datasets, including ICDAR2013, ICDAR2015, Total-Text, COCO-Text, and MLT. The authors report that it outperforms prior state-of-the-art methods in both text detection and end-to-end recognition. Notably, on ICDAR2015 it achieves a 10.5 percent improvement in the end-to-end recognition task with a generic lexicon, which indicates robustness to diverse text orientations and shapes.

The experiments also highlight the network's flexibility in addressing scene text challenges without heavily relying on lexicons. The standalone recognition model further substantiates the efficacy of the proposed recognition module, surpassing leading scene text recognizers on standard benchmarks for both regular and irregular text datasets.
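For readers unfamiliar with lexicon-based evaluation, the sketch below shows one common way a lexicon can be used: the raw prediction is replaced by the closest lexicon word. It uses plain Levenshtein distance, which is an assumption for illustration; the paper's own matching procedure may differ. The names `edit_distance` and `match_lexicon` and the sample words are hypothetical.

```python
def edit_distance(a, b):
    """Levenshtein distance between strings a and b (unit costs)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # delete ca
                            curr[j - 1] + 1,      # insert cb
                            prev[j - 1] + cost))  # substitute or match
        prev = curr
    return prev[-1]

def match_lexicon(prediction, lexicon):
    """Return the lexicon word closest to the prediction, ignoring case."""
    pred = prediction.lower()
    return min(lexicon, key=lambda word: edit_distance(pred, word.lower()))

# A misread character is corrected by snapping to the nearest lexicon entry.
corrected = match_lexicon("STARBUOKS", ["Starbucks", "Station", "Stars"])
```

The larger and more generic the lexicon, the less help this correction step gives, which is why results with a generic lexicon say more about the recognizer itself than results with a small per-image lexicon.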

Technical Insights

- Network Architecture: A Feature Pyramid Network (FPN) backbone lets Mask TextSpotter handle text of widely varying sizes. Because the network avoids the sequential modules traditionally used for character recognition, detection and recognition can be trained jointly, end to end.

- Instance Segmentation: The text detection problem is recast as an instance segmentation task, akin to the techniques employed in Mask R-CNN, facilitating the detection of texts with arbitrary shapes.

- Spatial Attention Module (SAM): SAM supplements the character segmentation map, functioning without direct dependence on character-level location annotations. It effectively predicts word sequences by attending to crucial spatial features, boosting recognition accuracy.

- Recognition Versatility: The ability to work with a wide spectrum of text shapes, including those that are curved or non-uniformly spaced, sets Mask TextSpotter apart from previous methodologies that typically focus on horizontal or oriented text.
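As an illustration of how a character segmentation map can be turned into a string, the following sketch groups foreground pixels into connected regions, lets each region vote for its most frequent class, and reads the regions from left to right. It is a simplified stand-in, not the paper's decoding procedure: the function name `decode_character_map`, the use of hard per-pixel labels instead of probabilities, and the left-to-right ordering are assumptions, and the ordering in particular would not suit vertical or strongly curved text.

```python
from collections import Counter, deque

def decode_character_map(label_map, alphabet):
    """Read a string from an H x W grid of per-pixel class indices.

    Class 0 is background; class k (k >= 1) maps to alphabet[k - 1].
    """
    height, width = len(label_map), len(label_map[0])
    seen = [[False] * width for _ in range(height)]
    regions = []  # (mean column of region, decoded character)
    for y in range(height):
        for x in range(width):
            if label_map[y][x] == 0 or seen[y][x]:
                continue
            # Flood-fill one connected foreground region (4-connectivity).
            queue = deque([(y, x)])
            seen[y][x] = True
            votes = Counter()
            columns = []
            while queue:
                cy, cx = queue.popleft()
                votes[label_map[cy][cx]] += 1
                columns.append(cx)
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if (0 <= ny < height and 0 <= nx < width
                            and not seen[ny][nx] and label_map[ny][nx] != 0):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            # The region takes the class that most of its pixels voted for.
            winner = votes.most_common(1)[0][0]
            regions.append((sum(columns) / len(columns), alphabet[winner - 1]))
    # Order characters by horizontal position (assumes roughly horizontal text).
    regions.sort(key=lambda region: region[0])
    return "".join(char for _, char in regions)

# Three character regions; one pixel in the first region is mislabeled as class 2.
toy_map = [[1, 1, 0, 2, 2, 0, 3],
           [1, 2, 0, 2, 2, 0, 3]]
decoded = decode_character_map(toy_map, alphabet="abc")
```

The voting step shows why region-level decoding tolerates a few mislabeled pixels: the stray class-2 pixel in the first region is outvoted by its neighbors.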

Implications and Future Work

Mask TextSpotter's results on multi-language datasets such as MLT suggest broader applications in global text recognition scenarios. The framework's adaptability points to potential uses in augmented reality systems, real-time language translation, and automated data entry from sources with varied text presentations.

Future research directions identified in the paper include efforts to enhance the model's efficiency, particularly by refining the detection stage, which remains the computational bottleneck. Further explorations may involve leveraging more advanced detection frameworks or adopting lightweight architectures to expedite processing while maintaining, or even enhancing, the model's current performance metrics.

In conclusion, Mask TextSpotter advances scene text spotting by unifying detection and recognition in a single end-to-end trainable network that handles text of arbitrary shape.