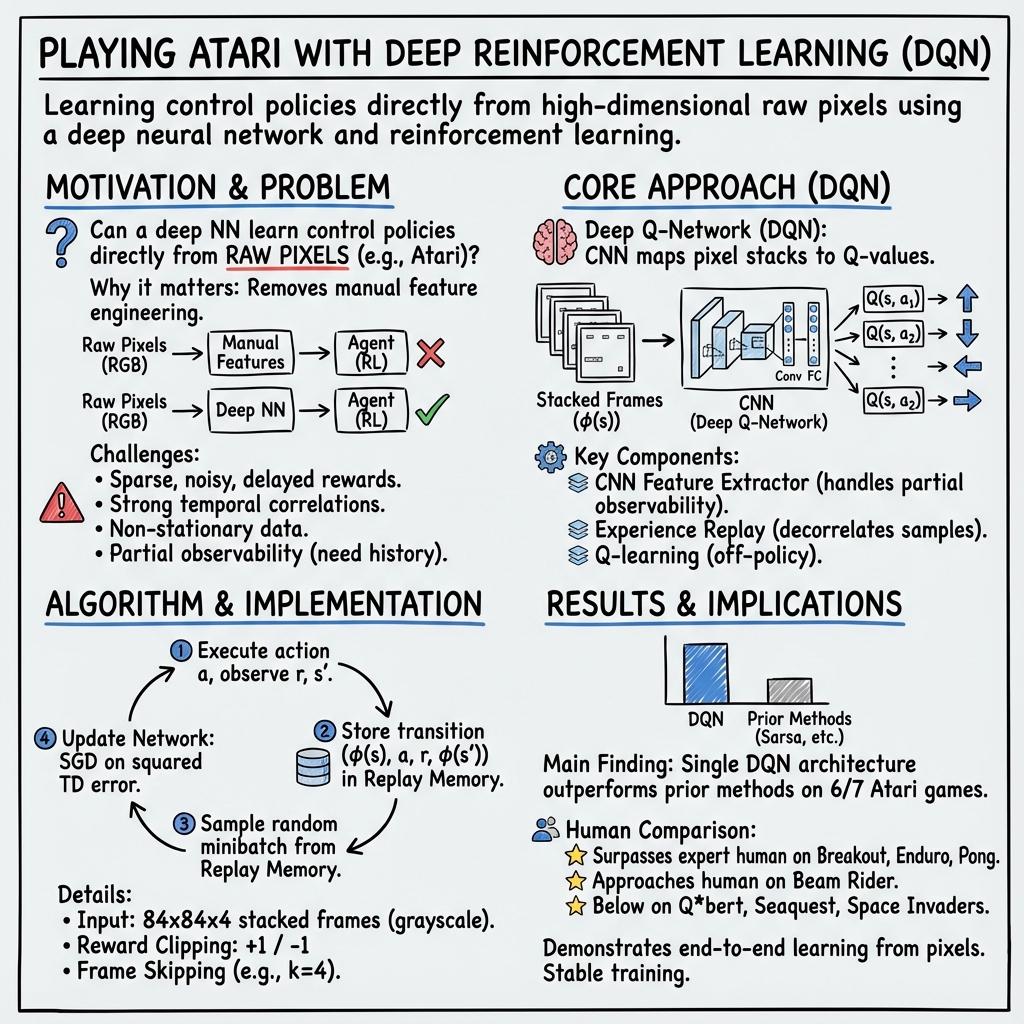

- The paper introduces a deep Q-network that directly learns control policies from raw pixel inputs in Atari games.

- It trains a convolutional neural network with an experience replay mechanism, which stabilizes learning from correlated samples and from sparse, noisy, delayed rewards.

- Empirical results on seven Atari 2600 games show that the DQN outperforms previous reinforcement learning methods on six of them and surpasses a human expert on three.

Playing Atari with Deep Reinforcement Learning: An Overview

This paper presents a deep learning framework for reinforcement learning (RL) that learns control policies directly from high-dimensional sensory input, specifically raw pixels, using a variant of Q-learning. The approach uses a convolutional neural network (CNN), referred to as a Deep Q-Network (DQN), that maps input pixels to a value function estimating future rewards. Applied to seven Atari 2600 games in the Arcade Learning Environment (ALE), the model outperforms earlier methods on six of them and surpasses a human expert on three.
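For reference, the value function in question can be written in standard Q-learning notation. The symbols below are not defined in this overview and are introduced here as assumptions: $s$ and $a$ are the current state and action, $r$ the reward, $s'$ and $a'$ the next state and action, $\gamma$ the discount factor, and $\theta_i$ the network weights at iteration $i$.

$$
Q^*(s, a) = \mathbb{E}_{s'}\left[\, r + \gamma \max_{a'} Q^*(s', a') \,\middle|\, s, a \right]
$$

$$
L_i(\theta_i) = \mathbb{E}_{s, a}\left[\left(y_i - Q(s, a; \theta_i)\right)^2\right],
\qquad
y_i = \mathbb{E}_{s'}\left[\, r + \gamma \max_{a'} Q(s', a'; \theta_{i-1}) \,\middle|\, s, a \right]
$$

The first line is the Bellman optimality equation that the optimal action-value function satisfies. The second is the squared-error loss that a network $Q(s, a; \theta)$ would minimize when trained toward targets $y_i$ computed from its own earlier weights.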

Introduction and Theoretical Foundation

The primary challenge addressed in the paper is learning control policies from high-dimensional, unprocessed sensory inputs. Prior RL applications relied heavily on handcrafted feature representations combined with linear value functions or policy representations. Deep learning can extract high-level features from raw data, which makes it a natural candidate for RL problems with sensory inputs. However, RL adds difficulties that supervised deep learning methods do not normally face: rewards are sparse, noisy, and delayed, successive samples are highly correlated, and the data distribution shifts as the agent's behavior changes.

Methodological Approach

The proposed approach combines a CNN with a variant of the Q-learning algorithm and updates the weights by stochastic gradient descent (SGD). To mitigate the problems of correlated data and non-stationary distributions, the authors employ an experience replay mechanism: the agent's experiences are stored in a memory, and training batches are sampled from it at random, which smooths the training distribution and stabilizes learning. The DQN is trained on raw frames from several Atari games without game-specific modifications, showing that one method carries over across different game environments.
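The replay mechanism described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the names `Transition`, `ReplayMemory`, and `q_target`, the buffer capacity, and the default discount `gamma` are all introduced here, and `q_values_next` stands in for whatever the network outputs for the next state.

```python
import random
from collections import deque, namedtuple

# One stored step of agent experience (field names are assumptions).
Transition = namedtuple("Transition", "state action reward next_state done")


class ReplayMemory:
    """Fixed-capacity buffer; the oldest transitions are dropped first."""

    def __init__(self, capacity):
        self.buffer = deque(maxlen=capacity)

    def push(self, state, action, reward, next_state, done):
        self.buffer.append(Transition(state, action, reward, next_state, done))

    def sample(self, batch_size, rng=random):
        # Uniform sampling without replacement breaks up the temporal
        # correlation between consecutive steps of the same episode.
        return rng.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


def q_target(transition, q_values_next, gamma=0.99):
    """Q-learning target: r if the episode ended, else r + gamma * max Q(s', a')."""
    if transition.done:
        return transition.reward
    return transition.reward + gamma * max(q_values_next)


if __name__ == "__main__":
    memory = ReplayMemory(capacity=3)
    for t in range(5):
        memory.push(state=t, action=0, reward=1.0, next_state=t + 1, done=(t == 4))
    batch = memory.sample(2)
    print(len(memory), [tr.state for tr in batch])
```

Each sampled transition would then contribute one squared-error term between `q_target(...)` and the network's current estimate for the stored state and action; the gradient step itself is omitted here because it depends on the chosen deep learning library.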

Experimental Setup

The DQN was evaluated on seven Atari 2600 games: Beam Rider, Breakout, Enduro, Pong, Q*bert, Seaquest, and Space Invaders. Each game presents the agent with high-dimensional visual input and poses a different task. The network architecture and hyperparameters were kept the same across all games, which underscores the robustness of the approach. To reduce input dimensionality, a preprocessing step converted RGB frames to grayscale, then downsampled and cropped them, yielding an 84x84 pixel representation.
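The preprocessing steps can be sketched in plain Python as follows. Several details are assumptions not stated in this overview: the 210x160 raw frame size, the 110x84 intermediate size, the luminance weights, the nearest-neighbour resampling, and the crop offset `crop_top` (chosen arbitrarily here). The function names are hypothetical.

```python
def to_grayscale(frame):
    """Convert rows of (r, g, b) tuples to rows of luminance values."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row] for row in frame]


def downsample(image, out_h, out_w):
    """Nearest-neighbour resize; a simplification, any resampling filter would do."""
    in_h, in_w = len(image), len(image[0])
    return [
        [image[y * in_h // out_h][x * in_w // out_w] for x in range(out_w)]
        for y in range(out_h)
    ]


def preprocess(frame, crop_top=18):
    """Grayscale -> 110x84 downsample -> 84x84 crop of rows."""
    gray = to_grayscale(frame)
    small = downsample(gray, 110, 84)
    # Keep 84 consecutive rows; crop_top is an assumed offset.
    return small[crop_top:crop_top + 84]


if __name__ == "__main__":
    # Synthetic all-white frame with the assumed 210x160 Atari resolution.
    raw = [[(255, 255, 255)] * 160 for _ in range(210)]
    out = preprocess(raw)
    print(len(out), len(out[0]))
```

A production pipeline would normally do this with an array library rather than nested lists; the list version is used only to keep the sketch dependency-free.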

Numerical Results and Analysis

The experimental results show that the DQN outperformed previous RL algorithms on six of the seven games, and that on Breakout, Enduro, and Pong it also surpassed human expert scores. Table 1 in the paper provides a detailed comparison of the DQN against other RL methods, such as Sarsa and Contingency, as well as against human benchmarks. The DQN achieved these scores from raw pixels alone, whereas the other learned methods incorporated considerable prior knowledge about the visual problem (e.g., background subtraction, separate color channels).

Practical and Theoretical Implications

In practical terms, the research shows that deep learning can be applied directly to RL problems with complex sensory inputs. The results suggest that deep learning models can automatically extract the features needed for control tasks, reducing the dependence on human-crafted features. The same method works across different tasks within the same environment (Atari 2600 games), which is promising for future AI applications in more complex and variable real-world tasks.

Theoretically, combining experience replay with a convolutional Q-network presents a practical method for stabilizing the training of deep networks in RL. The framework addresses well-documented problems of training instability and divergence that arise when RL is combined with nonlinear function approximators.

Future Prospects

The promising results from this study pave the way for future research in several directions:

- Scaling to More Complex Tasks: Extending this approach to more complex and varied RL tasks beyond Atari games could confirm the model's robustness and generalizability.

- Advanced Sampling Techniques: Implementing prioritized experience replay to further enhance learning efficiency by emphasizing more informative training samples.

- Integration with Other Learning Paradigms: Combining this model with other learning paradigms, such as supervised and unsupervised learning for pre-training, could enhance the efficiency and performance of RL algorithms.

- Policy Transfer and Multi-task Learning: Exploring mechanisms for transferring learned policies across different tasks and environments, facilitating multi-task learning and generalization.
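As an illustration of the "Advanced Sampling Techniques" item in the list above, one simple scheme samples stored transitions in proportion to the magnitude of their temporal-difference error. This is a hypothetical sketch of one possible approach, not a method evaluated in the paper; the function names, the `eps` offset, and the proportional weighting are all assumptions.

```python
import random


def td_priority(reward, gamma, max_q_next, q_current, eps=1e-3):
    """Priority = |TD error| + eps, so every transition keeps a nonzero chance."""
    return abs(reward + gamma * max_q_next - q_current) + eps


def sample_prioritized(transitions, priorities, batch_size, rng=random):
    """Draw transitions (with replacement) with probability proportional to priority."""
    return rng.choices(transitions, weights=priorities, k=batch_size)


if __name__ == "__main__":
    transitions = ["t0", "t1", "t2"]
    priorities = [
        td_priority(reward=0.0, gamma=0.9, max_q_next=0.0, q_current=0.0),
        td_priority(reward=1.0, gamma=0.9, max_q_next=2.0, q_current=0.5),
        td_priority(reward=0.0, gamma=0.9, max_q_next=1.0, q_current=1.0),
    ]
    print(sample_prioritized(transitions, priorities, batch_size=4))
```

Sampling non-uniformly changes the distribution the network is trained on, so a full implementation would usually also correct for that bias; the sketch leaves this out.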

In conclusion, this paper marks a significant advance in applying deep learning to reinforcement learning, particularly for visual control tasks. The results obtained with the DQN framework provide a strong foundation for further exploration and development within the field of AI.