NT-Xent Loss for Contrastive Learning

- NT-Xent loss is a contrastive function that maps augmented views of the same input close together while distancing embeddings of distinct inputs.

- It employs cosine similarity, temperature scaling, and batch negatives to optimize discriminative representations in self-supervised frameworks.

- This loss underpins advances in computer vision, graph learning, audio processing, and recommendation systems, driving robust empirical performance.

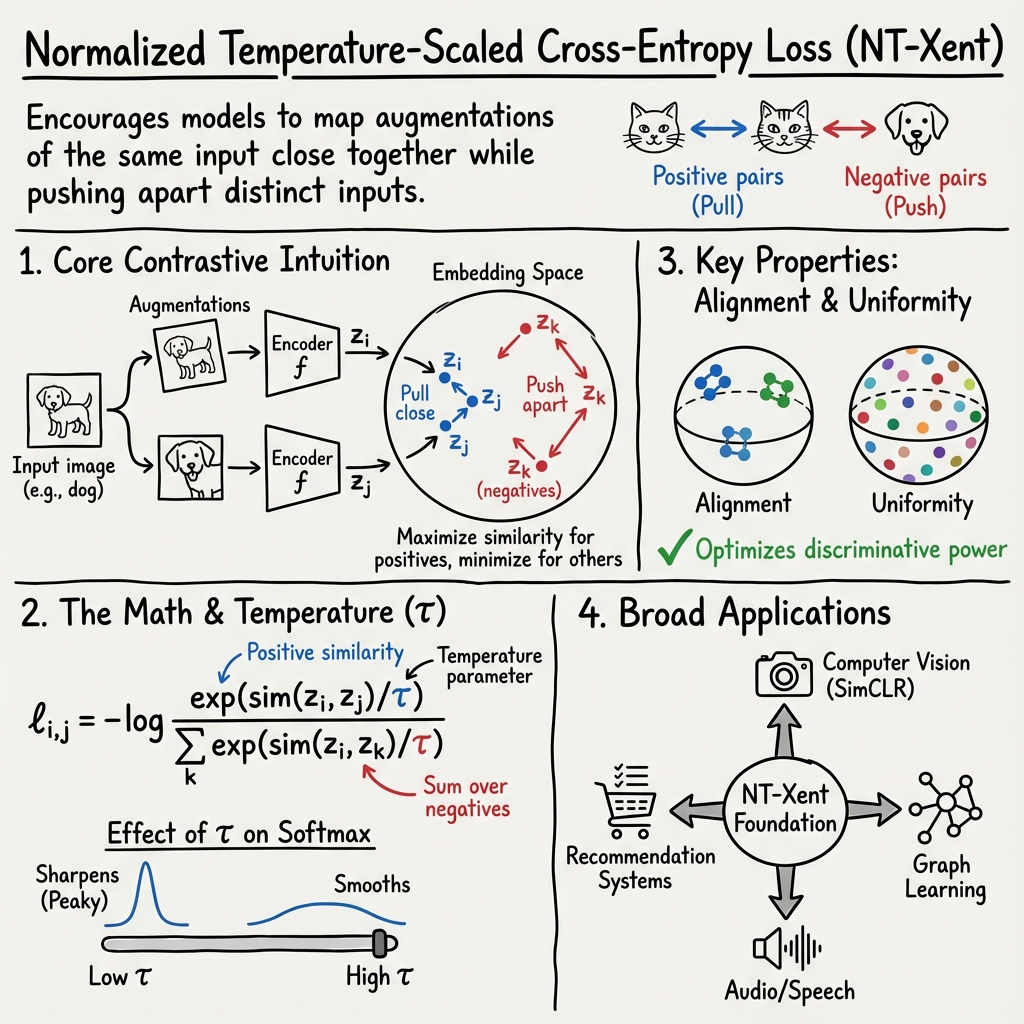

The Normalized Temperature-Scaled Cross-Entropy Loss (NT-Xent) is a pivotal loss function in contemporary self-supervised and contrastive representation learning. It is designed to encourage models to map augmentations of the same input (positive pairs) close together in the embedding space while pushing apart representations of distinct inputs (negative pairs), leveraging batch-based, temperature-scaled softmax formulations. The NT-Xent loss underlies a broad family of frameworks and is foundational to advances in computer vision, graph learning, speech, music information retrieval, and more.

1. Mathematical Formulation and Key Properties

At its core, the NT-Xent loss—central to frameworks such as SimCLR—is a variant of the cross-entropy loss applied in a contrastive, unlabeled context.

Given representations $z_i$ and $z_j$ (e.g., features of two augmented views of the same input), and a set of negatives $\{z_k\}_{k \neq i, j}$, the standard NT-Xent loss for a positive pair $(i, j)$ is:

$\ell_{i,j} = -\log \frac{\exp\left(\operatorname{sim}(z_i, z_j) / \tau\right)}{\sum_{k=1}^{2N} \mathbbm{1}_{[k \neq i]} \exp\left(\operatorname{sim}(z_i, z_k) / \tau\right)}$

where:

- $\operatorname{sim}(\cdot, \cdot)$ is typically the cosine similarity,

- $\tau$ is the temperature parameter,

- $2N$ is the total number of embeddings in the batch, i.e., two augmented views for each of $N$ samples,

- $\mathbbm{1}_{[k \neq i]}$ excludes the anchor from the denominator.

Temperature scaling ($\tau$) controls the "peakiness" of the softmax: a lower $\tau$ sharpens the relative preference among positives and negatives, while a higher $\tau$ smooths the loss surface and softens predictions (Agarwala et al., 2020).

The loss encourages high similarity between positive pairs and low similarity to all other elements in the batch (negatives), directly optimizing the discriminative power of the embedding space (Ågren, 2022).
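The formula above can be sketched in plain Python as follows. This is a minimal illustration, not a reference implementation: the function names (`cosine_similarity`, `nt_xent_pair`, `nt_xent_batch`), the default `tau=0.5`, and the toy embeddings are assumptions made for the example, and averaging over both orderings of each positive pair follows the common SimCLR convention rather than anything stated in the text.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def nt_xent_pair(z, i, j, tau=0.5):
    """Loss l_{i,j} for anchor i and positive j over all 2N embeddings in z."""
    # Numerator: temperature-scaled similarity of the positive pair.
    pos = cosine_similarity(z[i], z[j]) / tau
    # Denominator: every embedding except the anchor itself (k != i).
    logits = [cosine_similarity(z[i], z[k]) / tau for k in range(len(z)) if k != i]
    # Log-sum-exp with max subtraction for numerical stability.
    m = max(logits)
    log_denominator = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_denominator - pos


def nt_xent_batch(view_a, view_b, tau=0.5):
    """Mean loss over both orderings (i, j) and (j, i) of each positive pair."""
    n = len(view_a)
    z = list(view_a) + list(view_b)  # index p is paired with index p + n
    total = 0.0
    for p in range(n):
        total += nt_xent_pair(z, p, p + n, tau)
        total += nt_xent_pair(z, p + n, p, tau)
    return total / (2 * n)


# Toy embeddings (assumed): the second call swaps the views so positives mismatch.
anchors = [[1.0, 0.0], [0.0, 1.0]]
aligned_loss = nt_xent_batch(anchors, [[0.9, 0.1], [0.1, 0.9]])
shuffled_loss = nt_xent_batch(anchors, [[0.1, 0.9], [0.9, 0.1]])
print(aligned_loss, shuffled_loss)
```

In this toy batch the loss is lower when each anchor's positive is the nearby vector than when the views are swapped, which is the behaviour the objective is meant to reward.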

2. Theoretical Foundations, Guarantees, and Variants

NT-Xent is closely related to the classical softmax cross-entropy. Theoretical analysis places it within a family of "comp-sum" losses, specifically as a temperature-scaled multinomial cross-entropy (Mao et al., 2023). Key theoretical findings include:

- $\mathcal{H}$-Consistency and Generalization Bounds: Minimizing NT-Xent, when viewed as a comp-sum loss, provides non-asymptotic upper bounds on zero-one classification error, parameterized by minimizability gaps and the expressiveness of the model class.

- Tightness and Coverage: The NT-Xent loss is both a tight and covering surrogate, enabling provable convergence toward Bayes-optimal representations under sufficient hypothesis class expressivity.

- Smooth Adversarial Variants: Regularized, smooth adversarial comp-sum losses—of which NT-Xent is a special case—offer robustness guarantees and outperform standard regularization under adversarial perturbations.

Variants and extensions have emerged:

- GNT-Xent (Gradient-stabilized NT-Xent): Modifies the denominator to exclude the positive pair’s similarity. This adjustment stabilizes the gradients, preventing the vanishing gradient issue of standard NT-Xent and resulting in steadier and faster convergence (Tu et al., 2020).

- Soft Target InfoNCE: Extends NT-Xent to allow probabilistic (soft) targets, synthesizing strong regularization techniques like label smoothing and MixUp with contrastive learning (Hugger et al., 2024).
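The gradient argument behind the positive-excluded denominator can be illustrated numerically. The sketch below is based only on the one-sentence description of GNT-Xent given here and has not been checked against the exact formulation of Tu et al. (2020); the function names, similarity values, and temperature are hypothetical.

```python
import math


def standard_loss(pos_sim, neg_sims, tau):
    """NT-Xent for one anchor: the positive term appears in the denominator."""
    terms = [pos_sim / tau] + [s / tau for s in neg_sims]
    m = max(terms)
    return m + math.log(sum(math.exp(t - m) for t in terms)) - pos_sim / tau


def positive_excluded_loss(pos_sim, neg_sims, tau):
    """Variant described above: the denominator sums over negatives only."""
    terms = [s / tau for s in neg_sims]
    m = max(terms)
    return m + math.log(sum(math.exp(t - m) for t in terms)) - pos_sim / tau


def grad_wrt_positive(loss_fn, pos_sim, neg_sims, tau, eps=1e-6):
    """Central finite-difference estimate of d(loss) / d(pos_sim)."""
    upper = loss_fn(pos_sim + eps, neg_sims, tau)
    lower = loss_fn(pos_sim - eps, neg_sims, tau)
    return (upper - lower) / (2 * eps)


# Hypothetical, already well-separated pair: positive near 1, negatives below 0.
pos_sim, neg_sims, tau = 0.99, [-0.5, -0.4, -0.6], 0.1
g_standard = grad_wrt_positive(standard_loss, pos_sim, neg_sims, tau)
g_variant = grad_wrt_positive(positive_excluded_loss, pos_sim, neg_sims, tau)
print(g_standard, g_variant)
```

With the positive in the denominator, the gradient with respect to the positive similarity is $-(1 - p_{ij})/\tau$, where $p_{ij}$ is the softmax probability of the positive, so it shrinks toward zero once the positive dominates. With the positive removed, the gradient stays at $-1/\tau$ regardless of how well separated the pair already is.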

3. Temperature Parameter and Calibration

The temperature parameter is pivotal to NT-Xent:

- Lowering the temperature ($\tau$) sharpens the softmax, emphasizing the relative ranking of positive versus negative pairs, but can introduce optimization instability.

- Higher temperature makes the loss surface smoother but may weaken discrimination.

- Optimal performance is achieved by tuning $\tau$ over a wide range, with the best value being model- and architecture-dependent (Agarwala et al., 2020).

- Calibration strategies such as temperature scaling and, in recent work, focal temperature scaling offer methods to tune model confidence and distribution sharpness after training. Composed temperature and non-linear (focal) transforms further enhance calibration, particularly in multiclass settings (Komisarenko et al., 2024).
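The sharpening and smoothing effect of the temperature can be seen directly on a small set of similarities. This is an illustrative sketch only: the similarity values and the three temperatures are hypothetical and are not recommended settings.

```python
import math


def softmax_with_temperature(similarities, tau):
    """Softmax over similarities / tau, computed with max subtraction."""
    scaled = [s / tau for s in similarities]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical similarities: index 0 is the positive, the rest are negatives.
sims = [0.8, 0.6, 0.1, -0.3]
positive_prob = {}
for tau in (0.05, 0.5, 5.0):
    probs = softmax_with_temperature(sims, tau)
    positive_prob[tau] = probs[0]
    print(f"tau={tau}: " + ", ".join(f"{p:.3f}" for p in probs))
```

As the temperature drops, the probability mass concentrates on the most similar candidate; as it rises, the distribution approaches uniform, so the loss distinguishes less between hard and easy negatives.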

4. Practical Applications Across Domains

NT-Xent loss is widely applied beyond canonical computer vision:

- Graph Neural Networks: Used for node-level contrastive learning, NT-Xent underpins representation extraction for downstream node classification tasks. Innovations such as column-wise batch normalization (as opposed to row-wise MLP heads) significantly improve both alignment (of positives) and uniformity (spread of embeddings) of learned representations, enhancing both robustness and accuracy (Hong et al., 2023).

- Low-resource Audio Keyword Spotting: In speech, NT-Xent directly optimizes transformer-based acoustic word embeddings for discriminability under very low-resource and cross-lingual conditions, outperforming dynamic time warping and RNN-based baselines (Herreilers et al., 2025).

- Music Information Retrieval: In ViT-1D transformers, NT-Xent loss (applied to a class token only) results in the emergence of discriminative local features within sequence tokens, enabling strong performance on both global and local musical tasks without explicit supervision on local information (Kong et al., 2025).

- Sequential Recommendation Systems: When using sampled negatives due to large output spaces, scaling the normalization term (inspired by scaled cross-entropy) corrects denominator bias, vastly improving both theoretical soundness and empirical ranking performance (Xu et al., 2024).
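The denominator bias from sampled negatives, and the effect of scaling the negative sum, can be sketched as follows. This is an illustration under stated assumptions rather than the method of Xu et al. (2024): the function name `sampled_loss`, the Gaussian logits, and in particular the choice of scale factor (total negatives divided by sampled negatives) are all hypothetical.

```python
import math
import random


def sampled_loss(pos_logit, neg_logits, scale=1.0):
    """-log( e^pos / (e^pos + scale * sum_k e^neg_k) ), computed stably."""
    m = max([pos_logit] + list(neg_logits))
    neg_sum = sum(math.exp(x - m) for x in neg_logits)
    denominator = math.exp(pos_logit - m) + scale * neg_sum
    return m + math.log(denominator) - pos_logit


random.seed(0)
# Hypothetical logits: one positive item and 10,000 negative items.
pos_logit = 2.0
all_negatives = [random.gauss(0.0, 1.0) for _ in range(10_000)]
sampled = random.sample(all_negatives, 100)

full = sampled_loss(pos_logit, all_negatives)
unscaled = sampled_loss(pos_logit, sampled)
# Assumption: scale the sampled sum by (total negatives) / (sampled negatives).
scaled = sampled_loss(pos_logit, sampled, scale=len(all_negatives) / len(sampled))
print(full, unscaled, scaled)
```

Because the unscaled sampled denominator sums over only a subset of the negatives, it underestimates the full normalization term and the loss comes out too small. Multiplying the sampled sum by a scale factor moves it back toward the full-softmax value.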

The table below summarizes NT-Xent's core characteristics in select domains:

| Domain | Key Role of NT-Xent | Empirical Impact |
|---|---|---|
| Computer Vision | Self-supervised representation learning | SOTA on CIFAR10/SVHN, robust small-batch learning (Tu et al., 2020) |
| Graph Learning | Node embedding alignment/uniformity | >1% accuracy gain, faster convergence (Hong et al., 2023) |
| Audio/Speech | Acoustic embedding discriminability | +47% MAP over DTW baseline (Luganda KWS) (Herreilers et al., 2025) |
| Recommender Systems | Efficient contrastive ranking | Matches full softmax with scaled negatives (Xu et al., 2024) |
| Music IR | Global/local sequence feature emergence | Sequence tokens competitive for local tasks (Kong et al., 2025) |

5. Implementation Considerations and Empirical Insights

- Batch Size: The denominator in NT-Xent approximates the full normalization by using batch negatives. Larger batches improve the quality of the negative set and enhance representation quality, as shown by the explicit dependence of the NT-Xent loss upper bound on the batch size (Ågren, 2022).

- Scaling of Negatives: For large output spaces (e.g., recommendation), scaling the sum over negatives as in scaled cross-entropy corrects the denominator’s underestimation due to limited sample size, aligning NT-Xent with the true theoretical optimum (Xu et al., 2024).

- Sensitivity to Data Structure: NT-Xent's assumption that negatives are truly dissimilar can be violated in small or unbalanced datasets, leading to the "false negative" problem. Remedies include guided batch construction (e.g., using pseudo-labels) that ensures negatives do not share semantic categories (Chakraborty et al., 2020).

- Postprocessing: In graph and other domains, column-wise batch normalization on embeddings post-encoder can improve both alignment and uniformity, optimizing latent space geometry for downstream tasks (Hong et al., 2023).
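To make the column-wise normalization in the last item concrete, the sketch below standardizes each embedding dimension across the batch. It is a simplified stand-in, assuming no learnable scale or shift and no running statistics; whether this matches the exact layer used by Hong et al. (2023) is not established here, and the function name and sample batch are hypothetical.

```python
import math


def columnwise_standardize(embeddings, eps=1e-5):
    """Standardize each embedding dimension (column) across the batch (rows)."""
    n = len(embeddings)
    dim = len(embeddings[0])
    # Per-column mean and (biased) variance, computed over the batch.
    means = [sum(row[d] for row in embeddings) / n for d in range(dim)]
    variances = [
        sum((row[d] - means[d]) ** 2 for row in embeddings) / n for d in range(dim)
    ]
    return [
        [(row[d] - means[d]) / math.sqrt(variances[d] + eps) for d in range(dim)]
        for row in embeddings
    ]


# Hypothetical batch of three 2-dimensional embeddings with mismatched scales.
batch = [[1.0, 10.0], [2.0, 20.0], [3.0, 60.0]]
normalized = columnwise_standardize(batch)
for row in normalized:
    print([round(x, 3) for x in row])
```

Each column ends up with roughly zero mean and unit variance over the batch, so no single dimension dominates the cosine similarity. Row-wise normalization, by contrast, rescales each embedding on its own and leaves the relative scale of the dimensions unchanged.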

6. Open Issues and Research Directions

Current research highlights several frontiers:

- Tightness of Upper Bounds: The theoretical upper bound for average positive pair similarity under NT-Xent is established, but practical tightness (how close models come to saturating this bound) and its dependence on batch composition and temperature remain open questions (Ågren, 2022).

- Scaling and Sampling Strategies: Further analysis is needed on optimal scaling of negatives when only a small subset is available, and on how best to combine temperature scaling with normalization scaling in large-scale contrastive objectives (Xu et al., 2024).

- Adversarial Robustness and Regularization: Smooth adversarial extensions of NT-Xent offer empirical and theoretical gains in robustness; efficient computation and the parameterization of the robustness trade-off remain active areas of work (Mao et al., 2023).

- Combining Soft and Hard Targets: Soft target InfoNCE provides a route for integrating powerful probabilistic regularizers with contrastive loss, matching or surpassing hard-label baselines on large-scale datasets (Hugger et al., 2024).
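A generic way to pair soft targets with a contrastive softmax is to take the cross-entropy between a target distribution and the softmax over temperature-scaled similarities. The sketch below shows only that generic construction and should not be read as the published Soft Target InfoNCE objective of Hugger et al. (2024); the function names, similarity values, and the smoothed target are assumptions.

```python
import math


def log_softmax(logits):
    """Numerically stable log-softmax over a list of logits."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]


def soft_target_contrastive_loss(similarities, targets, tau=0.5):
    """Cross-entropy between a target distribution and softmax(sim / tau)."""
    log_probs = log_softmax([s / tau for s in similarities])
    return -sum(t * lp for t, lp in zip(targets, log_probs))


# Hypothetical anchor-to-candidate similarities; index 0 is the positive.
sims = [0.9, 0.2, -0.1, 0.4]
hard = [1.0, 0.0, 0.0, 0.0]        # one-hot target: the usual single-positive loss
smooth = [0.85, 0.05, 0.05, 0.05]  # label-smoothing-style target (assumed values)
hard_loss = soft_target_contrastive_loss(sims, hard)
soft_loss = soft_target_contrastive_loss(sims, smooth)
print(hard_loss, soft_loss)
```

With a one-hot target the expression reduces to the negative log-probability of the positive, so the hard-label objective is recovered as a special case. A smoothed target also assigns some weight to the other candidates.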

7. Summary

The NT-Xent loss is a general-purpose, theoretically justified, and empirically effective objective for learning normalized, discriminative representations in self-supervised and contrastive settings. Its performance is sensitive to batch composition, temperature, and normalization practices, and it admits modular enhancements such as gradient stabilization, adversarial smoothing, soft labeling, and negative scaling. Ongoing work explores its theoretical limits, optimal calibration, and domain-specific refinements. The loss continues to underpin state-of-the-art advances across vision, graphs, audio, and recommendation, with broad implications for the design of scalable and transferable representation learning systems.