Mnemonic-Based Symbolic System

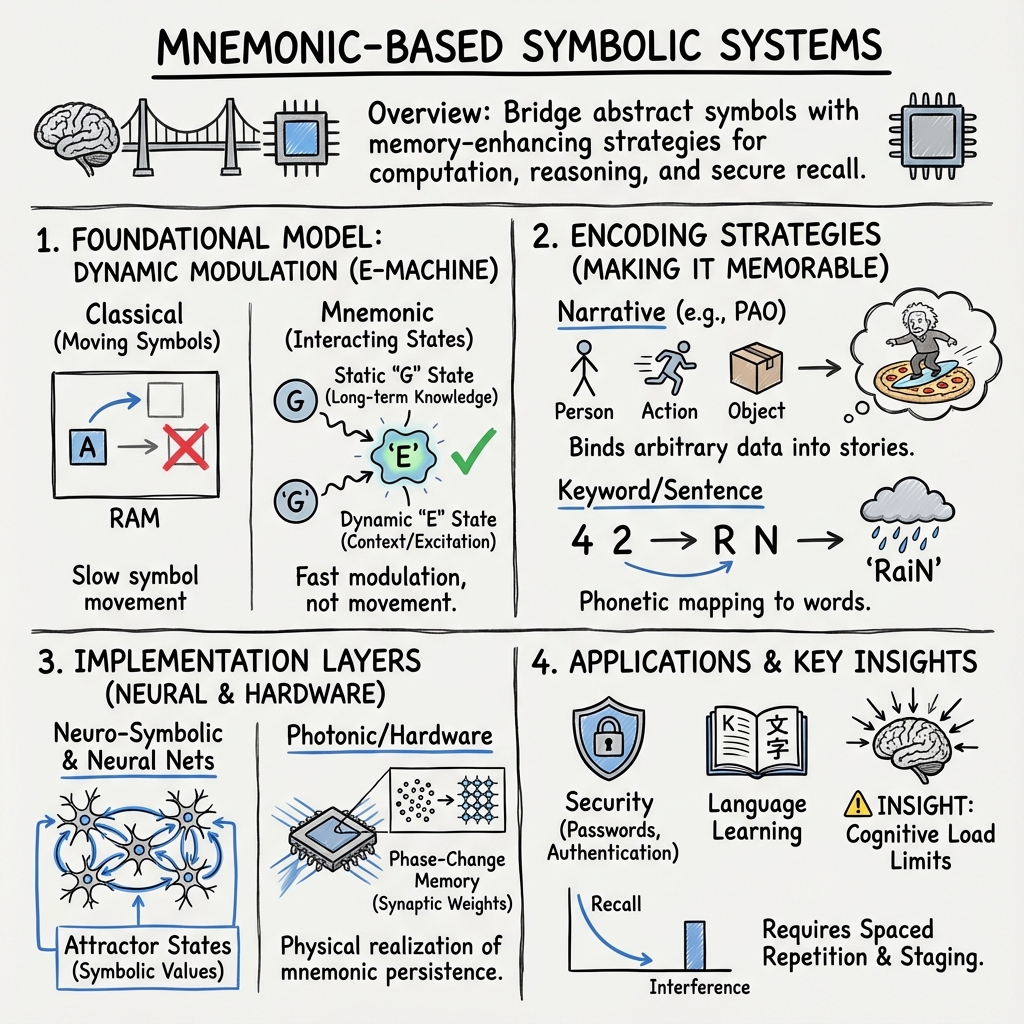

- A mnemonic-based symbolic system is a computational or cognitive architecture that uses structured mnemonic cues to encode, retrieve, and manipulate symbolic information.

- It combines cognitive principles with machine learning to support tasks such as secure authentication, language acquisition, and graphic design.

- The system employs dynamic memory states and rule-based mnemonic generation to enhance recall and enable context-sensitive symbolic computation.

A mnemonic-based symbolic system is a computational or cognitive architecture that leverages structured mnemonic associations—such as linguistic, visual, or semantic cues—to encode, manipulate, retrieve, and reason with symbols or complex information. These systems bridge abstract symbolic representations with memory-enhancing strategies, drawing on both cognitive principles and modern machine learning to support tasks ranging from secure authentication to language acquisition, graphic design, and neuro-symbolic computation.

1. Foundational Models and Theoretical Principles

Early formalizations of mnemonic-based symbolic systems center on the “E-machine” model, which provides a nonclassical theory of symbolic processing inspired by mechanisms in the human neocortex (0901.1152). In this formulation, knowledge is stored as a collection of static, long-term symbolic representations (G-states), while working memory and context-dependent computations are achieved by modulating the residual dynamic states (E-states) of these symbols—rather than through a conventional RAM buffer.

A Parametric E-machine (PEM) is defined by the following components:

- Two finite symbol sets, serving as the input and output alphabets

- G-states: stable long-term memory states

- E-states: dynamic, context-sensitive excitation states

- An interpretation (decision-making) function

- A procedure for updating the E-states

- An incremental learning function

The E-machine paradigm posits that dynamic labeling of static symbolic structures enables context-sensitive working memory, mental simulation of external storage, and flexible computation. This model departs from classical symbolic manipulation by emphasizing interaction and modulation over symbol movement.
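The interplay between static G-states and dynamic E-states can be illustrated with a toy sketch. The code below is not the formulation from (0901.1152): the class name `ToyEMachine`, the context-cue triples, the additive boost, and the decay constant are all assumptions chosen for illustration. It stores associations permanently and lets a decaying excitation trace decide which association wins when an input symbol is ambiguous.

```python
from dataclasses import dataclass, field


@dataclass
class ToyEMachine:
    """Toy illustration only: static associations plus decaying excitation."""

    decay: float = 0.5  # assumed fraction of excitation kept per step
    g_states: list = field(default_factory=list)  # (context_cue, input, output)
    e_states: list = field(default_factory=list)  # one excitation level per triple

    def learn(self, context_cue, x, y):
        """Incremental learning: append an association, never overwrite one."""
        self.g_states.append((context_cue, x, y))
        self.e_states.append(0.0)

    def step(self, x):
        """Interpret input x, then update the excitation states."""
        # Associations whose input matches x compete; excitation raises the score.
        scores = [
            (1.0 + e) if gx == x else 0.0
            for (_, gx, _), e in zip(self.g_states, self.e_states)
        ]
        best = max(range(len(scores)), key=scores.__getitem__, default=None)
        output = None
        if best is not None and scores[best] > 0.0:
            output = self.g_states[best][2]
        # Decay every E-state, then excite associations cued by the current input.
        self.e_states = [
            e * self.decay + (1.0 if cue == x else 0.0)
            for (cue, _, _), e in zip(self.g_states, self.e_states)
        ]
        return output


machine = ToyEMachine()
machine.learn("water", "bank", "riverbank")
machine.learn("money", "bank", "financial institution")
machine.step("money")        # no stored output, but primes the second association
print(machine.step("bank"))  # the primed association now wins the competition
```

Nothing is copied into a separate buffer here: the stored triples never change, and only the excitation levels carry the recent context, which is the point the E-machine paradigm makes about working memory.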

2. Mnemonic Encoding in Symbolic Systems

Mnemonic-based encoding is central to the functional power of these architectures. Two variants are well documented:

- Narrative and Story-Based Mnemonics: As in Person-Action-Object (PAO) frameworks, stories interleave memorable entities (people), actions, and objects with contextual cues (e.g., scenes) to embed secret information for secure, long-duration recall (Blocki et al., 2014). In these models, the mnemonic acts as the symbolic representation, effectively binding sensory and semantic features.

- Keyword and Sentence-Based Systems: Automatic encoding of numbers into sentences via phoneme-to-digit mappings (the major system) yields sequences of words or full phrases. Advanced models augment these with language modeling (n-gram, POS, chunked templates) to maximize both faithful encoding and memorability (Fiorentini et al., 2017). The symbolic string can always be deterministically decoded from the mnemonic.

Such mnemonic schemes are shown to improve not only short-term recall but also user comfort and effectiveness in remembering complex or arbitrary symbols, e.g., numeric passwords (Fiorentini et al., 2017, Blocki et al., 2014).
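The deterministic decoding property of major-system mnemonics can be sketched in a few lines. The real major system maps phonemes to digits; the version below works on spelling instead, which is a simplifying assumption, as are the function name `decode_major` and the small digraph table. It is meant only to show that the digit string falls out of the mnemonic mechanically, not to reproduce the encoder of Fiorentini et al.

```python
# Letter-based approximation of the major system (assumption: spelling stands
# in for pronunciation, so irregular words will decode incorrectly).
DIGRAPHS = {"sh": "6", "ch": "6", "ph": "8", "ck": "7"}
SINGLE = {
    "s": "0", "z": "0",
    "t": "1", "d": "1",
    "n": "2",
    "m": "3",
    "r": "4",
    "l": "5",
    "j": "6",
    "k": "7", "g": "7", "q": "7", "c": "7",
    "f": "8", "v": "8",
    "p": "9", "b": "9",
}


def decode_major(text: str) -> str:
    """Return the digit string encoded by the consonants of `text`."""
    text = text.lower()
    digits = []
    prev = ""
    i = 0
    while i < len(text):
        pair = text[i:i + 2]
        if pair in DIGRAPHS:
            digits.append(DIGRAPHS[pair])
            prev = ""
            i += 2
            continue
        ch = text[i]
        # Vowels, spaces, and h/w/y carry no digit; a doubled consonant
        # letter is treated as a single sound.
        if ch in SINGLE and ch != prev:
            digits.append(SINGLE[ch])
        prev = ch
        i += 1
    return "".join(digits)


print(decode_major("motor"))       # m-t-r
print(decode_major("a lazy dog"))  # l-z-d-g
```

Encoding runs the other way and is one-to-many: many words share a consonant skeleton, which is where language modeling is used to pick a memorable sentence.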

3. Rule-Based and Interpretable Mnemonic Generation

The drive for interpretability in mnemonic-based symbolic systems has led to explicit modeling of the mnemonic construction process, governed by latent, human-interpretable rules (Lee et al., 7 Jul 2025). For example, in kanji learning, the mnemonic generation framework posits latent trait variables for each learner–item pair, selectively activating top-of-mind strategies (transformation, imagery, logical cause-effect, etc.). The construction is modeled with an Expectation-Maximization (EM) algorithm:

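- E-step: For a given mnemonic, the likelihood of each candidate rule is estimated.

- M-step: Latent factors are updated via a 1-Parameter Logistic (1PL) IRT model, which in its standard form reads

$$P(y = 1 \mid \theta, b) = \sigma(\theta - b),$$

where $y = 1$ indicates that a rule is active, $\theta$ is the latent trait, $b$ is the rule's difficulty parameter, and $\sigma$ is the logistic sigmoid.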

This structure enables not only more systematic mnemonic generation (especially in cold-start scenarios) but also post-hoc analysis of which strategies are most effective or align best with individual learners (Lee et al., 7 Jul 2025).
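As a rough illustration of the M-step, the sketch below fits a single latent trait under a standard 1PL model by gradient ascent. It assumes the E-step has already produced soft rule responsibilities in [0, 1] and that rule difficulties are fixed; the function names, learning rate, and step count are hypothetical and do not come from Lee et al.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid."""
    return 1.0 / (1.0 + math.exp(-x))


def rule_probability(theta: float, difficulty: float) -> float:
    """Standard 1PL form: probability that a rule is active."""
    return sigmoid(theta - difficulty)


def update_theta(theta, responsibilities, difficulties, lr=0.5, steps=500):
    """Gradient ascent on the expected Bernoulli log-likelihood in theta.

    For each rule the gradient contribution is (responsibility - probability),
    so theta rises when rules are active more often than the model predicts.
    """
    n = len(difficulties)
    for _ in range(steps):
        grad = sum(
            r - rule_probability(theta, b)
            for r, b in zip(responsibilities, difficulties)
        )
        theta += lr * grad / n
    return theta


# Two rules of equal difficulty, each judged 90% likely in the E-step.
theta_hat = update_theta(0.0, [0.9, 0.9], [0.0, 0.0])
print(round(rule_probability(theta_hat, 0.0), 3))
```

Because the fitted trait and difficulties are plain scalars, they can be inspected after training, which is what makes the post-hoc analysis of strategies possible.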

4. Neural, Photonic, and Neuro-Symbolic Implementations

Mnemonic-based symbolic processing can be realized in neural and hybrid systems:

- Neural Network Realizations: Homogeneous neural networks with lateral excitatory and inhibitory circuits, as seen in the E-machine’s neural implementation, provide distributed, context-dependent modulation of symbolic recall (via winner-take-all layers and residual excitation) (0901.1152).

- Photonic Synaptic Elements: In hardware-accelerated systems, mnemonic functionality is embodied in devices such as GSST-based Mach–Zehnder modulators. Here, phase-change materials encode multi-level weights, acting as nonvolatile synaptic memories. The switching physics (Joule heating and crystallization) allows precise, robust, and energy-efficient manipulation of synaptic strength and, by extension, of physically instantiated mnemonic weights. Weight-setting via phase transition underpins mnemonic persistence and fast, parallel updates (Miscuglio et al., 2019).

- Neuro-Symbolic Systems: Recent neuro-symbolic models, such as Deep Symbolic Learning (DSL), demonstrate the learnability of internal, interpretable mnemonic symbols by jointly training perception and symbolic planning modules. DSL maps continuous perceptions to discrete symbols for further abstract manipulation, highlighting their mnemonic function as compressed representations for effective reasoning and memory (Daniele et al., 2022).

- Spiking Networks and Attractor-Based Registers: Prime attractors in feedback spiking neural networks serve as atomic, mnemonic symbolic values. Mechanisms for binding, unbinding, and transfer (registers, switch boxes) enable working memory operations, hash table functionality, and variable binding, reflecting emergent symbolic computation from neural substrates (Lizée, 2022).

5. Real-World Applications and System Design

Mnemonic-based symbolic systems are deployed across varied domains:

- Authentication and Security: Mnemonic encoding (e.g., PAO stories, major system sentences) supports secure, user-friendly storage of passwords, with robust memorability over months, especially when combined with spaced repetition. Empirical findings reveal an interference limit, guiding system design to stage mnemonic learning and minimize concurrent load (Blocki et al., 2014, Fiorentini et al., 2017).

- Language and Symbol Learning: Interpretable, rule-driven frameworks have proven effective in symbol-rich domains, such as kanji and cross-lingual vocabulary learning. Phonologically grounded mnemonics, as in the PhoniTale system, use explicit IPA-based transliteration, L1-based keyword matching, and cue generation via LLMs, enabling L2 learners to anchor unfamiliar words to native phonological templates, thereby enhancing both recall and learning efficiency (Kang et al., 7 Jul 2025).

- Graphic Design: Generation of concept-representative symbols leverages background knowledge, visual mnemonic cues, and computational creativity (deep learning, evolutionary optimization) to automatically produce memorable, metaphor-rich icons for applications such as logo and pictogram design (Cunha et al., 2017).

- Educational Technologies: Personalized mnemonic generators (e.g., SMART) incorporate student feedback—both expressed and observed—using hierarchical Bayesian models and Direct Preference Optimization (DPO) to align LLM outputs with mnemonics that demonstrably aid learning, offering scalable, preference-aligned aids for vocabulary acquisition (Balepur et al., 2024).

6. Limitations, Empirical Findings, and Practical Guidance

Studies consistently underscore several key limitations:

- Cognitive Load: There is a measurable interference effect when multiple mnemonic-encoded symbols are learned simultaneously, reducing recall rates. Optimal systems therefore stagger or scaffold mnemonic learning, limit initial load, and provide extra early rehearsals (Blocki et al., 2014).

- Feedback Types: Discrepancies exist between users’ expressed preferences for mnemonics and objectively observed recall effectiveness. Models that synthesize both signal types (via Bayesian modeling) achieve higher-quality alignment with learning outcomes (Balepur et al., 2024).

- Interpretability vs. Flexibility: Rule-based, compositional frameworks enable transparency and insight, supporting user adaptation and system personalization. However, they may lag behind purely neural approaches in coverage or natural language fluidity unless enhanced with hybrid approaches (Lee et al., 7 Jul 2025).
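The guidance on staggering and early rehearsal can be made concrete with a small scheduling sketch. The expanding-interval rule, the one-day first gap, the doubling factor, and the seven-day stagger are illustrative assumptions, not the schedules evaluated by Blocki et al.; the function names are hypothetical.

```python
def rehearsal_days(start_day=0.0, first_gap=1.0, growth=2.0, count=6):
    """Days on which one item is rehearsed, with gaps growing geometrically."""
    days = []
    day = start_day
    gap = first_gap
    for _ in range(count):
        day += gap
        days.append(day)
        gap *= growth
    return days


def staggered_plan(items, stagger_days=7.0, **schedule_kwargs):
    """Introduce items one at a time so their dense early rehearsals do not overlap."""
    return {
        item: rehearsal_days(start_day=index * stagger_days, **schedule_kwargs)
        for index, item in enumerate(items)
    }


print(rehearsal_days())
print(staggered_plan(["story A", "story B"], count=3))
```

Short early gaps place most rehearsals where forgetting is fastest, and the stagger keeps the number of newly introduced mnemonics low at any one time.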

7. Mathematical and Algorithmic Formulations

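Mnemonic-based symbolic systems rest on a small set of algorithmic and mathematical formulations, each tied to a component discussed in the earlier sections:

- Dynamic modulation and context-sensitive similarity, in which E-states reweight retrieval from static G-states (Section 1).

- Spaced repetition interval scheduling, which governs when mnemonic-encoded secrets are rehearsed (Sections 5 and 6).

- Rule activation in interpretable mnemonic generation, modeled with a 1-Parameter Logistic IRT model and the logistic sigmoid (Section 3).

- The Direct Preference Optimization loss for feedback alignment in LLMs (Section 5).

- Neural and photonic mnemonic implementation, including weight-setting via phase transition (Section 4).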
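Of the formulations listed, the Direct Preference Optimization loss has a widely used closed form, and a per-pair version is sketched below. The function name, argument names, and the default `beta` are illustrative choices; how SMART batches, weights, or combines this loss with its Bayesian feedback model is not shown and should not be inferred from this sketch.

```python
import math


def dpo_pair_loss(logp_chosen, logp_rejected,
                  ref_logp_chosen, ref_logp_rejected, beta=0.1):
    """DPO loss for one (preferred, dispreferred) mnemonic pair.

    Inputs are sequence log-probabilities under the policy being trained and
    under a frozen reference model. The loss is -log(sigmoid(margin)), where
    the margin is the scaled difference of the two log-ratio terms.
    """
    margin = beta * (
        (logp_chosen - ref_logp_chosen) - (logp_rejected - ref_logp_rejected)
    )
    # Numerically stable softplus(-margin), equal to -log(sigmoid(margin)).
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))


# A policy identical to the reference gives zero margin.
print(dpo_pair_loss(-1.0, -2.0, -1.0, -2.0))
# Shifting probability toward the preferred mnemonic lowers the loss.
print(dpo_pair_loss(-1.0, -3.0, -2.0, -2.0))
```

The loss depends only on how the policy's preference between the two mnemonics differs from the reference model's, so it can be computed from log-probabilities alone without training a separate reward model.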

These frameworks provide implementable architectures that unify symbolic reasoning, mnemonic encoding, neural and physical hardware, and adaptive feedback for robust, interpretable, and user-aligned symbolic systems.