BrowseComp: Agentic AI Web Benchmark

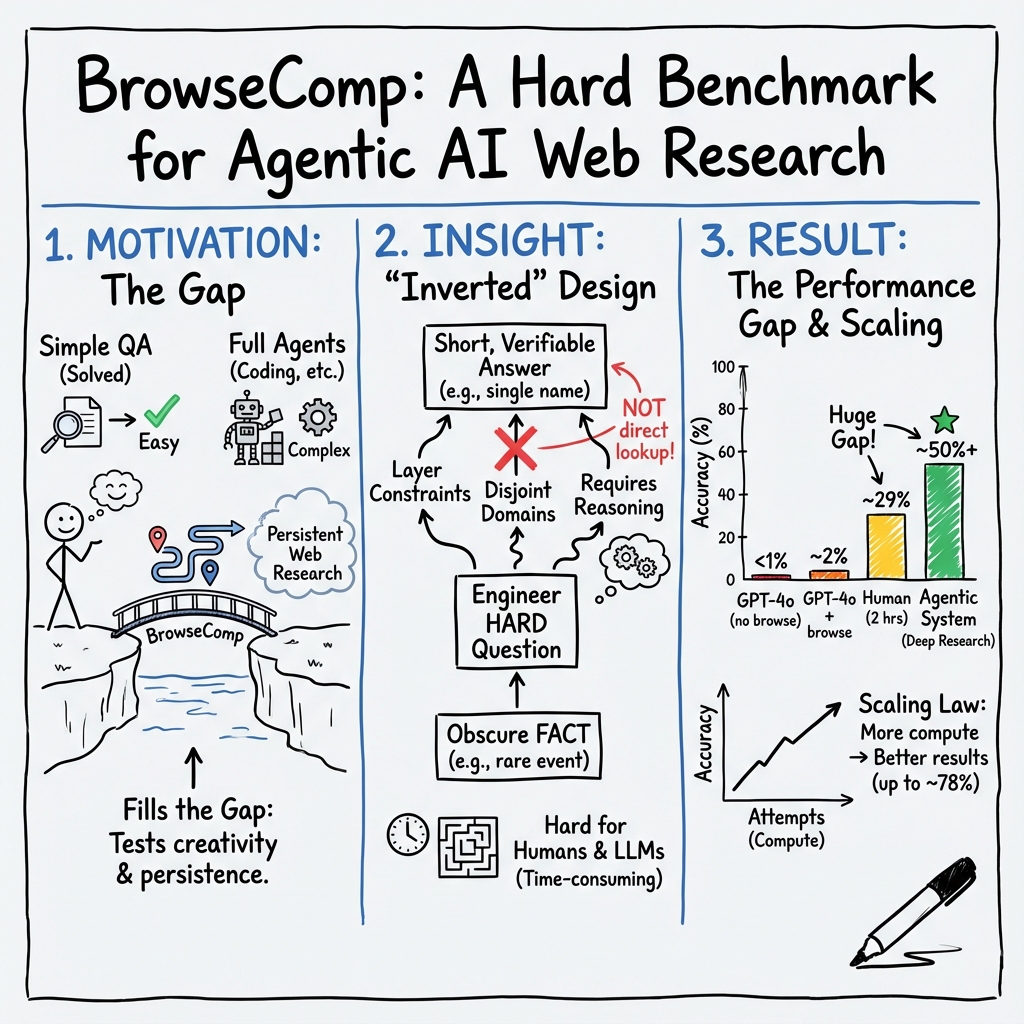

- BrowseComp is a benchmark that assesses agentic AI systems on persistent, creative web navigation and multi-hop reasoning using 1,266 curated, fact-based questions.

- It employs an inverted methodology by starting with rare facts and engineering questions that defy shallow retrieval, emphasizing iterative search reformulation and evidence synthesis.

- Empirical evaluations reveal stark performance differences among agents, highlighting the value of adaptive planning, tool integration, and scalable test-time compute for robust web research.

BrowseComp is a benchmark designed to rigorously assess the information-seeking, reasoning, and web navigation capabilities of agentic AI systems. It presents a collection of 1,266 curated questions requiring persistent, multi-step internet browsing to obtain short, verifiable factual answers. Conceived as a high-difficulty test for language agents, BrowseComp plays a role for browsing agents similar to the one programming competitions play for coding agents: it targets a specific, challenging slice of practical web research that rewards creativity, persistence, and reasoning over shallow retrieval.

1. Benchmark Construction and Objectives

BrowseComp was created to fill a gap in the evaluation of “browsing agents”: AI systems that must autonomously navigate the web, issue queries, reformulate their search strategies, and synthesize evidence to answer hard questions that cannot be resolved by direct keyword lookup or from the model's internal knowledge. The benchmark deliberately emphasizes the following:

- Persistence: Successful agents repeatedly reformulate their approach and persist through ambiguity or misleading evidence.

- Creativity: Many questions require indirect reasoning, hypothesis generation, and exploration across heterogeneous web domains.

- Multi-hop Reasoning: Questions are constructed so that directly searching for the answer (even with access to advanced retrieval-augmented models) is infeasible; multi-step reasoning and synthesis across sources are necessary.

- Short, Verifiable Answers: Each question demands a single-word/short-phrase answer, allowing straightforward, automated grading by semantic equivalence.

- Focus on Core Skills: By design, BrowseComp avoids evaluation of long-form question answering, user intent disambiguation, or generation of discursive explanations, enabling a clean focus on search and reasoning competence.

BrowseComp’s methodology is “inverted”: authors start from rare facts and engineer questions backward so that answers are challenging to uncover but easy to grade, avoiding the easily found answers and ambiguous grading common in open-domain QA collections.

2. Dataset Design and Content

Each BrowseComp question is crafted to be “hard-to-find”: answers are obscure, time-invariant, and well-supported by evidence scattered across the open web. The construction pipeline involves:

- Fact Selection: Identification of rare, objective facts (e.g., a specific event, name, or number).

- Constraint Synthesis: Multiple constraints from disjoint domains are layered onto the question (e.g., intersections of time period, affiliations, properties, or narrative context).

- Human and Machine Validation: Each question is independently checked to ensure that top-tier LLMs (such as GPT-4o with browsing enabled) cannot solve it and that an expert annotator cannot solve it within 10 minutes.

- Topical Breadth: Questions cover a wide diversity of domains, including TV/movies, science and technology, history, sports, art, music, and politics. Distribution includes, for instance, 16% TV/movies and 13% science/tech.
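The constraint-synthesis step can be illustrated as intersecting filters over a candidate pool: each constraint alone leaves several matches, while their conjunction should leave exactly one. The records and constraints below are hypothetical toy data, not BrowseComp content, and `satisfying` / `is_well_posed` are illustrative names rather than part of any benchmark tooling.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Entity:
    """Hypothetical toy record standing in for facts scattered across the web."""
    name: str
    year: int
    country: str
    award: bool


Constraint = Callable[[Entity], bool]


def satisfying(candidates: list[Entity], constraints: list[Constraint]) -> list[Entity]:
    """Return the candidates that satisfy every layered constraint."""
    return [e for e in candidates if all(c(e) for c in constraints)]


def is_well_posed(candidates: list[Entity], constraints: list[Constraint]) -> bool:
    """In this sketch, a question is well posed only if exactly one candidate survives."""
    return len(satisfying(candidates, constraints)) == 1


CORPUS = [
    Entity("A", 1998, "NZ", True),
    Entity("B", 1998, "NZ", False),
    Entity("C", 2004, "NZ", True),
    Entity("D", 1998, "CA", True),
]
CONSTRAINTS: list[Constraint] = [
    lambda e: 1995 <= e.year <= 2000,  # time-period constraint
    lambda e: e.country == "NZ",       # location/affiliation constraint
    lambda e: e.award,                 # property constraint
]
```

Checking a proposed answer only requires evaluating each predicate once, whereas finding it requires searching the whole pool; this asymmetry is what makes such questions hard to solve but easy to grade.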

Sample anonymized task examples include:

- Domain-constrained event queries (e.g., specific sports matches with layered constraints).

- Multi-faceted entity identification (e.g., characters with rare narrative attributes and tight episode constraints).

- Interlinked publication or discovery events requiring evidence from primary and secondary sources.

Answers are canonicalized to short unique strings, streamlining semantic equivalence checks.
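BrowseComp itself relies on an LLM grader to judge semantic equivalence, but a cheap deterministic normalization step can catch trivial formatting differences before a grader call. The sketch below is a hypothetical SQuAD-style pre-check (Unicode normalization, case folding, punctuation and article stripping); `normalize_answer` and `exact_match` are illustrative names, not part of the benchmark's tooling.

```python
import string
import unicodedata

_ARTICLES = {"a", "an", "the"}
# Map every ASCII punctuation character to a space.
_PUNCT_TO_SPACE = str.maketrans({p: " " for p in string.punctuation})


def normalize_answer(text: str) -> str:
    """Canonicalize a short answer for a cheap exact-match comparison."""
    text = unicodedata.normalize("NFKC", text).casefold()
    text = text.translate(_PUNCT_TO_SPACE)
    # Drop English articles and collapse runs of whitespace.
    tokens = [t for t in text.split() if t not in _ARTICLES]
    return " ".join(tokens)


def exact_match(predicted: str, reference: str) -> bool:
    """True when both answers normalize to the same string.

    A False result does not mean the answer is wrong; it would be passed
    on to an LLM grader for a semantic-equivalence judgment.
    """
    return normalize_answer(predicted) == normalize_answer(reference)
```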

3. Evaluation Protocol and Results

BrowseComp employs rigorous evaluation rooted in answer accuracy and calibration, with grading performed by an automated LLM-as-grader process:

- Accuracy: Proportion of questions for which the agent’s final answer matches the reference answer, normalized for synonymy and phrasing.

- Calibration error: Measures agreement between agent confidence and actual correctness, enabling assessment of agent reliability.

- Test-time Compute Scaling: Agents are evaluated over increasing numbers of independent search attempts per question (up to 64), and aggregation methods (e.g., best-of-N, majority, weighted voting) are compared.
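Given a grader verdict and the agent's self-reported confidence for each question, accuracy and calibration error can be computed as below. This sketch uses the common binned expected calibration error (ECE) with equal-width bins; the exact calibration formula and binning behind BrowseComp's reported numbers may differ, and `Graded` is a hypothetical record type.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Graded:
    confidence: float  # agent's self-reported confidence in [0, 1]
    correct: bool      # grader verdict against the reference answer


def accuracy(results: list[Graded]) -> float:
    """Fraction of questions judged correct."""
    return sum(r.correct for r in results) / len(results)


def expected_calibration_error(results: list[Graded], n_bins: int = 10) -> float:
    """Binned ECE: size-weighted mean of |bin accuracy - bin mean confidence|."""
    bins: list[list[Graded]] = [[] for _ in range(n_bins)]
    for r in results:
        # A confidence of exactly 1.0 goes into the last bin instead of overflowing.
        idx = min(int(r.confidence * n_bins), n_bins - 1)
        bins[idx].append(r)
    total = len(results)
    ece = 0.0
    for b in bins:
        if not b:
            continue
        bin_acc = sum(r.correct for r in b) / len(b)
        bin_conf = sum(r.confidence for r in b) / len(b)
        ece += (len(b) / total) * abs(bin_acc - bin_conf)
    return ece
```

An agent that reports high confidence on answers that are often wrong lands in high-confidence bins with low accuracy, which drives the error up even if its overall accuracy is unchanged.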

Empirical findings highlight the difficulty of BrowseComp:

- Human experts: 29% of questions solved within a two-hour limit per question; the remaining 71% were abandoned as unsolved

- Single-attempt agent accuracy—GPT-4o: 0.6%, GPT-4o+browsing: 1.9%, OpenAI o1: 9.9%

- Specialized agentic system (Deep Research): 51.5% single attempt, scaling to ~78% with best-of-N aggregation over multiple attempts.
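The aggregation strategies compared under test-time scaling can be sketched over a list of (answer, confidence) pairs from N independent attempts. Here best-of-N is assumed to keep the single attempt with the highest self-reported confidence, majority voting picks the most frequent answer, and weighted voting sums confidences per answer; answers are assumed to be already normalized, and the function names are illustrative.

```python
from collections import Counter, defaultdict

Attempt = tuple[str, float]  # (normalized answer, self-reported confidence)


def majority_vote(attempts: list[Attempt]) -> str:
    """Most frequent answer; ties go to the answer seen first."""
    counts = Counter(ans for ans, _ in attempts)
    return counts.most_common(1)[0][0]


def weighted_vote(attempts: list[Attempt]) -> str:
    """Answer with the largest total confidence across attempts."""
    scores: defaultdict[str, float] = defaultdict(float)
    for ans, conf in attempts:
        scores[ans] += conf
    return max(scores, key=scores.__getitem__)


def best_of_n(attempts: list[Attempt]) -> str:
    """Answer from the single most confident attempt."""
    return max(attempts, key=lambda a: a[1])[0]


ATTEMPTS: list[Attempt] = [
    ("Bergen", 0.3), ("Oslo", 0.5), ("Bergen", 0.3),
    ("Trondheim", 0.95), ("Oslo", 0.5), ("Bergen", 0.3),
]
```

On this toy set the three rules disagree (majority voting picks "Bergen", weighted voting "Oslo", best-of-N "Trondheim"), which is why the choice of aggregator matters; which one performs best on real attempts is an empirical question.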

Variants such as BrowseComp-ZH have been developed to test Chinese-language web browsing; they show a comparable level of difficulty, driven by the fragmentation of the Chinese web ecosystem and by cross-lingual constraints.

4. Comparison to Prior and Related Benchmarks

BrowseComp contrasts with earlier information-seeking and QA benchmarks in several critical aspects:

| Feature | BrowseComp | Prior QA Benchmarks (TriviaQA, HotpotQA, FEVER, ELI5, etc.) |
|---|---|---|
| Answer Findability | Rare, obscure | Common, easily found |
| Multi-step Search | Required | Usually not required |
| Reasoning Depth | High | Usually low–medium |
| Evaluation Style | Short, factual | Medium–long form, sometimes subjective |
| Persistence/Creativity | Essential | Often not exercised |
| Tool Use Emphasis | Yes (browsing agents) | No |
| Answer Verification | Easy | Sometimes ambiguous |
| Human Difficulty | Not solvable quickly | Solvable |

Benchmarks such as Search Arena and ScholarSearch have since extended evaluation to multi-turn dialog, real-world functional search, and academic literature retrieval, often exposing gaps in tool use and agentic competence that single-turn or static datasets do not capture.

5. Agentic AI Approaches and Performance

BrowseComp is engineered to expose the difference between conventional pre-trained LLMs, retrieval-augmented chatbots, and fully agentic systems:

- Prompted LLMs and RAG: Models relying solely on static knowledge or shallow retrieval achieve <10% accuracy.

- Agentic Architectures: Systems employing iterative planning, autonomous tool invocation, dynamic search reformulation, memory, and subgoal tracking (e.g., OAgents, WebSailor, WebDancer, Deep Research) secure dramatic performance gains (Deep Research: 51.5% pass@1).

- Scaling Law: Accuracy rises smoothly with test-time compute (total number of attempts/search chains per instance), with gains roughly linear in the logarithm of compute rather than in compute itself, supporting a “test-time scaling law” for agentic research.
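The loop these agentic architectures share (decompose the question into sub-goals, search, reformulate failed queries, and keep a memory of surviving candidates) can be illustrated with a deliberately simplified sketch. It assumes a hypothetical `search` tool that returns candidate names for a query and a toy in-memory index; real systems such as those listed above use LLM-driven planning and far richer tools, and this is not the implementation of any of them.

```python
from typing import Callable, Optional

# Hypothetical tool: maps a query string to the set of candidate names it surfaces.
Search = Callable[[str], set[str]]


def multi_hop_search(
    subgoals: list[list[str]], search: Search, budget: int = 20
) -> Optional[str]:
    """Resolve a question decomposed into sub-goals.

    Each inner list holds alternative phrasings (reformulations) of one
    constraint. The running candidate set acts as memory: it is intersected
    with each sub-goal's results, and a phrasing that would empty it is
    discarded in favor of the next reformulation.
    """
    candidates: Optional[set[str]] = None
    calls = 0
    for phrasings in subgoals:
        for query in phrasings:
            if calls >= budget:
                return None  # search budget exhausted
            calls += 1
            found = search(query)
            narrowed = found if candidates is None else candidates & found
            if narrowed:
                candidates = narrowed
                break  # sub-goal satisfied; move to the next constraint
        else:
            return None  # every reformulation of this sub-goal failed
        if len(candidates) == 1:
            return next(iter(candidates))
    return None  # constraints exhausted without a unique answer


# Hypothetical toy index standing in for web search results.
TOY_INDEX = {
    "debut novel published 1990s": {"A", "B", "C"},
    "author born in a port city": set(),
    "author raised on the coast": {"B", "C", "D"},
    "shortlisted for regional prize": {"C", "E"},
}
SUBGOALS = [
    ["debut novel published 1990s"],
    ["author born in a port city", "author raised on the coast"],
    ["shortlisted for regional prize"],
]


def toy_search(query: str) -> set[str]:
    return TOY_INDEX.get(query, set())
```

With the full budget the sketch recovers "C" after four tool calls, one of them a reformulation; a budget of three calls runs out before the last constraint is checked, a small-scale analogue of why more test-time compute helps.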

Recent developments introduce strategies for uncertainty reduction, group-based RL optimization, adaptive query generation, and reward shaping for answer verifiability, each reported to improve agent performance on BrowseComp.
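One common form of group-based RL optimization is the group-relative advantage used in GRPO (Group Relative Policy Optimization): sample several rollouts per question, score each with a verifiable reward, and standardize the rewards within the group. The sketch below assumes a binary case-insensitive exact-match reward and the population standard deviation; the specific systems mentioned above may use different group-based objectives, and the function names are illustrative.

```python
import statistics


def verifiable_reward(predicted: str, reference: str) -> float:
    """Binary reward: 1.0 if the short answer matches the reference (case-insensitive)."""
    return 1.0 if predicted.strip().casefold() == reference.strip().casefold() else 0.0


def group_relative_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """Standardize each rollout's reward against its group (GRPO-style).

    Rollouts that beat the group mean get positive advantages; if every
    rollout earns the same reward, all advantages are zero (no learning signal).
    """
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]
```

Because short, verifiable answers make the reward a simple comparison, no learned reward model is needed in this setup; the group statistics then turn sparse 0/1 rewards into a relative signal.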

6. Impact, Limitations, and Future Directions

BrowseComp has catalyzed advances in research on agentic AI, tool-using reasoning models, and practical browsing agents by:

- Providing a stringent, reproducible test of real-world information-seeking that remains far from saturated, unlike many earlier benchmarks.

- Incentivizing innovation in autonomous planning, search diversification, memory, and tool-use integration.

- Highlighting persistent gaps between agentic, retrieval-augmented, and parametric-only LLMs.

Limitations include:

- Lack of representation for real-world user query distributions (questions are inverted rather than sampled from logs).

- Incomplete coverage of real-world agentic behaviors, such as long-form answer synthesis or multimedia reasoning.

- Potential for alternative, undiscovered valid answers, although robust question vetting mitigates this risk.

Future work proposed in the literature includes:

- Expanding BrowseComp to multi-modal questions (images, tables), ambiguous queries, and authentic user-initiated tasks.

- Integrating dynamic feedback, self-reflective calibration, and human-in-the-loop oversight for deployment-ready agents.

- Cross-lingual and culturally grounded versions (e.g., BrowseComp-ZH), supporting global research and localization.

7. Summary Table: BrowseComp in Context

| Dimension | BrowseComp | Search Arena / ScholarSearch |
|---|---|---|
| Core Task | Multi-step, creative browsing | General search, multi-turn dialog, academic IR |
| Evaluation Metric | Short-answer accuracy, calibration error | Human preference, citation, source lineage |
| Difficulty | Very high; pretrained LLMs without browsing score below 10% | High, varies by task |
| Agentic Skill Tested | Persistence, reasoning, tool use | Groundedness, synthesis, user trust |
| Benchmarked System Types | Browsing agents, agentic LLMs | Retrieval-augmented/chat LLMs, agentic systems |
| Human Solvability | 29% solved by experts within 2 hours; 71% unsolved | 50–90% (depending on task) |

BrowseComp has become a canonical reference for evaluating advanced web agentic systems, stimulating the development of persistent, creative, multi-tool AI researchers and establishing a rigorous foundation for comparative study in agentic AI.