Waver: Wave Your Way to Lifelike Video Generation (2508.15761v2)

Abstract: We present Waver, a high-performance foundation model for unified image and video generation. Waver can directly generate videos with durations ranging from 5 to 10 seconds at a native resolution of 720p, which are subsequently upscaled to 1080p. The model simultaneously supports text-to-video (T2V), image-to-video (I2V), and text-to-image (T2I) generation within a single, integrated framework. We introduce a Hybrid Stream DiT architecture to enhance modality alignment and accelerate training convergence. To ensure training data quality, we establish a comprehensive data curation pipeline and manually annotate and train an MLLM-based video quality model to filter for the highest-quality samples. Furthermore, we provide detailed training and inference recipes to facilitate the generation of high-quality videos. Building on these contributions, Waver excels at capturing complex motion, achieving superior motion amplitude and temporal consistency in video synthesis. Notably, it ranks among the Top 3 on both the T2V and I2V leaderboards at Artificial Analysis (data as of 2025-07-30 10:00 GMT+8), consistently outperforming existing open-source models and matching or surpassing state-of-the-art commercial solutions. We hope this technical report will help the community more efficiently train high-quality video generation models and accelerate progress in video generation technologies. Official page: https://github.com/FoundationVision/Waver.


Summary

- The paper introduces a unified high-fidelity video generation framework utilizing a rectified flow Transformer backbone and hybrid stream design.

- It employs a two-stage cascade refinement pipeline to upscale videos to 1080p, enhancing motion realism and visual quality.

- Empirical analysis shows competitive performance on T2V/I2V benchmarks, with robust motion fidelity and prompt adherence.

Waver: Unified High-Fidelity Video Generation via Hybrid Flow Transformers

Overview and Motivation

The Waver model introduces a unified framework for high-resolution video and image generation, supporting text-to-video (T2V), image-to-video (I2V), and text-to-image (T2I) tasks within a single architecture. The work addresses persistent challenges in video synthesis: suboptimal motion realism, limited aesthetic quality, inefficient multi-task modeling, and opaque data curation and training procedures. Waver leverages a rectified flow Transformer backbone, a hybrid stream architecture for modality alignment, and a two-stage cascade refinement pipeline to efficiently generate 1080p videos with strong motion fidelity and visual clarity.
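Rectified flow trains the network to predict a constant velocity along a straight path between clean data and noise. The NumPy sketch below shows one training target and a plain Euler sampler, assuming the common convention x_t = (1 − t)·x0 + t·ε with t = 0 as data and t = 1 as noise, and velocity target ε − x0. Waver's exact time convention, loss weighting and sampler are not given here, and `velocity_model` is a hypothetical stand-in for the DiT.

```python
import numpy as np

def rectified_flow_loss(velocity_model, x0, t, rng):
    """Mean-squared velocity loss for one batch (assumed convention: t=0 data, t=1 noise).

    x0: clean latents, shape (B, ...); t: timesteps in [0, 1], shape (B,).
    """
    noise = rng.standard_normal(x0.shape)
    t_b = t.reshape((-1,) + (1,) * (x0.ndim - 1))   # broadcast t over non-batch dims
    x_t = (1.0 - t_b) * x0 + t_b * noise             # straight-line interpolation
    target = noise - x0                              # constant velocity dx_t/dt
    pred = velocity_model(x_t, t)
    return np.mean((pred - target) ** 2)

def euler_sample(velocity_model, shape, steps, rng):
    """Integrate the learned ODE from noise (t=1) to data (t=0) with fixed Euler steps."""
    x = rng.standard_normal(shape)
    ts = np.linspace(1.0, 0.0, steps + 1)
    for t_cur, t_next in zip(ts[:-1], ts[1:]):
        v = velocity_model(x, np.full(shape[0], t_cur))
        x = x + (t_next - t_cur) * v                 # dt is negative: moving toward data
    return x
```

For a dataset containing only the zero vector, the ideal velocity is x_t / t, and the sampler then lands exactly on zero; this is a quick sanity check rather than a realistic model.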

Model Architecture

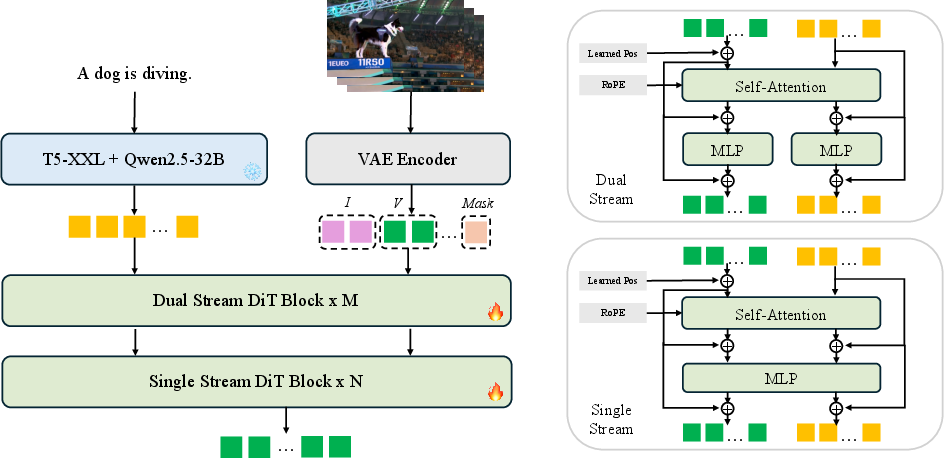

Task-Unified DiT and Hybrid Stream Design

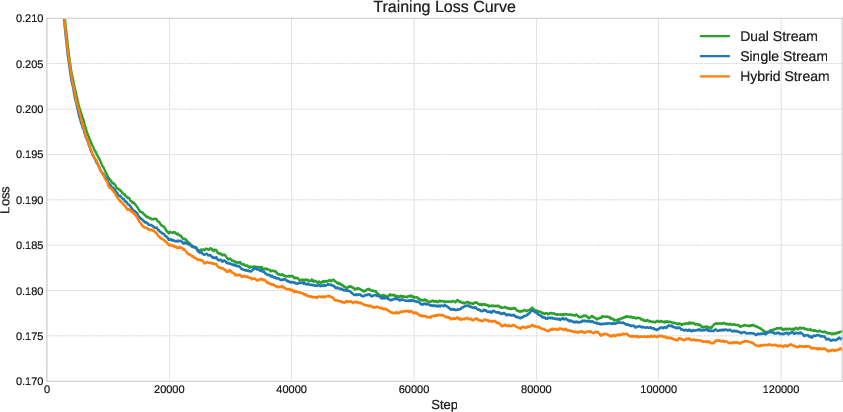

Waver's core is the Task-Unified DiT, built on rectified flow Transformers. It uses a flexible input tensor formulation that concatenates noisy latents, conditional frames, and binary masks, so a single model supports T2I, T2V, and I2V tasks. The hybrid stream architecture combines Dual Stream blocks (separate parameters for text and video tokens, which interact only in joint self-attention) with Single Stream blocks (parameters shared by both modalities), trading off modality alignment against parameter efficiency. Empirically, this hybrid design converges faster than either a pure Dual Stream or a pure Single Stream architecture.
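The input formulation above can be sketched as a channel-wise concatenation in which the mask marks which frames are given as conditions. This is a minimal sketch under assumptions not stated in the summary: latents laid out as (C, T, H, W), concatenation along the channel axis, and I2V conditioning on the first frame only. `build_dit_input` is a hypothetical helper name.

```python
import numpy as np

def build_dit_input(noisy_latent, task, first_frame_latent=None):
    """Assemble a DiT input for T2I, T2V or I2V (hypothetical layout).

    noisy_latent: (C, T, H, W) noisy latent (T == 1 for T2I).
    first_frame_latent: (C, H, W) clean latent of the conditioning image, I2V only.
    Returns an array of shape (2C + 1, T, H, W): [noisy | conditional frames | mask].
    """
    c, t, h, w = noisy_latent.shape
    cond = np.zeros_like(noisy_latent)                         # zeros = no conditional frame
    mask = np.zeros((1, t, h, w), dtype=noisy_latent.dtype)    # 1 marks a given frame
    if task == "i2v":
        if first_frame_latent is None:
            raise ValueError("I2V needs a first-frame latent")
        cond[:, 0] = first_frame_latent
        mask[:, 0] = 1.0
    elif task == "t2i":
        if t != 1:
            raise ValueError("T2I expects a single-frame latent")
    elif task != "t2v":
        raise ValueError(f"unknown task: {task}")
    return np.concatenate([noisy_latent, cond, mask], axis=0)
```

Because every task produces the same tensor shape, one network can be trained on a mixture of T2I, T2V and I2V batches without task-specific input layers.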

Figure 1: Architecture of Task-Unified DiT, illustrating the hybrid stream design for unified multi-modal generation.

Figure 2: Hybrid Stream structure achieves faster loss convergence than Dual or Single Stream alone.
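The difference between the two block types can be shown with single-head attention and no norms or MLPs. In this sketch, a Dual Stream block projects text and video tokens with separate weights and lets them meet only in joint attention, while a Single Stream block applies one shared set of weights to the concatenated sequence. Running all Dual Stream blocks before the Single Stream blocks is an assumption, and all function names are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def joint_attention(q, k, v):
    """Full self-attention over the concatenated text + video sequence."""
    return softmax(q @ k.T / np.sqrt(q.shape[-1])) @ v

def dual_stream_block(text, video, w_text, w_video):
    """Modality-specific q/k/v/o weights; modalities interact only inside attention."""
    n_text = text.shape[0]
    q = np.concatenate([text @ w_text["q"], video @ w_video["q"]])
    k = np.concatenate([text @ w_text["k"], video @ w_video["k"]])
    v = np.concatenate([text @ w_text["v"], video @ w_video["v"]])
    out = joint_attention(q, k, v)
    # Split back and apply modality-specific output projections with residuals.
    return text + out[:n_text] @ w_text["o"], video + out[n_text:] @ w_video["o"]

def single_stream_block(tokens, w):
    """One set of weights shared by every token of the concatenated sequence."""
    out = joint_attention(tokens @ w["q"], tokens @ w["k"], tokens @ w["v"])
    return tokens + out @ w["o"]

def hybrid_forward(text, video, dual_weights, single_weights):
    """Dual Stream blocks first, then Single Stream blocks; returns video tokens."""
    for w_text, w_video in dual_weights:
        text, video = dual_stream_block(text, video, w_text, w_video)
    tokens = np.concatenate([text, video])
    for w in single_weights:
        tokens = single_stream_block(tokens, w)
    return tokens[text.shape[0]:]
```

When the text and video weights of a Dual Stream block are identical, it reduces exactly to a Single Stream block, which makes the parameter trade-off explicit: Dual Stream roughly doubles the attention parameters in exchange for modality-specific projections.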

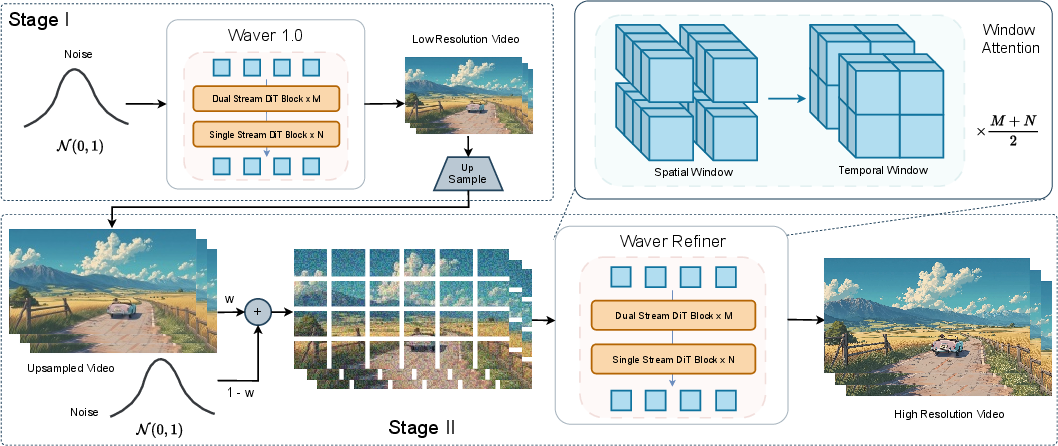

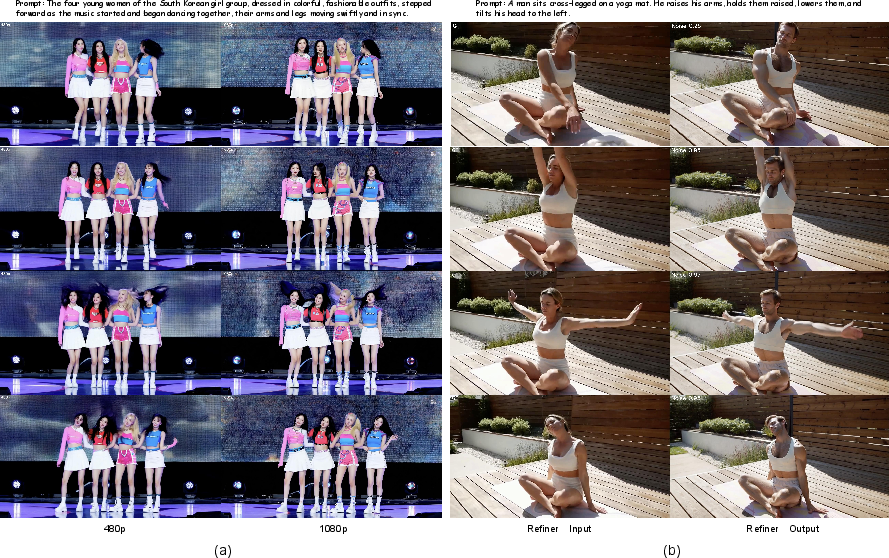

Cascade Refiner for Efficient Super-Resolution

To avoid the computational cost of generating 1080p video directly, Waver uses a two-stage pipeline: initial synthesis at 720p, followed by upscaling with the Cascade Refiner. The refiner uses window attention to restrict computation to local spatio-temporal windows, alternating between spatial and temporal window schemes, and keeps full attention in the shallow and deep layers to preserve fidelity. Training applies pixel-space and latent-space degradations to simulate realistic low-quality inputs and uses flow matching to learn the mapping to high-resolution outputs. When a high noise level is applied to its input, the refiner can also perform video editing.
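Window attention can be sketched by partitioning the (T, H, W) token grid into non-overlapping windows and running attention independently inside each one. The window shapes in the usage note are hypothetical; the summary does not give Waver's actual spatial and temporal window sizes, and q/k/v projections are omitted for brevity.

```python
import numpy as np

def window_partition(x, win):
    """Split a (T, H, W, D) token grid into windows of size win = (wt, wh, ww).

    Window sizes must divide (T, H, W). Returns (num_windows, wt*wh*ww, D).
    """
    t, h, w, d = x.shape
    wt, wh, ww = win
    x = x.reshape(t // wt, wt, h // wh, wh, w // ww, ww, d)
    x = x.transpose(0, 2, 4, 1, 3, 5, 6)   # window indices first, in-window offsets last
    return x.reshape(-1, wt * wh * ww, d)

def window_reverse(windows, win, shape):
    """Inverse of window_partition."""
    t, h, w, d = shape
    wt, wh, ww = win
    x = windows.reshape(t // wt, h // wh, w // ww, wt, wh, ww, d)
    x = x.transpose(0, 3, 1, 4, 2, 5, 6)
    return x.reshape(t, h, w, d)

def window_self_attention(x, win):
    """Self-attention restricted to each local window (identity projections)."""
    windows = window_partition(x, win)                               # (N, L, D)
    scores = windows @ windows.transpose(0, 2, 1) / np.sqrt(x.shape[-1])
    scores -= scores.max(axis=-1, keepdims=True)
    attn = np.exp(scores)
    attn /= attn.sum(axis=-1, keepdims=True)
    return window_reverse(attn @ windows, win, x.shape)
```

Alternating layers could, for example, use a spatially elongated window such as (1, 8, 8) and a temporally elongated one such as (8, 2, 2), so information still propagates across the whole clip over several layers. Attention cost scales with the window length rather than the full sequence length, and a window covering the entire grid recovers full attention.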

Figure 3: Pipeline of Cascade Refiner, showing hierarchical video upscaling and artifact correction.

Figure 4: Refiner output: (a) upscaling and artifact correction; (b) video editing via latent manipulation.

Text Encoder and Representation Alignment

Waver utilizes a dual text encoder (flan-t5-xxl and Qwen2.5-32B-Instruct) to enhance prompt following, especially in T2I tasks. Representation alignment is enforced by constraining intermediate DiT features to match high-level semantic features extracted by Qwen2.5-VL, using cosine similarity loss during 480p training. This alignment yields improved semantic structure and prompt adherence in generated videos.
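The alignment objective resembles REPA-style training: intermediate DiT tokens pass through a small projection head and are pulled toward the semantic features with a negative cosine-similarity loss. This is a sketch under assumptions: the two feature sets are taken to be already matched token-for-token, and the SiLU MLP head `mlp_projector` is hypothetical. How the term is weighted against the flow-matching loss is not specified here.

```python
import numpy as np

def mlp_projector(w1, w2):
    """Hypothetical 2-layer MLP head (SiLU) mapping DiT width to the semantic width."""
    def project(h):
        z = h @ w1
        return (z / (1.0 + np.exp(-z))) @ w2
    return project

def representation_alignment_loss(dit_features, semantic_features, project):
    """Negative mean per-token cosine similarity.

    dit_features: (N, D_dit); semantic_features: (N, D_sem), assumed token-aligned.
    """
    eps = 1e-8
    a = project(dit_features)
    a = a / (np.linalg.norm(a, axis=-1, keepdims=True) + eps)
    b = semantic_features / (np.linalg.norm(semantic_features, axis=-1, keepdims=True) + eps)
    return -np.mean(np.sum(a * b, axis=-1))   # -1 = perfectly aligned, +1 = opposite
```

In training, this term would be added to the main loss with a tunable weight, and the projection head would be discarded at inference.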

Figure 5: Dual text encoder setup improves prompt following in T2I generation.

Figure 6: Representation alignment enhances semantic quality in generated videos.

Data Curation and Training Pipeline

Hierarchical Data Filtering and Semantic Balancing

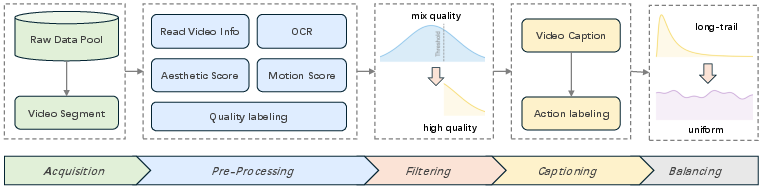

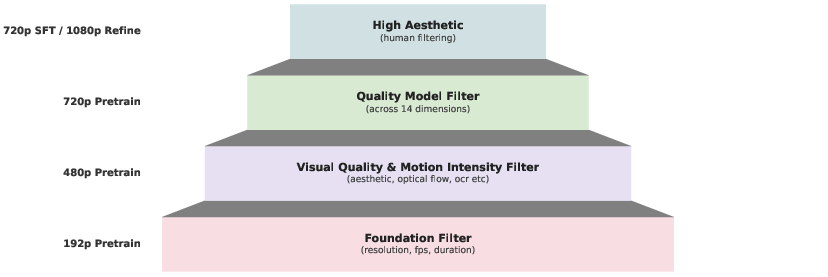

Waver's data pipeline integrates multi-source acquisition, scene segmentation, quality scoring (aesthetics, motion, watermark detection), and manual annotation. A hierarchical filtering funnel progressively refines the dataset, with increasingly strict criteria at each stage (192p, 480p, 720p, 1080p). Semantic balancing is achieved via multi-level action label classification and oversampling of underrepresented categories, supplemented by synthetic data for aesthetic enhancement.
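A filtering funnel and label-based oversampling can be sketched as follows. Every threshold, score range and field name here is a hypothetical placeholder; the sketch only shows the structure: each stage filters the previous stage's survivors with stricter criteria, a motion range drops both static and excessively moving clips, and inverse-frequency weights give every action label equal total sampling mass.

```python
from collections import Counter
from dataclasses import dataclass

@dataclass
class Clip:
    clip_id: str
    aesthetic: float       # hypothetical quality scores in [0, 1]
    motion: float
    has_watermark: bool
    action_label: str

# Hypothetical thresholds; each stage is at least as strict as the previous one.
STAGE_THRESHOLDS = {
    "192p": {"aesthetic": 0.3, "motion": (0.05, 0.95)},
    "480p": {"aesthetic": 0.5, "motion": (0.10, 0.90)},
    "720p": {"aesthetic": 0.6, "motion": (0.15, 0.85)},
    "1080p": {"aesthetic": 0.7, "motion": (0.20, 0.80)},
}

def filter_funnel(clips):
    """Return the surviving clips per stage; each stage filters the previous output."""
    survivors, kept = {}, list(clips)
    for stage, th in STAGE_THRESHOLDS.items():
        lo, hi = th["motion"]
        kept = [c for c in kept
                if not c.has_watermark and c.aesthetic >= th["aesthetic"] and lo <= c.motion <= hi]
        survivors[stage] = kept
    return survivors

def balanced_sampling_weights(clips):
    """Inverse-frequency weights so underrepresented action labels are oversampled."""
    counts = Counter(c.action_label for c in clips)
    weights = [1.0 / counts[c.action_label] for c in clips]
    total = sum(weights)
    return [w / total for w in weights]
```

The weights can be passed to `random.choices(clips, weights=...)` to draw a training stream in which each action label appears equally often.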

Figure 7: Data processing pipeline: acquisition, filtering, captioning, and balancing.

Figure 8: Hierarchical data filtering funnel for progressive dataset refinement.

Caption Model and Action Temporal Alignment

Captions are generated and refined using Qwen2.5-VL, with detailed annotation of actions, subjects, backgrounds, and temporal intervals. This enables precise alignment between video segments and action descriptions, improving motion fidelity and instruction following.
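Time-stamped action annotations make it possible to build a caption for any sub-clip that mentions only the actions occurring inside it. The schema and prompt wording below are hypothetical; the summary does not specify Waver's caption format.

```python
from dataclasses import dataclass, field

@dataclass
class ActionSpan:
    description: str
    start_s: float   # start time in seconds
    end_s: float     # end time in seconds

@dataclass
class StructuredCaption:
    subjects: list[str]
    background: str
    actions: list[ActionSpan] = field(default_factory=list)

    def actions_in(self, clip_start, clip_end):
        """Actions overlapping [clip_start, clip_end), with times shifted to clip-relative."""
        out = []
        for a in self.actions:
            s, e = max(a.start_s, clip_start), min(a.end_s, clip_end)
            if s < e:
                out.append(ActionSpan(a.description, s - clip_start, e - clip_start))
        return out

    def to_prompt(self, clip_start, clip_end):
        """Flatten the structured caption into a time-aligned text prompt."""
        parts = [", ".join(self.subjects) + ".", self.background + "."]
        for a in self.actions_in(clip_start, clip_end):
            parts.append(f"From {a.start_s:.1f}s to {a.end_s:.1f}s, {a.description}.")
        return " ".join(parts)
```

Cutting a training clip from 2 s to 5 s of a video whose actions span 0 to 3 s and 3 to 6 s then yields a prompt describing both actions with clip-relative intervals.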

Synthetic Data and Aesthetics Optimization

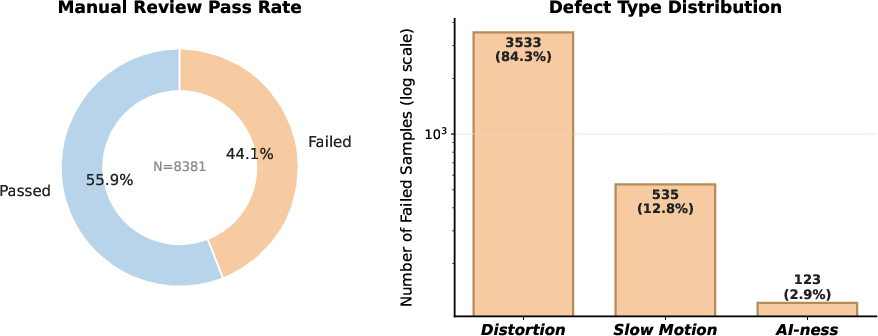

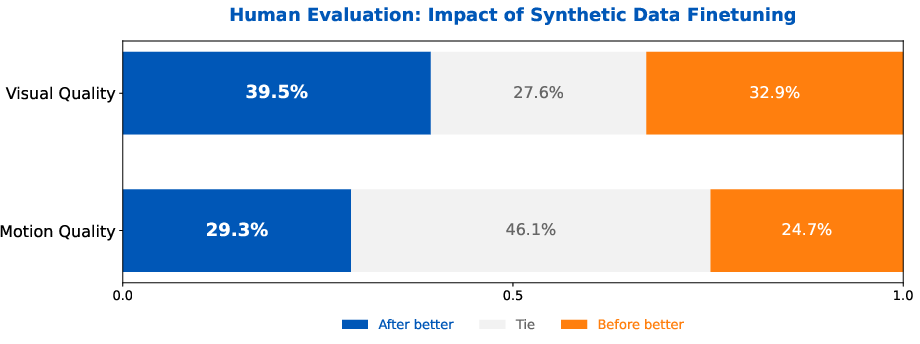

Synthetic video samples, generated via I2V from high-quality images, are manually reviewed for distortions and "AI-ness." Finetuning on the curated synthetic data substantially improves visual quality (a 39.5% win rate for the finetuned model versus 32.9% for the baseline) while preserving motion quality.

Figure 9: Synthetic data exhibits superior aesthetics compared to typical real-world video.

Figure 10: Manual review statistics for synthetic video samples; distortion is the primary failure mode.

Figure 11: High-aesthetic finetuning improves color, clarity, and overall visual quality.

Training and Inference Strategies

Multi-Stage Curriculum and Motion Optimization

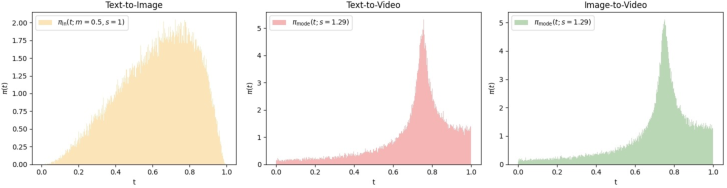

Training proceeds through staged resolution increases (192p, 480p, 720p, 1080p), with task mixing and joint T2V/I2V training to prevent motion collapse. Low-resolution pretraining (192p) is critical for learning large-scale motion, and mode-based timestep sampling further enhances motion amplitude.
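The two timestep distributions can be sketched with the forms popularized by the Stable Diffusion 3 report: logit-normal sampling passes a Gaussian through a sigmoid, while mode sampling warps a uniform variable so that the density keeps non-vanishing mass near both endpoints. The scale value 1.29 and the default logit-normal parameters are assumptions; Waver's actual settings are not given in this summary.

```python
import numpy as np

def logit_normal_timesteps(n, rng, mean=0.0, std=1.0):
    """t = sigmoid(u), u ~ N(mean, std); density vanishes at t = 0 and t = 1."""
    u = rng.normal(mean, std, size=n)
    return 1.0 / (1.0 + np.exp(-u))

def mode_timesteps(n, rng, scale=1.29):
    """Mode sampling: t = 1 - u - scale * (cos^2(pi*u/2) - 1 + u), u ~ U(0, 1).

    For scale in [-1, 2/(pi - 2)] the map is monotone and stays within [0, 1];
    positive scale concentrates samples around t = 0.5 while keeping tail mass.
    """
    u = rng.uniform(0.0, 1.0, size=n)
    return 1.0 - u - scale * (np.cos(np.pi * u / 2.0) ** 2 - 1.0 + u)
```

Both samplers are symmetric around t = 0.5 with these defaults; shifting `mean` or changing `scale` moves training emphasis toward noisier or cleaner timesteps.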

Figure 12: 192p pretraining enables larger motion in subsequent 480p T2V results.

Figure 13: Mode sampling produces more intense motion than logit-normal sampling.

Figure 14: Timestep sampling distributions for T2I (logit-normal) and T2V/I2V (mode).

Figure 15: Joint T2V/I2V training increases motion amplitude in I2V outputs.

Figure 16: Foreground motion score used for filtering static and excessive-motion videos.

Model Balancing and Prompt Engineering

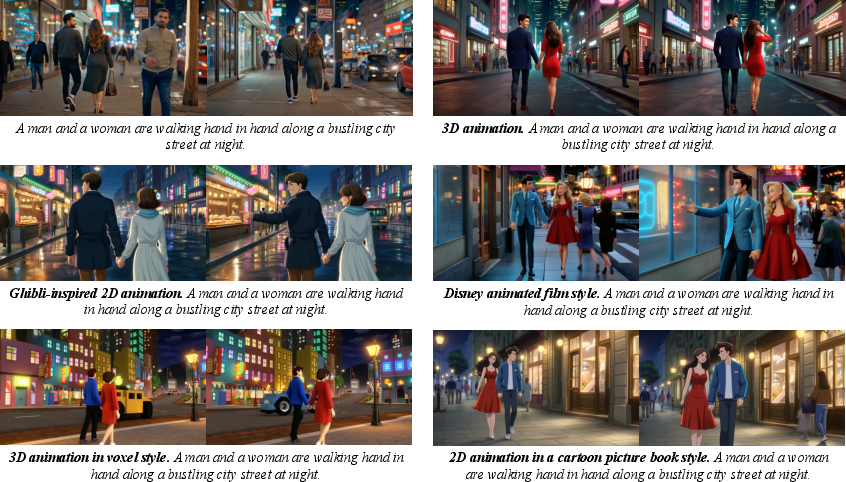

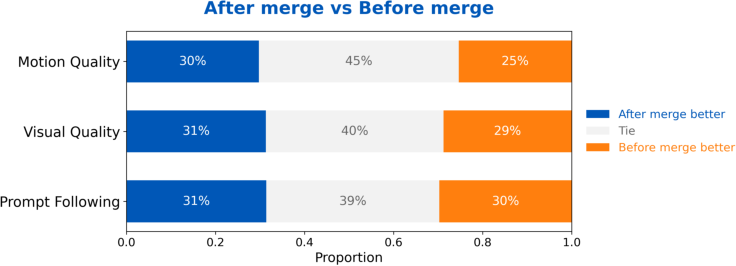

Prompt tags distinguish style and quality categories, enabling style-consistent generation. Video APG (Adaptive Projected Guidance) reduces artifacts and improves realism compared with standard classifier-free guidance (CFG). Model averaging across checkpoints and training stages further improves motion, visual quality, and prompt following.
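APG modifies CFG by splitting the guidance update (conditional minus unconditional prediction) into a component parallel to the conditional prediction and an orthogonal one, then down-weighting the parallel part that tends to cause oversaturation. This sketch follows that general recipe but omits the momentum term; the per-frame reduction axes for video are a hypothetical choice, not a confirmed detail of Waver's Video APG.

```python
import numpy as np

def apg_guidance(pred_cond, pred_uncond, scale, eta=0.0, norm_threshold=None, axes=None):
    """Projected guidance: keep the orthogonal part of the update, scale the parallel part by eta.

    axes: dimensions over which norms and dot products are taken (None = whole tensor);
    e.g. axes=(1, 2, 3) for a (T, C, H, W) video projects each frame separately.
    """
    diff = pred_cond - pred_uncond
    if norm_threshold is not None:   # optional rescaling of large updates
        norm = np.sqrt(np.sum(diff ** 2, axis=axes, keepdims=True))
        diff = diff * np.minimum(1.0, norm_threshold / (norm + 1e-12))
    unit = pred_cond / (np.sqrt(np.sum(pred_cond ** 2, axis=axes, keepdims=True)) + 1e-12)
    parallel = np.sum(diff * unit, axis=axes, keepdims=True) * unit
    orthogonal = diff - parallel
    return pred_cond + (scale - 1.0) * (orthogonal + eta * parallel)

def cfg_guidance(pred_cond, pred_uncond, scale):
    """Standard classifier-free guidance, for comparison."""
    return pred_uncond + scale * (pred_cond - pred_uncond)
```

With `eta=1` and no threshold, the function reduces exactly to CFG; with `eta=0`, the guidance step is orthogonal to the conditional prediction, so the update no longer pushes its magnitude up.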

Figure 17: Prompt tagging enables generation of diverse video styles.

Figure 18: Video APG achieves greater realism and fewer artifacts than standard CFG.

Figure 19: Model merging improves motion, visual quality, and prompt following.
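Model averaging can be sketched as a parameter-wise weighted mean over checkpoints that share the same parameter names and shapes. Which checkpoints Waver merges, and with what weights, is not specified here; uniform weights are only a default in this sketch.

```python
import numpy as np

def average_checkpoints(state_dicts, weights=None):
    """Parameter-wise weighted average of checkpoints with identical keys and shapes."""
    if not state_dicts:
        raise ValueError("need at least one checkpoint")
    if weights is None:
        weights = [1.0 / len(state_dicts)] * len(state_dicts)
    if len(weights) != len(state_dicts):
        raise ValueError("need one weight per checkpoint")
    keys = state_dicts[0].keys()
    for sd in state_dicts[1:]:
        if sd.keys() != keys:
            raise ValueError("checkpoints have different parameter names")
    total = sum(weights)
    return {k: sum(w * sd[k] for w, sd in zip(weights, state_dicts)) / total for k in keys}
```

The same function covers both averaging nearby checkpoints of one run and merging models from different training stages; only the choice of inputs and weights differs.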

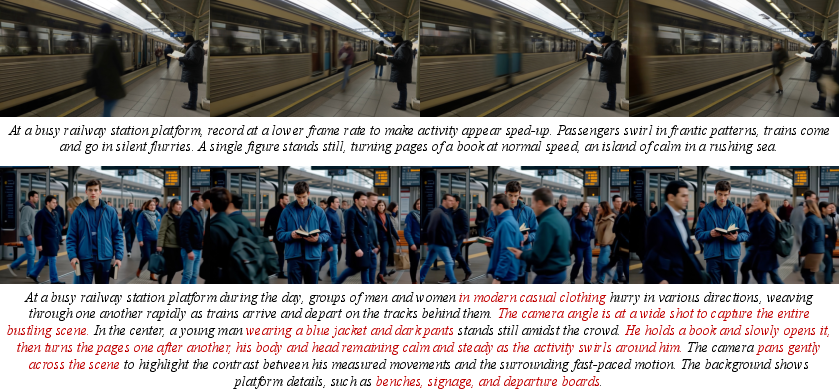

Prompt rewriting via LLMs (e.g., GPT-4.1) aligns user inputs with training caption distributions, enhancing generation quality.

Figure 20: Prompt rewriting increases visual richness and aesthetic quality in generated videos.

Infrastructure and Scaling

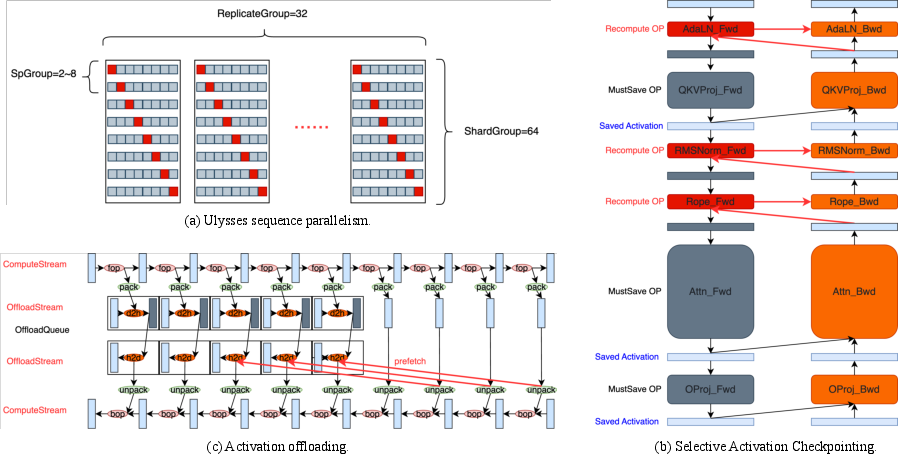

Waver combines hybrid sharded FSDP, Ulysses sequence parallelism, bucketed dataloaders, selective activation checkpointing, and activation offloading to train efficiently on extremely long sequences and high-resolution videos. The model is compiled with torch.compile for operator fusion, and MFU (Model FLOPs Utilization) is reported for each training stage.
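Ulysses sequence parallelism shards the sequence across devices, then uses an all-to-all exchange so each device holds the full sequence for a subset of attention heads, computes ordinary attention locally, and swaps back. The single-process simulation below uses Python lists as stand-in ranks to show the data movement; a real implementation would use a collective such as `torch.distributed.all_to_all`. The sketch assumes the sequence length and head count are divisible by the world size.

```python
import numpy as np

def attention(q, k, v):
    """Per-head softmax attention; q, k, v have shape (S, H, d)."""
    scores = np.einsum("shd,thd->hst", q, k) / np.sqrt(q.shape[-1])
    scores -= scores.max(axis=-1, keepdims=True)
    probs = np.exp(scores)
    probs /= probs.sum(axis=-1, keepdims=True)
    return np.einsum("hst,thd->shd", probs, v)

def seq_to_head_all_to_all(shards, world):
    """Rank r receives the full sequence for head group r; shards[j] is (S/world, H, d)."""
    hg = shards[0].shape[1] // world
    return [np.concatenate([s[:, r * hg:(r + 1) * hg] for s in shards], axis=0)
            for r in range(world)]

def head_to_seq_all_to_all(head_groups, world):
    """Inverse exchange: rank j gets back its sequence chunk with all heads."""
    sc = head_groups[0].shape[0] // world
    return [np.concatenate([g[j * sc:(j + 1) * sc] for g in head_groups], axis=1)
            for j in range(world)]

def ulysses_attention(q_shards, k_shards, v_shards, world):
    """All-to-all, local full-sequence attention per simulated rank, all-to-all back."""
    qh = seq_to_head_all_to_all(q_shards, world)
    kh = seq_to_head_all_to_all(k_shards, world)
    vh = seq_to_head_all_to_all(v_shards, world)
    outs = [attention(q, k, v) for q, k, v in zip(qh, kh, vh)]
    return head_to_seq_all_to_all(outs, world)
```

Concatenating the returned shards reproduces single-device attention exactly, while each rank only ever stores its own sequence chunk outside the attention call.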

Figure 21: Infrastructure optimizations for memory and compute efficiency in long-sequence video training.

Benchmarking and Evaluation

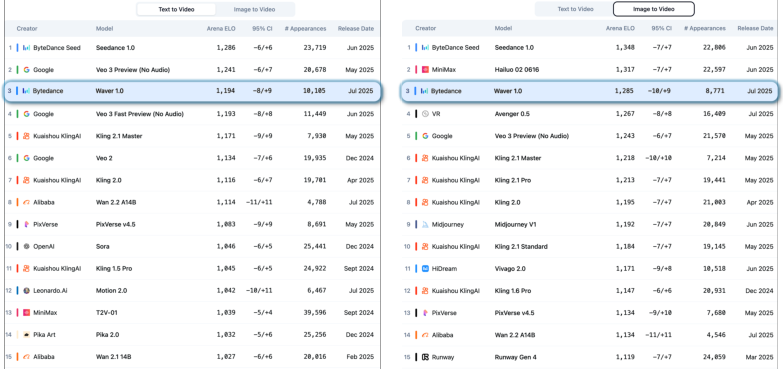

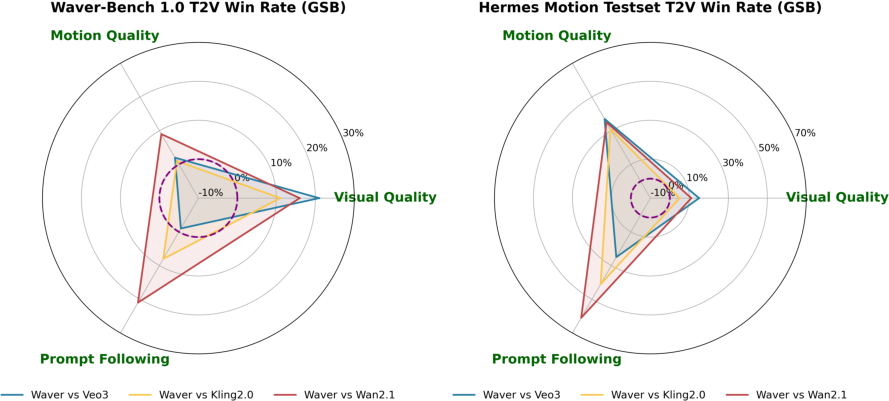

Waver ranks third on both the T2V and I2V leaderboards at Artificial Analysis (as of 2025-07-30), outperforming open-source models and matching commercial solutions. Human evaluation on Waver-bench 1.0 and the Hermes Motion Testset shows strong performance in motion quality, visual quality, and prompt following, with the largest advantages in complex motion scenarios.

Figure 22: Waver ranks third on both T2V and I2V leaderboards at Artificial Analysis.

Figure 23: Human evaluation win rates on Waver-bench 1.0 and Hermes Motion Testset; Waver excels in complex motion scenarios.
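Pairwise human evaluations are commonly summarized as the share of wins for each model plus ties. The helper below shows that bookkeeping; the label names are hypothetical, and the exact aggregation used for Waver-bench 1.0 and the Hermes Motion Testset is not described in this summary.

```python
from collections import Counter

def pairwise_win_rates(judgments):
    """Summarize pairwise judgments between model A and model B.

    judgments: iterable of "A", "B" or "tie", one entry per comparison.
    Returns the shares of A wins, B wins and ties (they sum to 1).
    """
    counts = Counter(judgments)
    unknown = set(counts) - {"A", "B", "tie"}
    if unknown:
        raise ValueError(f"unexpected labels: {unknown}")
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no judgments")
    return {k: counts[k] / total for k in ("A", "B", "tie")}
```

Reporting ties separately matters: two win rates that do not sum to 100% still leave the remainder as comparisons where raters saw no difference.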

Empirical Analysis and Ablations

DiT Attention Sparsity

Analysis of attention maps reveals heterogeneous head patterns, layer-wise sparsity dynamics, and timestep-wise evolution, motivating adaptive sparse attention mechanisms over fixed window approaches.
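One simple way to quantify head-level sparsity is the fraction of keys a query needs to cover most of its attention mass. The metric and the 95% target below are hypothetical illustrations rather than the report's analysis method; computing it per head, per layer and per timestep would expose the kind of heterogeneity that motivates adaptive sparse attention.

```python
import numpy as np

def mass_coverage_fraction(attn, mass=0.95):
    """Fraction of keys needed to cover `mass` of each query's attention, averaged per head.

    attn: (H, Q, K) attention probabilities (rows sum to 1). Lower = sparser head.
    """
    k = attn.shape[-1]
    sorted_p = np.sort(attn, axis=-1)[..., ::-1]          # descending per query
    cumulative = np.cumsum(sorted_p, axis=-1)
    needed = (cumulative < mass).sum(axis=-1) + 1          # keys to reach the target mass
    needed = np.minimum(needed, k)                         # guard against rounding
    return needed.mean(axis=-1) / k                        # shape (H,)
```

A head that spreads attention uniformly scores 1.0, while a head that attends to a single key scores 1/K, so a fixed window size cannot suit both.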

VAE Loss Balancing

Grainy textures and background distortion are sensitive to the VAE's KL loss weight: excessive KL regularization collapses the latent space, while insufficient regularization leads to mode collapse. The LPIPS loss weight must also be tuned carefully to avoid grid-like artifacts.
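The two weights discussed above enter the VAE objective as coefficients on the KL and perceptual terms. This sketch assumes an L1 reconstruction term, a diagonal-Gaussian posterior and a hypothetical `perceptual_fn` standing in for an LPIPS network; the default weights are placeholders, not values from the report, and adversarial losses are omitted.

```python
import numpy as np

def diag_gaussian_kl(mu, logvar):
    """KL(N(mu, exp(logvar)) || N(0, I)), summed over latent dims, averaged over the batch."""
    kl = 0.5 * (mu ** 2 + np.exp(logvar) - 1.0 - logvar)
    return kl.reshape(kl.shape[0], -1).sum(axis=1).mean()

def vae_loss(x, x_rec, mu, logvar, perceptual_fn, kl_weight=1e-6, lpips_weight=0.1):
    """L1 reconstruction + weighted KL + weighted perceptual term (placeholder weights)."""
    rec = np.mean(np.abs(x - x_rec))
    return rec + kl_weight * diag_gaussian_kl(mu, logvar) + lpips_weight * perceptual_fn(x, x_rec)
```

Raising `kl_weight` pulls the posterior toward the prior and shrinks the information the latents carry; lowering it lets latent statistics drift, which makes the latent space harder for the diffusion model to fit.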

Caption Model Impact

Detailed captions and explicit spatial relation QA pairs improve instruction following and reduce motion distortion, highlighting the importance of high-quality annotation for video generation.

Implications and Future Directions

Waver demonstrates that unified multi-task modeling, hybrid stream architectures, and hierarchical data curation are effective for high-fidelity video synthesis. The work provides practical recipes for scaling video generation, balancing motion and aesthetics, and optimizing infrastructure for extreme sequence lengths. Future research should focus on adaptive sparse attention for video, higher-compression multimodal VAEs, and reinforcement learning for artifact mitigation and detail enhancement.

Conclusion

Waver establishes a robust framework for unified, high-resolution video generation, achieving strong empirical results in motion realism, visual quality, and prompt adherence. The model's architecture, data pipeline, and training strategies offer a comprehensive blueprint for future video foundation models. Limitations remain in handling fine-grained human details and expressiveness in high-motion scenes, suggesting avenues for further research in RL-based optimization and multimodal representation learning.


