Deep Think with Confidence (2508.15260v1)

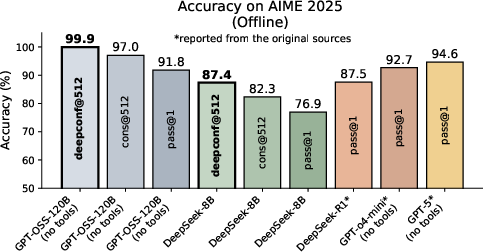

Abstract: LLMs have shown great potential in reasoning tasks through test-time scaling methods like self-consistency with majority voting. However, this approach often leads to diminishing returns in accuracy and high computational overhead. To address these challenges, we introduce Deep Think with Confidence (DeepConf), a simple yet powerful method that enhances both reasoning efficiency and performance at test time. DeepConf leverages model-internal confidence signals to dynamically filter out low-quality reasoning traces during or after generation. It requires no additional model training or hyperparameter tuning and can be seamlessly integrated into existing serving frameworks. We evaluate DeepConf across a variety of reasoning tasks and the latest open-source models, including Qwen 3 and GPT-OSS series. Notably, on challenging benchmarks such as AIME 2025, DeepConf@512 achieves up to 99.9% accuracy and reduces generated tokens by up to 84.7% compared to full parallel thinking.

Summary

- The paper presents DeepConf, a method that uses internal confidence metrics like token entropy to filter out low-quality reasoning traces.

- DeepConf provides both offline and online algorithms, reaching up to 99.9% accuracy on AIME 2025 and reducing generated tokens by up to 84.7% compared to full parallel thinking.

- The approach is model-agnostic and requires minimal modifications, facilitating efficient and scalable ensemble reasoning in LLM deployments.

Deep Think with Confidence: Confidence-Guided Reasoning for Efficient LLM Inference

Introduction

The paper "Deep Think with Confidence" introduces DeepConf, a test-time method for enhancing both the accuracy and efficiency of LLMs on complex reasoning tasks. DeepConf leverages model-internal confidence signals to filter low-quality reasoning traces, either during (online) or after (offline) generation, without requiring additional training or hyperparameter tuning. The method is evaluated on challenging mathematical and STEM benchmarks (AIME24/25, BRUMO25, HMMT25, GPQA-Diamond) using state-of-the-art open-source models (DeepSeek-8B, Qwen3-8B/32B, GPT-OSS-20B/120B). DeepConf demonstrates substantial improvements in accuracy and computational efficiency compared to standard majority voting and self-consistency approaches.

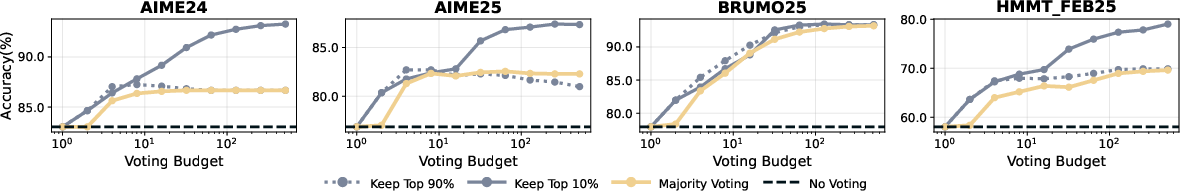

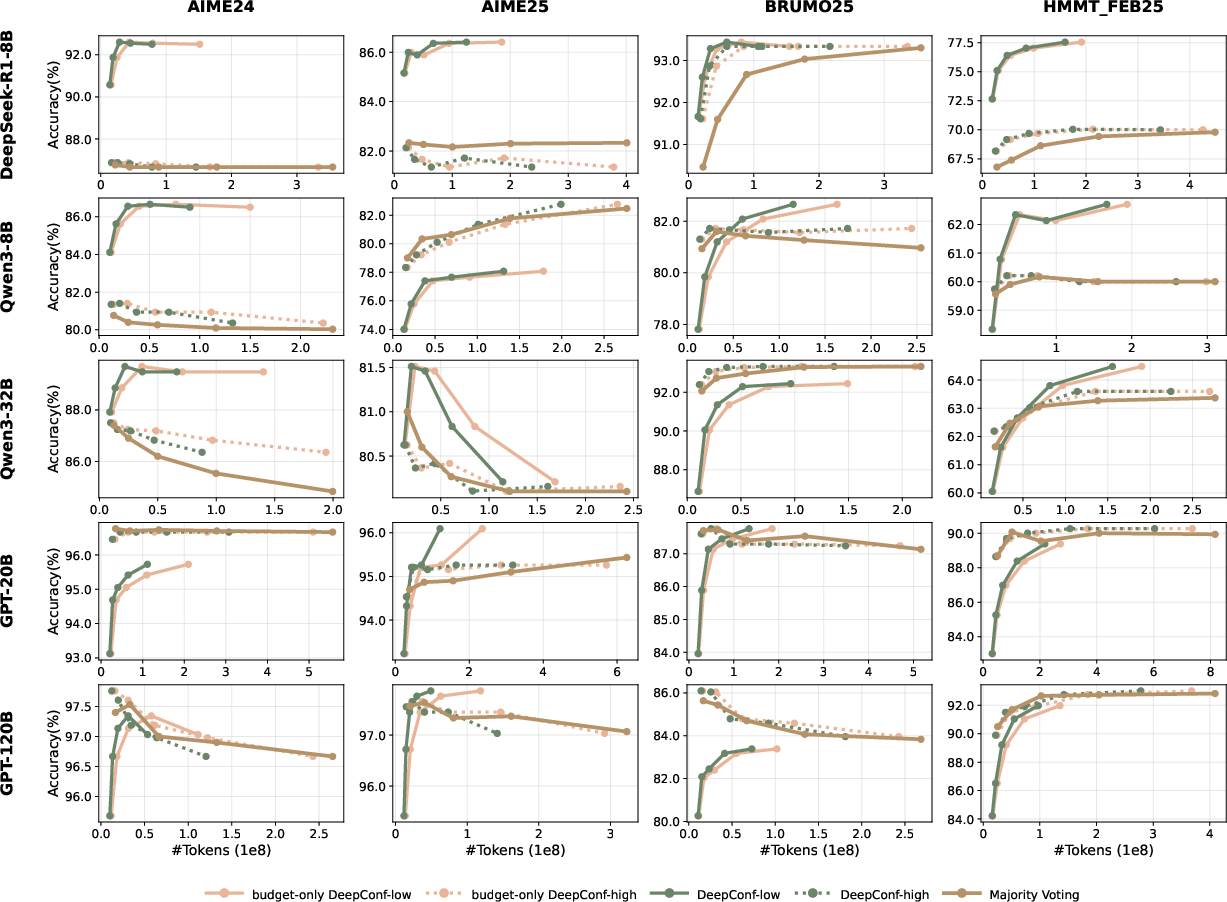

Figure 1: DeepConf on AIME 2025 (top) and parallel thinking using DeepConf (bottom).

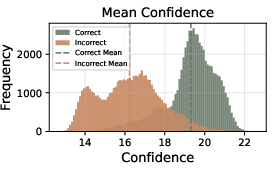

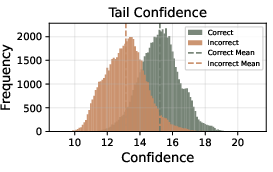

Confidence Metrics for Reasoning Trace Quality

DeepConf builds on the observation that model-internal token distribution statistics (entropy, log-probabilities) provide reliable signals for reasoning trace quality. The paper formalizes several confidence metrics:

- Token Entropy: $H_i = -\sum_j P_i(j) \log P_i(j)$, where $P_i(j)$ is the probability of token $j$ at position $i$.

- Token Confidence: $C_i = -\frac{1}{k} \sum_{j=1}^{k} \log P_i(j)$, averaging log-probabilities over the top-$k$ tokens.

- Average Trace Confidence: $C_{\text{avg}} = \frac{1}{N} \sum_{i=1}^{N} C_i$, global mean confidence over a trace.

- Group Confidence: Local average over sliding windows of $n$ tokens, $C_{G_i} = \frac{1}{|G_i|} \sum_{t \in G_i} C_t$.

- Bottom 10% Group Confidence: Mean of the lowest 10% group confidences in a trace.

- Lowest Group Confidence: Minimum group confidence in a trace.

- Tail Confidence: Mean confidence over the final m tokens (e.g., last 2048 tokens).
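The metrics listed above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the function names are hypothetical, and the handling of partial windows at the start of a trace (averaging over however many tokens are available) is an assumption made here for simplicity.

```python
import math
from typing import List, Sequence


def token_confidence(top_logprobs: Sequence[float]) -> float:
    """C_i: negative mean log-probability of the top-k candidate tokens."""
    return -sum(top_logprobs) / len(top_logprobs)


def average_trace_confidence(token_confs: Sequence[float]) -> float:
    """C_avg: global mean of the token confidences in a trace."""
    return sum(token_confs) / len(token_confs)


def group_confidences(token_confs: Sequence[float], window: int) -> List[float]:
    """Sliding-window mean ending at each position.

    Assumption: windows at the start of the trace are shorter than `window`
    and are averaged over the tokens seen so far.
    """
    groups = []
    running = 0.0
    for i, conf in enumerate(token_confs):
        running += conf
        if i >= window:
            running -= token_confs[i - window]  # drop the token leaving the window
        groups.append(running / min(i + 1, window))
    return groups


def bottom_percent_group_confidence(groups: Sequence[float], fraction: float = 0.10) -> float:
    """Mean of the lowest `fraction` of group confidences (at least one group)."""
    k = max(1, int(len(groups) * fraction))
    return sum(sorted(groups)[:k]) / k


def lowest_group_confidence(groups: Sequence[float]) -> float:
    """Minimum group confidence in the trace."""
    return min(groups)


def tail_confidence(token_confs: Sequence[float], m: int) -> float:
    """Mean confidence over the final m tokens (m >= 1)."""
    tail = token_confs[-m:]
    return sum(tail) / len(tail)


# Toy trace with four tokens; real traces have thousands of tokens.
confs = [4.0, 2.0, 6.0, 8.0]
groups = group_confidences(confs, window=2)
print(groups, lowest_group_confidence(groups), tail_confidence(confs, m=2))
```

Note that a high $C_i$ corresponds to a peaked distribution: when one token dominates, the remaining top-$k$ candidates have very negative log-probabilities, which raises the negated mean.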

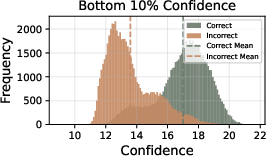

Empirical analysis shows that local metrics (bottom 10%, lowest group, tail) better separate correct and incorrect traces than global averages, especially for long chains of thought.

Figure 2: Confidence distributions for correct vs. incorrect reasoning traces across different metrics (HMMT25, 4096 traces per problem).

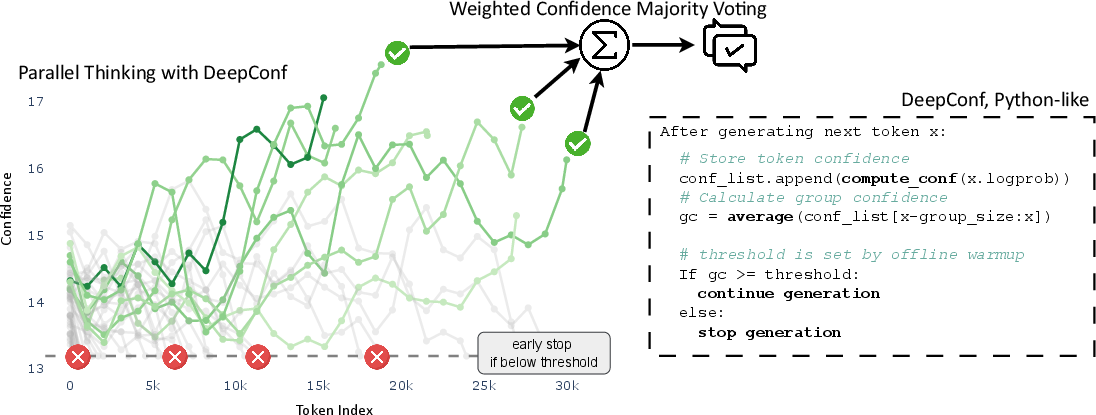

Offline and Online DeepConf Algorithms

Offline DeepConf

In the offline setting, all reasoning traces are generated before aggregation. DeepConf applies confidence-weighted majority voting, optionally filtering to retain only the top-η% most confident traces. This approach prioritizes high-confidence traces, reducing the impact of low-quality or erroneous reasoning.

Algorithmic steps:

- Generate N traces for a prompt.

- Compute trace-level confidence using a chosen metric.

- Retain top-η% traces by confidence.

- Aggregate answers via confidence-weighted majority voting.
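The four steps above can be sketched as follows. This is an illustrative sketch under stated assumptions: each trace is reduced to a hypothetical `(answer, confidence)` pair, the confidence value is whichever trace-level metric was chosen, and each retained trace votes with a weight equal to that confidence. The function name and the rounding rule for how many traces to keep are choices made here, not taken from the paper.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def deepconf_offline_vote(traces: List[Tuple[str, float]], keep_fraction: float = 0.10) -> str:
    """Keep the most confident traces, then take a confidence-weighted vote.

    traces: (final answer, trace-level confidence) pairs.
    keep_fraction: share of traces to retain, e.g. 0.10 for top-10% filtering.
    """
    if not traces:
        raise ValueError("at least one trace is required")

    # Rank by confidence, highest first, and retain the top share (at least one).
    ranked = sorted(traces, key=lambda t: t[1], reverse=True)
    n_keep = max(1, round(len(ranked) * keep_fraction))

    # Each retained trace votes for its answer with weight equal to its confidence.
    weights: Dict[str, float] = defaultdict(float)
    for answer, confidence in ranked[:n_keep]:
        weights[answer] += confidence

    return max(weights, key=weights.get)


# Toy example: five traces with made-up answers and confidences.
toy_traces = [("42", 18.0), ("42", 17.5), ("17", 19.0), ("17", 9.0), ("8", 8.5)]
print(deepconf_offline_vote(toy_traces, keep_fraction=1.0))  # no filtering, weighted vote only
print(deepconf_offline_vote(toy_traces, keep_fraction=0.4))  # keep only the two most confident
```

In this toy data the two settings disagree: with no filtering the answer backed by two moderately confident traces wins, while keeping only the top two traces lets a single very confident trace decide the outcome. That is the mechanism by which aggressive filtering can help or, occasionally, concentrate on a confidently wrong answer.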

Figure 3: Visualization of confidence measurements and offline thinking with confidence.

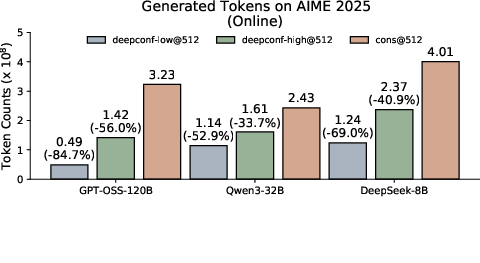

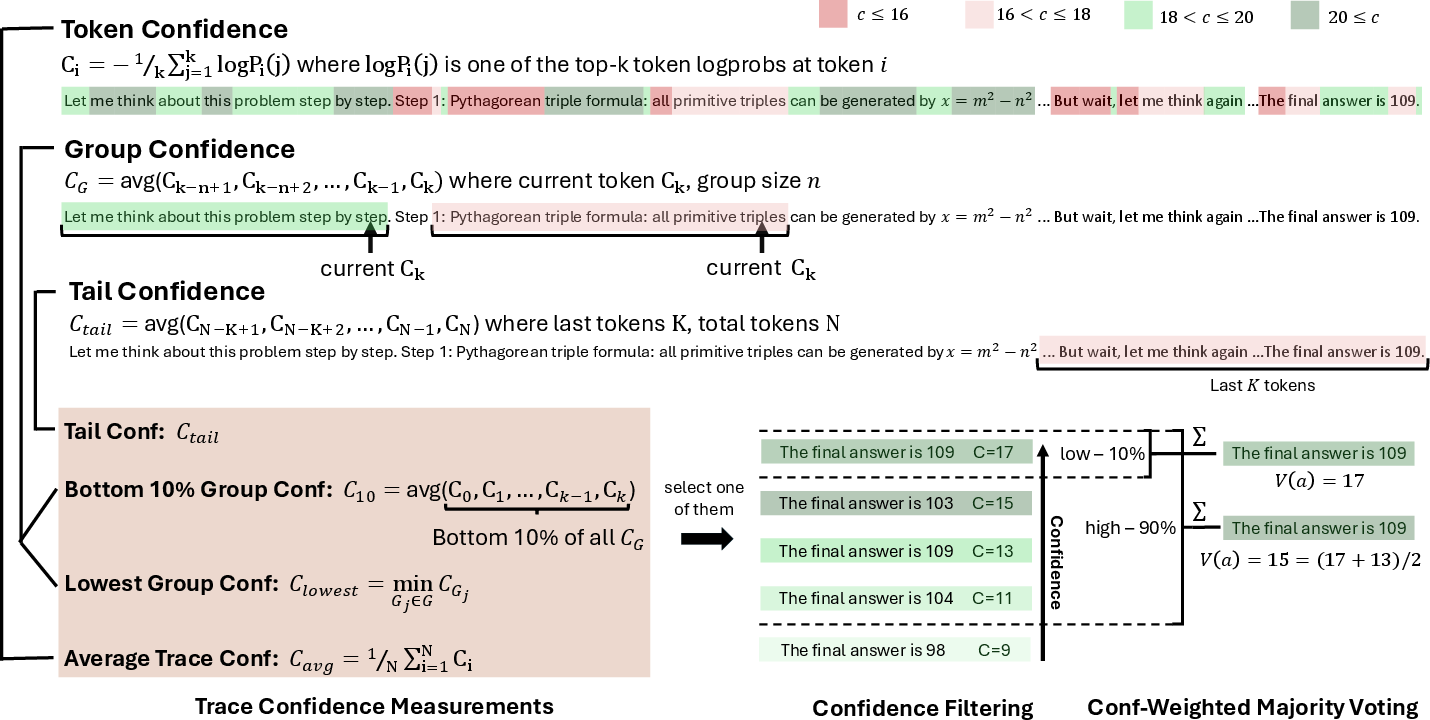

Online DeepConf

Online DeepConf enables real-time filtering and early termination of low-confidence traces during generation. The method uses a warmup phase to calibrate a confidence threshold $s$ (e.g., the 90th percentile of lowest group confidence over $N_{\text{init}}$ warmup traces). During generation, a trace is terminated as soon as its current group confidence falls below $s$. Adaptive sampling continues until consensus is reached or a budget cap is met.

Algorithmic steps:

- Warmup: Generate $N_{\text{init}}$ traces, compute threshold $s$.

- For each new trace, monitor group confidence.

- Terminate trace early if confidence $< s$.

- Aggregate completed traces via confidence-weighted voting.

- Stop when consensus $\beta \ge \tau$ or budget $B$ is reached.
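The online loop can be simulated on pre-recorded traces to make the control flow concrete. The sketch below is hedged throughout: a trace is represented by a hypothetical `(answer, group_confidences)` pair rather than live generation, the threshold is taken as the $(100 - \eta)$-th percentile of the warmup traces' lowest group confidence (which gives the 90th percentile for top-10% filtering), warmup traces that clear the threshold are assumed to vote, the vote weight is assumed to be the lowest group confidence, and consensus $\beta$ is assumed to be the winning answer's share of total vote weight.

```python
import math
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Trace = Tuple[str, List[float]]  # (final answer, group confidence at each step)


def percentile(values: List[float], q: float) -> float:
    """Linear-interpolation percentile for q in [0, 100]."""
    ordered = sorted(values)
    pos = (len(ordered) - 1) * q / 100.0
    lo, hi = math.floor(pos), math.ceil(pos)
    return ordered[lo] + (ordered[hi] - ordered[lo]) * (pos - lo)


def consensus(votes: Dict[str, float]) -> float:
    """Share of total vote weight held by the leading answer."""
    total = sum(votes.values())
    return max(votes.values()) / total if total > 0 else 0.0


def deepconf_online(warmup: List[Trace], stream: Iterable[Trace],
                    eta: float, tau: float, budget: int):
    # Warmup traces run to completion; their lowest group confidence calibrates s.
    warm_scores = [min(groups) for _, groups in warmup]
    s = percentile(warm_scores, 100.0 - eta)

    votes: Dict[str, float] = defaultdict(float)
    for (answer, _), score in zip(warmup, warm_scores):
        if score >= s:
            votes[answer] += score

    used = len(warmup)
    steps = sum(len(groups) for _, groups in warmup)
    pending = iter(stream)

    # Sample more traces until consensus or the trace budget is reached.
    while used < budget and consensus(votes) < tau:
        nxt = next(pending, None)
        if nxt is None:
            break
        answer, groups = nxt
        used += 1
        # Early termination: stop at the first step whose group confidence is below s.
        cut = next((i for i, g in enumerate(groups) if g < s), None)
        if cut is not None:
            steps += cut + 1  # only the steps before the cut were generated
            continue
        steps += len(groups)
        votes[answer] += min(groups)

    best = max(votes, key=votes.get)
    return best, s, used, steps


warmup = [("A", [5.0, 4.0, 6.0]), ("B", [7.0, 6.0, 8.0]),
          ("B", [3.0, 2.0, 4.0]), ("A", [6.0, 5.0, 7.0])]
stream = [("A", [6.0, 5.0, 6.0]), ("B", [6.0, 3.0, 7.0]), ("A", [8.0, 7.0, 7.0]),
          ("A", [9.0, 9.0, 9.0]), ("B", [9.0, 9.0, 9.0])]
print(deepconf_online(warmup, stream, eta=50, tau=0.8, budget=10))
```

In this toy run the second streamed trace is cut after two steps, and sampling stops before the fifth streamed trace because the consensus threshold is already met; both effects are where the token savings come from.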

Figure 4: DeepConf during online generation.

Experimental Results

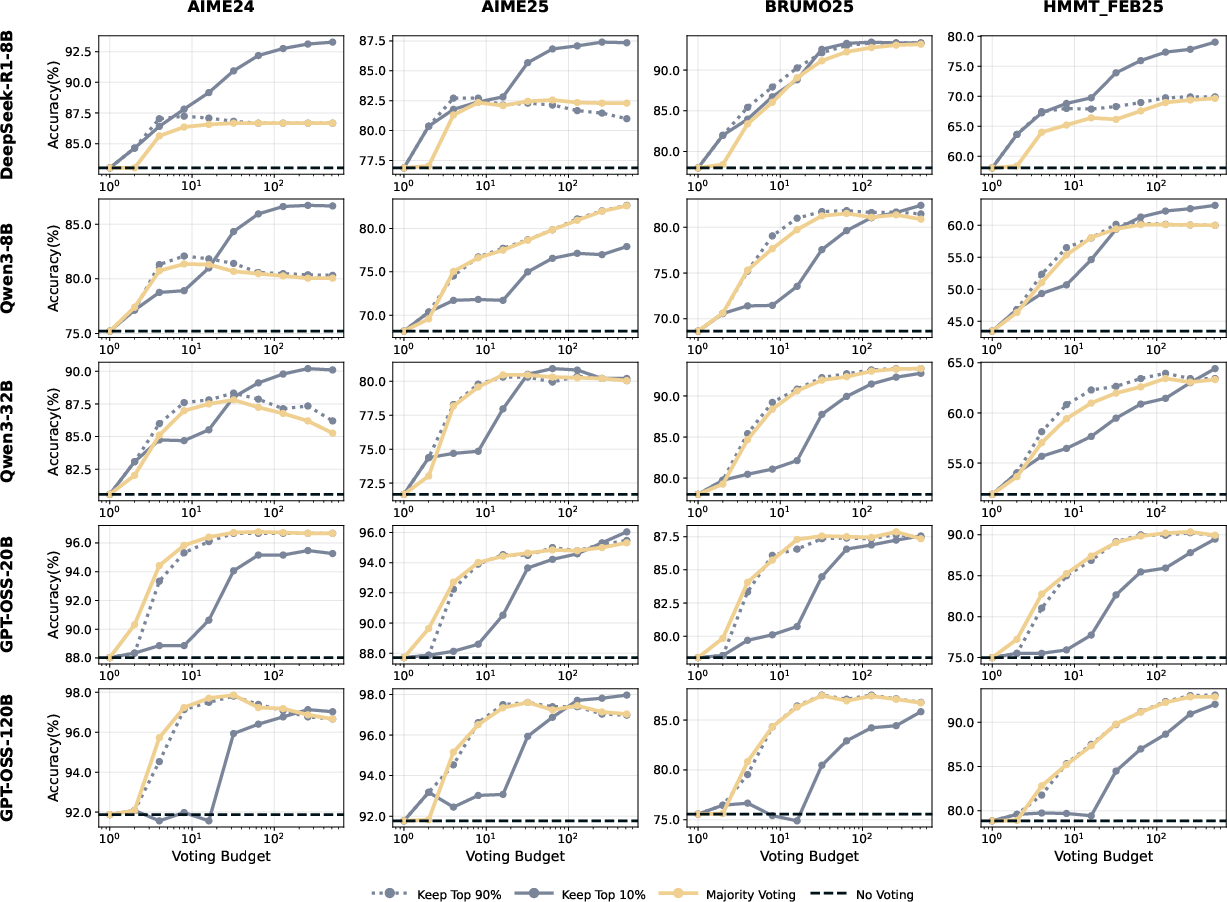

Offline Evaluations

DeepConf consistently outperforms standard majority voting across models and datasets. Filtering with η=10% yields the largest gains, e.g., DeepSeek-8B on AIME25 (82.3% → 87.4%), Qwen3-32B on AIME24 (85.3% → 90.8%), and GPT-OSS-120B reaching 99.9% on AIME25. Conservative filtering (η=90%) matches or slightly exceeds majority voting, providing a safer default.

Figure 5: Offline accuracy with Lowest Group Confidence filtering (DeepSeek-8B) on AIME24, AIME25, BRUMO25, HMMT25.

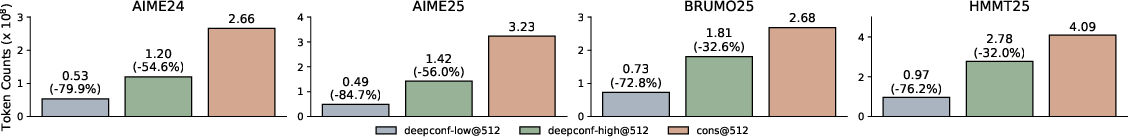

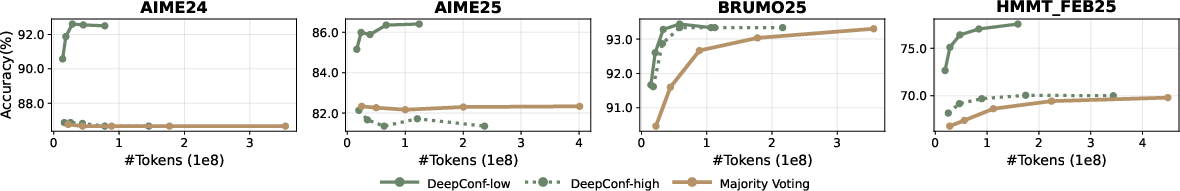

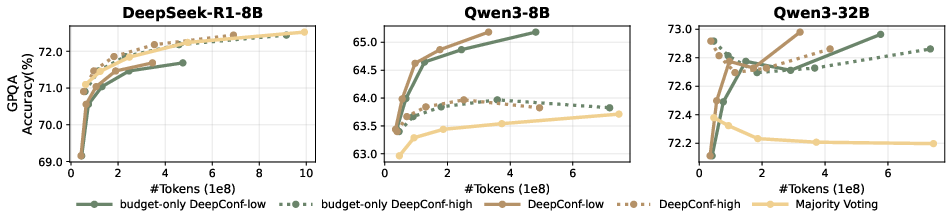

Online Evaluations

Online DeepConf achieves substantial token savings (up to 84.7%) while maintaining or improving accuracy. DeepConf-low (top 10% filtering) provides the largest efficiency gains, with accuracy improvements in most cases. DeepConf-high (top 90% filtering) is more conservative, with smaller token savings but minimal accuracy loss.

Figure 6: Generated tokens comparison across different tasks based on GPT-OSS-120B.

Figure 7: Scaling behavior: Accuracy vs voting size for different methods and models using offline DeepConf.

Figure 8: Scaling behavior: Accuracy vs token cost for different methods and models using online DeepConf.

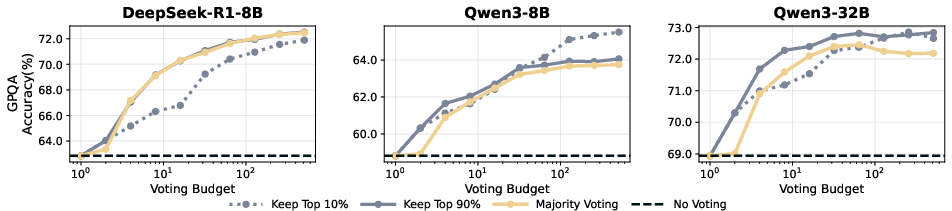

Scaling and Ablation Studies

DeepConf demonstrates robust scaling behavior across model sizes (8B–120B) and ensemble budgets. Aggressive filtering (top 10%) yields higher accuracy on most tasks, but can occasionally concentrate on confidently wrong answers. Conservative filtering (top 90%) is more stable. Ablations on consensus thresholds, warmup size, and confidence metrics confirm the reliability and flexibility of DeepConf.

Figure 9: Scaling behavior: Accuracy vs budget size for different methods on GPQA-Diamond.

Figure 10: Scaling behavior: Accuracy vs token cost for different methods on GPQA-Diamond.

Implementation and Deployment

DeepConf is implemented with minimal changes to vLLM, requiring only extensions to logprobs processing and early-stop logic. The method is compatible with OpenAI-style APIs and can be enabled per request via extra arguments. Confidence metrics are computed using token log-probabilities, with sliding windows for local metrics. The approach is model-agnostic and does not require retraining or fine-tuning.
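As a rough illustration of the early-stop logic described here, the sketch below keeps a fixed-size window of token confidences and signals a stop when the window mean drops below a threshold. It is not vLLM code: the class name `GroupConfidenceMonitor`, its parameters, and the choice to wait until the window is full before checking are all assumptions made for this example. The input to `update` is the list of top-$k$ log-probabilities that a serving framework would return for the newly generated token.

```python
from collections import deque
from typing import Deque, Sequence


class GroupConfidenceMonitor:
    """Streaming sliding-window confidence check for a single trace."""

    def __init__(self, window: int, threshold: float) -> None:
        self.window = window
        self.threshold = threshold
        self._buffer: Deque[float] = deque()
        self._running_sum = 0.0

    def update(self, top_logprobs: Sequence[float]) -> bool:
        """Consume one token's top-k log-probabilities.

        Returns True when the trace should be terminated early.
        """
        # Token confidence: negative mean log-probability of the top-k tokens.
        confidence = -sum(top_logprobs) / len(top_logprobs)
        self._buffer.append(confidence)
        self._running_sum += confidence

        # Keep only the most recent `window` confidences.
        if len(self._buffer) > self.window:
            self._running_sum -= self._buffer.popleft()

        # Assumption: do not stop before a full window has been observed.
        if len(self._buffer) < self.window:
            return False
        return self._running_sum / self.window < self.threshold


monitor = GroupConfidenceMonitor(window=2, threshold=1.0)
for step, logprobs in enumerate([[-2.0, -2.0], [-1.0, -1.0], [-0.5, -0.5]]):
    if monitor.update(logprobs):
        print(f"stop at step {step}")
        break
```

Because only the last `window` confidences and a running sum are stored, the per-trace state is constant in the trace length, and each update costs constant time.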

Resource Requirements

- Computational Overhead: DeepConf reduces token generation by up to 84.7% in the reported experiments, directly lowering inference cost.

- Memory: Sliding window confidence computation requires maintaining token log-probabilities for each trace.

- Scalability: The method is effective across a wide range of model sizes and ensemble budgets.

Trade-offs

- Aggressive Filtering: Maximizes accuracy and efficiency, but risks overconfidence on incorrect answers.

- Conservative Filtering: Safer, with smaller efficiency gains.

- Metric Choice: Local metrics (lowest group, tail) outperform global averages for long reasoning chains.

Implications and Future Directions

DeepConf provides a practical solution for efficient, high-accuracy LLM reasoning in deployment scenarios where computational resources are constrained. The method is particularly relevant for mathematical and STEM tasks requiring ensemble reasoning. Future work may extend confidence-based filtering to reinforcement learning, improve calibration for confidently wrong traces, and explore integration with uncertainty quantification and abstention mechanisms.

Conclusion

DeepConf introduces a confidence-guided approach to test-time reasoning in LLMs, achieving significant improvements in both accuracy and computational efficiency. The method is simple to implement, model-agnostic, and robust across diverse tasks and model scales. Confidence-aware filtering and early termination represent a scalable strategy for efficient ensemble reasoning, with strong empirical results and broad applicability in real-world LLM deployment.
