- The paper presents GSFix3D, which conditions a latent diffusion model on dual inputs (mesh and 3DGS renderings) to repair artifacts in novel views.

- It introduces a customized fine-tuning protocol and random mask augmentation to address missing regions and enhance inpainting in under-constrained areas.

- Experiments on benchmark datasets show significant gains in PSNR and SSIM, and tests on self-collected real-world data show robustness to pose errors, supporting the method's practical applicability.

Diffusion-Guided Repair of Novel Views in Gaussian Splatting: The GSFix3D Framework

Introduction and Motivation

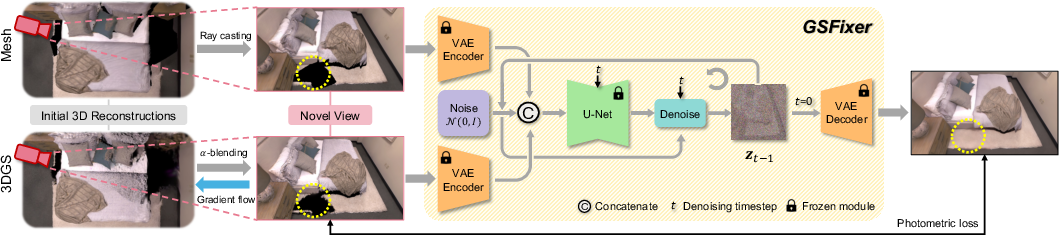

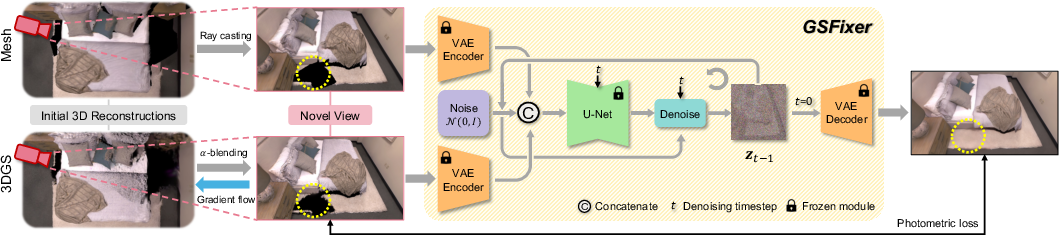

The GSFix3D framework addresses a persistent challenge in 3D scene reconstruction: generating artifact-free, photorealistic novel views from 3D Gaussian Splatting (3DGS) representations, particularly in sparsely observed regions or from extreme viewpoints. 3DGS offers explicit, differentiable scene modeling and fast rendering, but it relies on dense input views, so under-constrained areas end up with incomplete geometry and visible artifacts. Diffusion models, notably latent-space denoising approaches, have strong generative capabilities in 2D image synthesis but lack the spatial consistency and scene awareness required for 3D reconstruction. GSFix3D bridges this gap by distilling diffusion priors into 3DGS, enabling robust artifact removal and inpainting for novel view repair.

Figure 1: System overview of the proposed GSFix3D framework for novel view repair, integrating mesh and 3DGS renderings as conditional inputs to GSFixer, whose outputs are distilled back into 3D via photometric optimization.

Methodology

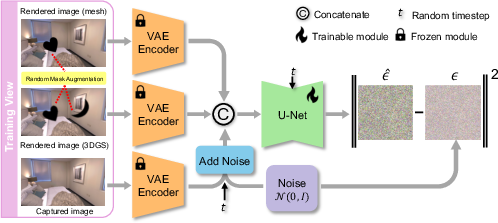

Customized Fine-Tuning Protocol

At the core of GSFix3D is GSFixer, a latent diffusion model adapted for scene-specific artifact removal and inpainting. The fine-tuning protocol extends the conditional input space to include both mesh and 3DGS renderings, exploiting their complementary strengths: mesh reconstructions provide coherent geometry in under-constrained regions, while 3DGS excels at photorealistic rendering near observed viewpoints. To keep the two inputs from sharing correlated artifacts, the mesh and 3DGS maps are reconstructed simultaneously with GSFusion instead of deriving the mesh from the 3DGS model.

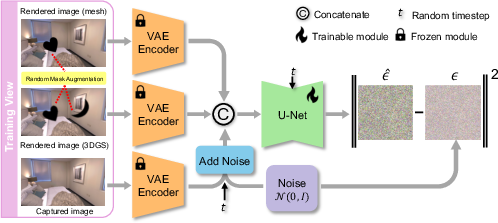

Figure 2: Illustration of the customized fine-tuning protocol for adapting a pretrained diffusion model into GSFixer, enabling it to handle diverse artifact types and missing regions.

The network repurposes the U-Net backbone of Stable Diffusion v2 and expands its input channels to accept the concatenated mesh, 3DGS, and noisy latent codes. The first-layer weights are duplicated across the new input channels and normalized so that the network's initial behavior is preserved. Training minimizes the standard DDPM objective, that is, predicting the noise added to the latent.
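A toy, pure-Python sketch of the two ideas in this paragraph: widening a pretrained input layer by duplicating its weights, and the noise-prediction (DDPM) loss. It assumes Stable Diffusion's 4-channel latents, models the first convolution as a per-pixel 1x1 projection (the real layer is a 3x3 convolution, but the same duplicate-and-divide rule can be applied to every kernel tap), and assumes the concatenation order mesh | 3DGS | noisy latent. Dividing by the number of copies is one common way to normalize duplicated weights; the exact rule used for GSFixer is an assumption here, and all function names are hypothetical.

```python
import math
import random


def expand_first_layer(weight, num_inputs=3):
    """Widen an input projection from C to num_inputs * C input channels.

    weight[o][i] maps input channel i to output channel o (a 1x1 stand-in for
    the U-Net's first convolution). Each row is tiled num_inputs times and
    divided by num_inputs, so feeding the same latent into every slot
    reproduces the pretrained layer's output.
    """
    return [[w / num_inputs for _ in range(num_inputs) for w in row] for row in weight]


def apply_layer(weight, x):
    """Per-pixel channel mixing: x holds the channel values of one latent pixel."""
    return [sum(w * v for w, v in zip(row, x)) for row in weight]


def add_noise(z0, eps, alpha_bar):
    """DDPM forward process: z_t = sqrt(alpha_bar) * z0 + sqrt(1 - alpha_bar) * eps."""
    return [math.sqrt(alpha_bar) * a + math.sqrt(1.0 - alpha_bar) * e for a, e in zip(z0, eps)]


def noise_prediction_loss(eps, eps_pred):
    """Standard DDPM objective: mean squared error between true and predicted noise."""
    return sum((e - p) ** 2 for e, p in zip(eps, eps_pred)) / len(eps)


# Toy pretrained projection: 4 latent channels -> 2 feature channels.
w = [[0.5, -1.0, 2.0, 0.0], [1.0, 1.0, 1.0, 1.0]]
w_wide = expand_first_layer(w)  # now accepts 12 channels: mesh | 3DGS | noisy
z = [0.2, -0.4, 0.1, 0.3]
assert all(math.isclose(a, b) for a, b in zip(apply_layer(w, z), apply_layer(w_wide, z * 3)))

# One illustrative training step on a single latent pixel (hypothetical values).
random.seed(0)
z_gt = [0.1, 0.0, -0.2, 0.4]    # latent of the clean target image
z_mesh = [0.1, 0.1, -0.1, 0.3]  # latent of the mesh rendering
z_gs = [0.0, -0.1, -0.3, 0.5]   # latent of the 3DGS rendering
eps = [random.gauss(0.0, 1.0) for _ in z_gt]
z_t = add_noise(z_gt, eps, alpha_bar=0.5)
features = apply_layer(w_wide, z_mesh + z_gs + z_t)  # channel-wise concatenation
# A real U-Net would map `features` to a noise estimate; zeros stand in here.
eps_pred = [0.0] * len(eps)
loss = noise_prediction_loss(eps, eps_pred)
```

Dividing by three keeps the layer's output unchanged when the three slots carry the same latent, so the pretrained network starts from activations of the magnitude it was trained on.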

Data Augmentation for Inpainting

The training renderings contain few missing regions, so without help the model would see little supervision for filling holes. A random mask augmentation strategy addresses this: semantic masks from annotated real images are randomly applied to the mesh and 3DGS renderings to simulate occlusions and under-constrained regions, and the masks are softened with a Gaussian blur to approximate the soft boundaries typical of 3DGS artifacts. This augmentation is critical for GSFixer's inpainting ability.
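A minimal sketch of the mask augmentation on single-channel renderings stored as 2D lists. The blur parameters (`sigma`, `radius`), the fill value of 0, and the choice to draw an independent mask for each rendering are assumptions; the source does not say whether the mesh and 3DGS renderings share a mask. All function names are hypothetical.

```python
import math
import random


def gaussian_kernel(sigma, radius):
    """Normalized 1D Gaussian weights for offsets -radius..radius."""
    weights = [math.exp(-(k * k) / (2.0 * sigma * sigma)) for k in range(-radius, radius + 1)]
    total = sum(weights)
    return [wt / total for wt in weights]


def blur(image, sigma=1.0, radius=2):
    """Separable Gaussian blur of a 2D list, clamping coordinates at the borders."""
    h, w = len(image), len(image[0])
    kernel = gaussian_kernel(sigma, radius)
    offsets = range(-radius, radius + 1)
    # Horizontal pass, then vertical pass.
    rows = [[sum(kv * image[y][min(max(x + k, 0), w - 1)] for k, kv in zip(offsets, kernel))
             for x in range(w)] for y in range(h)]
    return [[sum(kv * rows[min(max(y + k, 0), h - 1)][x] for k, kv in zip(offsets, kernel))
             for x in range(w)] for y in range(h)]


def apply_mask(rendering, binary_mask, sigma=1.0, radius=2, fill=0.0):
    """Blank out masked pixels (mask == 1), blending with a soft blurred boundary."""
    alpha = blur(binary_mask, sigma, radius)
    return [[(1.0 - a) * v + a * fill for v, a in zip(row_v, row_a)]
            for row_v, row_a in zip(rendering, alpha)]


def augment_pair(mesh_render, gs_render, mask_bank, rng):
    """Apply an independently drawn semantic mask to each rendering (assumed policy)."""
    return (apply_mask(mesh_render, rng.choice(mask_bank)),
            apply_mask(gs_render, rng.choice(mask_bank)))


# 15x15 toy renderings and one hypothetical mask covering a 5x5 central block.
size = 15
mesh = [[1.0] * size for _ in range(size)]
gs = [[0.8] * size for _ in range(size)]
mask = [[1.0 if 5 <= y <= 9 and 5 <= x <= 9 else 0.0 for x in range(size)] for y in range(size)]
mesh_aug, gs_aug = augment_pair(mesh, gs, [mask], random.Random(0))
```

Pixels deep inside the mask are blanked, pixels far from it are untouched, and pixels on the mask edge are only partially attenuated, which is the soft boundary the blur is meant to produce.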

Inference and 3D Distillation

At inference, GSFixer receives mesh and 3DGS renderings from novel viewpoints, which are encoded into the latent space. Denoising follows a DDIM schedule for efficient sampling. The repaired images are decoded and used as pseudo-inputs for photometric optimization of the 3DGS representation, which minimizes a weighted combination of L1 and SSIM losses. Adaptive density control fills previously empty regions, and multi-view constraints are enforced by adding the repaired views and their poses to the training set.
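The two inference-time ingredients can be sketched in plain Python: a deterministic DDIM update (eta = 0), and an L1 + SSIM photometric loss. The weighting `lam = 0.2` is borrowed from the original 3DGS paper and is an assumption here, as is the simplified SSIM computed over a single global window instead of the usual local 11x11 Gaussian windows. `predict_eps` stands in for GSFixer conditioned on the mesh and 3DGS latents; all names are hypothetical.

```python
import math


def ddim_step(x_t, eps, ab_t, ab_prev):
    """Deterministic DDIM update (eta = 0) from cumulative alpha ab_t to ab_prev."""
    # Estimate the clean latent implied by the predicted noise.
    x0 = [(x - math.sqrt(1.0 - ab_t) * e) / math.sqrt(ab_t) for x, e in zip(x_t, eps)]
    # Move that estimate to the less noisy level, reusing the same noise direction.
    return [math.sqrt(ab_prev) * a + math.sqrt(1.0 - ab_prev) * e for a, e in zip(x0, eps)]


def ddim_sample(x_T, predict_eps, alpha_bars):
    """Run DDIM over alpha_bars (noisiest first, increasing), ending at alpha_bar = 1."""
    x = x_T
    schedule = list(alpha_bars) + [1.0]
    for ab_t, ab_prev in zip(schedule[:-1], schedule[1:]):
        x = ddim_step(x, predict_eps(x, ab_t), ab_t, ab_prev)
    return x


def global_ssim(x, y, c1=0.01 ** 2, c2=0.03 ** 2):
    """SSIM over one global window (simplification; standard SSIM uses local windows)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    vx = sum((a - mx) * (a - mx) for a in x) / n
    vy = sum((b - my) * (b - my) for b in y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y)) / n
    return ((2 * mx * my + c1) * (2 * cov + c2)) / ((mx * mx + my * my + c1) * (vx + vy + c2))


def photometric_loss(render, target, lam=0.2):
    """Weighted L1 + (1 - SSIM) loss; lam = 0.2 follows the original 3DGS setup."""
    l1 = sum(abs(a - b) for a, b in zip(render, target)) / len(render)
    return (1.0 - lam) * l1 + lam * (1.0 - global_ssim(render, target))
```

In a distillation loop, each repaired view would be paired with its pose and supervised with `photometric_loss` alongside the captured views.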

Experimental Results

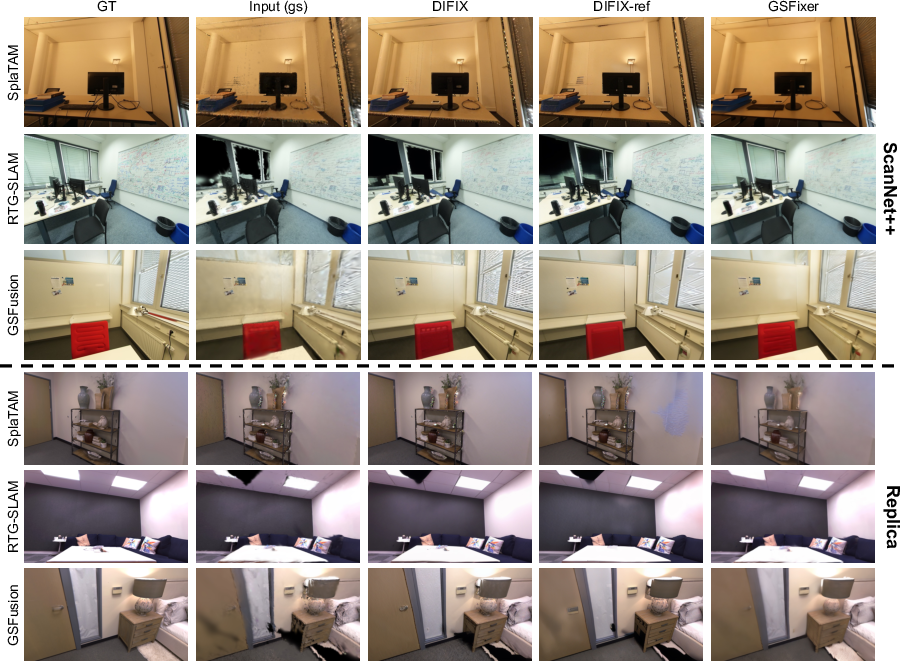

Quantitative and Qualitative Evaluation

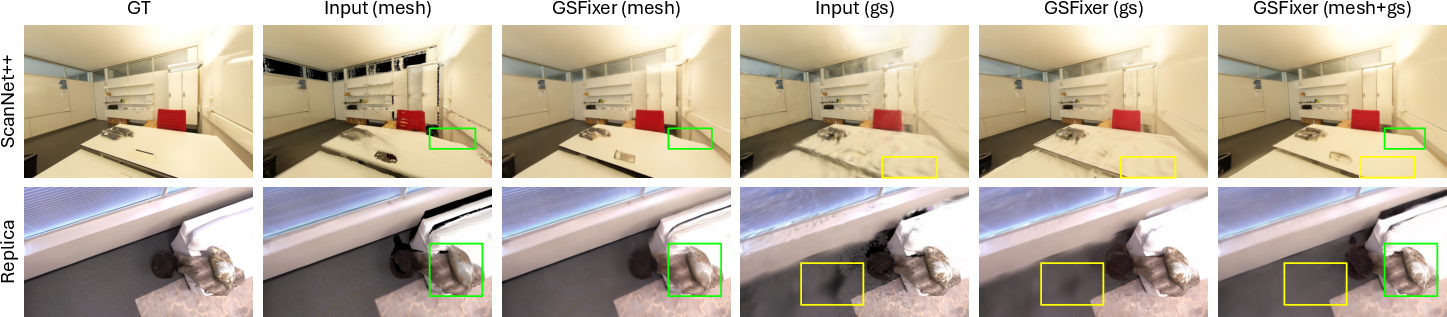

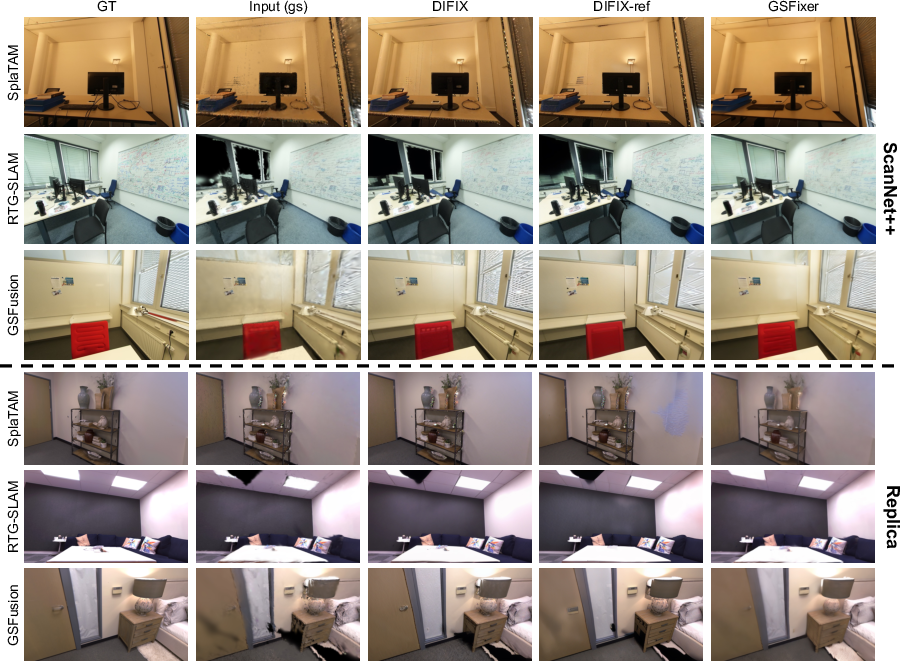

GSFix3D and GSFixer are evaluated on the ScanNet++ and Replica benchmarks, as well as on self-collected real-world data. GSFixer consistently outperforms DIFIX and DIFIX-ref on all metrics (PSNR, SSIM, LPIPS), with notable PSNR gains of up to 5 dB over the baselines in the RTG-SLAM+GSFixer setting. The dual-input configuration (mesh+3DGS) further boosts performance, demonstrating the value of complementary conditioning.

Figure 3: Qualitative comparisons of diffusion-based repair methods on ScanNet++ and Replica datasets. GSFixer removes artifacts and fills large holes, outperforming DIFIX and DIFIX-ref.
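PSNR is logarithmic in the mean squared error, so a gain of up to 5 dB corresponds to a substantial drop in pixel error. A small sketch with hypothetical helper names, assuming pixel values in [0, 1]:

```python
import math


def psnr(pred, target, max_val=1.0):
    """Peak signal-to-noise ratio in dB for flat lists of pixel values."""
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
    return float("inf") if mse == 0 else 10.0 * math.log10(max_val ** 2 / mse)


def mse_ratio_for_gain(delta_db):
    """Factor by which the MSE must shrink to raise PSNR by delta_db."""
    return 10.0 ** (delta_db / 10.0)


print(f"{mse_ratio_for_gain(5.0):.2f}")  # prints 3.16
```

A 5 dB gain therefore means the MSE is roughly 3.2 times lower.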

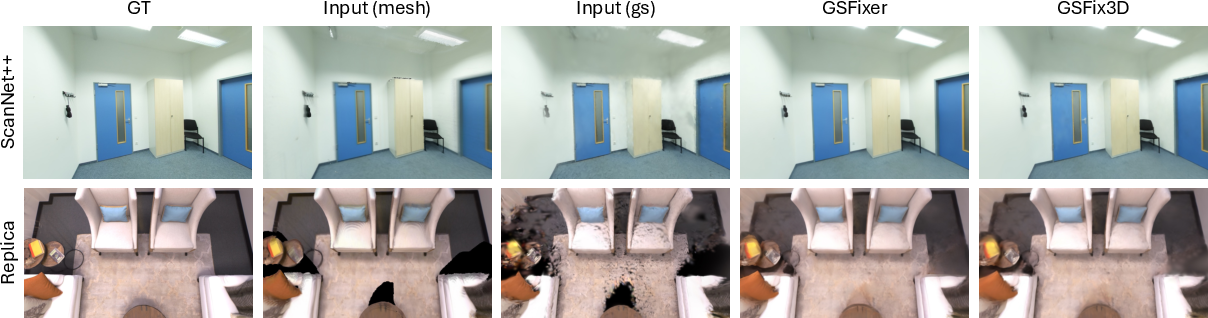

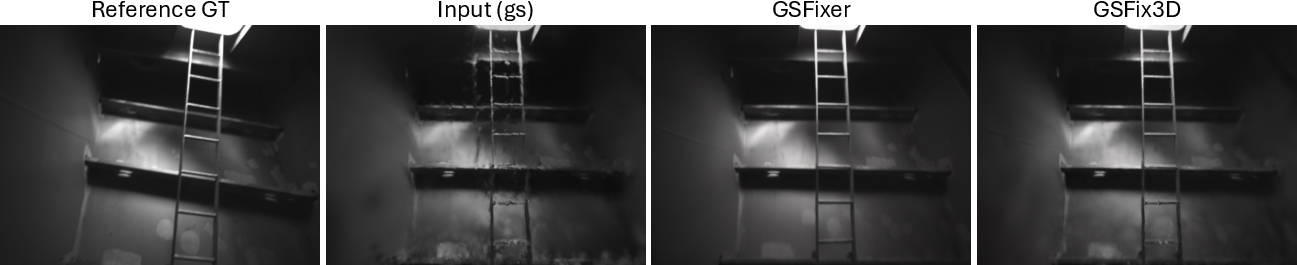

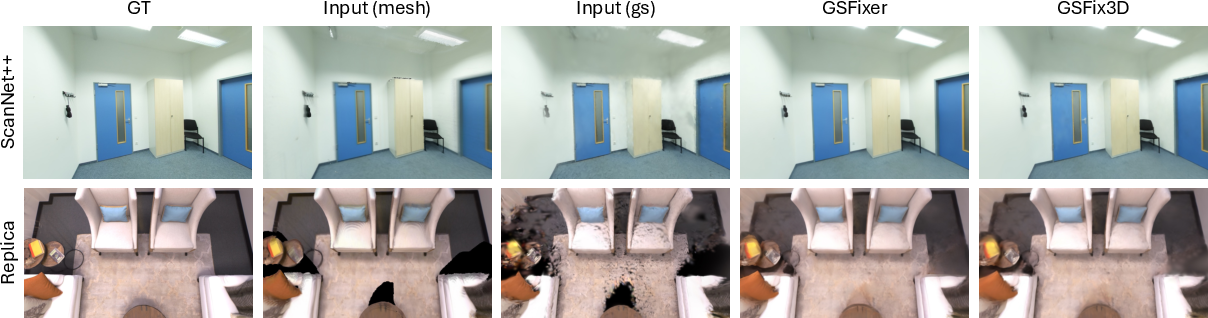

Figure 4: Qualitative comparison between GSFixer and GSFix3D. 2D visual improvements from GSFixer are effectively distilled into the 3D space by GSFix3D.

GSFix3D's multi-view optimization improves fidelity and cross-view consistency, as reflected in higher PSNR and SSIM scores than the direct GSFixer outputs. LPIPS is slightly higher (worse), which is attributed to 3DGS renderings being less smooth after optimization.

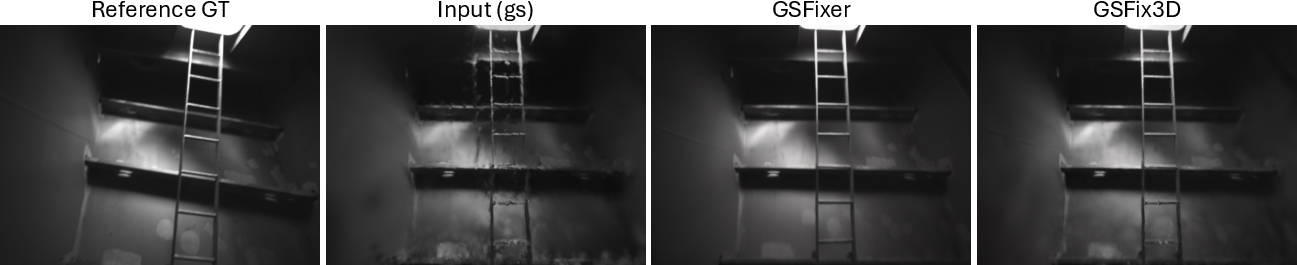

Real-World Robustness

GSFix3D demonstrates resilience to pose errors in real-world scenarios, such as ship interior scans and outdoor scenes captured with LiDAR-Inertial-Camera SLAM systems. The method effectively removes shadow-like floaters and repairs broken geometries, validating its adaptability to uncontrolled data collection and diverse reconstruction pipelines.

Figure 5: Novel view repair on self-collected ship data. GSFix3D is robust to pose errors, removing shadow-like floaters.

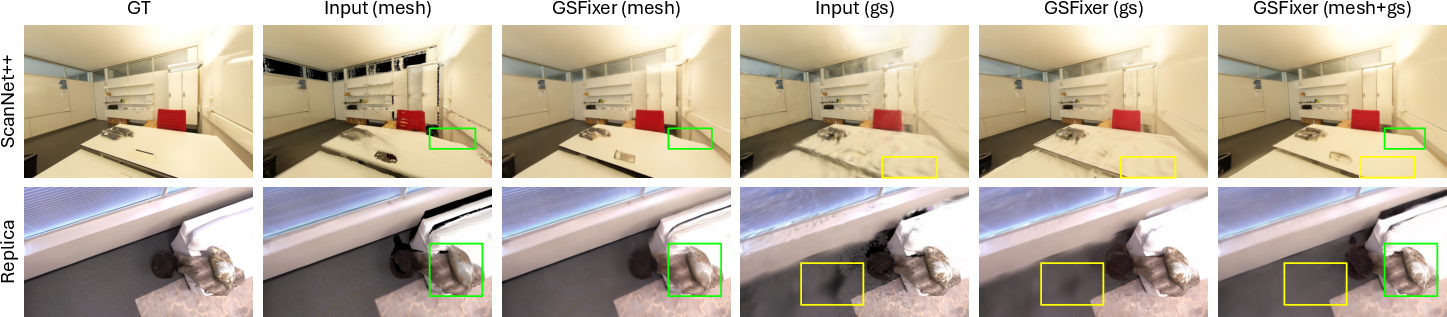

Ablation Studies

Ablation on input conditions reveals that mesh-only and 3DGS-only configurations have scene-dependent strengths. Dual-input conditioning consistently mitigates artifacts present in single-input settings, leveraging the geometric accuracy of meshes and the photorealism of 3DGS.

Figure 6: Qualitative ablation of input image conditions. Dual-input configuration effectively mitigates artifacts present in single-input settings.

Mask Augmentation Impact

Random mask augmentation is shown to be essential for inpainting large missing regions. Models trained without augmentation struggle to fill holes, even with dual-input conditioning, while the full model with augmentation produces coherent and realistic textures.

Figure 7: Qualitative ablation of random mask augmentation. Augmentation improves the ability to fill large missing regions.

Implementation Considerations

GSFix3D requires only brief per-scene fine-tuning (2–4 hours) on a consumer GPU with 24 GB of VRAM, with no need for large-scale curated real data. Pretraining is performed on synthetic datasets (Hypersim, Virtual KITTI), and fine-tuning uses captured RGB images, depth maps, and camera poses. The pipeline is compatible with various 3DGS-based SLAM systems and mesh extraction methods. The photometric optimization step is fully differentiable and benefits from sparse keyframe selection for efficiency.
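The source does not say how keyframes are chosen. The sketch below uses a common heuristic as an illustration only: keep a frame once it has moved far enough, in translation or rotation, from the last keyframe. Poses are assumed to be `(position, unit quaternion (w, x, y, z))` pairs, and the thresholds and function names are hypothetical.

```python
import math


def rotation_angle_deg(q1, q2):
    """Angle between two unit quaternions (w, x, y, z), in degrees."""
    dot = abs(sum(a * b for a, b in zip(q1, q2)))
    return math.degrees(2.0 * math.acos(min(1.0, dot)))


def select_keyframes(poses, min_translation=0.3, min_rotation_deg=15.0):
    """Greedy selection: keep a frame once it is far enough from the last keyframe."""
    if not poses:
        return []
    keyframes = [0]
    for i in range(1, len(poses)):
        pos, quat = poses[i]
        ref_pos, ref_quat = poses[keyframes[-1]]
        if (math.dist(pos, ref_pos) >= min_translation
                or rotation_angle_deg(quat, ref_quat) >= min_rotation_deg):
            keyframes.append(i)
    return keyframes


identity = (1.0, 0.0, 0.0, 0.0)
track = [((x, 0.0, 0.0), identity) for x in (0.0, 0.1, 0.2, 0.35, 0.4, 0.7)]
print(select_keyframes(track))  # prints [0, 3, 5]
```

A translation or rotation threshold keeps the keyframe set sparse during slow motion while still adding frames whenever the viewpoint changes meaningfully; the thresholds would need tuning per sensor setup.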

Implications and Future Directions

GSFix3D demonstrates that diffusion priors can be efficiently distilled into explicit 3D representations, enabling robust repair of novel views in challenging scenarios. The dual-input conditioning and mask augmentation strategies are broadly applicable to other generative repair tasks in 3D vision. The framework's adaptability to real-world data and resilience to pose errors suggest potential for deployment in consumer-grade 3D scanning, robotics, and AR/VR applications. Future work may explore temporal coherence for video sequences, integration with multi-modal generative models, and further reduction of fine-tuning requirements via meta-learning or self-supervised adaptation.

Conclusion

GSFix3D establishes a practical and effective pipeline for diffusion-guided repair of novel views in 3DGS reconstructions. By combining efficient scene-specific fine-tuning, dual-input conditioning, and targeted data augmentation, GSFixer achieves state-of-the-art artifact removal and inpainting, with outputs seamlessly distilled into 3D space. The method's strong quantitative and qualitative results, low resource requirements, and robustness to real-world challenges underscore its utility for a wide range of 3D reconstruction and rendering applications.