LongSplat: Robust Unposed 3D Gaussian Splatting for Casual Long Videos (2508.14041v1)

Abstract: LongSplat addresses critical challenges in novel view synthesis (NVS) from casually captured long videos characterized by irregular camera motion, unknown camera poses, and expansive scenes. Current methods often suffer from pose drift, inaccurate geometry initialization, and severe memory limitations. To address these issues, we introduce LongSplat, a robust unposed 3D Gaussian Splatting framework featuring: (1) Incremental Joint Optimization that concurrently optimizes camera poses and 3D Gaussians to avoid local minima and ensure global consistency; (2) a robust Pose Estimation Module leveraging learned 3D priors; and (3) an efficient Octree Anchor Formation mechanism that converts dense point clouds into anchors based on spatial density. Extensive experiments on challenging benchmarks demonstrate that LongSplat achieves state-of-the-art results, substantially improving rendering quality, pose accuracy, and computational efficiency compared to prior approaches. Project page: https://linjohnss.github.io/longsplat/

Summary

- The paper introduces a robust unposed 3D Gaussian splatting approach that handles irregular camera motion and unknown poses for long videos.

- It employs an incremental, adaptive pipeline using local and global joint optimization with octree anchor formation and photometric refinement.

- Experimental results show superior performance in rendering quality, pose accuracy, and memory efficiency compared to existing methods.

Introduction and Motivation

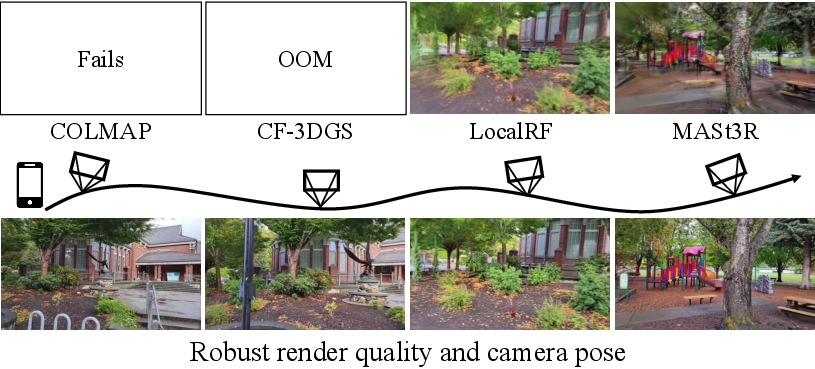

LongSplat introduces a robust framework for novel view synthesis (NVS) from casually captured long videos, addressing the challenges of irregular camera motion, unknown poses, and large-scale scene complexity. Existing NVS methods relying on Structure-from-Motion (SfM) preprocessing (e.g., COLMAP) frequently fail in casual scenarios due to pose drift and incomplete geometry, while COLMAP-free approaches suffer from memory bottlenecks and fragmented reconstructions. LongSplat circumvents these limitations by jointly optimizing camera poses and 3D Gaussian Splatting (3DGS) in an incremental, adaptive pipeline, leveraging learned 3D priors and a memory-efficient octree anchor formation.

Figure 1: Novel view synthesis for casual long videos. LongSplat robustly reconstructs scenes where prior methods fail due to pose drift, memory constraints, or fragmented geometry.

Methodology

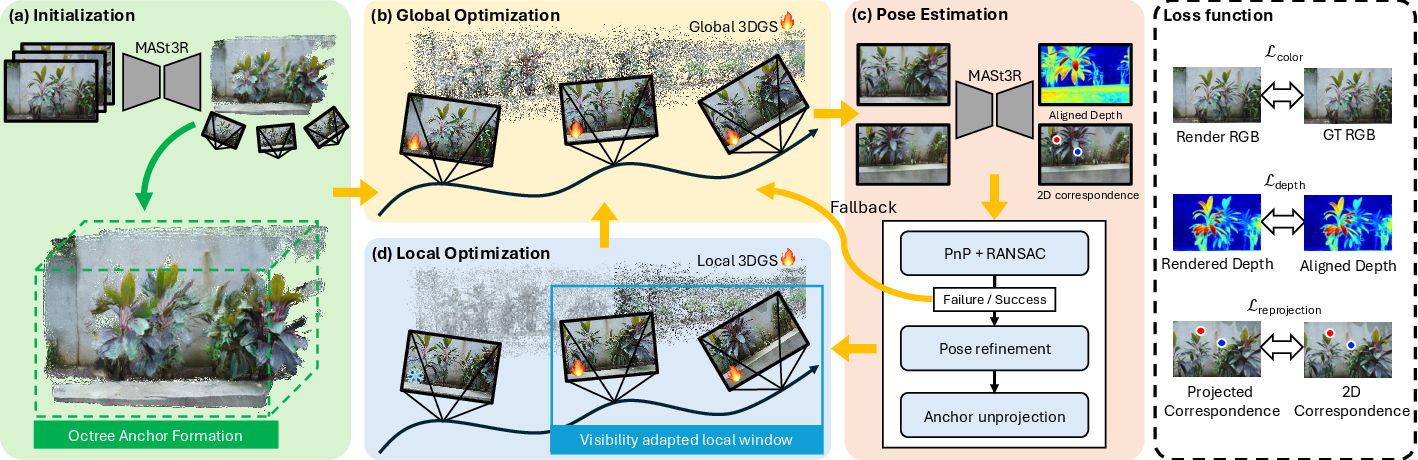

Overview of the LongSplat Framework

LongSplat operates on casually captured long videos without known camera poses. The pipeline consists of:

- Initialization: Converts MASt3R's globally aligned dense point cloud into an octree-anchored 3DGS.

- Global Optimization: Jointly refines all camera poses and 3D Gaussians for global consistency.

- Pose Estimation: Estimates new frame poses via correspondence-guided PnP, photometric refinement, and anchor updates.

- Incremental Optimization: Alternates between local optimization within a visibility-adapted window and periodic global optimization.

- Unified Objective: Combines photometric, depth, and reprojection losses for accurate geometry and appearance.

Figure 2: Overview of the LongSplat framework, showing incremental scene reconstruction via tightly coupled pose estimation and 3D Gaussian Splatting.
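As a rough sketch, the unified objective listed above can be written as a weighted sum of its three terms. The weights $\lambda_{\text{depth}}$ and $\lambda_{\text{reproj}}$ are assumed symbols introduced here for illustration; this summary does not state the paper's exact weighting or the precise form of each term.

$$
\mathcal{L} = \mathcal{L}_{\text{photo}} + \lambda_{\text{depth}}\,\mathcal{L}_{\text{depth}} + \lambda_{\text{reproj}}\,\mathcal{L}_{\text{reproj}}
$$

Here $\mathcal{L}_{\text{photo}}$ compares rendered and observed images, $\mathcal{L}_{\text{depth}}$ compares rendered depth against the monocular depth prior, and $\mathcal{L}_{\text{reproj}}$ penalizes the 2D reprojection error of matched keypoints.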

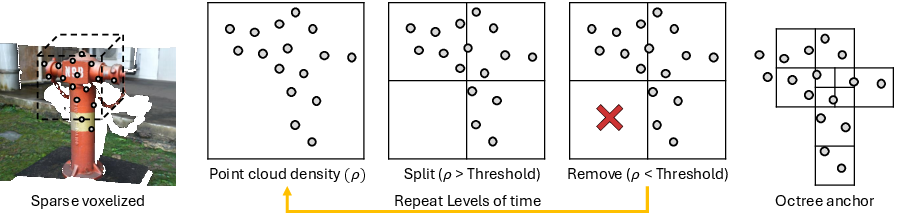

Octree Anchor Formation

To address memory efficiency and scalability, LongSplat introduces an adaptive octree anchor formation. Dense point clouds are voxelized, and voxels exceeding a density threshold are recursively split, while low-density voxels are pruned. This process yields anchors with spatial scales proportional to local scene complexity, enabling efficient representation and rendering of large-scale scenes.

Figure 3: Octree Anchor Formation. Density-guided adaptive voxel splitting and pruning enable memory-efficient anchor management for large-scale scenes.
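The density-guided split-and-prune idea can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function name `octree_anchors` and the parameters `density_thresh`, `min_points`, and `max_depth` are hypothetical, and density is approximated here by the raw point count per voxel.

```python
import numpy as np

def octree_anchors(points, voxel_size, density_thresh, min_points=1, max_depth=3):
    """Return (centers, sizes) of anchors built from a dense point cloud.

    Assumed rule (illustrative only): a voxel holding more than
    `density_thresh` points is split into children of half the size,
    up to `max_depth` levels; a voxel holding fewer than `min_points`
    points is pruned.
    """
    anchors = []

    def build(pts, size, depth):
        # Assign every point to a voxel at the current resolution.
        keys = np.floor(pts / size).astype(np.int64)
        uniq, inverse = np.unique(keys, axis=0, return_inverse=True)
        inverse = inverse.reshape(-1)
        for i, key in enumerate(uniq):
            cell = pts[inverse == i]
            if len(cell) < min_points:
                continue  # prune low-density voxel
            if len(cell) > density_thresh and depth < max_depth:
                build(cell, size / 2.0, depth + 1)  # split dense voxel
            else:
                # Keep one anchor at the voxel center, tagged with its scale.
                anchors.append(((key + 0.5) * size, size))

    build(np.asarray(points, dtype=np.float64), float(voxel_size), 0)
    centers = np.array([c for c, _ in anchors]).reshape(-1, 3)
    sizes = np.array([s for _, s in anchors])
    return centers, sizes
```

Dense regions end up covered by many small anchors and sparse regions by a few large ones (or none), which is the property the section attributes to the adaptive octree.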

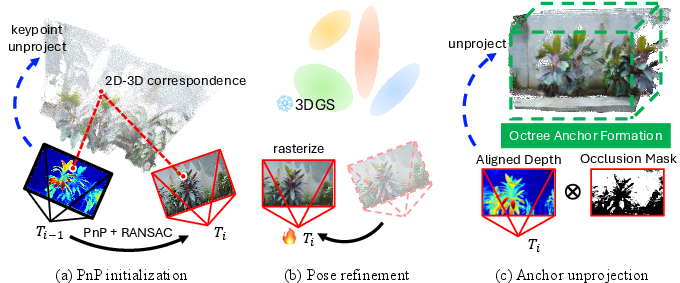

Pose Estimation Module

Camera poses are estimated incrementally, one frame at a time, from 2D-3D correspondences provided by MASt3R. An initial pose is solved via PnP with RANSAC and then refined photometrically by minimizing the error between the rendered and observed images. Depth scale drift is corrected by aligning rendered and predicted depths. Newly visible regions are detected via occlusion masks and unprojected into 3D, and anchors are updated using the octree strategy.

Figure 4: Camera pose estimation. PnP initialization, photometric refinement, and anchor unprojection for robust incremental pose tracking.
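Two of the steps above, depth scale alignment and unprojection of newly visible pixels, can be sketched in a few lines of NumPy. The PnP and photometric refinement steps are omitted. The helper names, the use of a median ratio for the scale, and the pinhole camera model with a 4×4 camera-to-world matrix are all assumptions made for illustration, not details confirmed by the source.

```python
import numpy as np

def align_depth_scale(rendered_depth, predicted_depth, valid):
    """Scale s such that s * predicted_depth ~= rendered_depth.

    Uses the median per-pixel ratio over `valid` pixels (an assumed,
    outlier-tolerant choice). `valid` should exclude zero depths.
    """
    ratio = rendered_depth[valid] / predicted_depth[valid]
    return float(np.median(ratio))

def unproject(depth, mask, K, cam_to_world):
    """Lift masked pixels to world-space 3D points.

    depth: (H, W) depth map, mask: (H, W) boolean map of newly visible
    pixels, K: 3x3 pinhole intrinsics, cam_to_world: 4x4 pose matrix.
    """
    v, u = np.nonzero(mask)                 # pixel rows (v) and columns (u)
    z = depth[v, u]
    x = (u - K[0, 2]) * z / K[0, 0]         # back-project along x
    y = (v - K[1, 2]) * z / K[1, 1]         # back-project along y
    pts_cam = np.stack([x, y, z, np.ones_like(z)], axis=1)
    return (cam_to_world @ pts_cam.T).T[:, :3]
```

In a pipeline of this kind, the predicted depth would first be multiplied by the aligned scale, and the unprojected points would then be passed to the anchor-formation step.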

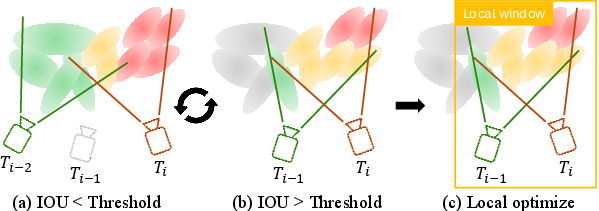

Incremental Joint Optimization

LongSplat alternates between local and global optimization:

- Local Optimization: Only Gaussians visible in the current frame's frustum are updated, using supervision from a dynamically selected, visibility-adapted window of frames. Covisibility between two frames is measured by the intersection-over-union (IoU) of their visible anchors, which keeps multi-view supervision balanced.

- Global Optimization: Periodically refines all parameters for long-term consistency.

- Loss Functions: Photometric loss, monocular depth loss, and keypoint reprojection loss are combined for robust geometry and pose refinement.

Figure 5: Visibility-Adapted Local Window. Dynamic window selection based on anchor visibility overlap ensures balanced training and local detail enhancement.
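One way to picture the visibility-adapted window is as a backward walk from the current frame that keeps earlier frames while their anchor IoU with the current frame stays above a threshold. The sketch below follows that reading; the function names, the threshold `iou_thresh`, the cap `max_frames`, and the stop-at-first-failure rule are hypothetical choices, not the paper's stated procedure.

```python
def anchor_iou(a, b):
    """Intersection-over-union of two collections of visible anchor ids."""
    a, b = set(a), set(b)
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def select_local_window(visible, current, iou_thresh=0.3, max_frames=8):
    """Pick the frames used for local optimization around `current`.

    visible: dict mapping frame index -> iterable of visible anchor ids.
    Walks backwards in time and stops at the first frame whose overlap
    with the current frame drops below `iou_thresh` (assumed rule).
    """
    window = [current]
    for f in range(current - 1, -1, -1):
        if len(window) >= max_frames:
            break
        if anchor_iou(visible[current], visible[f]) < iou_thresh:
            break
        window.append(f)
    return window[::-1]  # chronological order
```

With slow camera motion the overlap stays high and the window grows; with fast motion it shrinks, so the window size adapts to visibility rather than being fixed.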

Experimental Results

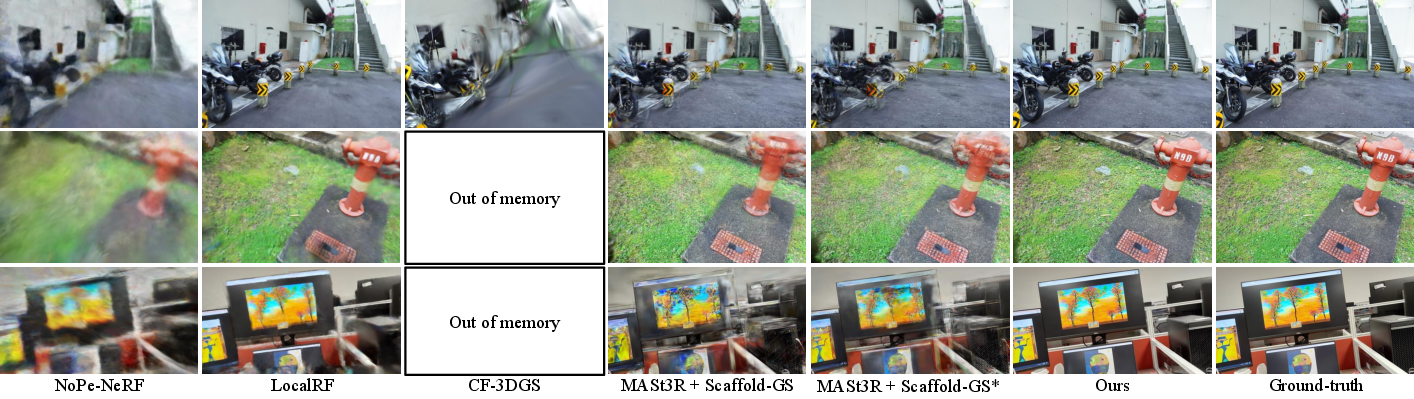

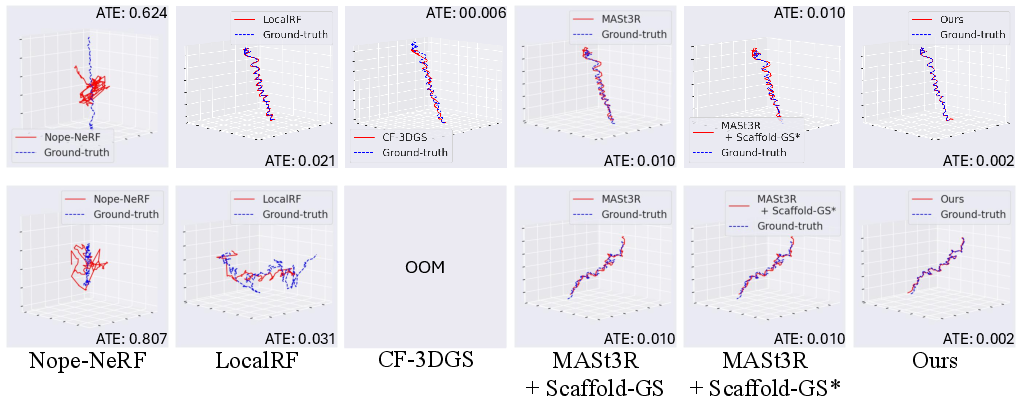

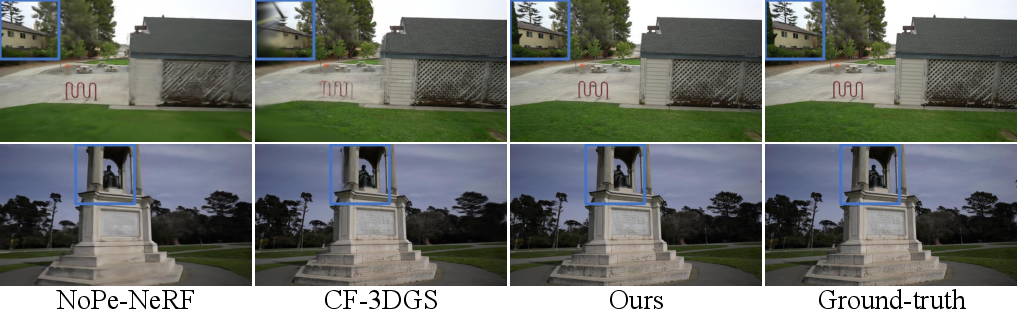

Quantitative and Qualitative Evaluation

LongSplat is evaluated on the Tanks and Temples, Free, and Hike datasets, demonstrating superior performance in rendering quality, pose accuracy, and computational efficiency.

- Free Dataset: LongSplat achieves an average PSNR of 27.88 dB, SSIM of 0.85, and LPIPS of 0.17, outperforming all baselines. Competing methods suffer from out-of-memory (OOM) errors, pose drift, or fragmented reconstructions.

- Tanks and Temples: LongSplat achieves a PSNR of 32.83 dB, SSIM of 0.94, and LPIPS of 0.08, with the lowest Absolute Trajectory Error (ATE) and Relative Pose Error (RPE).

- Hike Dataset: LongSplat achieves a PSNR of 25.39 dB, SSIM of 0.81, and LPIPS of 0.19, significantly surpassing prior methods.
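For readers unfamiliar with the PSNR figures quoted above, the metric follows directly from the mean squared error between a rendered image and its reference. The short sketch below uses the standard definition; the function name and the assumption that pixel values lie in [0, `max_val`] are illustrative.

```python
import numpy as np

def psnr(rendered, reference, max_val=1.0):
    """Peak signal-to-noise ratio in dB for images scaled to [0, max_val]."""
    rendered = np.asarray(rendered, dtype=np.float64)
    reference = np.asarray(reference, dtype=np.float64)
    mse = np.mean((rendered - reference) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_val ** 2 / mse))
```

Because the scale is logarithmic, a gain of about 3 dB corresponds to roughly halving the mean squared error.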

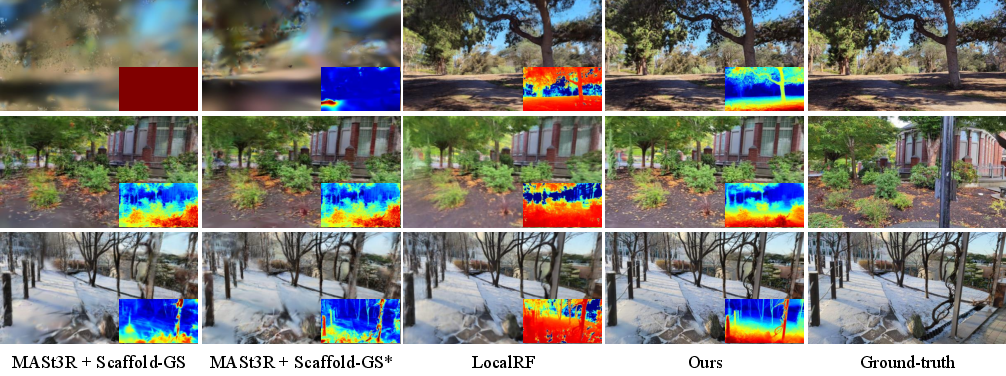

Figure 6: Qualitative comparison on the Free dataset. LongSplat produces visually consistent, sharp reconstructions, outperforming state-of-the-art baselines.

Figure 7: Visualization of camera trajectories on Free dataset. LongSplat reliably estimates stable trajectories, while CF-3DGS fails for long sequences.

Figure 8: Qualitative comparison on the Tanks and Temples dataset. LongSplat achieves sharper textures and more accurate geometry than competing methods.

Figure 9: Qualitative results on the Hike dataset. LongSplat preserves structural details and reduces artifacts in challenging outdoor scenes.

Ablation Studies

- Training Components: Removing pose estimation, global optimization, or local optimization degrades performance, confirming the necessity of each module.

- Local Window Size: The visibility-adapted window yields the best balance of local detail and global consistency.

- Anchor Unprojection: Adaptive octree formation achieves the highest quality and 7.92× memory compression compared to dense or fixed-voxel strategies.

- Training Efficiency: LongSplat trains at 281.71 FPS, 30× faster than LocalRF, with a compact model size of 101 MB.

Robustness Analysis

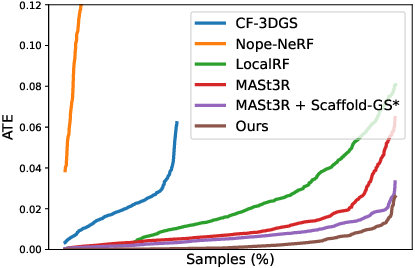

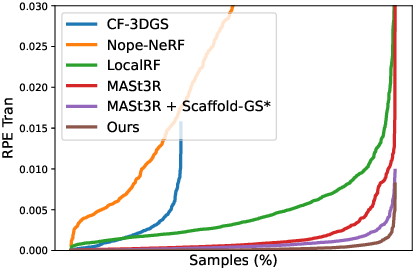

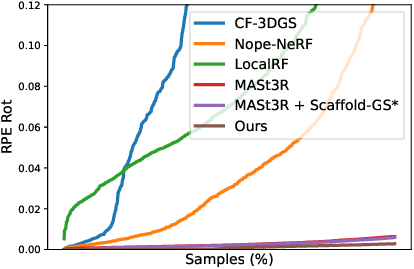

LongSplat consistently achieves lower cumulative errors in ATE and RPE, demonstrating superior robustness and reduced pose drift compared to existing methods.

Figure 10: Robustness analysis on camera pose estimation. LongSplat achieves lower errors and reduced drift across all metrics.
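The two pose metrics can be illustrated with a simplified sketch. It assumes the estimated camera positions have already been aligned to the ground-truth frame, and it computes a translation-only relative error from position differences; the full RPE is defined on relative SE(3) poses, so this is an approximation for intuition, with hypothetical function names.

```python
import numpy as np

def ate_rmse(est, gt):
    """RMSE of per-frame position error; est must be pre-aligned to gt."""
    err = np.linalg.norm(np.asarray(est) - np.asarray(gt), axis=1)
    return float(np.sqrt(np.mean(err ** 2)))

def rpe_trans_rmse(est, gt, delta=1):
    """Translation-only relative error between frames `delta` apart."""
    est, gt = np.asarray(est), np.asarray(gt)
    d_est = est[delta:] - est[:-delta]   # estimated frame-to-frame motion
    d_gt = gt[delta:] - gt[:-delta]      # ground-truth frame-to-frame motion
    err = np.linalg.norm(d_est - d_gt, axis=1)
    return float(np.sqrt(np.mean(err ** 2)))
```

A trajectory with a constant offset has nonzero ATE but zero relative error, while steady drift shows up in both, which is why the two metrics are reported together.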

Implementation Considerations

- Computational Requirements: LongSplat is optimized for single-GPU (RTX 4090) training, leveraging CUDA-accelerated rasterization and lightweight MLPs for anchor decoding.

- Scalability: Adaptive octree anchors enable efficient memory usage for long videos and large-scale scenes.

- Limitations: Assumes static scenes and fixed intrinsics; not suitable for dynamic objects or varying focal lengths.

Implications and Future Directions

LongSplat advances unposed NVS by integrating incremental joint optimization, robust pose tracking, and adaptive memory management. The framework is directly applicable to VR/AR content creation, video editing, and large-scale 3D mapping from consumer-grade video. Future work should address dynamic scene reconstruction, variable camera intrinsics, and further improvements in pose estimation robustness.

Conclusion

LongSplat establishes a new state-of-the-art for unposed 3D Gaussian Splatting in casual long videos, achieving superior rendering quality, pose accuracy, and computational efficiency. Its incremental, adaptive pipeline and robust optimization strategies make it a practical solution for real-world NVS applications, with clear avenues for extension to dynamic and unconstrained environments.
