- The paper presents a unified benchmark that quantifies LLM overthinking and underthinking using AUC_OAA and a combined F1 score.

- It employs rigorous dataset generation with constrained synthetic pipelines and code-based verification to evaluate both simple and complex tasks.

- Experimental results reveal that current LLMs trade efficiency for deep reasoning, highlighting the need for adaptive routing and improved prompt strategies.

OptimalThinkingBench: A Unified Benchmark for Evaluating Overthinking and Underthinking in LLMs

Introduction

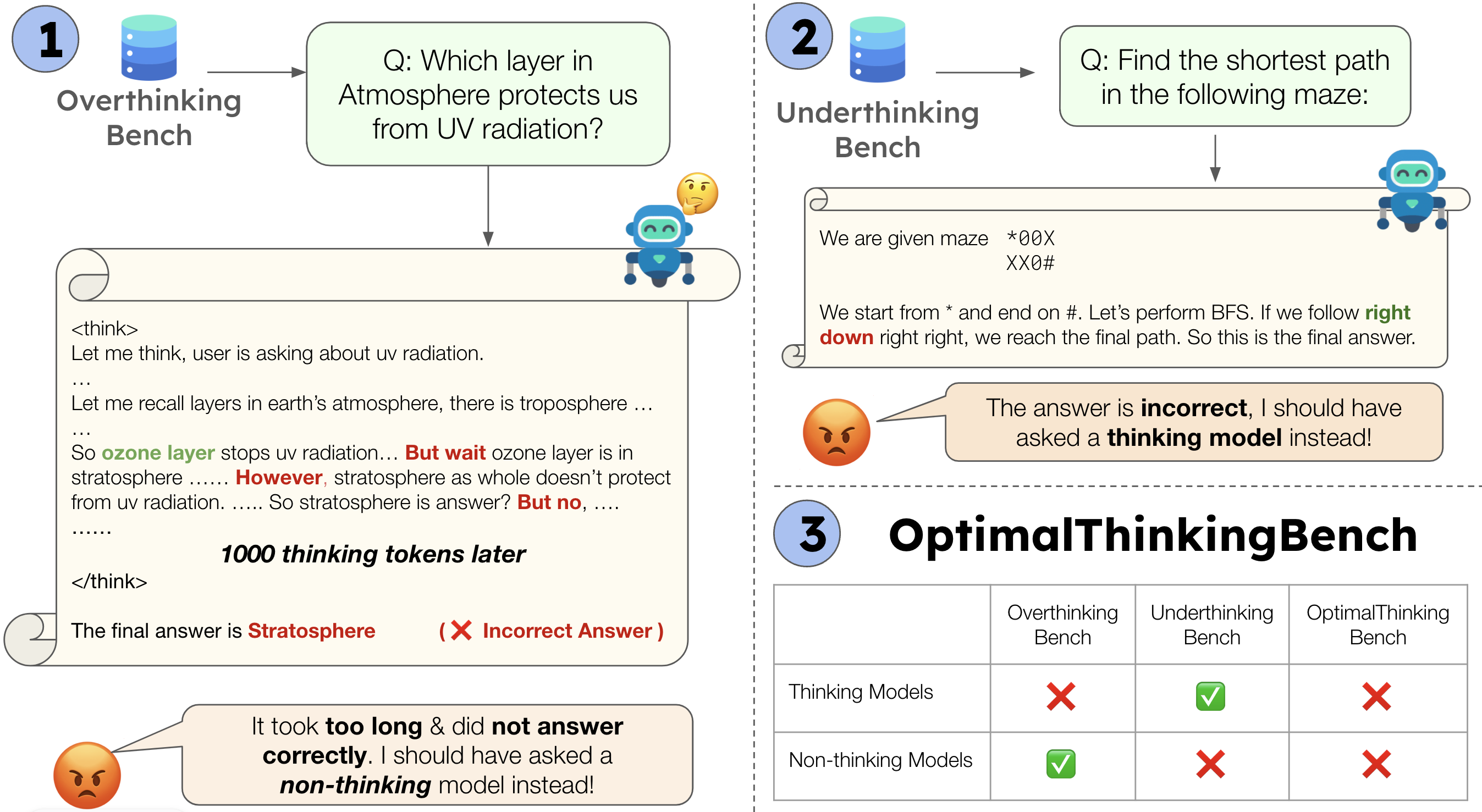

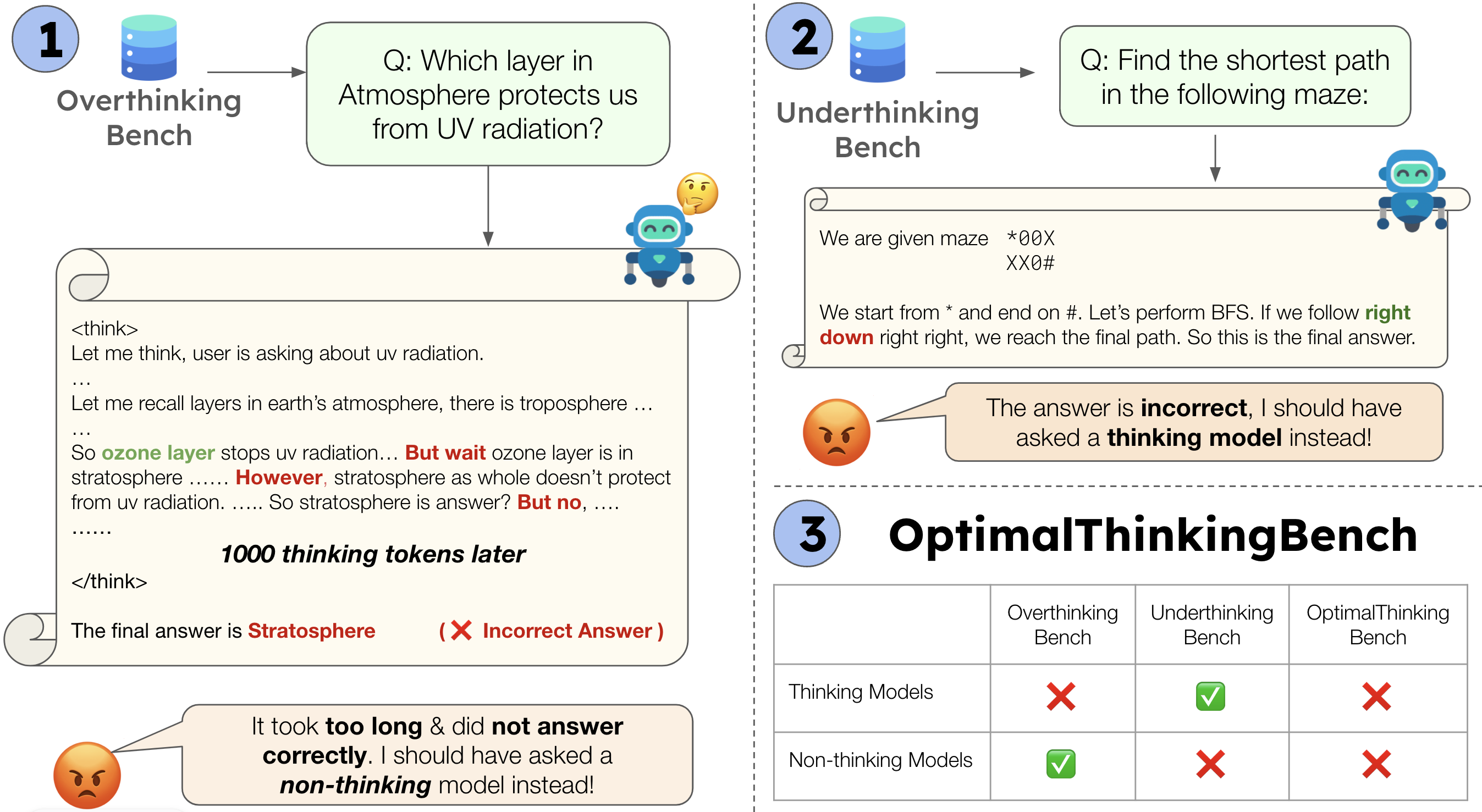

OptimalThinkingBench introduces a comprehensive framework for evaluating the dual challenges of overthinking and underthinking in LLMs. Overthinking refers to the unnecessary generation of lengthy reasoning traces on simple queries, often leading to increased latency and cost without accuracy gains, and sometimes even performance degradation. Underthinking, conversely, is the failure to engage in sufficient reasoning on complex tasks, resulting in suboptimal performance. The benchmark is designed to assess whether LLMs can adaptively allocate computational effort according to task complexity, thereby optimizing both efficiency and accuracy.

Figure 1: A unified benchmark to evaluate overthinking and underthinking in LLMs. OverthinkingBench targets simple queries, while UnderthinkingBench focuses on reasoning problems requiring deeper computation.

Benchmark Construction

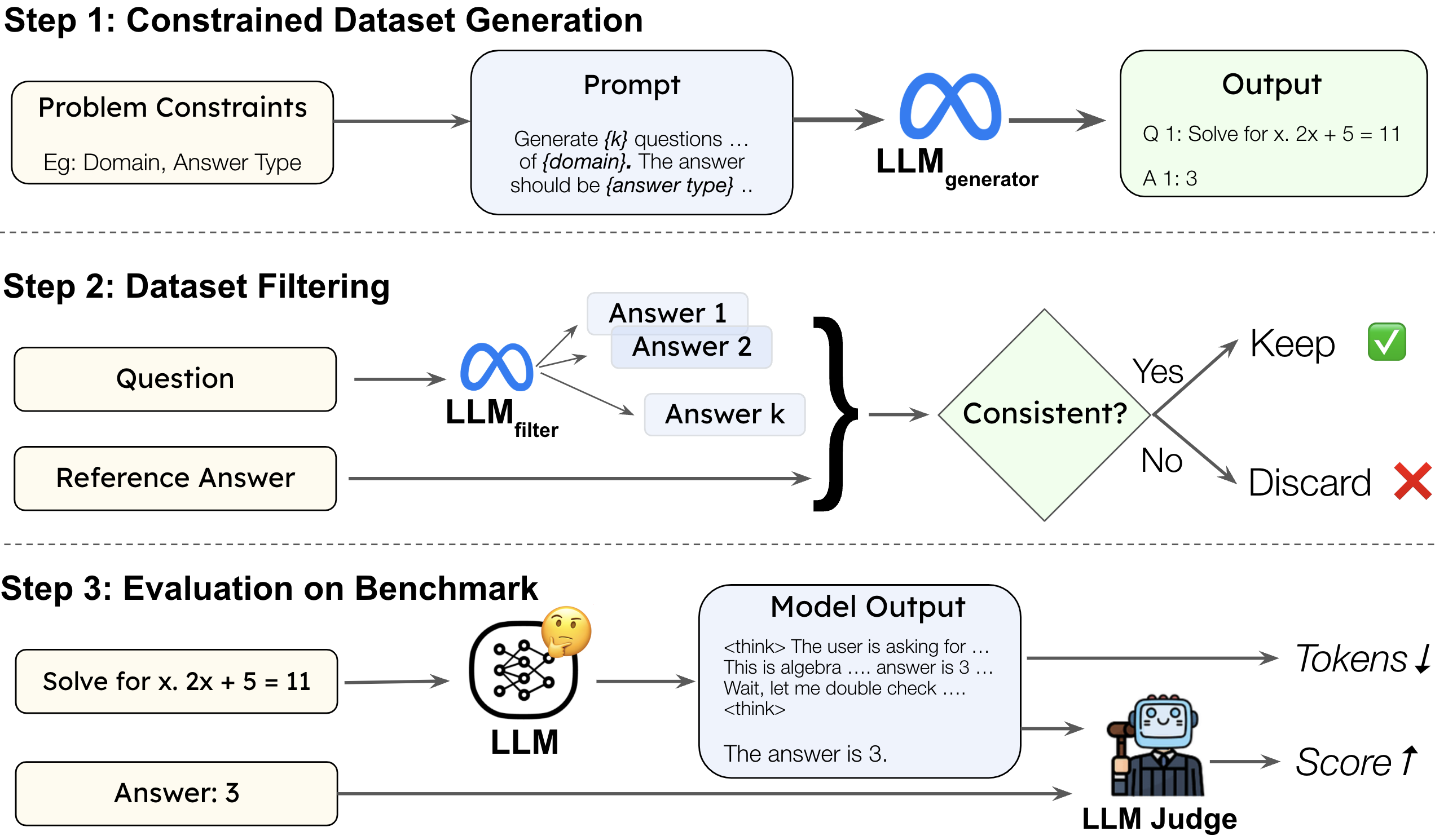

OverthinkingBench

OverthinkingBench is constructed to quantify excessive reasoning on simple queries. The dataset is generated via a constrained, synthetic pipeline that ensures broad domain coverage (72 domains) and four answer types (numeric, MCQ, short answer, open-ended). The generation process involves:

Evaluation is based on both answer correctness (using an LLM-as-a-Judge) and the number of "thinking tokens" generated. The Overthinking-Adjusted Accuracy (OAA) metric measures accuracy under a thinking-token budget, and the area under the OAA curve across budgets (AUC_OAA) serves as the primary efficiency-adjusted metric.
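The budgeted-accuracy idea can be made concrete with a minimal sketch. It assumes that an answer counts toward OAA only if it is correct and its thinking-token count is within the budget, and that AUC_OAA is approximated by averaging OAA over every integer budget from 0 to a maximum. The function names, the `<=` comparison, and the discrete averaging are illustrative assumptions, not the paper's exact definition.

```python
from typing import Sequence


def oaa_at_budget(correct: Sequence[bool], think_tokens: Sequence[int], budget: int) -> float:
    """Fraction of questions answered correctly with at most `budget` thinking tokens.

    Assumption: a response over budget counts as wrong, regardless of its answer.
    """
    hits = sum(1 for c, t in zip(correct, think_tokens) if c and t <= budget)
    return hits / len(correct)


def auc_oaa(correct: Sequence[bool], think_tokens: Sequence[int], max_budget: int) -> float:
    """Mean OAA over integer budgets 0..max_budget (a discrete, normalized area)."""
    total = sum(oaa_at_budget(correct, think_tokens, b) for b in range(max_budget + 1))
    return total / (max_budget + 1)
```

Under these assumptions, a model that answers every question correctly with zero thinking tokens scores 1.0, and each extra thinking token on a correct answer lowers the score slightly, which is the behavior an efficiency-adjusted metric needs on simple queries.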

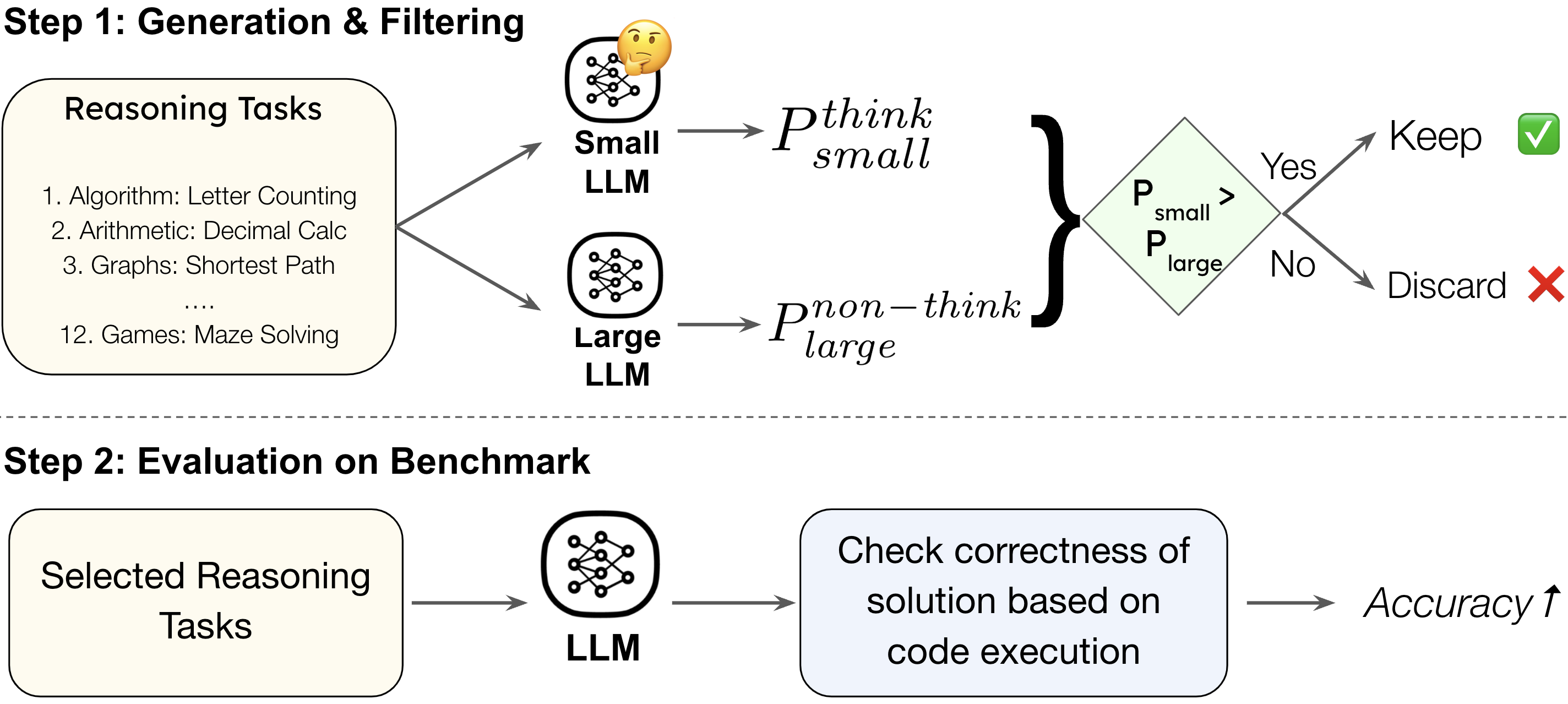

UnderthinkingBench

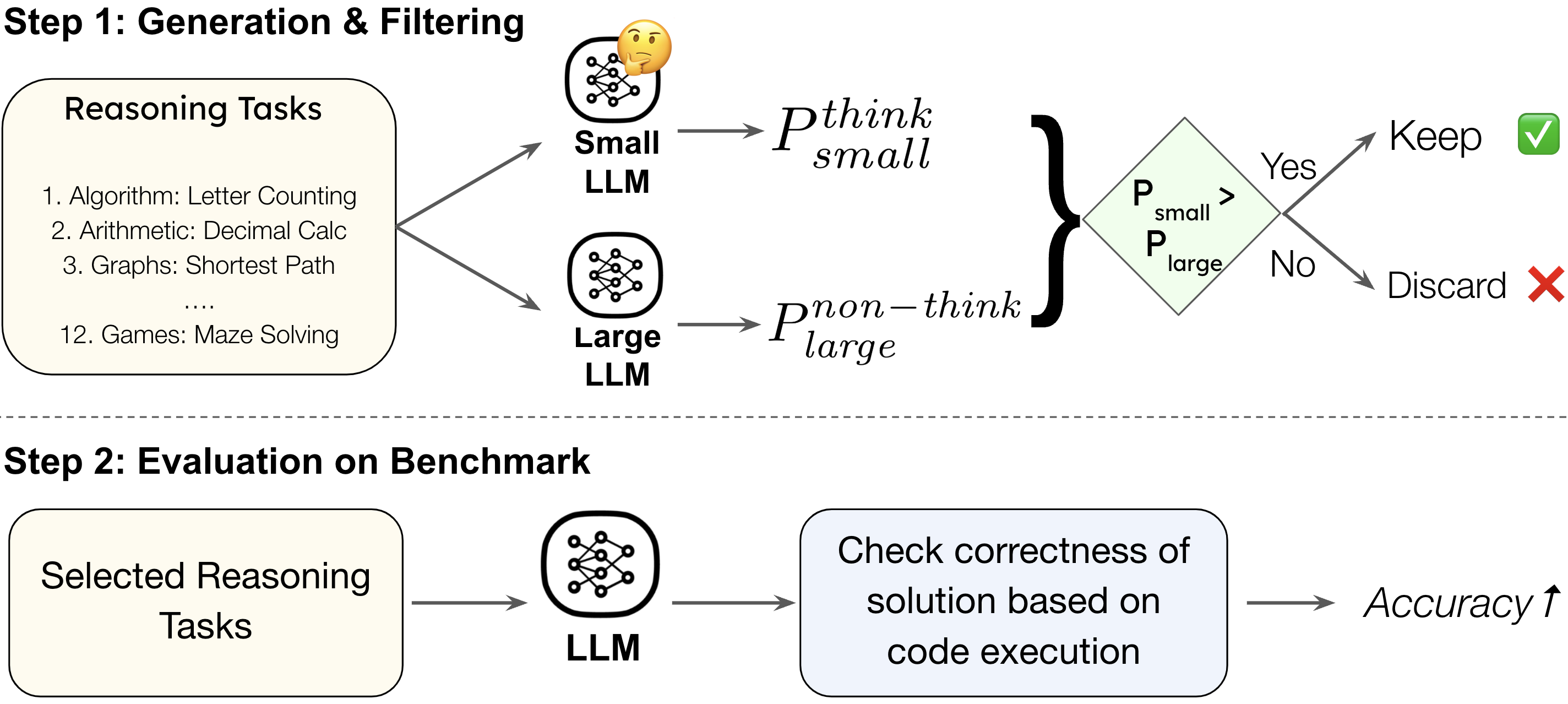

UnderthinkingBench targets complex reasoning tasks where step-by-step thinking is essential. Tasks are selected from Reasoning Gym, retaining only those where a small thinking model outperforms a large non-thinking model by a significant margin. The final set includes 11 reasoning types across six categories (games, algorithms, graphs, arithmetic, geometry, logic), with 550 procedurally generated questions.

Figure 3: UnderthinkingBench construction and evaluation, leveraging performance gaps between thinking and non-thinking models to select tasks.
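The selection rule described above amounts to filtering tasks by an accuracy gap. The sketch below assumes per-task accuracies are already measured for both models. The `min_gap` threshold and the task names in the usage example are hypothetical, since the margin used by the benchmark is not stated here.

```python
from typing import Dict, List


def select_tasks(
    thinking_acc: Dict[str, float],
    non_thinking_acc: Dict[str, float],
    min_gap: float,
) -> List[str]:
    """Keep tasks where the small thinking model beats the large non-thinking model by at least `min_gap`."""
    return sorted(
        task
        for task, acc in thinking_acc.items()
        if task in non_thinking_acc and acc - non_thinking_acc[task] >= min_gap
    )


# Toy, made-up accuracies purely for illustration.
small_thinking = {"task_a": 0.8, "task_b": 0.55, "task_c": 0.9}
large_non_thinking = {"task_a": 0.3, "task_b": 0.5, "task_c": 0.95}
kept = select_tasks(small_thinking, large_non_thinking, min_gap=0.2)
```

Only tasks that clearly reward step-by-step reasoning survive the filter, so a non-thinking model cannot score well on the resulting set simply by being large.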

Evaluation uses programmatic verifiers that check the correctness of model outputs via code execution. Unlike OverthinkingBench, no token budget is applied.

Unified Metric

OptimalThinkingBench combines AUC_OAA from OverthinkingBench and accuracy from UnderthinkingBench into a single F1 score, so a model must perform well on both axes to achieve a high overall score.
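A short sketch shows why an F1-style combination penalizes one-sided models. It assumes that "F1" here means the usual harmonic mean of the two scores, each in the range 0 to 1. The function name is illustrative.

```python
def optimal_thinking_f1(auc_oaa: float, underthinking_acc: float) -> float:
    """Harmonic mean of the OverthinkingBench and UnderthinkingBench scores."""
    denom = auc_oaa + underthinking_acc
    if denom == 0:
        return 0.0  # both scores are zero; avoid division by zero
    return 2 * auc_oaa * underthinking_acc / denom
```

With this definition, a model scoring 0.9 on one benchmark and 0.1 on the other gets about 0.18, well below the arithmetic mean of 0.5, while a balanced 0.5 on both yields 0.5.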

Experimental Results

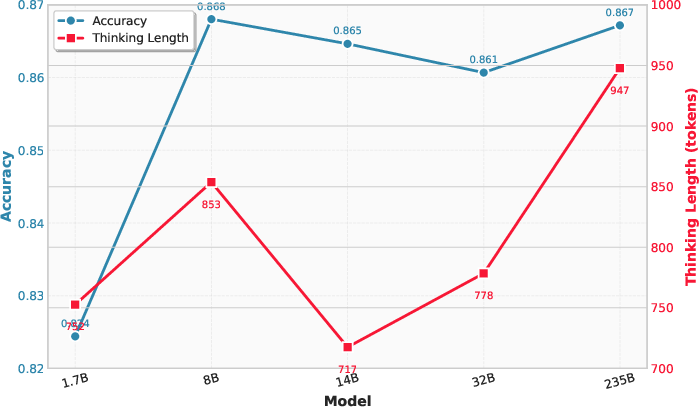

A comprehensive evaluation of 33 models (open and closed, thinking, non-thinking, and hybrid) reveals that no current model achieves an optimal balance between efficiency and reasoning capability. Notably:

Methods for Improving Optimal Thinking

Several strategies were evaluated to encourage optimal thinking:

- Efficient Reasoning Methods: Techniques such as length-based reward shaping, model merging, and auxiliary verification training reduce token usage by up to 68% but often degrade performance on complex reasoning tasks, resulting in limited overall F1 improvements.

- Routing Approaches: A trained router that selects between thinking and non-thinking modes based on question difficulty improves performance over static modes but remains significantly below the oracle router, which has access to ground-truth task complexity.

- Prompt Engineering: Explicitly instructing models to "not overthink" reduces token usage on simple queries without harming accuracy, while generic "think step-by-step" prompts exacerbate overthinking and can reduce accuracy on simple tasks.
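The gap between a learned router and the oracle router can be illustrated with a toy sketch. Everything here is hypothetical: the `Question` type, the word-count heuristic standing in for a trained router, and the agreement measure are assumptions for illustration, not the routing method evaluated in the paper.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Question:
    text: str
    is_complex: bool  # ground-truth complexity, visible only to the oracle


def oracle_router(q: Question) -> str:
    """Picks the mode using ground-truth complexity."""
    return "thinking" if q.is_complex else "non_thinking"


def length_router(q: Question, threshold: int = 12) -> str:
    """Hypothetical stand-in for a trained router: guesses difficulty from word count."""
    return "thinking" if len(q.text.split()) > threshold else "non_thinking"


def agreement_with_oracle(router: Callable[[Question], str], questions: List[Question]) -> float:
    """Fraction of questions on which `router` picks the same mode as the oracle."""
    matches = sum(router(q) == oracle_router(q) for q in questions)
    return matches / len(questions)


questions = [
    Question("What is 2 + 2?", is_complex=False),
    Question("Solve this 9x9 sudoku.", is_complex=True),
]
score = agreement_with_oracle(length_router, questions)
```

The short but hard second question is misrouted to the non-thinking mode, which shows how a router that relies on surface features can fall short of the oracle.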

Analysis of Thinking Behavior

Domain and Answer Type Effects

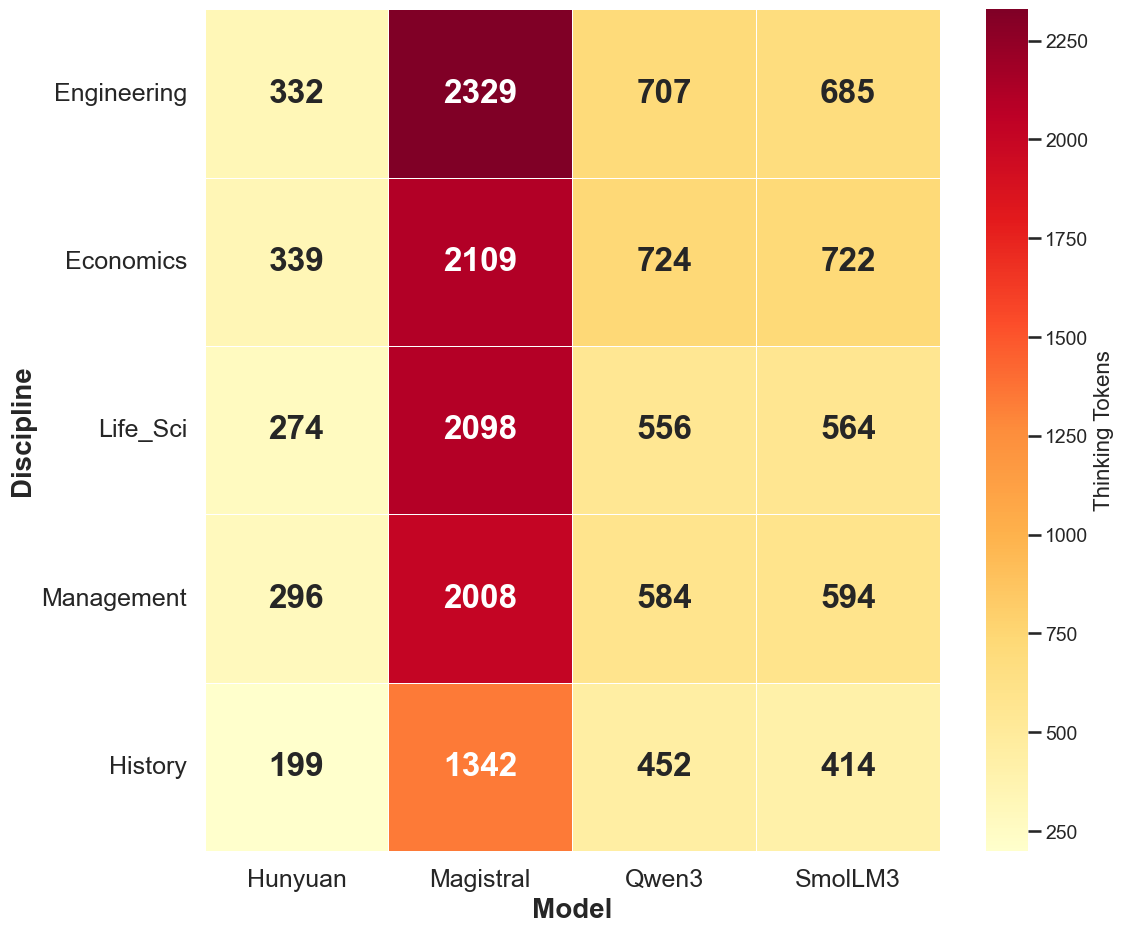

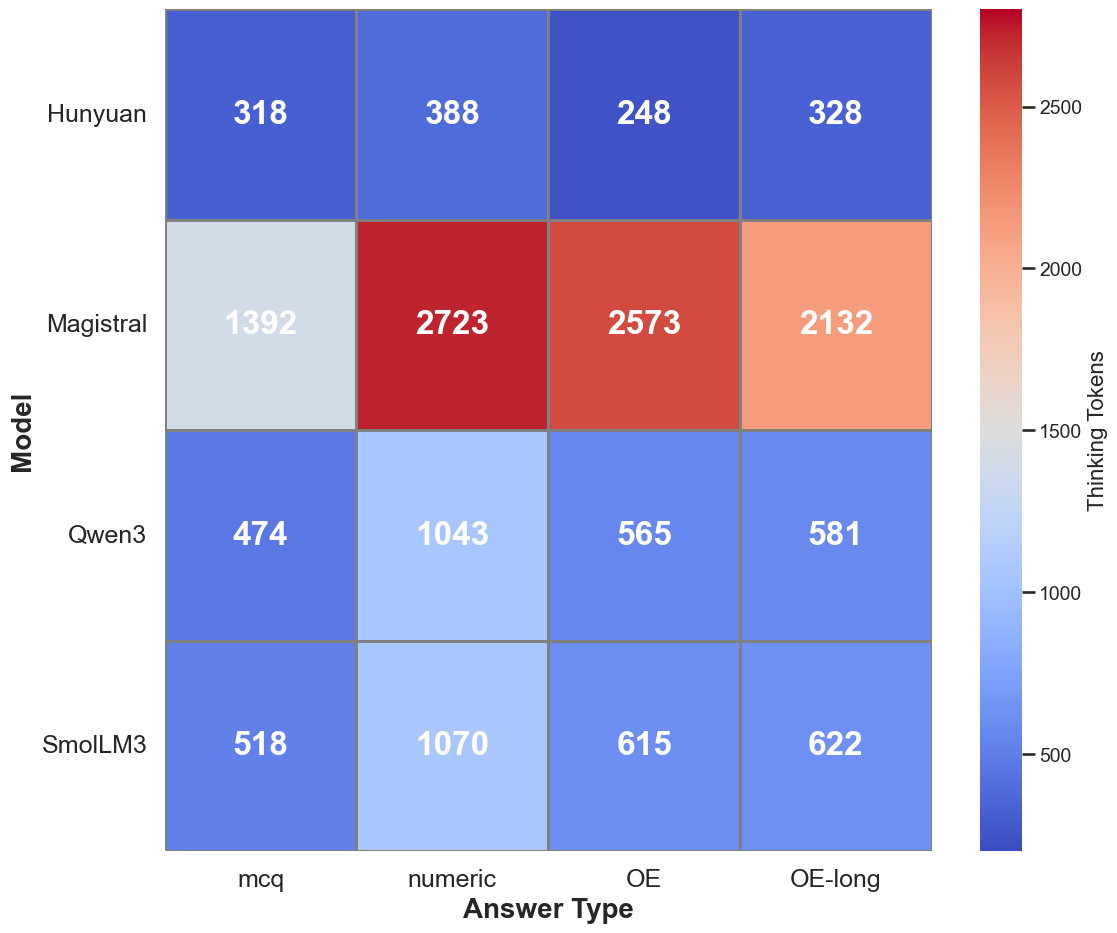

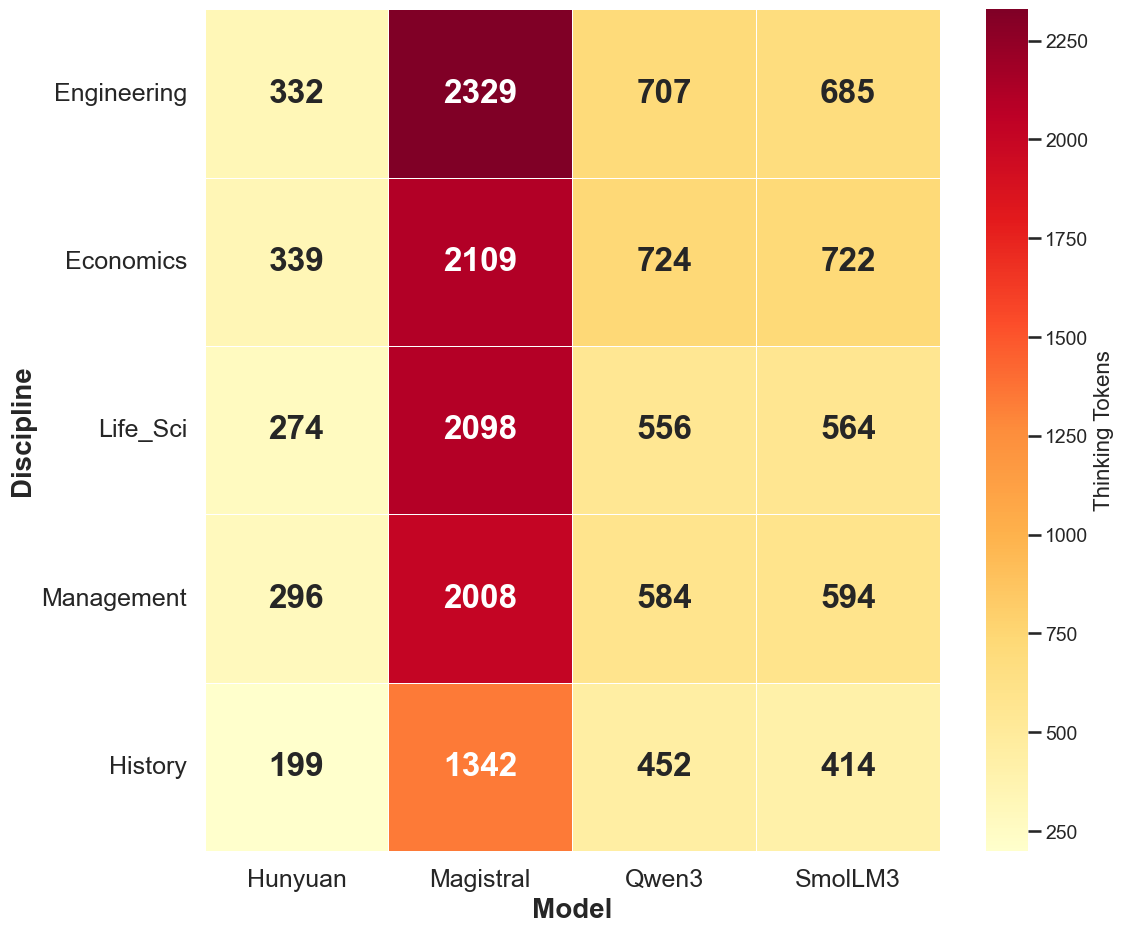

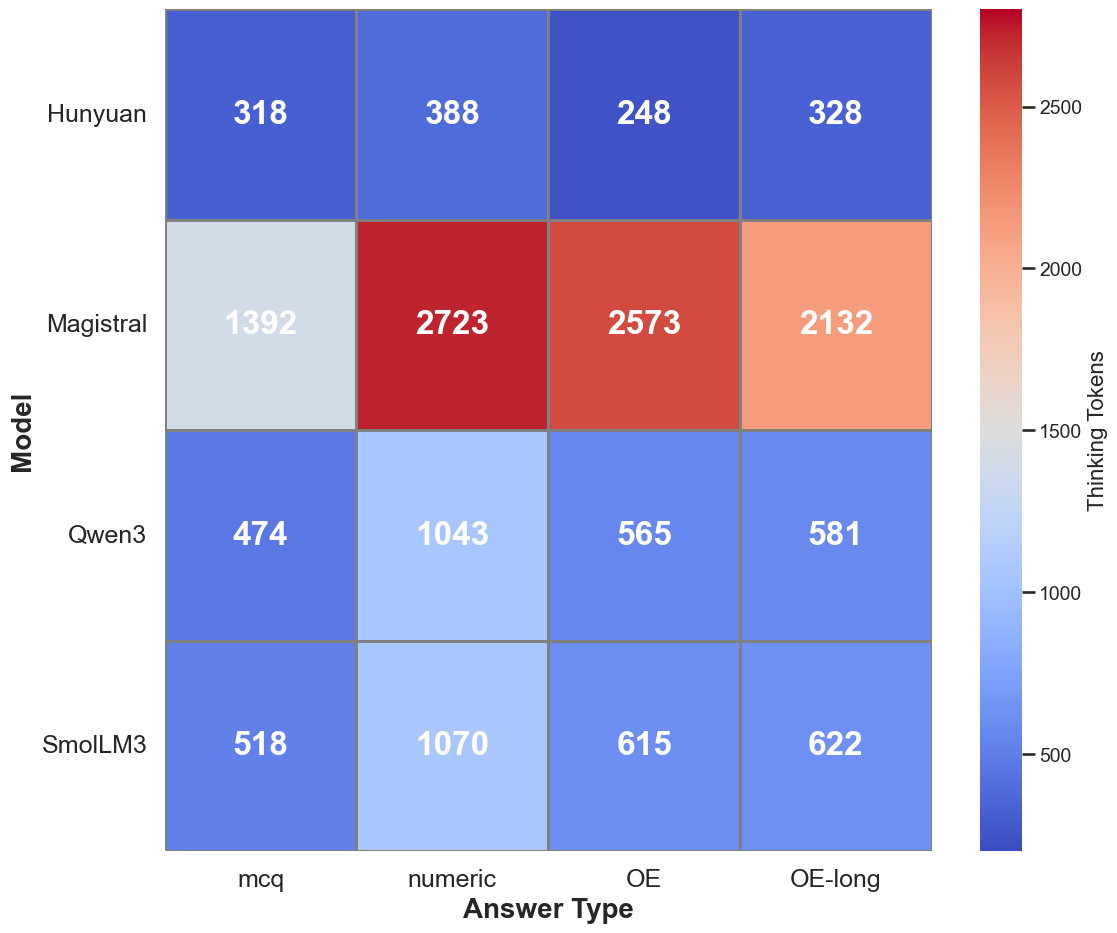

Analysis across domains and answer types reveals that:

- STEM domains elicit more thinking tokens, but this does not correlate with higher accuracy or greater need for reasoning.

- Numeric questions trigger more extensive reasoning, likely due to post-training biases, even though thinking models reach accuracy similar to that of non-thinking models on them.

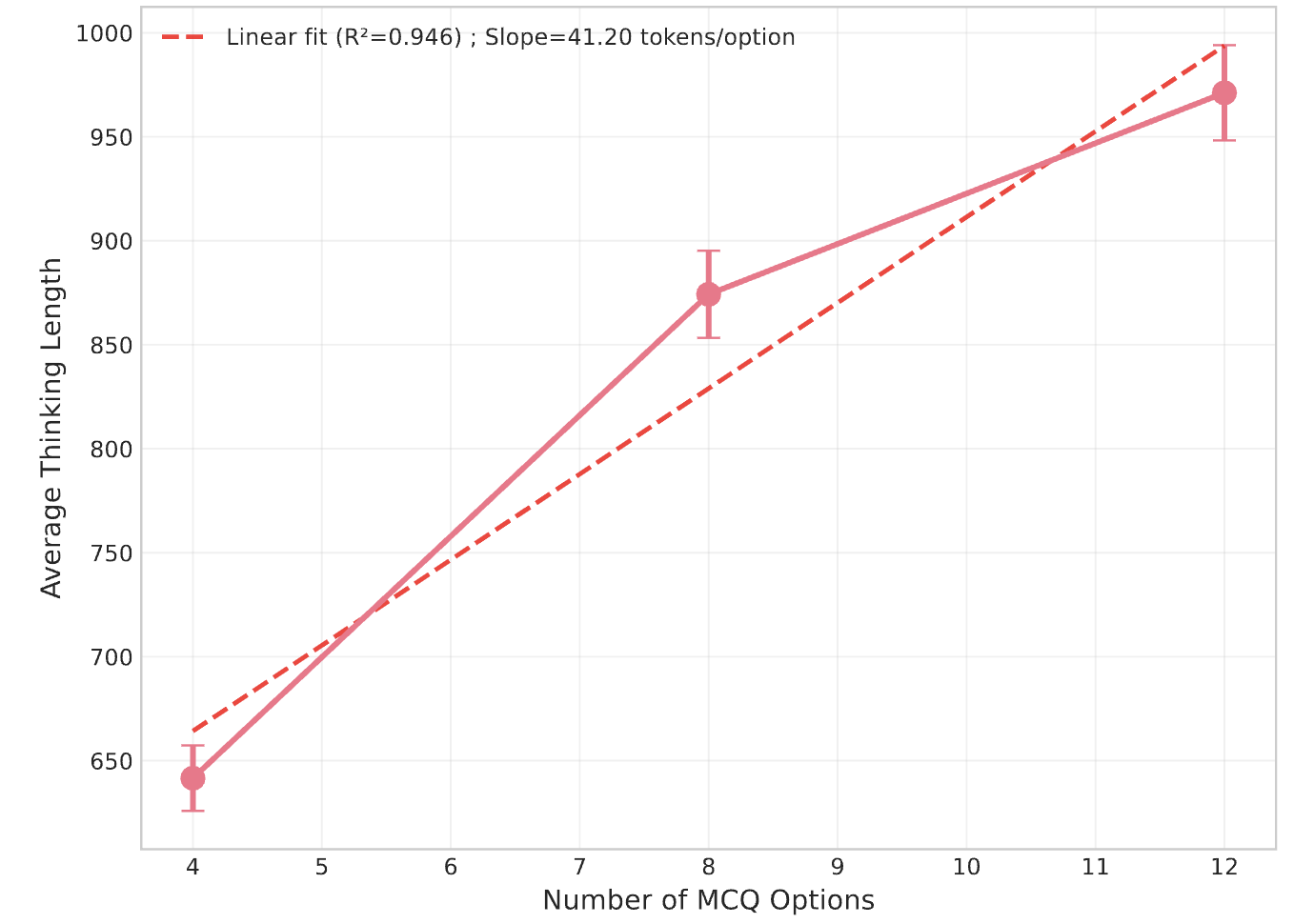

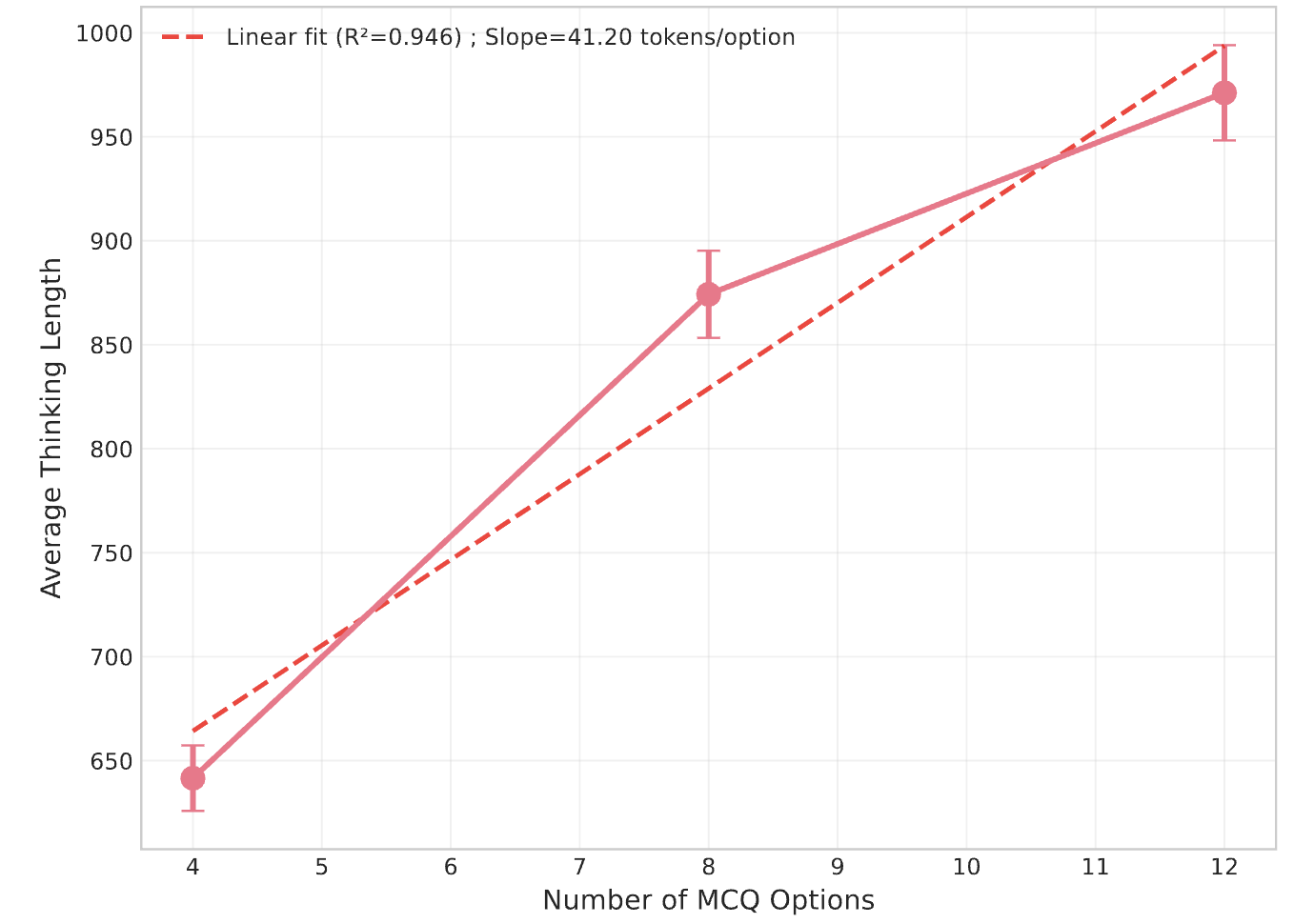

- MCQ distractors: Increasing the number of irrelevant options in MCQs leads to a near-linear increase in thinking tokens, indicating that models are sensitive to surface-level complexity even when it is spurious.

Figure 5: Thinking token usage varies by problem domain, with STEM domains eliciting more tokens.

Figure 6: Overthinking increases almost linearly with the number of MCQ options, despite most being distractors.

Qualitative Failure Modes

Qualitative analysis demonstrates that overthinking can lead to performance degradation on simple queries due to self-doubt and conflicting reasoning, while underthinking manifests as premature conclusions and lack of verification on complex tasks. In both cases, the inability to adaptively modulate reasoning depth is detrimental.

Implications and Future Directions

OptimalThinkingBench exposes a fundamental limitation in current LLMs: the lack of adaptive reasoning allocation. The benchmark's unified metric and dynamic, synthetic construction enable robust, contamination-resistant evaluation as models evolve. The results indicate that:

- The trade-off between efficiency and performance is not resolved by current architectures or training paradigms.

- Routing and prompt-based interventions offer partial mitigation but are insufficient for general optimality.

- Scaling model size increases verbosity without clear accuracy gains, suggesting diminishing returns for brute-force scaling in the context of reasoning efficiency.

Future research should focus on:

- Unified architectures capable of dynamic reasoning allocation, possibly via meta-learning or adaptive computation modules.

- Improved routing mechanisms that can reliably infer task complexity and select appropriate reasoning strategies.

- Training objectives that explicitly penalize unnecessary computation and reward adaptive efficiency across diverse domains and answer types.

Conclusion

OptimalThinkingBench provides a rigorous, unified framework for evaluating and incentivizing optimal reasoning in LLMs. The benchmark reveals that current models are unable to simultaneously achieve high efficiency and high performance across the spectrum of query complexity. While several methods offer incremental improvements, a significant gap remains, underscoring the need for new approaches to adaptive reasoning in LLMs. The benchmark's extensibility and dynamic nature position it as a critical tool for tracking progress in this area as models and methods continue to advance.