Agent Lightning: Train ANY AI Agents with Reinforcement Learning (2508.03680v1)

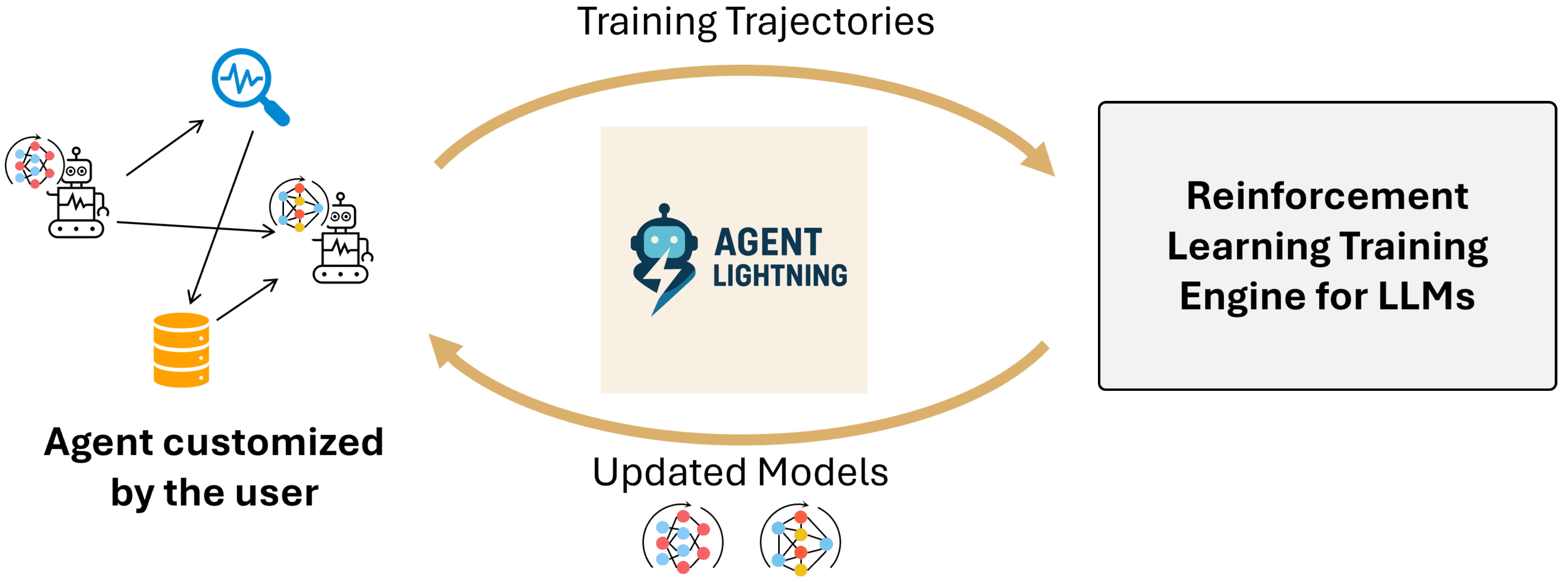

Abstract: We present Agent Lightning, a flexible and extensible framework that enables Reinforcement Learning (RL)-based training of LLMs for any AI agent. Unlike existing methods that tightly couple RL training with the agent or rely on sequence concatenation with masking, Agent Lightning achieves complete decoupling between agent execution and training, allowing seamless integration with existing agents developed in diverse ways (e.g., using frameworks like LangChain, OpenAI Agents SDK, AutoGen, or building from scratch) with almost ZERO code modifications. By formulating agent execution as a Markov decision process, we define a unified data interface and propose a hierarchical RL algorithm, LightningRL, which contains a credit assignment module that allows us to decompose trajectories generated by ANY agent into training transitions. This enables RL to handle complex interaction logic, such as multi-agent scenarios and dynamic workflows. For the system design, we introduce a Training-Agent Disaggregation architecture and bring agent observability frameworks into the agent runtime, providing a standardized agent finetuning interface. Experiments across text-to-SQL, retrieval-augmented generation, and math tool-use tasks demonstrate stable, continuous improvements, showcasing the framework's potential for real-world agent training and deployment.

Summary

- The paper introduces a decoupled RL framework that abstracts agent execution as a Markov Decision Process to optimize diverse multi-turn AI workflows.

- It presents the hierarchical LightningRL algorithm, which decomposes trajectories into individual transitions for policy updates and assigns credit across the LLM calls within each trajectory.

- Agent Lightning decouples training from agent logic via a disaggregated architecture, achieving scalable optimization across multiple agent tasks.

Agent Lightning: A Unified RL Framework for Training Arbitrary AI Agents

Motivation and Problem Statement

The proliferation of LLM-powered agents for complex tasks, ranging from code generation to tool-augmented reasoning, has exposed significant limitations in current training paradigms. While prompt engineering and supervised fine-tuning offer incremental improvements, they fail to address the dynamic, multi-turn, and tool-integrated workflows characteristic of real-world agents. Existing RL frameworks are either tightly coupled to agent logic or rely on brittle sequence concatenation and masking strategies, which impedes scalability and generalization across heterogeneous agent ecosystems. Agent Lightning introduces a principled solution: a fully decoupled RL training framework for arbitrary agents, enabling seamless integration with diverse agent implementations and facilitating robust, scalable optimization.

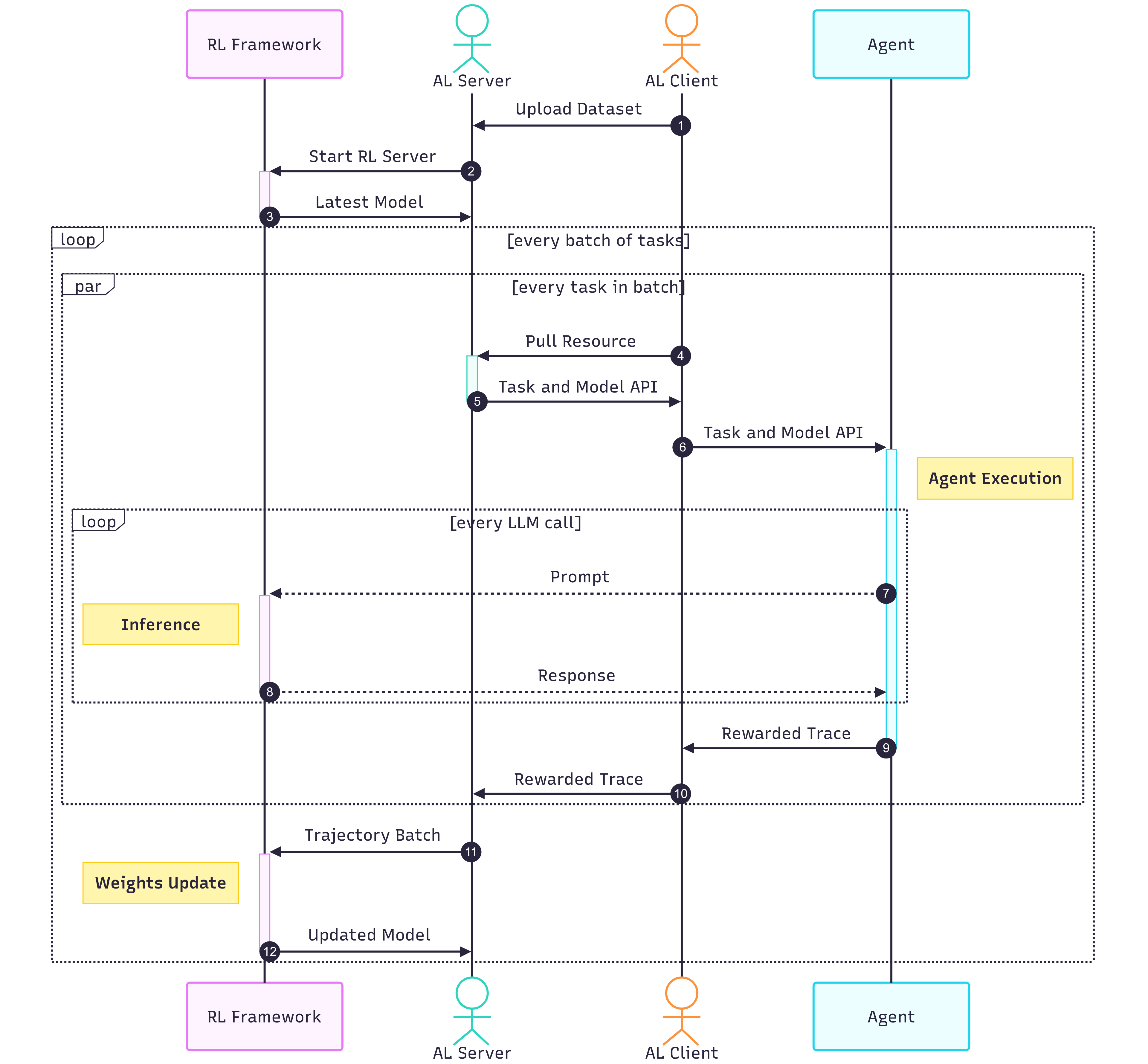

Figure 1: Overview of Agent Lightning, a flexible and extensible framework that enables reinforcement learning of LLMs for ANY AI agents.

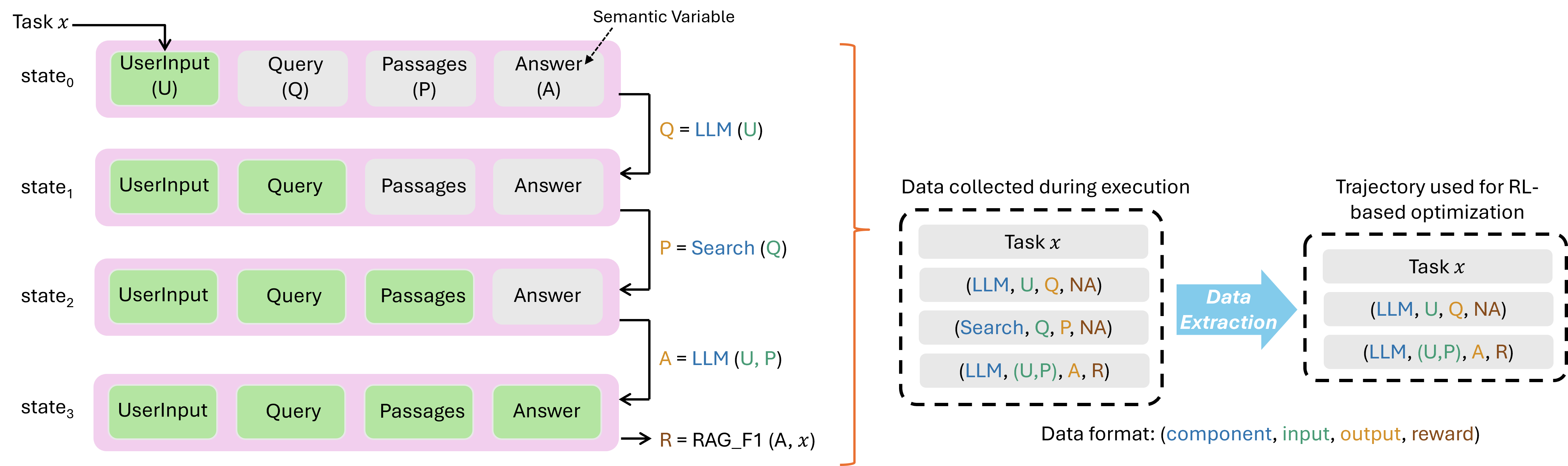

Unified Data Interface and MDP Formulation

Agent Lightning abstracts agent execution as a Markov Decision Process (MDP), where the state is a snapshot of the agent's semantic variables and each action is the output the policy LLM generates in a single invocation; tool calls and other component invocations advance the state between actions. The framework defines a unified data interface that systematically captures transitions, each comprising the current state (the LLM's input), the action (the LLM's output), and the reward, irrespective of agent architecture or orchestration logic. This abstraction enables RL algorithms to operate on agent trajectories without requiring knowledge of the underlying agent workflow, supporting arbitrary multi-agent, multi-tool, and multi-turn scenarios.

Figure 2: Unified data interface: agent execution flow (left) and trajectory collection (right), capturing all relevant transitions for RL-based optimization.

The interface supports both intermediate and terminal rewards, facilitating granular credit assignment and mitigating the sparse reward problem endemic to agentic RL. By focusing on semantic variables and component invocations, Agent Lightning avoids the need for explicit DAG parsing or custom trace processing, streamlining data extraction for RL.
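A minimal sketch of what such an interface might look like in Python. The class and field names (`Transition`, `Trajectory`, `agent_name`, `record`) are hypothetical and are not Agent Lightning's actual API; the point is only that any run, however it was orchestrated, reduces to an ordered list of (input, output, reward) records plus a terminal reward.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Transition:
    """One LLM invocation: what the model saw, what it produced, and an optional reward."""
    agent_name: str                 # which agent/role issued the call (hypothetical field)
    state_input: str                # prompt assembled from the current semantic variables
    action_output: str              # text generated by the policy LLM
    reward: Optional[float] = None  # intermediate reward, if one was observed


@dataclass
class Trajectory:
    """Ordered transitions from one agent run plus a terminal reward."""
    task_id: str
    transitions: list[Transition] = field(default_factory=list)
    final_reward: float = 0.0

    def record(self, agent_name: str, prompt: str, response: str,
               reward: Optional[float] = None) -> None:
        self.transitions.append(Transition(agent_name, prompt, response, reward))


# Example: a two-step text-to-SQL run (agent names and rewards are illustrative).
traj = Trajectory(task_id="q-001")
traj.record("sql_writer", "Question: ... Schema: ...", "SELECT name FROM users;")
traj.record("sql_checker", "Check this query: SELECT name FROM users;", "CORRECT", reward=0.1)
traj.final_reward = 1.0
```

Note that nothing in these records refers to LangChain, AutoGen, or any other orchestration layer, which is what lets a trainer consume them without knowing the workflow.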

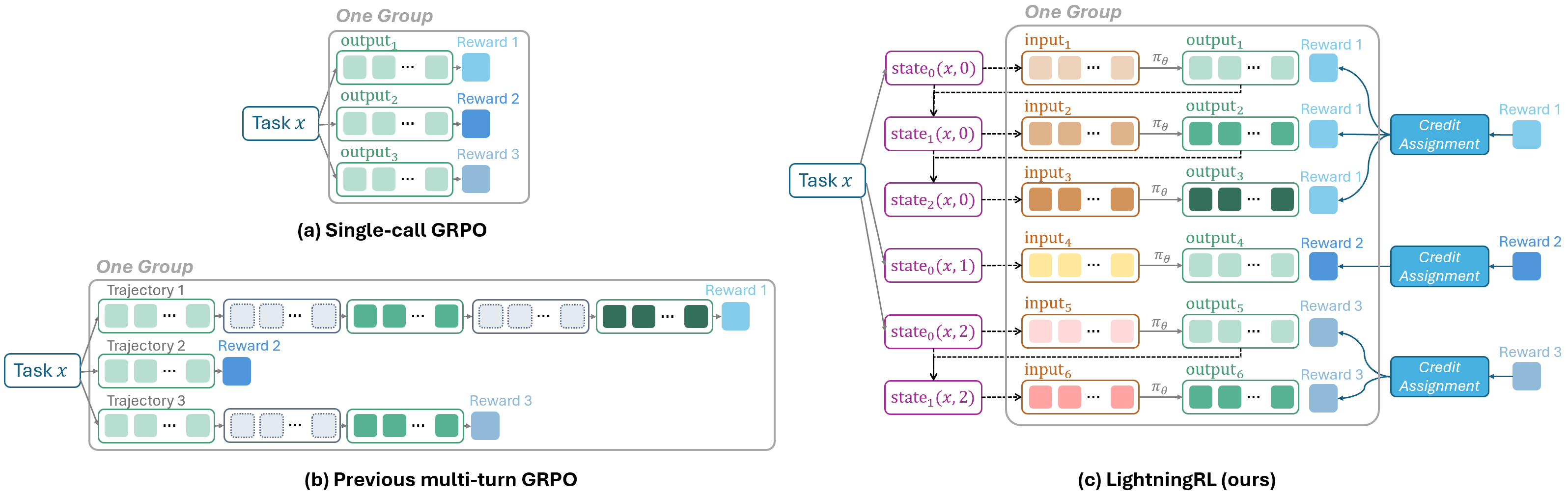

Hierarchical RL Algorithm: LightningRL

To bridge the gap between single-turn RL algorithms and complex agent workflows, Agent Lightning introduces LightningRL—a hierarchical RL algorithm that decomposes agent trajectories into transitions and applies credit assignment across actions and tokens. LightningRL is compatible with value-free methods (e.g., GRPO, REINFORCE++) and standard PPO, enabling efficient advantage estimation and policy updates without requiring custom masking or sequence concatenation.

Figure 3: LightningRL algorithm: (a) single-call GRPO, (b) previous multi-turn GRPO with masking, (c) LightningRL decomposing trajectories into transitions for grouped advantage estimation.
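The grouped advantage estimation in panel (c) can be sketched as follows. This assumes GRPO-style normalization of final rewards within each task's group of rollouts and the simplest possible credit assignment rule, in which every transition inherits its trajectory's advantage; the function name and the `(task_id, final_reward, n_transitions)` input format are illustrative, not the framework's API.

```python
import statistics
from collections import defaultdict


def grouped_transition_advantages(rollouts, eps=1e-6):
    """rollouts: list of (task_id, final_reward, n_transitions) tuples.

    Returns one list of per-transition advantages per rollout, in input order.
    """
    # Group final rewards by task, as GRPO groups responses to the same prompt.
    by_task = defaultdict(list)
    for task_id, reward, _ in rollouts:
        by_task[task_id].append(reward)

    # Per-task mean and population standard deviation of the final rewards.
    stats = {t: (statistics.fmean(rs), statistics.pstdev(rs)) for t, rs in by_task.items()}

    advantages = []
    for task_id, reward, n in rollouts:
        mean, std = stats[task_id]
        adv = (reward - mean) / (std + eps)  # eps avoids division by zero for uniform groups
        # Assumed credit assignment: every transition in the trajectory gets the same advantage.
        advantages.append([adv] * n)
    return advantages


# Two rollouts of task q1 (3 and 2 LLM calls) and one rollout of task q2.
advs = grouped_transition_advantages([("q1", 1.0, 3), ("q1", 0.0, 2), ("q2", 0.5, 1)])
```

Because each transition then carries its own advantage, it can be fed to a single-call GRPO or PPO update as an independent sample, without concatenating turns or masking.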

LightningRL's transition-based modeling offers several advantages:

- Flexible context construction: Each transition can encode arbitrary context, supporting modular and role-specific prompts.

- Selective agent optimization: Enables targeted training of specific agents within multi-agent systems.

- Scalability: Avoids the ever-growing context length that results from concatenating multi-turn trajectories, and supports batch accumulation for efficient updates.

- Robustness: Eliminates positional encoding disruptions and implementation complexity associated with masking.
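To illustrate selective agent optimization and the bounded context length, here is a sketch that converts one trajectory into independent training samples while skipping calls from agents that are not being trained. The tuple layout, the agent names, and the `build_samples` helper are hypothetical.

```python
def build_samples(trajectory, advantages, train_agents):
    """Turn one trajectory into independent (prompt, response, advantage) samples.

    trajectory: list of (agent_name, prompt, response) tuples in call order.
    advantages: per-transition advantages, same length as trajectory.
    train_agents: set of agent names whose LLM calls should be optimized.
    """
    samples = []
    for (agent, prompt, response), adv in zip(trajectory, advantages):
        if agent not in train_agents:
            continue  # calls from frozen agents still ran, but are not trained on
        # Each call is its own sample: no concatenation of earlier turns and no loss mask.
        samples.append({"prompt": prompt, "response": response, "advantage": adv})
    return samples


traj = [
    ("sql_writer", "Write SQL for: how many users?", "SELECT COUNT(*) FROM users;"),
    ("sql_checker", "Check: SELECT COUNT(*) FROM users;", "OK"),
    ("sql_rewriter", "Rewrite if needed: ...", "SELECT COUNT(*) FROM users;"),
]
samples = build_samples(traj, [0.8, 0.8, 0.8], train_agents={"sql_writer", "sql_rewriter"})

# Context per sample is bounded by a single call, not by the whole concatenated episode.
longest = max(len(s["prompt"]) + len(s["response"]) for s in samples)
concatenated = sum(len(p) + len(r) for _, p, r in traj)
```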

The credit assignment module is extensible, allowing for future integration of learned value functions or heuristic strategies for more nuanced reward distribution.
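Since credit assignment strategies differ only in how they map rewards onto transitions, the module can be modeled as a swappable function. The sketch below shows two hypothetical strategies: uniform terminal credit, and a discounted return-to-go that also uses intermediate rewards. The latter is a standard RL heuristic chosen for illustration, not something the paper specifies.

```python
from typing import Callable, List, Sequence

# A strategy maps per-transition intermediate rewards (0.0 where none was observed)
# and the final reward to one return per transition.
CreditFn = Callable[[Sequence[float], float], List[float]]


def uniform_credit(step_rewards: Sequence[float], final_reward: float) -> List[float]:
    """Every transition receives the terminal reward; intermediate rewards are ignored."""
    return [final_reward] * len(step_rewards)


def discounted_credit(gamma: float) -> CreditFn:
    """Discounted return-to-go, with the final reward credited to the last transition."""
    def assign(step_rewards: Sequence[float], final_reward: float) -> List[float]:
        if not step_rewards:
            return []
        rewards = list(step_rewards)
        rewards[-1] += final_reward  # terminal reward arrives with the last transition
        returns = [0.0] * len(rewards)
        running = 0.0
        for i in reversed(range(len(rewards))):
            running = rewards[i] + gamma * running
            returns[i] = running
        return returns
    return assign


strategies = {"uniform": uniform_credit, "discounted": discounted_credit(0.9)}
step_rewards = [0.0, 0.1, 0.0]  # e.g. a small bonus mined for the second call
```

Swapping strategies then means changing a dictionary key rather than touching agent code or the policy update.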

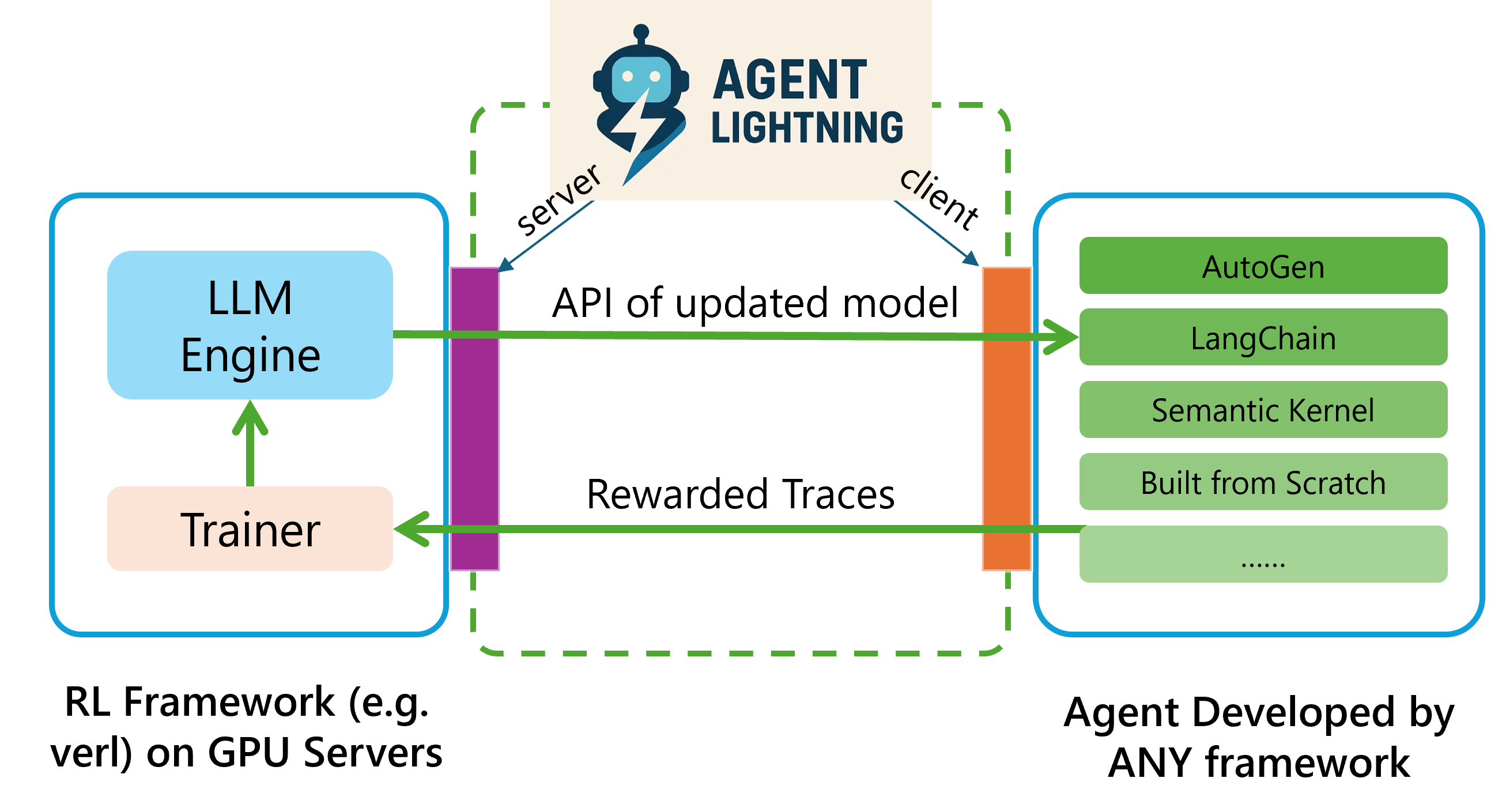

System Architecture: Training-Agent Disaggregation

Agent Lightning's infrastructure is built on a Training-Agent Disaggregation architecture, comprising a Lightning Server (RL trainer and model API) and Lightning Client (agent runtime and data collector). This design achieves mutual independence between training and agent execution, enabling agent-agnostic training and trainer-agnostic agents. The server exposes an OpenAI-like API for model inference, while the client manages agent execution, data capture, error handling, and reward computation.

Figure 4: Training-Agent Disaggregation architecture, decoupling RL training from agent execution for scalable, flexible optimization.
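A stdlib-only sketch of the decoupling idea: the agent is handed a plain callable that stands in for the server's model endpoint, and a thin client-side wrapper records each call as a transition. `TracingLLM`, `my_agent`, and `fake_backend` are hypothetical names. In the described system the backend would be the Lightning Server's OpenAI-like API and recording would come from the client's tracing layer rather than a hand-written wrapper.

```python
from typing import Callable, List, Tuple

LLM = Callable[[str], str]


class TracingLLM:
    """Wraps an LLM callable and records every (prompt, response) pair.

    The agent keeps calling a plain function; only the object it is handed changes.
    """

    def __init__(self, backend: LLM):
        self.backend = backend
        self.spans: List[Tuple[str, str]] = []

    def __call__(self, prompt: str) -> str:
        response = self.backend(prompt)
        self.spans.append((prompt, response))
        return response


def my_agent(llm: LLM, question: str) -> str:
    """Unmodified agent logic: it knows nothing about training."""
    plan = llm(f"Plan how to answer: {question}")
    return llm(f"Follow the plan '{plan}' and answer: {question}")


def fake_backend(prompt: str) -> str:
    # Stand-in for the model server; a real setup would send an HTTP request
    # to an OpenAI-compatible endpoint instead.
    return f"<reply to {len(prompt)} chars>"


traced = TracingLLM(fake_backend)
answer = my_agent(traced, "What is 2 + 3?")
# traced.spans now holds two transitions that could be shipped to the trainer.
```

The same `my_agent` runs unchanged whether it is handed `fake_backend`, a production model, or the traced training endpoint, which is the sense in which agents are trainer-agnostic.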

The client supports data parallelism (intra- and inter-node), automatic instrumentation via OpenTelemetry or lightweight tracing, robust error handling, and Automatic Intermediate Rewarding (AIR) for mining intermediate rewards from system monitoring signals. Environment and reward services can be hosted locally or as shared services, supporting scalable deployment across distributed systems.

Figure 5: Process diagram illustrating the interaction between Lightning Server, Lightning Client, agent execution, and RL training loop.
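The idea behind AIR, turning monitoring signals into intermediate rewards, can be illustrated with a toy rule over trace spans: reward an LLM call when the tool call it triggers succeeds and penalize it when the tool call fails. The `Span` fields, the reward values, and the rule itself are assumptions for illustration only; they are not the framework's actual AIR rules or trace schema.

```python
from dataclasses import dataclass


@dataclass
class Span:
    """A minimal trace span; the field names are assumptions, not a real schema."""
    kind: str    # "llm" or "tool"
    name: str
    status: str  # "ok" or "error"


def air_rewards(spans, success_bonus=0.1, failure_penalty=-0.1):
    """Assign an intermediate reward to each LLM span from the tool span that follows it.

    Hypothetical rule: an LLM call immediately followed by a tool call is rewarded by
    that tool call's status; LLM calls not followed by a tool call get 0.0.
    """
    rewards = []
    for i, span in enumerate(spans):
        if span.kind != "llm":
            continue
        nxt = spans[i + 1] if i + 1 < len(spans) else None
        if nxt is not None and nxt.kind == "tool":
            rewards.append(success_bonus if nxt.status == "ok" else failure_penalty)
        else:
            rewards.append(0.0)
    return rewards


trace = [
    Span("llm", "solver", "ok"),
    Span("tool", "calculator", "error"),
    Span("llm", "solver", "ok"),
    Span("tool", "calculator", "ok"),
    Span("llm", "solver", "ok"),
]
print(air_rewards(trace))  # [-0.1, 0.1, 0.0]
```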

Empirical Results

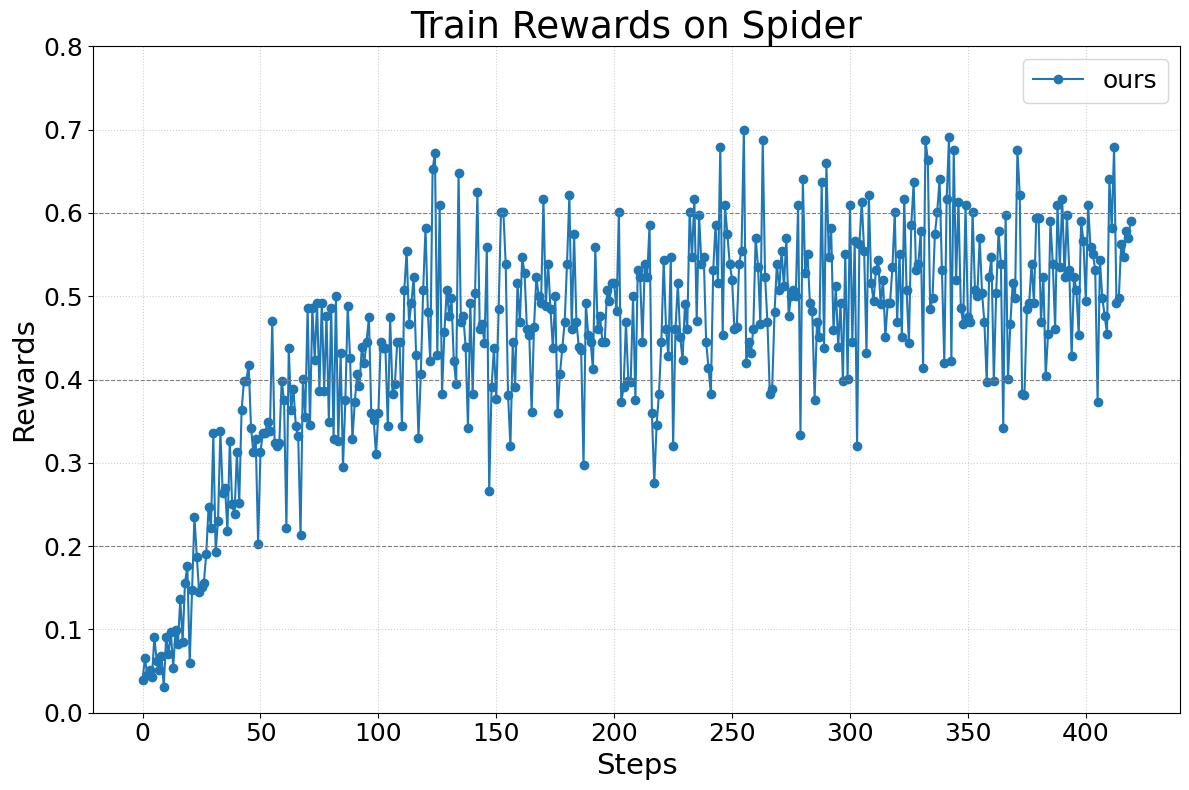

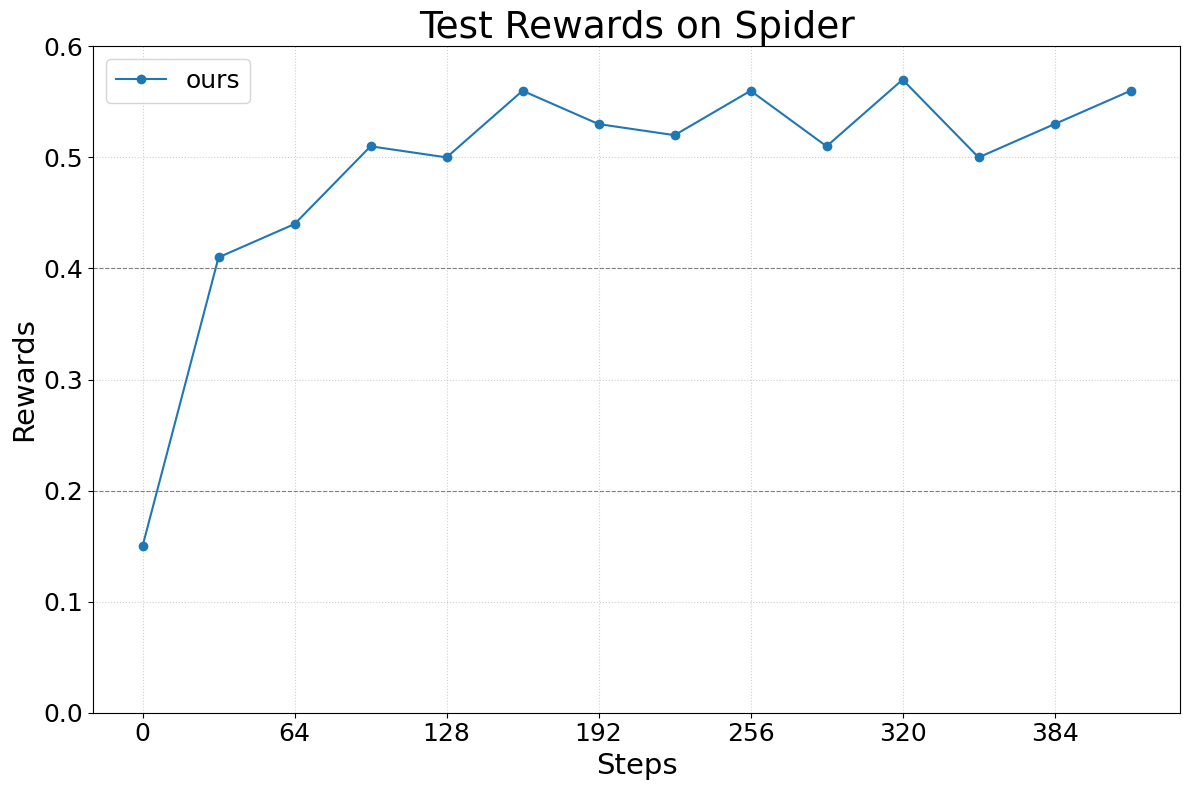

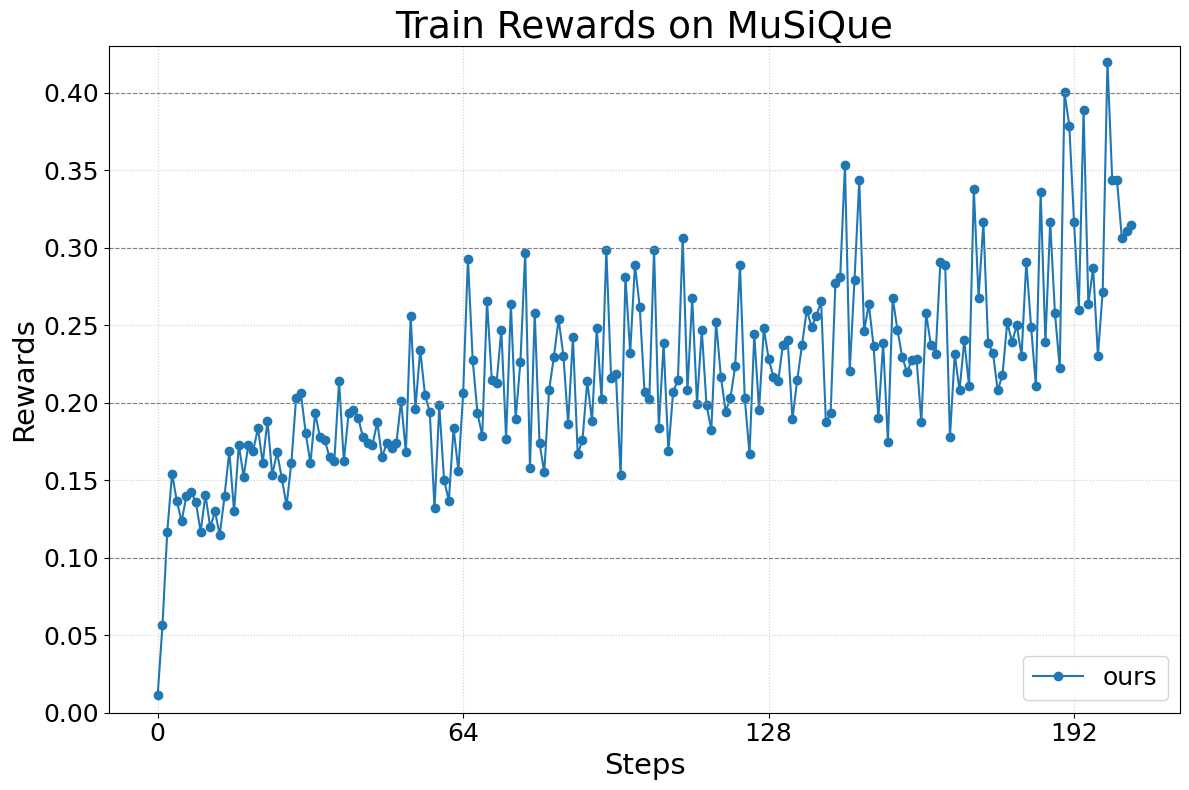

Agent Lightning demonstrates stable, continuous performance improvements across three representative tasks:

- Text-to-SQL (LangChain, Spider): Multi-agent workflow with SQL generation, checking, and rewriting. LightningRL enables simultaneous optimization of multiple agents, yielding consistent reward improvements.

Figure 6: Train reward curve for Text-to-SQL task.

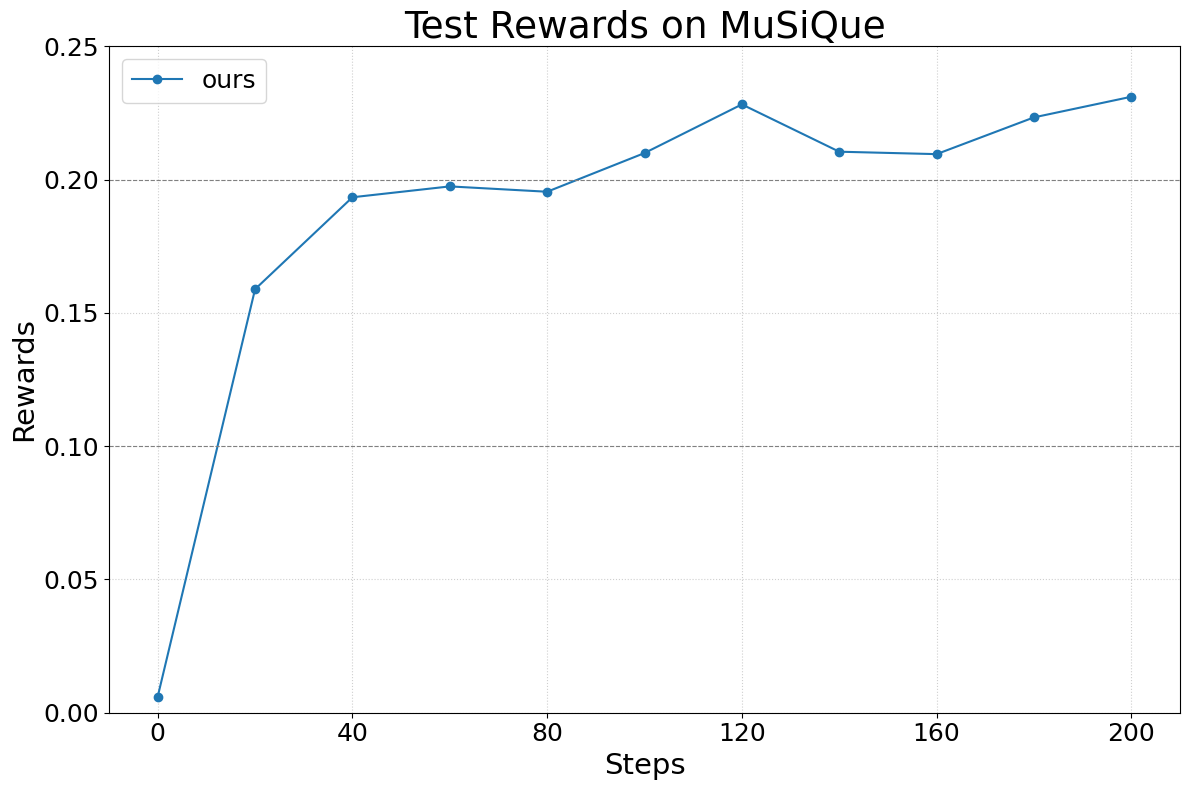

- Retrieval-Augmented Generation (OpenAI Agents SDK, MuSiQue): Single-agent RAG with large-scale Wikipedia retrieval. The framework supports open-ended query generation and multi-hop reasoning, with reward curves indicating robust learning.

Figure 7: Train reward curve for Retrieval-Augmented Generation task.

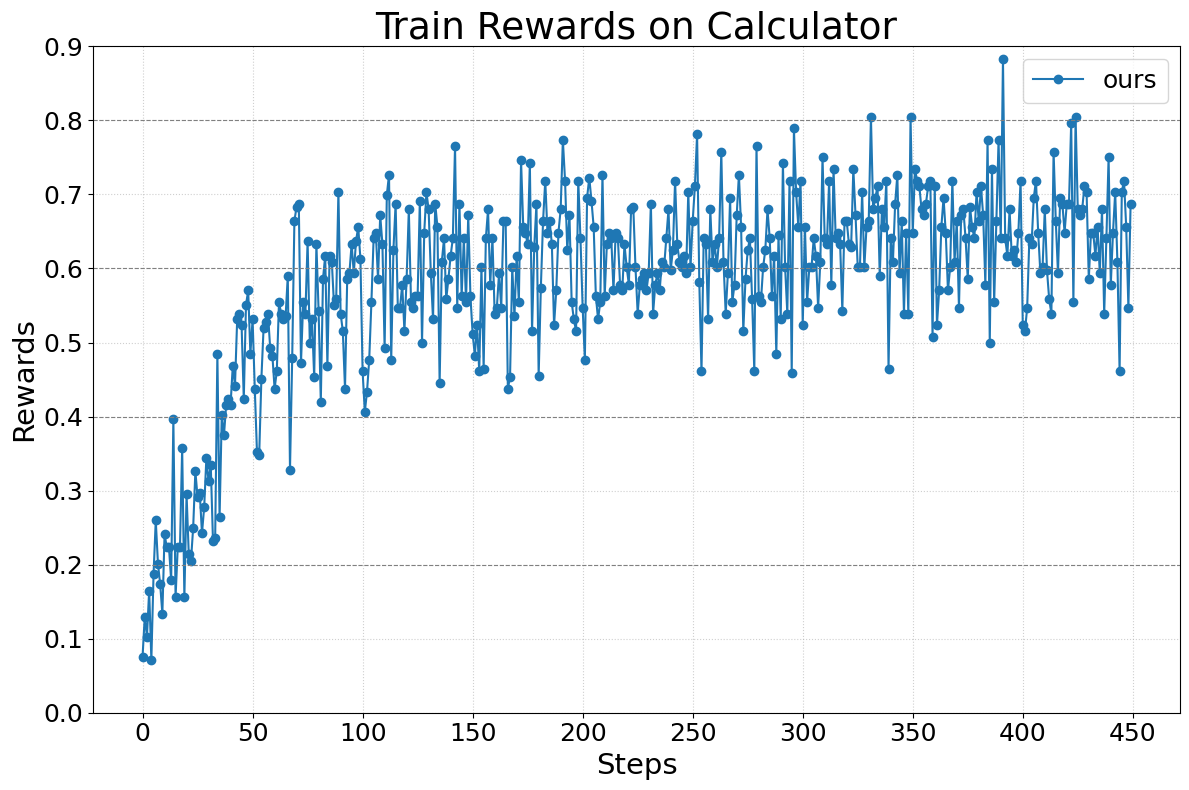

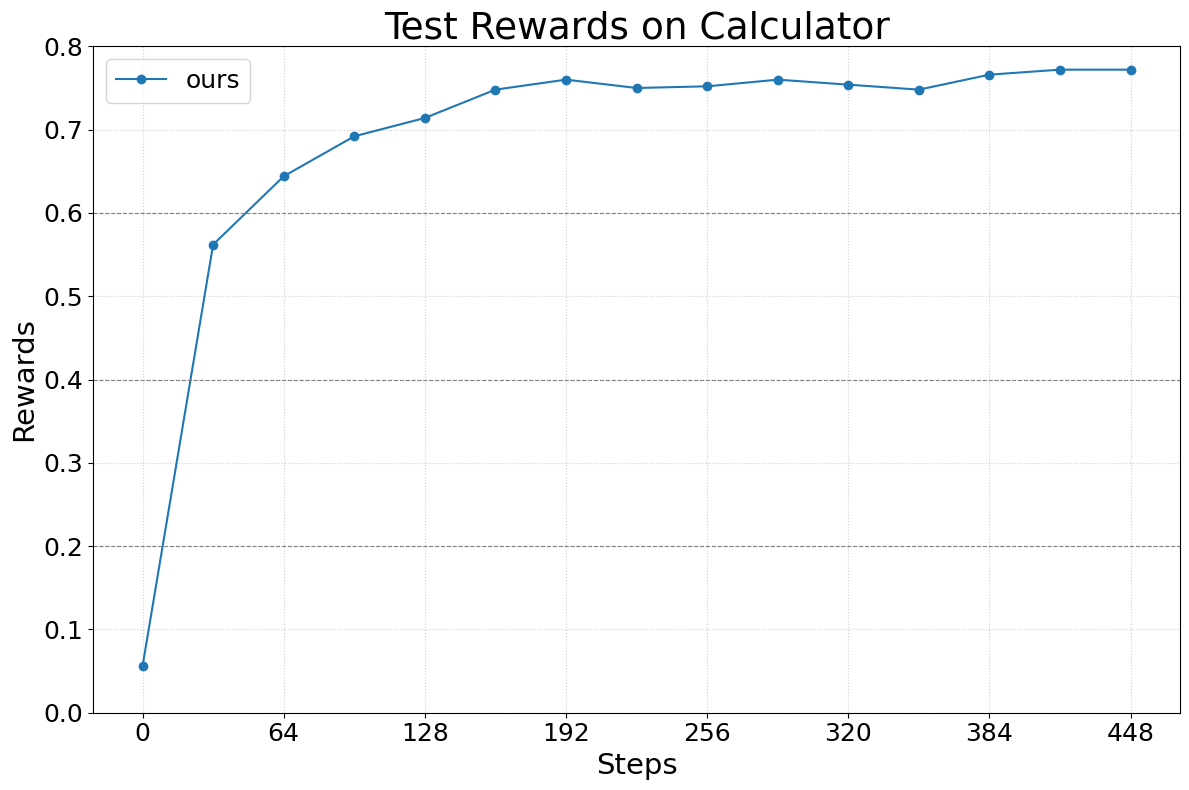

- Math QA with Tool Usage (AutoGen, Calc-X): Tool-augmented reasoning with calculator invocation. LightningRL facilitates precise tool integration and reasoning, with consistent reward gains.

Figure 8: Train reward curve for Calculator task.

Across all three tasks, Agent Lightning shows stable training dynamics and steadily rising rewards, supporting its applicability to diverse agentic scenarios built with different agent frameworks.

Comparative Analysis and Related Work

Agent Lightning advances the state of the art in agentic RL by:

- Decoupling RL training from agent logic: Unlike verl, OpenRLHF, TRL, and ROLL, which require agent re-implementation or tight coupling, Agent Lightning enables zero-modification integration with existing agents.

- Transition-based modeling: Unlike concatenation/masking approaches (e.g., RAGEN, Trinity-RFT, rLLM, Search-R1), it supports arbitrary workflows, alleviates context length issues, and enables hierarchical RL.

- Extensibility: Supports multi-agent, multi-LLM, and tool-augmented scenarios, with future directions including prompt optimization, advanced credit assignment, and scalable serving.

Implications and Future Directions

Agent Lightning's unified framework has significant implications for both practical deployment and theoretical research:

- Scalable agent optimization: Facilitates large-scale RL training for heterogeneous agents, lowering barriers for real-world adoption.

- Algorithmic innovation: Transition-based modeling unlocks hierarchical RL, advanced exploration, and off-policy methods for complex agentic tasks.

- System co-design: Disaggregated architecture enables efficient resource utilization, robust error handling, and flexible environment integration.

- Generalization: The unified data interface and MDP abstraction provide a foundation for future research in agentic RL, multi-agent coordination, and tool-augmented reasoning.

Potential future developments include integration with advanced serving frameworks (e.g., Parrot), long-context acceleration (e.g., MInference), and serverless environment orchestration.

Conclusion

Agent Lightning presents a principled, extensible framework for RL-based training of arbitrary AI agents, achieving full decoupling between agent execution and RL optimization. Through unified data modeling, hierarchical RL algorithms, and scalable system architecture, it enables robust, efficient, and generalizable agent training across diverse workflows and environments. The empirical results substantiate its effectiveness, and its design lays the groundwork for future advances in agentic RL, scalable deployment, and algorithmic innovation.


