Seed Diffusion: A Large-Scale Diffusion Language Model with High-Speed Inference (2508.02193v1)

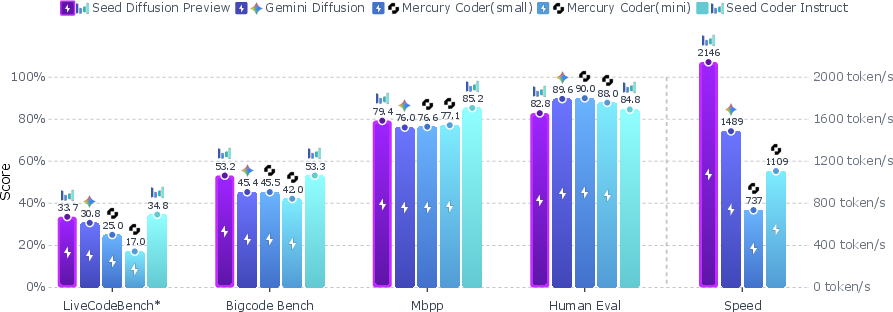

Abstract: We present Seed Diffusion Preview, a large-scale LLM based on discrete-state diffusion, offering remarkably fast inference speed. Thanks to non-sequential, parallel generation, discrete diffusion models provide a notable speedup to mitigate the inherent latency of token-by-token decoding, as demonstrated recently (e.g., Mercury Coder, Gemini Diffusion). Seed Diffusion Preview achieves an inference speed of 2,146 token/s over H20 GPUs while maintaining competitive performance across a sweep of standard code evaluation benchmarks, significantly faster than contemporary Mercury and Gemini Diffusion, establishing new state of the art on the speed-quality Pareto frontier for code models.

Summary

- The paper presents a large-scale discrete diffusion language model that generates code with high-speed inference, achieving 2146 tokens/second.

- It details a two-stage curriculum combining mask-based and edit-based corruption to enhance calibration and improve robustness in code generation.

- It introduces on-policy diffusion learning and constrained-order training to reduce denoising steps, thereby accelerating inference while maintaining quality.

Seed Diffusion: A Large-Scale Diffusion LLM with High-Speed Inference

Introduction and Motivation

Seed Diffusion introduces a large-scale discrete diffusion LLM (DLM) specifically optimized for code generation, with a focus on achieving high inference speed while maintaining competitive generation quality. The work addresses two persistent challenges in discrete diffusion for language: (1) the inefficiency of arbitrary token order modeling, which is misaligned with the sequential nature of natural language, and (2) the high inference latency inherent to iterative denoising procedures in diffusion models. The model, Seed Diffusion Preview, demonstrates that with targeted architectural and algorithmic choices, discrete diffusion can approach or surpass the speed-quality Pareto frontier established by autoregressive (AR) and prior non-autoregressive (NAR) models.

Model Architecture and Training Paradigm

Seed Diffusion Preview employs a standard dense Transformer backbone, eschewing architectural complexity to isolate the effects of diffusion-specific innovations. The model is trained exclusively on code and code-related data, leveraging the Seed Coder data pipeline.

Two-Stage Curriculum (TSC) for Diffusion Training

The training process is divided into two distinct stages:

- Mask-Based Forward Process (80% of training): Tokens in the input sequence are independently replaced with a [MASK] token according to a monotonically increasing noise schedule γt. This process is analytically tractable and aligns with prior work on masked diffusion for discrete data.

- Edit-Based Forward Process (20% of training): To mitigate overconfidence and spurious correlations induced by mask-only corruption, the model is further trained with edit-based corruption. Here, a controlled number of token-level edits (insertions, deletions, substitutions) are applied, with the amount of corruption set by a scheduler αt and bounded in terms of Levenshtein distance from the original sequence. This augmentation compels the model to re-evaluate all tokens, including those not masked, improving calibration and robustness.
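The two corruption processes can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function names `mask_corrupt` and `edit_corrupt`, the uniform choice among edit types, and the rule that the edit budget is `floor(len(tokens) * alpha_t)` are all assumptions made for illustration.

```python
import random

MASK = "[MASK]"

def mask_corrupt(tokens, gamma_t, rng):
    """Mask-based corruption: each token is independently replaced by
    [MASK] with probability gamma_t (the noise level at time t)."""
    return [MASK if rng.random() < gamma_t else tok for tok in tokens]

def edit_corrupt(tokens, alpha_t, vocab, rng):
    """Edit-based corruption: apply a bounded number of random edits.

    Assumption: the edit budget is floor(len(tokens) * alpha_t), so the
    Levenshtein distance to the clean sequence is at most that budget.
    """
    out = list(tokens)
    num_edits = int(len(tokens) * alpha_t)
    for _ in range(num_edits):
        op = rng.choice(["insert", "delete", "substitute"])
        if op == "insert" or not out:
            # Insert a random vocabulary token at a random position.
            out.insert(rng.randint(0, len(out)), rng.choice(vocab))
        elif op == "delete":
            del out[rng.randrange(len(out))]
        else:
            out[rng.randrange(len(out))] = rng.choice(vocab)
    return out

if __name__ == "__main__":
    rng = random.Random(0)
    clean = "def add ( a , b ) : return a + b".split()
    print(mask_corrupt(clean, 0.5, rng))
    print(edit_corrupt(clean, 0.25, ["x", "y", "-"], rng))
```

Unlike the masked sequence, the edited sequence gives no explicit signal about which positions were corrupted, which is why the model has to reconsider every token rather than only the [MASK] positions.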

The overall objective is a hybrid ELBO, combining the analytical tractability of the mask-based process with the denoising benefits of edit-based corruption. Notably, the model does not employ "Carry Over Unmasking," in contrast to some prior work, to avoid the detrimental inductive bias that unmasked tokens are always correct.

Constrained-Order Diffusion Training

Recognizing that mask-based diffusion is equivalent to any-order AR modeling, the authors introduce a constrained-order fine-tuning phase. High-quality generation trajectories are distilled by sampling from the pre-trained model and selecting those maximizing the ELBO. The model is then fine-tuned on this distilled set, reducing the learning burden associated with arbitrary orderings and aligning the generation process more closely with the structure of code.
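The trajectory-selection step can be sketched as a simple filter. This is a toy under stated assumptions: a trajectory is represented only by the order in which positions are unmasked, candidates are drawn uniformly at random rather than from a pre-trained model, and `toy_score` is a hypothetical stand-in for the ELBO (it merely counts out-of-order pairs and says nothing about which orders the real model prefers).

```python
import random
from typing import Callable, List

Trajectory = List[int]  # order in which sequence positions are unmasked

def sample_trajectory(length: int, rng: random.Random) -> Trajectory:
    """Stand-in for sampling a generation order from the pre-trained model."""
    order = list(range(length))
    rng.shuffle(order)
    return order

def distill_trajectories(
    length: int,
    num_candidates: int,
    keep: int,
    score_fn: Callable[[Trajectory], float],
    rng: random.Random,
) -> List[Trajectory]:
    """Sample candidate trajectories and keep the highest-scoring ones."""
    candidates = [sample_trajectory(length, rng) for _ in range(num_candidates)]
    return sorted(candidates, key=score_fn, reverse=True)[:keep]

def toy_score(order: Trajectory) -> float:
    """Hypothetical ELBO stand-in: negative number of inverted pairs."""
    n = len(order)
    return -float(sum(order[i] > order[j] for i in range(n) for j in range(i + 1, n)))

if __name__ == "__main__":
    rng = random.Random(0)
    for traj in distill_trajectories(6, 50, 3, toy_score, rng):
        print(traj, toy_score(traj))
```

The kept trajectories would then serve as the fine-tuning set, so the model only has to learn a small family of orderings instead of all permutations.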

On-Policy Diffusion Learning

To further accelerate inference, an on-policy learning paradigm is introduced. The model is trained to minimize the expected trajectory length (i.e., the number of denoising steps) under its own sampling policy, subject to a model-based verifier that ensures sample validity. This is operationalized via a surrogate loss proportional to the expected inverse Levenshtein distance between trajectory states: the farther apart the states are, the more tokens each step commits and the fewer steps the trajectory needs. The result is a model that learns to generate more tokens per step without sacrificing quality.
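To make the surrogate concrete, the sketch below computes a Levenshtein-based score for a sampled trajectory. It is an illustration only: the name `length_surrogate`, the choice to average over all pairs of states, and taking the inverse of the mean (rather than the mean of inverses) are assumptions, and the model-based verifier is omitted.

```python
from itertools import combinations

def levenshtein(a, b):
    """Edit distance between two sequences (standard dynamic programme)."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, start=1):
        curr = [i]
        for j, y in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,              # delete x
                            curr[j - 1] + 1,          # insert y
                            prev[j - 1] + (x != y)))  # substitute or match
        prev = curr
    return prev[-1]

def length_surrogate(trajectory):
    """Inverse of the mean pairwise Levenshtein distance between states.

    Smaller values correspond to trajectories whose states are far apart,
    i.e. trajectories that commit many tokens per denoising step.
    """
    pairs = list(combinations(trajectory, 2))
    mean_dist = sum(levenshtein(a, b) for a, b in pairs) / len(pairs)
    return 1.0 / mean_dist

if __name__ == "__main__":
    M = "[MASK]"
    slow = [[M, M, M, M], ["a", M, M, M], ["a", "b", M, M],
            ["a", "b", "c", M], ["a", "b", "c", "d"]]
    fast = [[M, M, M, M], ["a", "b", "c", "d"]]
    print(length_surrogate(slow), length_surrogate(fast))
```

In this toy, the one-step trajectory scores lower than the four-step one, matching the intuition that minimizing the surrogate pushes the model toward fewer, larger steps.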

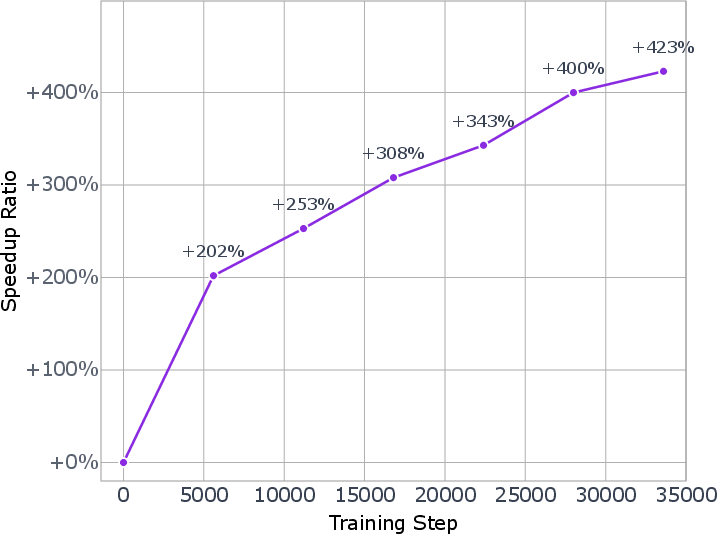

Figure 1: The speedup ratio increases during on-policy training as the model learns to generate larger blocks of tokens in parallel.

Inference and System Optimization

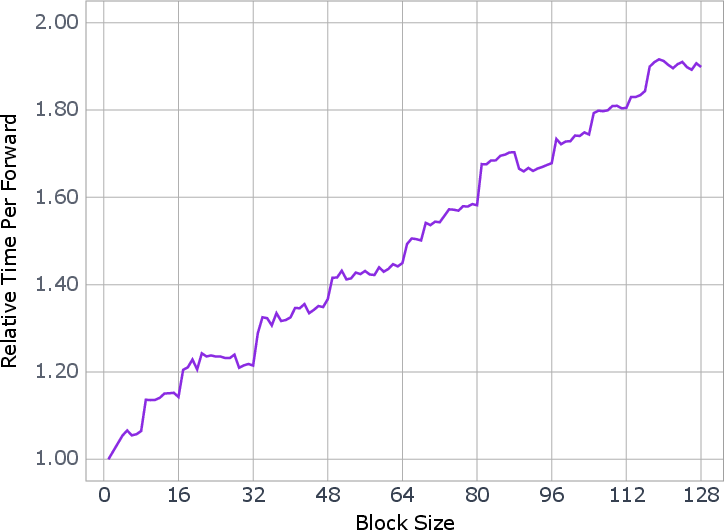

Inference is performed using a block-level parallel diffusion sampling scheme, where tokens are generated in blocks with causal dependencies between them. This semi-autoregressive approach balances the trade-off between parallelism and quality, as larger block sizes amortize the computational cost of each denoising step but may degrade output fidelity if not properly tuned.

The system infrastructure is optimized for block-wise inference, with specialized support for efficient diffusion sampling. The optimal block size is determined empirically, balancing single-pass latency and token throughput.
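The block-level scheme can be sketched as a loop over blocks with an inner denoising loop. This is a toy rather than the paper's sampler: `toy_denoiser` is a hypothetical oracle that returns the correct tokens, `tokens_per_step` is an assumed knob for how many positions are committed per step, and the leftmost masked positions are committed where a real sampler would more likely rank positions by model confidence.

```python
MASK = "[MASK]"

def toy_denoiser(prefix, block, target_block):
    """Hypothetical model stand-in: predicts a token for every block position.
    A real model would condition on `prefix` (earlier blocks) and `block`."""
    return list(target_block)

def generate_blockwise(target, block_size, tokens_per_step):
    """Generate `target` block by block; return (tokens, denoising_steps)."""
    output, steps = [], 0
    for start in range(0, len(target), block_size):
        target_block = target[start:start + block_size]
        block = [MASK] * len(target_block)
        while MASK in block:
            preds = toy_denoiser(output, block, target_block)
            masked = [i for i, tok in enumerate(block) if tok == MASK]
            # Commit several positions in parallel within this block.
            for i in masked[:tokens_per_step]:
                block[i] = preds[i]
            steps += 1
        # Finished blocks become fixed context for later blocks (causal).
        output.extend(block)
    return output, steps

if __name__ == "__main__":
    target = "def add ( a , b ) :".split()
    for k in (1, 2, 4):
        tokens, steps = generate_blockwise(target, block_size=4, tokens_per_step=k)
        print(k, steps, tokens == target)
```

Each pass through the inner loop corresponds to one model forward pass, so committing more tokens per step directly reduces the number of passes needed for a sequence of a given length.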

Empirical Results

Seed Diffusion Preview is evaluated on a comprehensive suite of code generation and code editing benchmarks, including HumanEval, MBPP, BigCodeBench, LiveCodeBench, MBXP, NaturalCodeBench, Aider, and CanItEdit. The model is compared against both AR and state-of-the-art diffusion-based baselines (e.g., Mercury Coder, Gemini Diffusion).

Key empirical findings:

- Inference Speed: Seed Diffusion achieves an inference speed of 2146 tokens/second on H20 GPUs, substantially exceeding the throughput of comparable AR models and prior DLMs.

- Quality: On code generation and editing tasks, Seed Diffusion matches or exceeds the performance of similarly sized AR models and prior DLMs, particularly excelling on code editing benchmarks (Aider, CanItEdit).

- Editing Robustness: The edit-based corruption and constrained-order training yield notable improvements in code editing tasks, where the model must modify existing code in response to instructions.

Figure 2: Seed Diffusion's inference speed across eight open code benchmarks, highlighting its position on the speed-quality Pareto frontier.

Theoretical and Practical Implications

The work demonstrates that discrete diffusion, when equipped with appropriate inductive biases and training strategies, can be competitive with AR models in both quality and speed. The explicit avoidance of arbitrary token orderings and the use of on-policy learning are critical for practical deployment. The results challenge the prevailing assumption that diffusion models are inherently slower than AR models for language, especially in the code domain.

Theoretically, the constrained-order approach provides a bridge between the flexibility of diffusion and the inductive biases of AR modeling, suggesting a continuum of modeling strategies. The on-policy paradigm aligns model training with inference-time objectives, a principle that may generalize to other NAR architectures.

Future Directions

Several avenues for further research are suggested:

- Scaling Laws: Systematic exploration of scaling properties for discrete DLMs, particularly in domains beyond code, to assess generalization and robustness.

- Complex Reasoning: Extension of diffusion-based approaches to tasks requiring multi-step reasoning, long-context understanding, or multi-modal integration.

- Hybrid Architectures: Investigation of architectures that interpolate between AR and diffusion, leveraging the strengths of both paradigms.

- System-Level Optimization: Continued co-design of model algorithms and inference infrastructure to maximize practical throughput and minimize latency.

Conclusion

Seed Diffusion Preview establishes that large-scale discrete diffusion LLMs, when equipped with targeted curriculum, constrained-order training, and on-policy optimization, can achieve high inference speed and competitive quality in code generation and editing. The work provides a blueprint for future DLM research, highlighting the importance of inductive bias, trajectory selection, and system-level optimization. The implications extend to both the theoretical understanding of sequence modeling and the practical deployment of high-throughput LLMs.



Authors (22)
